Hardware-related API#

Cameras#

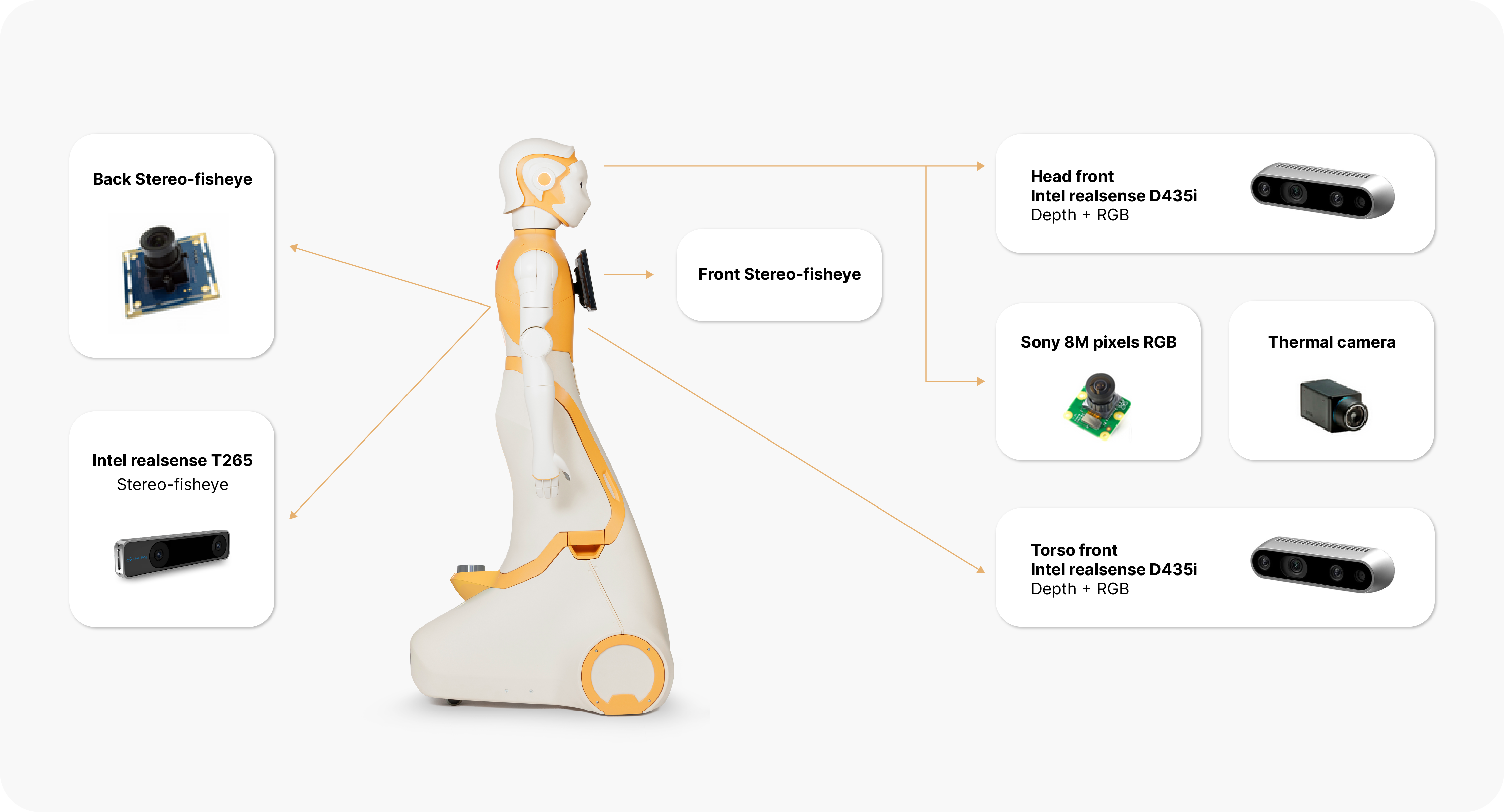

The following Figure illustrates the cameras mounted on the robot:

Torso front camera#

Stereo RGB-D camera: This camera is mounted on the front of the torso, below the touchscreen. It provides RGB images along with a depth image obtained using an IR projector and an IR camera. The depth image is used to compute a point cloud of the scene.
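As an illustration of how a depth image relates to a point cloud, the following minimal sketch back-projects depth pixels into 3D points using the standard pinhole camera model. It is not the camera driver's implementation. The function name `depth_to_points`, the tiny 2x2 depth image, and the intrinsic values are hypothetical; the sketch also assumes the depth values have already been converted to metres. On a real system the intrinsics (`fx`, `fy`, `cx`, `cy`) would be read from the `K` matrix of the corresponding `camera_info` message.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_to_points(
    depth_m: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Back-project a depth image (metres, indexed [row][column]) into
    3D points expressed in the camera frame (pinhole model)."""
    points: List[Point] = []
    for v, row in enumerate(depth_m):        # v: pixel row
        for u, z in enumerate(row):          # u: pixel column
            if z <= 0.0:                     # assumption: 0 marks "no valid depth"
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical depth image and intrinsics, for illustration only.
depth = [
    [1.0, 0.0],
    [2.0, 2.0],
]
cloud = depth_to_points(depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
for point in cloud:
    print(point)
```

In practice there is usually no need to do this by hand, since the topic list for this camera already includes a point cloud topic (`/torso_front_camera/depth/color/points`).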

Torso front camera-related topics:

/torso_front_camera/aligned_depth_to_color/camera_info

/torso_front_camera/aligned_depth_to_color/image_raw/*

/torso_front_camera/color/camera_info

/torso_front_camera/color/image_raw/*

/torso_front_camera/depth/camera_info

/torso_front_camera/depth/color/points

/torso_front_camera/depth/image_rect_raw/*

/torso_front_camera/infra1/camera_info

/torso_front_camera/infra1/image_rect_raw/*

/torso_front_camera/infra2/image_rect_raw/compressed

Torso back camera#

Stereo-fisheye camera: This camera is mounted on the back of the torso, just below the emergency button, and provides stereo, fisheye, black-and-white images.

Head camera#

ARI’s head contains one of the following two cameras:

RGB camera: provides RGB images

RGB-D camera: provides RGB-D images

Head camera-related topics:

Optional cameras#

The touchscreen can include up to three more cameras:

thermal camera

RGB camera

RGB-D camera

To learn how to access the cameras, refer to Accessing ARI sensors.

LEDs#

See LEDs API.

Animated eyes#

ARI has LCD eyes that can display a collection of eye expressions. These can be used to support engaging interactions, along with head and arm movements.

See How-to: Control ARI’s expressions for the list of available expressions, the API, and code samples.

Speakers and microphones#

ARI has an array of four microphones that can be used to record audio and process it for tasks such as speech recognition. The microphone array is located in the circular gap of the torso. There are two hi-fi full-range speakers just below it.

The microphone array is a ReSpeaker Mic Array V2.0 (https://www.seeedstudio.com/ReSpeaker-Mic-Array-v2-0.html). See ARI microphone array and audio recording for details.

To learn more about how to process speech in ARI, refer to Dialogue management.

Joints#

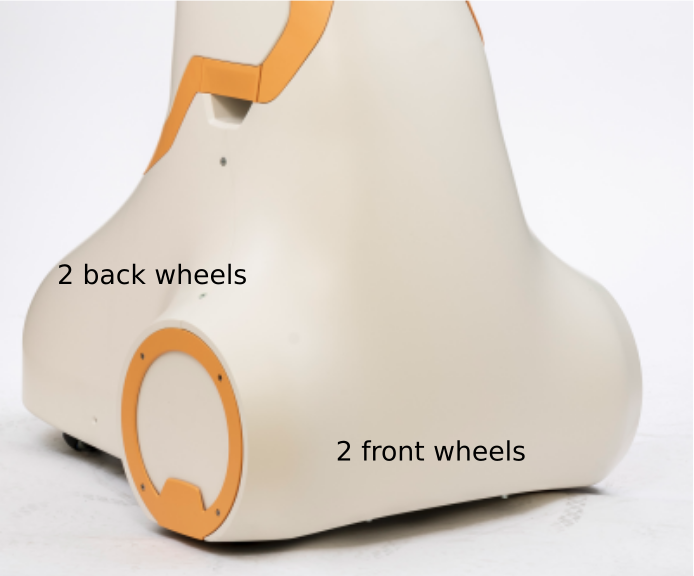

Base#

The base joints of ARI are the front and back wheels of the robot. Take care when wheeling ARI, especially with the smaller back wheels.

The wheels can be controlled using a ROS topic, specifying the desired linear and angular velocity of the robot. The linear velocity is specified in meters per second and the angular velocity in radians per second; both are translated to wheel angular velocities internally.
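To give an idea of what the translation from robot velocities to wheel angular velocities involves, here is a minimal sketch that assumes a standard differential-drive model. It is not ARI's actual controller code. The function name `wheel_angular_velocities` and the wheel radius and separation values are hypothetical placeholders, not ARI's real dimensions.

```python
from typing import Tuple

# Hypothetical geometry, for illustration only (not ARI's real dimensions).
WHEEL_RADIUS_M = 0.1       # radius of each drive wheel, in meters
WHEEL_SEPARATION_M = 0.4   # distance between the two drive wheels, in meters


def wheel_angular_velocities(
    linear_mps: float,
    angular_radps: float,
    wheel_radius_m: float = WHEEL_RADIUS_M,
    wheel_separation_m: float = WHEEL_SEPARATION_M,
) -> Tuple[float, float]:
    """Return (left, right) wheel angular velocities in rad/s for a
    differential-drive base.

    linear_mps: forward velocity of the robot, in m/s.
    angular_radps: rotation rate around the vertical axis, in rad/s
                   (positive = counter-clockwise).
    """
    # Linear speed of each wheel's contact point along the ground.
    v_left = linear_mps - angular_radps * wheel_separation_m / 2.0
    v_right = linear_mps + angular_radps * wheel_separation_m / 2.0
    # Convert ground speed to wheel rotation rate.
    return v_left / wheel_radius_m, v_right / wheel_radius_m


# Driving straight: both wheels turn at the same rate.
straight = wheel_angular_velocities(0.5, 0.0)
# Rotating in place: the wheels turn in opposite directions.
spin = wheel_angular_velocities(0.0, 1.0)
print(straight, spin)
```

With this model, a pure linear command spins both wheels equally, while a pure angular command spins them at equal and opposite rates.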

Drive wheels topics:

For more information on how to move ARI around, refer to Navigation.

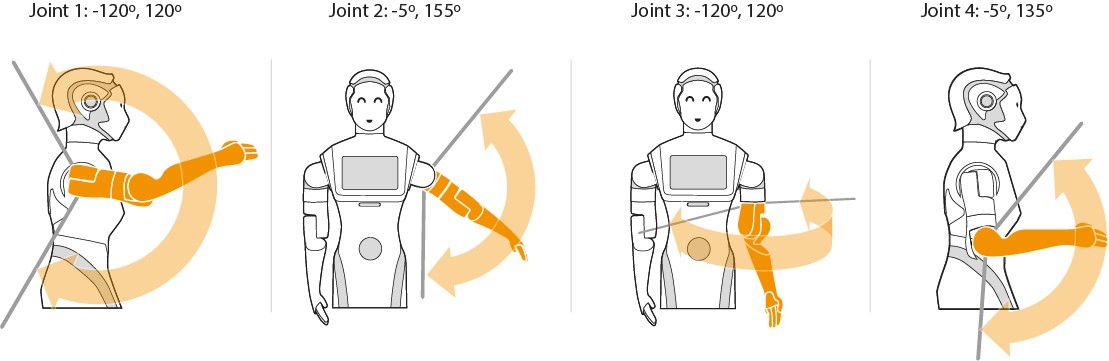

Arms#

ARI’s joints and arm movements are illustrated below:

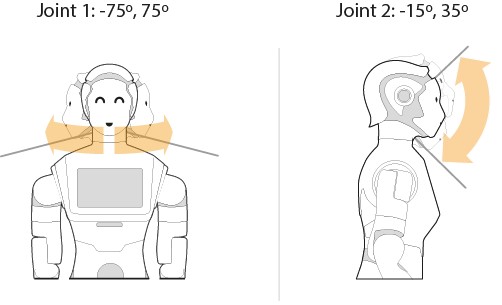

Head joints#

The joint movements for ARI’s head are shown below:

Refer to the following entries to learn how to move ARI’s upper body:

Upper body motion and play_motion

play_motion: How to play a pre-recorded motion

whole_body_motion_control

move_it

play_motion

LIDAR#

LIDAR topics: