Navigation-related API

This section details ARI’s autonomous navigation framework. The navigation software uses Visual SLAM to perform mapping and localization with the RGB-D camera in the robot's torso. The system detects keypoints (features) in the camera images and recognizes previously visited locations, which lets it build a map and localize the robot within it. The resulting map is represented as an Occupancy Grid Map (OGM), which can later be used to localize the robot and navigate autonomously in the environment using move_base (http://wiki.ros.org/move_base).
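In ROS, an occupancy grid like this is normally exchanged as a `nav_msgs/OccupancyGrid` message: cells stored row by row, a resolution in metres per cell, an origin pose, and cell values from 0 (free) to 100 (occupied), with -1 for unknown. Assuming ARI's map follows that standard layout and has an unrotated origin, the sketch below shows how a metric `/map` coordinate maps to a grid cell and how that cell can be checked for free space. `GridInfo`, `world_to_cell`, `is_free` and the threshold of 50 are hypothetical, and plain Python values stand in for the ROS message types.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class GridInfo:
    """Hypothetical stand-in for nav_msgs/MapMetaData (origin rotation assumed to be zero)."""
    resolution: float  # metres per cell
    width: int         # number of columns
    height: int        # number of rows
    origin_x: float    # /map x coordinate of the corner of cell (0, 0)
    origin_y: float    # /map y coordinate of the corner of cell (0, 0)


def world_to_cell(info: GridInfo, x: float, y: float) -> Optional[Tuple[int, int]]:
    """Return (col, row) of the cell containing the /map point (x, y), or None if off the map."""
    col = math.floor((x - info.origin_x) / info.resolution)
    row = math.floor((y - info.origin_y) / info.resolution)
    if 0 <= col < info.width and 0 <= row < info.height:
        return col, row
    return None


def is_free(info: GridInfo, data: List[int], x: float, y: float, threshold: int = 50) -> bool:
    """True if (x, y) falls in a known cell whose occupancy value is below `threshold`."""
    cell = world_to_cell(info, x, y)
    if cell is None:
        return False
    col, row = cell
    value = data[row * info.width + col]  # cells are stored row by row
    return 0 <= value < threshold  # -1 marks unknown space
```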

Navigation architecture

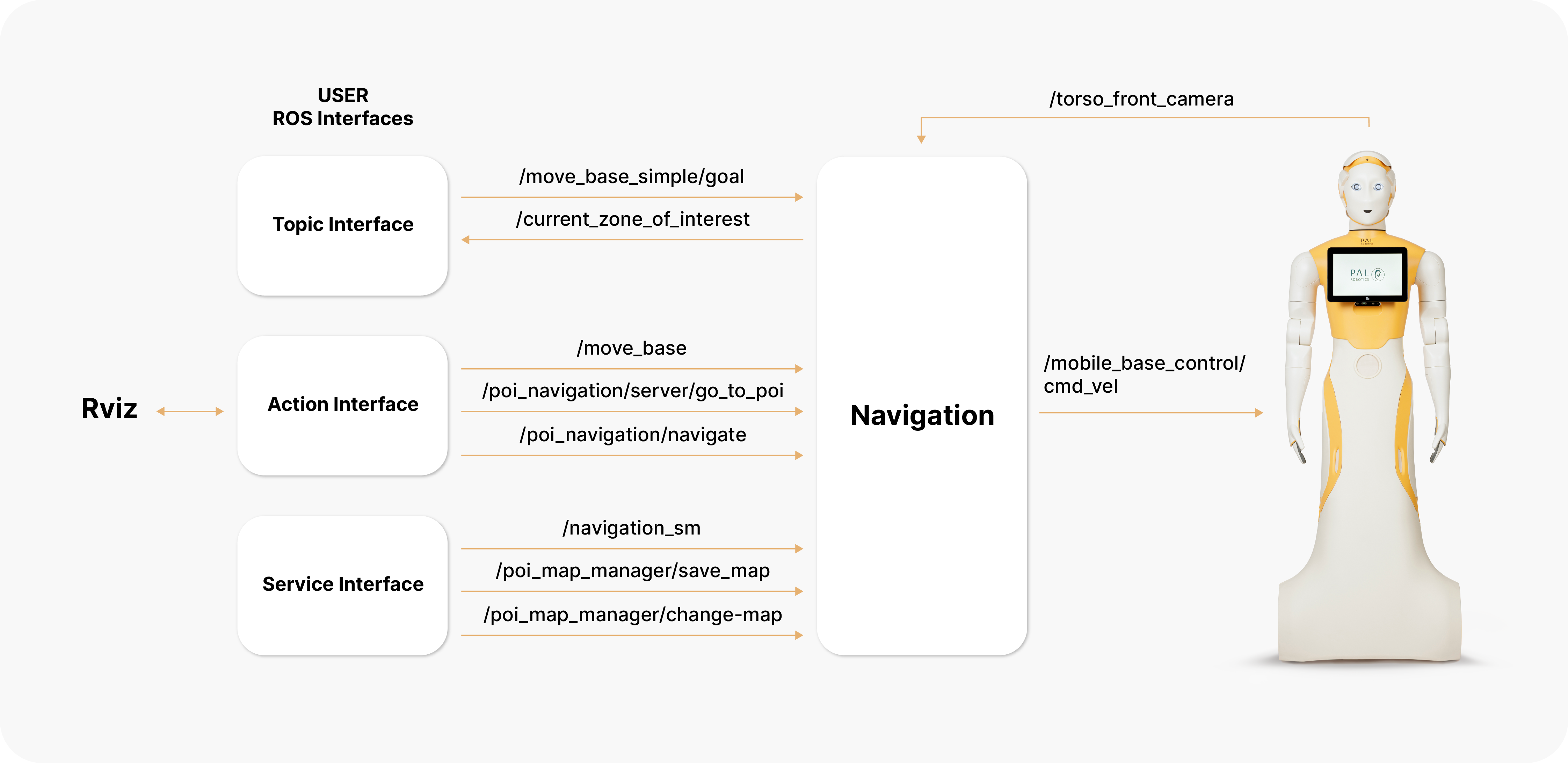

The navigation software provided with ARI can be seen as a black box with the inputs and outputs shown in the figure below.

As can be seen in the figure, the user can communicate with the navigation software through ROS (Robot Operating System: http://wiki.ros.org/) actions and services. Note that Rviz (http://wiki.ros.org/rviz) can also use these interfaces to help the user perform navigation tasks.

The ROS nodes that make up the navigation architecture communicate through topics, actions and services, as well as through parameters stored on the ROS parameter server.

Topic interfaces

topic_move_base_simple_goal

Topic interface to send the robot to a pose specified in metric coordinates of the /map frame. Use this interface when the navigation status does not need to be monitored.
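A goal for this topic is a `geometry_msgs/PoseStamped`, whose orientation is a quaternion rather than an angle. The sketch below, assuming the topic is `/move_base_simple/goal` and goals are expressed in the `map` frame, converts a heading (yaw) into a quaternion and publishes a single goal with `rospy`. The helper names are hypothetical, and only `publish_simple_goal` needs a ROS 1 environment.

```python
import math
from typing import Tuple


def yaw_to_quaternion(yaw: float) -> Tuple[float, float, float, float]:
    """Quaternion (x, y, z, w) for a rotation of `yaw` radians about the vertical (z) axis."""
    return (0.0, 0.0, math.sin(yaw / 2.0), math.cos(yaw / 2.0))


def publish_simple_goal(x: float, y: float, yaw: float) -> None:
    """Publish one goal pose in the /map frame (needs ROS 1 and an initialised rospy node)."""
    import rospy  # imported lazily so yaw_to_quaternion() works without ROS installed
    from geometry_msgs.msg import PoseStamped

    pub = rospy.Publisher("/move_base_simple/goal", PoseStamped, queue_size=1)
    goal = PoseStamped()
    goal.header.frame_id = "map"
    goal.header.stamp = rospy.Time.now()
    goal.pose.position.x = x
    goal.pose.position.y = y
    (goal.pose.orientation.x, goal.pose.orientation.y,
     goal.pose.orientation.z, goal.pose.orientation.w) = yaw_to_quaternion(yaw)

    rate = rospy.Rate(10)
    while pub.get_num_connections() == 0 and not rospy.is_shutdown():
        rate.sleep()  # wait until a subscriber (move_base) is connected
    pub.publish(goal)
    rospy.sleep(0.5)  # let the message go out before the caller's node exits
```

For example, after `rospy.init_node("simple_goal_sender")`, calling `publish_simple_goal(1.0, 0.5, math.pi / 2)` would send the robot to x = 1.0 m, y = 0.5 m, facing the +y axis of the map. Nothing reports whether the goal is reached, which is why the action interface is preferable when monitoring matters.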

Topic that publishes the name of the zone of interest in which the robot is currently located, if any.

Action interfaces

Action to send the robot to a pose specified in metric coordinates of the /map frame. This interface is recommended when the user wants to monitor the status of the action.
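Monitoring works through the standard `actionlib` client: it sends a `move_base_msgs/MoveBaseGoal`, receives feedback with the robot's current pose, and ends with an `actionlib_msgs/GoalStatus` code. The sketch below assumes the action server is named `move_base` and accepts goals in the `map` frame; `go_to_pose`, `describe_state` and `is_terminal` are hypothetical helpers, and only `go_to_pose` requires ROS 1.

```python
import math

# actionlib_msgs/GoalStatus codes, as returned by SimpleActionClient.get_state()
STATUS_NAMES = {
    0: "PENDING", 1: "ACTIVE", 2: "PREEMPTED", 3: "SUCCEEDED", 4: "ABORTED",
    5: "REJECTED", 6: "PREEMPTING", 7: "RECALLING", 8: "RECALLED", 9: "LOST",
}
# States documented as terminal in GoalStatus: the goal will not change any more
TERMINAL_STATES = {2, 3, 4, 5, 8}


def describe_state(code: int) -> str:
    return STATUS_NAMES.get(code, "UNKNOWN")


def is_terminal(code: int) -> bool:
    return code in TERMINAL_STATES


def go_to_pose(x: float, y: float, yaw: float, timeout_s: float = 120.0) -> str:
    """Send a move_base goal in the /map frame, log feedback and return the final state name.

    Needs ROS 1 and an initialised rospy node; the action name "move_base" is an assumption.
    """
    import actionlib
    import rospy
    from move_base_msgs.msg import MoveBaseAction, MoveBaseGoal

    client = actionlib.SimpleActionClient("move_base", MoveBaseAction)
    client.wait_for_server()

    goal = MoveBaseGoal()
    goal.target_pose.header.frame_id = "map"
    goal.target_pose.header.stamp = rospy.Time.now()
    goal.target_pose.pose.position.x = x
    goal.target_pose.pose.position.y = y
    goal.target_pose.pose.orientation.z = math.sin(yaw / 2.0)
    goal.target_pose.pose.orientation.w = math.cos(yaw / 2.0)

    def on_feedback(feedback):
        # MoveBaseFeedback.base_position holds the robot's current pose
        p = feedback.base_position.pose.position
        rospy.loginfo("robot at x=%.2f y=%.2f", p.x, p.y)

    client.send_goal(goal, feedback_cb=on_feedback)
    if not client.wait_for_result(rospy.Duration(timeout_s)):
        client.cancel_goal()  # give up after the timeout
        client.wait_for_result(rospy.Duration(5.0))
    return describe_state(client.get_state())
```

A caller can treat `"SUCCEEDED"` as arrival and any other terminal state (for example `"ABORTED"`) as a failure to handle.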

action_poi_navigation_server-go_to_poi

Action to send the robot to an existing Point Of Interest (POI) by providing its identifier. POIs can be defined using the Rviz Map Editor; see section Laser-based SLAM and path planning on the robot.

action_pal_waypoint_navigate

Action to make the robot visit all the POIs of a given group or subset. POIs and POI groups can be defined using the Rviz Map Editor; see section Laser-based SLAM and path planning on the robot.

Service interfaces

Service to set the navigation mode to mapping or to localization mode.

To switch to mapping mode:

rosservice call /pal_navigation_sm "input: 'MAP'"

To switch the robot to localization mode:

rosservice call /pal_navigation_sm "input: 'LOC'"

In localization mode, the robot can plan paths to any valid point on the map.
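The same calls can be scripted. The sketch below wraps the documented `rosservice` command in Python through `subprocess`, so it makes no assumption about the service's message type. It assumes `rosservice` is on the `PATH` (ROS environment sourced); `NAV_MODES`, `nav_mode_command` and `set_nav_mode` are hypothetical names.

```python
import subprocess
from typing import List

# Friendly names mapped to the inputs accepted by /pal_navigation_sm
NAV_MODES = {"mapping": "MAP", "localization": "LOC"}


def nav_mode_command(mode: str) -> List[str]:
    """Build the documented rosservice call that switches the navigation mode."""
    try:
        value = NAV_MODES[mode]
    except KeyError:
        raise ValueError(f"unknown mode {mode!r}; expected one of {sorted(NAV_MODES)}") from None
    return ["rosservice", "call", "/pal_navigation_sm", f"input: '{value}'"]


def set_nav_mode(mode: str) -> str:
    """Run the call (needs a sourced ROS environment) and return the service's printed reply."""
    result = subprocess.run(nav_mode_command(mode), capture_output=True, text=True, check=True)
    return result.stdout
```

For instance, `set_nav_mode("localization")` runs `rosservice call /pal_navigation_sm "input: 'LOC'"`. Passing an argument list rather than a shell string avoids quoting problems with the nested YAML quotes.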

Service to save the map with a given name. Example:

rosservice call /pal_map_manager/save_map "directory: 'my_office_map'"

The directory argument is the name of the map; if it is empty, a timestamp is used instead. Maps are stored in $HOME/.pal/ari_maps/configurations.
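Scripts that create maps may want to know where the result ends up. The sketch below builds the documented `save_map` call and, assuming each map is saved as a subdirectory named after it under `$HOME/.pal/ari_maps/configurations`, predicts its location. The function names and the name validation are hypothetical.

```python
import os
from typing import List, Optional

MAP_STORE = os.path.join(".pal", "ari_maps", "configurations")  # relative to $HOME


def save_map_command(name: str = "") -> List[str]:
    """Build the documented save_map call; an empty name lets the service use a timestamp."""
    if "'" in name or "/" in name:
        raise ValueError("map names containing quotes or slashes are rejected by this sketch")
    return ["rosservice", "call", "/pal_map_manager/save_map", f"directory: '{name}'"]


def expected_map_path(name: str, home: Optional[str] = None) -> str:
    """Where a map saved as `name` is assumed to end up on the robot."""
    if not name:
        raise ValueError("with an empty name the timestamp-based path cannot be predicted")
    home = home if home is not None else os.path.expanduser("~")
    return os.path.join(home, MAP_STORE, name)
```

The returned list can be run on the robot with `subprocess.run(save_map_command("my_office_map"), check=True)`.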

Service to choose the active map. Example:

rosservice call /pal_map_manager/change_map "input: 'my_office_map'"
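Before switching maps, it can help to check that the requested map exists. Assuming that each saved map is a directory under `$HOME/.pal/ari_maps/configurations` on the robot, the sketch below lists the available maps and only builds the `change_map` call for a known name. The helper names are hypothetical, and the check is only meaningful when run on the robot itself.

```python
import os
from typing import List

DEFAULT_MAP_STORE = os.path.join(os.path.expanduser("~"), ".pal", "ari_maps", "configurations")


def available_maps(root: str = DEFAULT_MAP_STORE) -> List[str]:
    """Sorted names of the map directories under `root` (empty if `root` does not exist)."""
    if not os.path.isdir(root):
        return []
    return sorted(e for e in os.listdir(root) if os.path.isdir(os.path.join(root, e)))


def change_map_command(name: str, root: str = DEFAULT_MAP_STORE) -> List[str]:
    """Build the documented change_map call after checking that the map exists locally."""
    maps = available_maps(root)
    if name not in maps:
        raise ValueError(f"map {name!r} not found in {root}; available: {maps}")
    return ["rosservice", "call", "/pal_map_manager/change_map", f"input: '{name}'"]
```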