PAL Interaction simulator

The interaction simulator is a hybrid simulation tool within ROS4HRI. It runs a substantial part of PAL’s real interaction pipeline, including hri_face_detect, hri_person_manager, communication_hub, expressive_eyes, and knowledge_core. Other elements are simulated: a chat interface replaces the ASR/TTS tools, and virtual objects can be dragged and dropped instead of being detected.

Running the simulator

To execute the simulator, run the following command in your development container or from PAL’s public tutorials Docker image:

ros2 launch interaction_sim simulator.launch.py

The interaction simulator starts several nodes, including:

gscam: Publishes images from the webcam.

hri_face_detect: Detects faces in images.

hri_person_manager: Combines faces, bodies, and voices into full person profiles.

hri_emotion_recognizer: Recognizes emotions on detected faces.

attention_manager: Determines where the robot should look based on detected faces.

expressive_eyes: Procedurally generates the robot’s face and moves its eyes.

communication_hub: Manages dialogues with the user.

knowledge_core: PAL’s OWL/RDF knowledge base.

hri_visualization: Generates a camera image overlay with detected faces, bodies, and emotions.

The simulator’s display is based on ROS’s RQt, with two custom plugins:

rqt_human_radar (documentation): Visualizes detected people around the robot, and enables adding virtual objects and people.

rqt_chat: Simulates chat interactions with the robot. Messages sent are published to the topic /humans/voices/anonymous_speaker/speech, and responses are sent to the action /tts_engine/tts.

The figure below gives a complete overview of the architecture.

People Perception

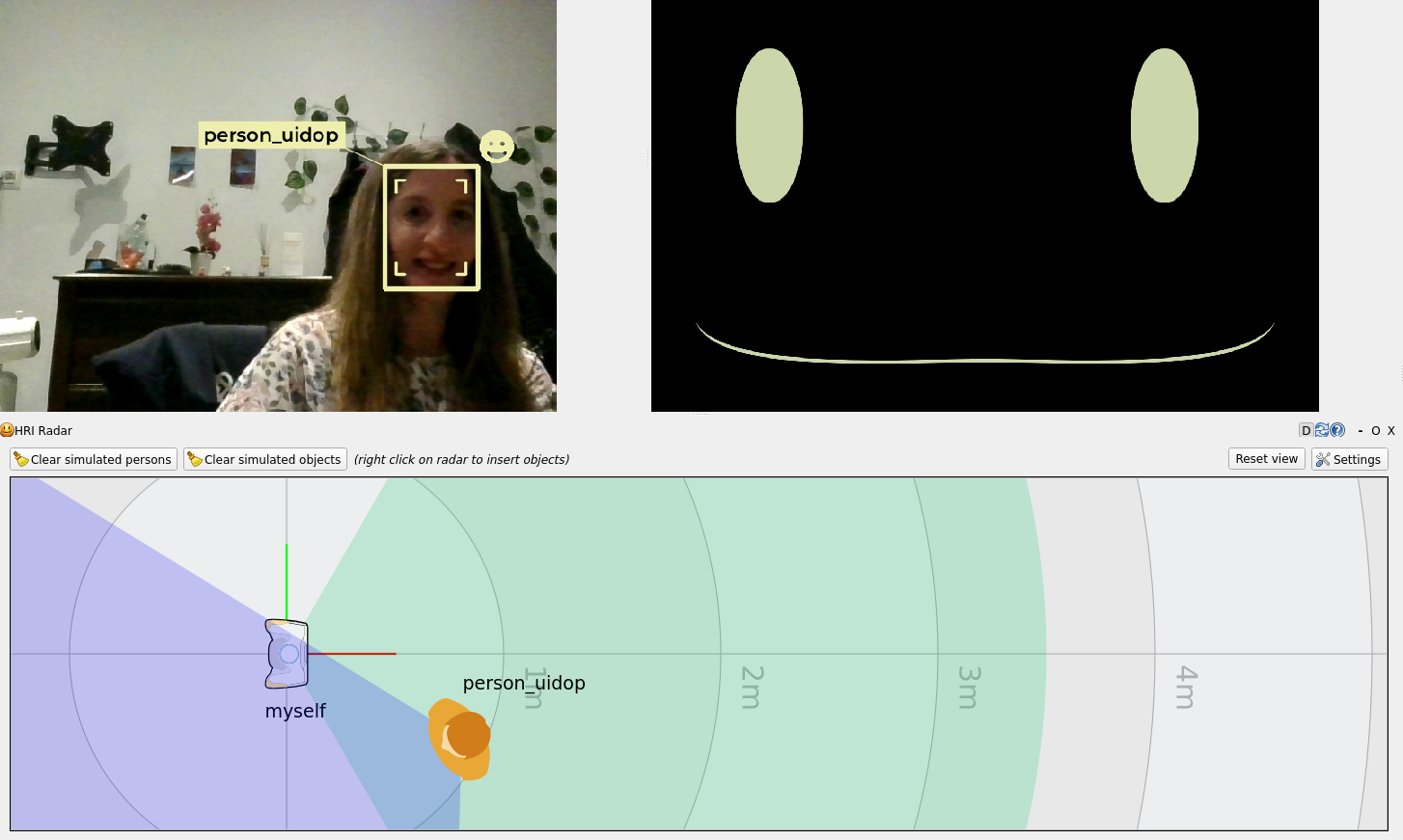

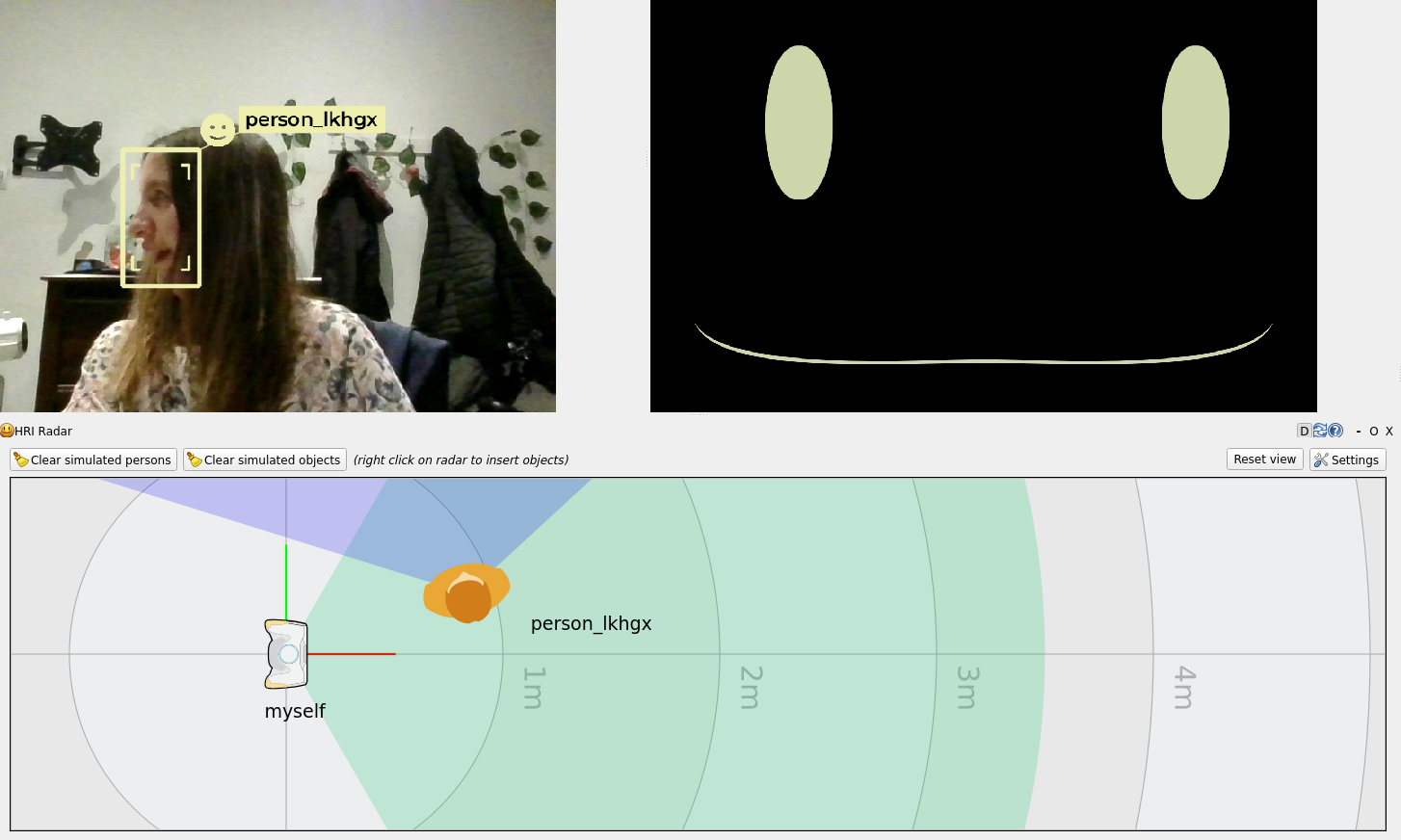

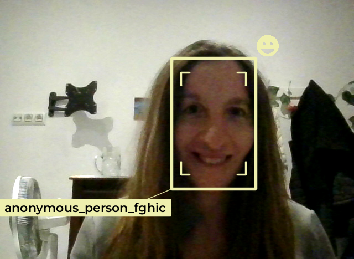

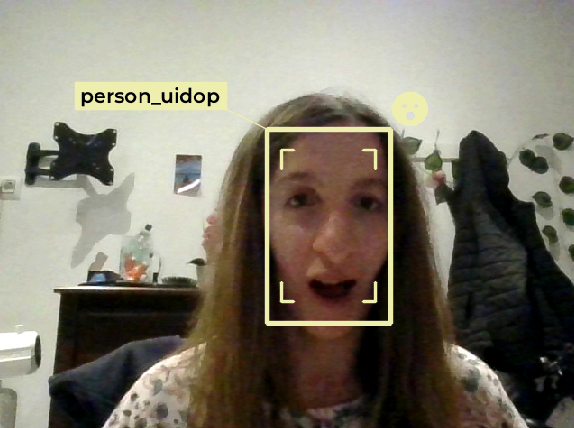

Displays the image captured by the USB camera, highlighting faces and bodies with bounding boxes. Each person is assigned a unique ID, and detected emotions are shown as emojis near their bounding boxes.

Testing Instructions

Position yourself in front of the camera.

Ensure your face is detected and given an ID.

Change facial expressions (e.g., smile, surprise) and observe corresponding emoji updates.

Happy face detection

Surprised face detection

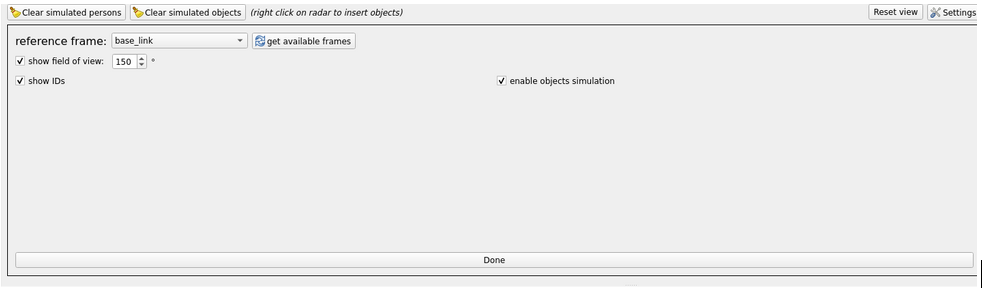

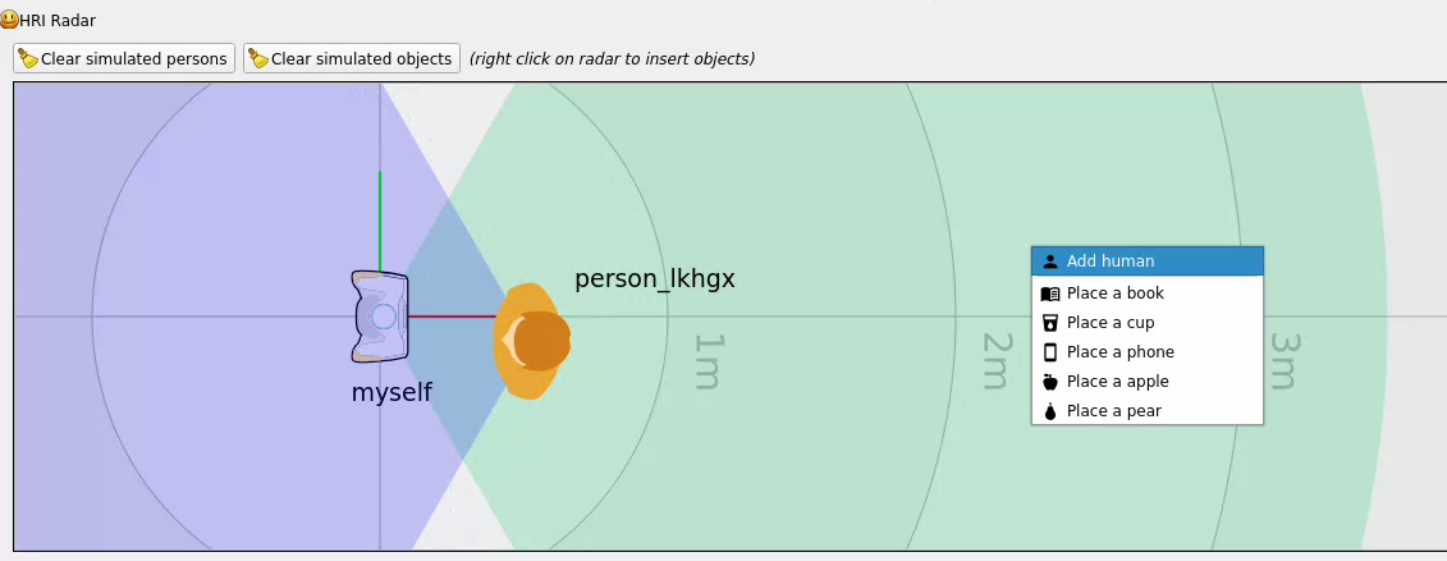

Human Radar

The Human Radar component displays (and optionally simulates) humans in the vicinity of the robot. It provides spatial data, including each person's distance and angle relative to the robot.

Testing Instructions

Place yourself in front of the camera and ensure detection.

Move side to side, or closer and further away, and observe the changes in the radar.
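The distance and angle shown by the radar can be illustrated with a small calculation. This is a minimal sketch, not the plugin's code: it assumes a 2D position expressed in the robot's frame with x pointing forward and y to the left (the usual ROS convention), and the function name `polar_from_robot` is hypothetical.

```python
import math


def polar_from_robot(x, y):
    """Return (distance in metres, bearing in degrees) of a point in the robot frame.

    Assumption: x points forward and y to the left, so a positive
    bearing means the person is on the robot's left-hand side.
    """
    distance = math.hypot(x, y)
    bearing = math.degrees(math.atan2(y, x))
    return distance, bearing


# A person standing 1 m ahead and 1 m to the left of the robot.
distance, bearing = polar_from_robot(1.0, 1.0)
print(f"{distance:.2f} m at {bearing:.1f} deg")  # prints "1.41 m at 45.0 deg"
```

Moving sideways in front of the camera mostly changes the bearing, while moving closer or further away mostly changes the distance.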

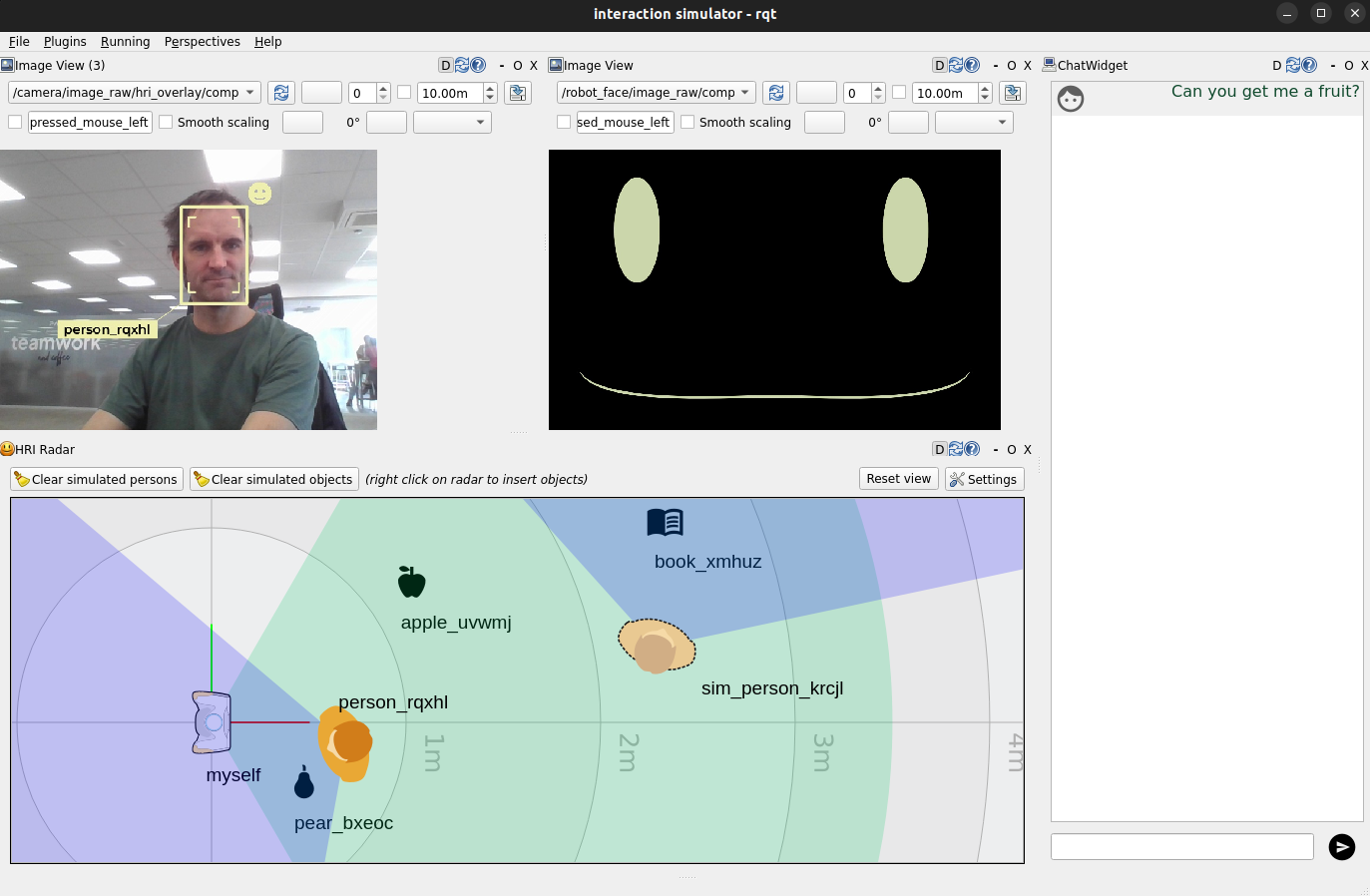

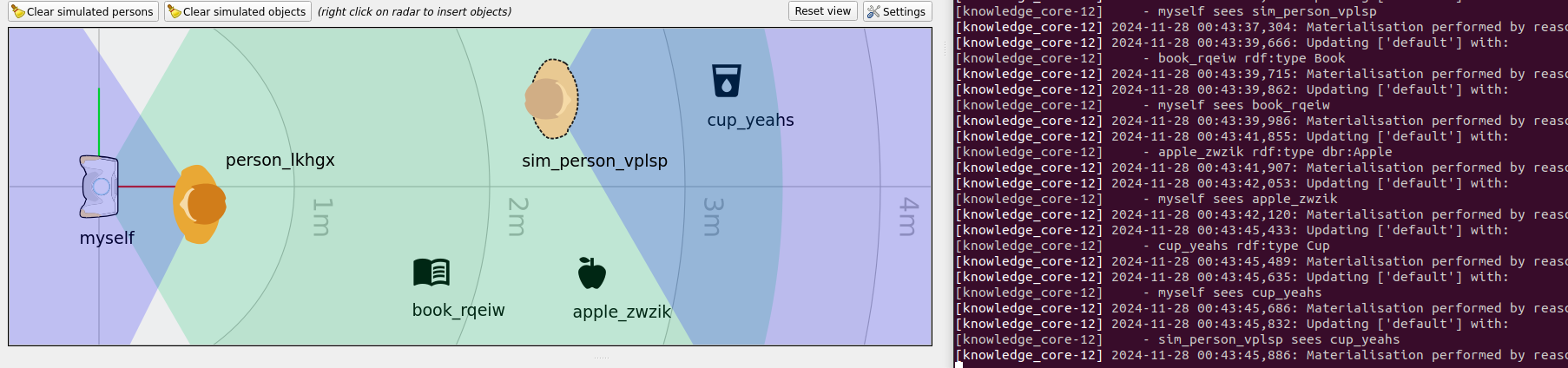

Simulating objects and interaction with the knowledge base

You can also add virtual objects or people by pressing .

Right-click and add one of the available objects, such as a book, a phone or an apple, or a simulated human.

The object is dropped at the designated location. The green zone is the field of view of the robot, and the blue zones are the fields of view of the humans (with the darker orange being the detected real user, and the lighter orange being a virtual human just added). Once you add an object, it is automatically added to the robot’s knowledge base.

You can drag and drop the object inside or outside of the robot’s field of view to automatically update the visibility status in the knowledge base.

- sim_person_vplsp rdf:type Human

- myself sees sim_person_vplsp

- book_rqeiw rdf:type Book

- myself sees book_rqeiw

- apple_zwzik rdf:type dbr:Apple

- myself sees apple_zwzik

- cup_yeahs rdf:type Cup

- myself sees cup_yeahs

- sim_person_vplsp sees cup_yeahs
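The visibility facts above can be thought of as the result of a field-of-view test for each observer. The following is a minimal sketch under stated assumptions, not the simulator's implementation: it models a field of view as a 2D sector, the `fov_deg` and `max_range` defaults are invented for illustration, and the object IDs `cup_a` and `book_b` are hypothetical.

```python
import math


def in_field_of_view(observer_xy, heading_deg, target_xy,
                     fov_deg=60.0, max_range=4.0):
    """Return True if the target lies inside the observer's view sector.

    fov_deg and max_range are hypothetical defaults, not the simulator's values.
    """
    dx = target_xy[0] - observer_xy[0]
    dy = target_xy[1] - observer_xy[1]
    if math.hypot(dx, dy) > max_range:
        return False
    bearing = math.degrees(math.atan2(dy, dx))
    # Wrap the angular offset into [-180, 180) before comparing.
    offset = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    return abs(offset) <= fov_deg / 2.0


def sees_statements(observer, observer_xy, heading_deg, objects):
    """List '<observer> sees <object>' statements for all visible objects."""
    return [f"{observer} sees {name}"
            for name, xy in objects.items()
            if in_field_of_view(observer_xy, heading_deg, xy)]


# One object in front of the robot, one behind it (hypothetical positions).
objects = {"cup_a": (1.0, 0.2), "book_b": (-1.0, 0.0)}
print(sees_statements("myself", (0.0, 0.0), 0.0, objects))
# prints ['myself sees cup_a']
```

Dragging an object across the sector boundary corresponds to the test flipping between True and False, which is when the matching `sees` statement would be added or removed.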

You can then query the knowledge base to list what the robot is currently seeing (i.e., objects or people in its field of view). In the figure above, the robot sees an apple, a cup, a book and two people, while sim_person_vplsp only sees a cup:

> ros2 service call /kb/query kb_msgs/srv/Query "patterns: ['myself sees ?var']"

requester: making request: kb_msgs.srv.Query_Request(patterns=['myself sees ?var'], vars=[], models=[])

response:

kb_msgs.srv.Query_Response(success=True, json='[{"var": "sim_person_vplsp"}, {"var": "person_lkhgx"}, {"var": "cup_yeahs"}, {"var": "apple_zwzik"}, {"var": "book_rqeiw"}]', error_msg='')

> ros2 service call /kb/query kb_msgs/srv/Query "patterns: ['sim_person_vplsp sees ?var']"

requester: making request: kb_msgs.srv.Query_Request(patterns=['sim_person_vplsp sees ?var'], vars=[], models=[])

response:

kb_msgs.srv.Query_Response(success=True, json='[{"var": "cup_yeahs"}]', error_msg='')
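The `json` field of the response is a JSON-encoded list with one object per match, keyed by the variable name used in the pattern. As a small sketch of how a client might unpack it, the snippet below parses the string copied from the first response above; the helper name `bound_values` is hypothetical and not part of kb_msgs.

```python
import json

# The `json` field copied from the first response shown above.
response_json = (
    '[{"var": "sim_person_vplsp"}, {"var": "person_lkhgx"}, '
    '{"var": "cup_yeahs"}, {"var": "apple_zwzik"}, {"var": "book_rqeiw"}]'
)


def bound_values(payload, variable="var"):
    """Return the value bound to `variable` in each result row."""
    return [row[variable] for row in json.loads(payload) if variable in row]


seen = bound_values(response_json)
print(seen)
# prints ['sim_person_vplsp', 'person_lkhgx', 'cup_yeahs', 'apple_zwzik', 'book_rqeiw']
```

The `variable` argument must match the name used after `?` in the query pattern (here `?var`).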

Note

If you add an object outside the field of view of the robot or a simulated human, it is added to the knowledge base as an object, but not as an object seen by any entity. Visibility is recorded per observer: in the example above, cup_yeahs is in the field of view of the simulated human, whereas apple_zwzik is only recorded as seen by myself (the robot).

Robot face

Displays the expressive face of the robot (e.g., TIAGo Pro face) and tracks people detected using the attention_manager.

Testing Instructions

Publish desired expressions directly via ROS on /robot_face/expression. See available expressions in Expression.msg.

ros2 topic pub /robot_face/expression hri_msgs/msg/Expression "expression: sad"
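If you want to trigger expressions from a script rather than typing the command each time, one option is to build the same command line in Python. This is a minimal sketch: the helper `expression_command` is hypothetical, and it adds the `--once` flag of `ros2 topic pub` so that a single message is published instead of a repeating stream. Only the `sad` value from the command above is used; check Expression.msg for other valid values.

```python
import shlex


def expression_command(expression):
    """Build the argument list for a one-shot `ros2 topic pub` call."""
    return [
        "ros2", "topic", "pub", "--once",
        "/robot_face/expression",
        "hri_msgs/msg/Expression",
        f"expression: {expression}",
    ]


cmd = expression_command("sad")
# Print a shell-quoted version that can be pasted into a terminal.
print(shlex.join(cmd))
# To send it from a sourced ROS 2 environment:
#   import subprocess; subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell-quoting problems with the YAML payload, which contains a space.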

Move side to side. As the position of the detected humans changes in the radar, watch the robot adjust its gaze to look at you (see example below).

Chat interface

The rqt_chat plugin simulates a person speaking by publishing on the /humans/voices/anonymous_speaker/speech topic (see /humans/voices/*/speech) whenever the user types in the chat. It also simulates a Text-to-Speech (TTS) engine by exposing a ‘fake’ say skill and displaying the response in the interface.

Aside from the simulation environment, you need to run the dialogue engine separately. Right now, it supports:

See Create an application with rpk on how to create a new application with LLM integration.

See also

PAL simulation tools

💡 Knowledge and reasoning for more information on the knowledge base.