Build a complete LLM-enabled interactive app

🏁 Goal of this tutorial

This tutorial will guide you through the installation and use of the ROS4HRI framework, a set of ROS nodes and tools to build interactive social robots.

We will use a set of pre-configured Docker containers to simplify the setup process.

We will also explore how a simple yet complete social robot architecture can be assembled using ROS 2, PAL Robotics’ toolset to quickly generate robot application templates, and an LLM backend.

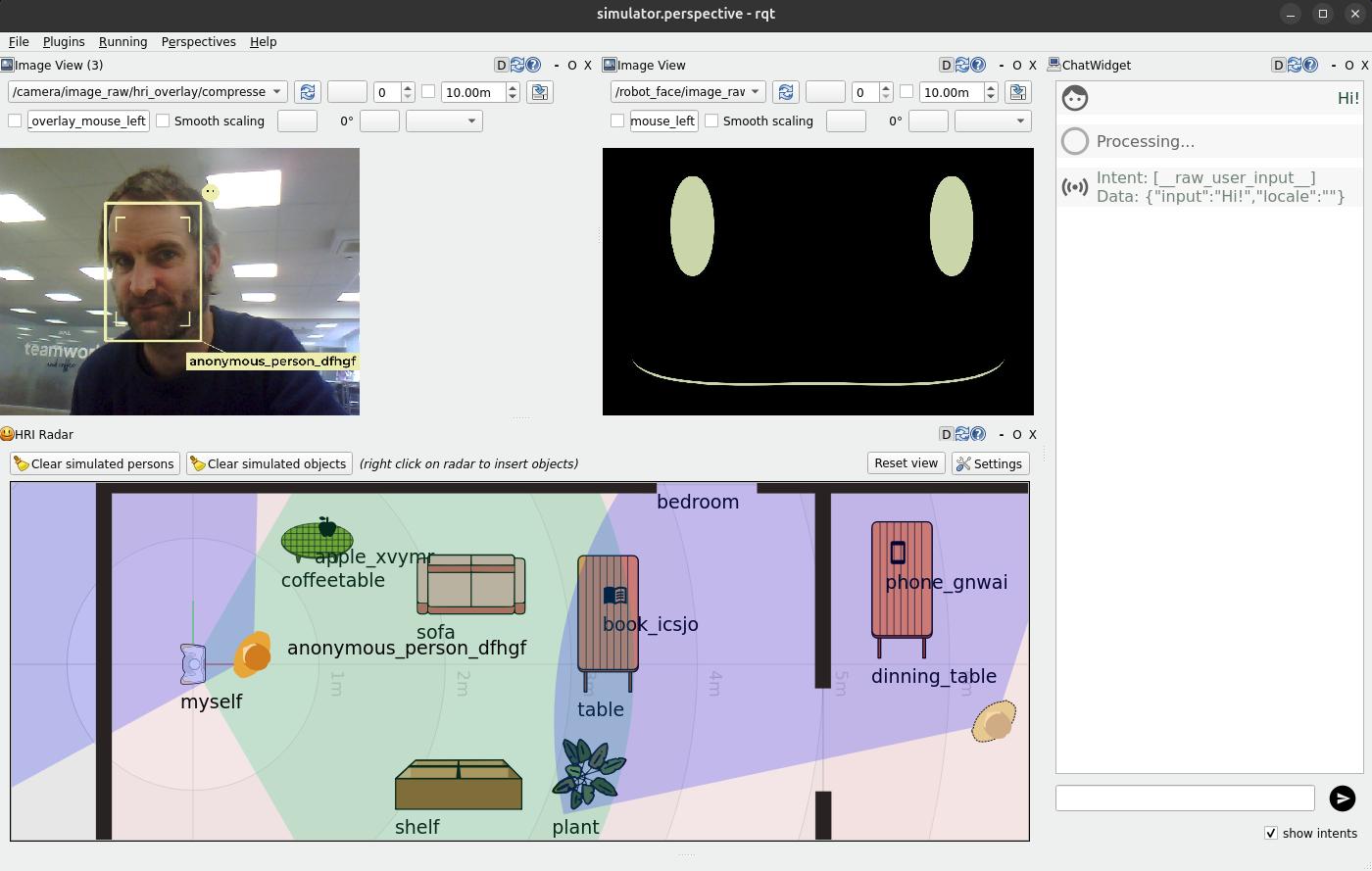

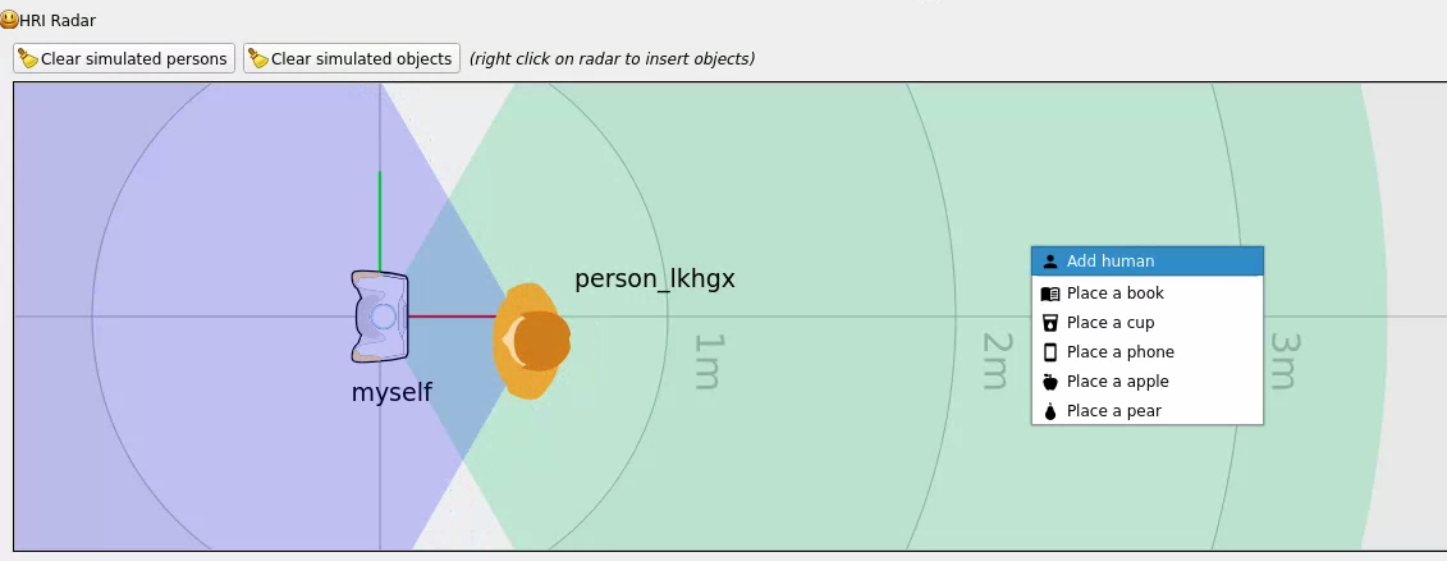

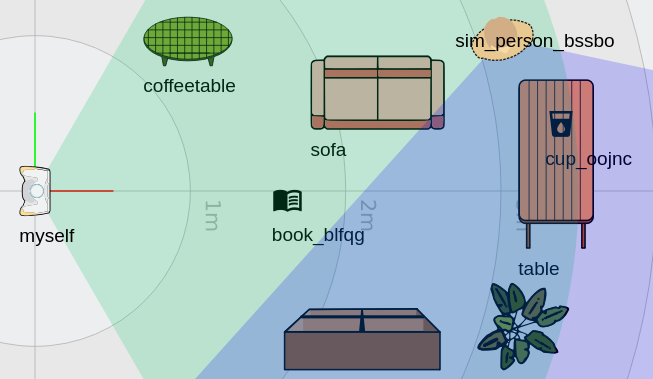

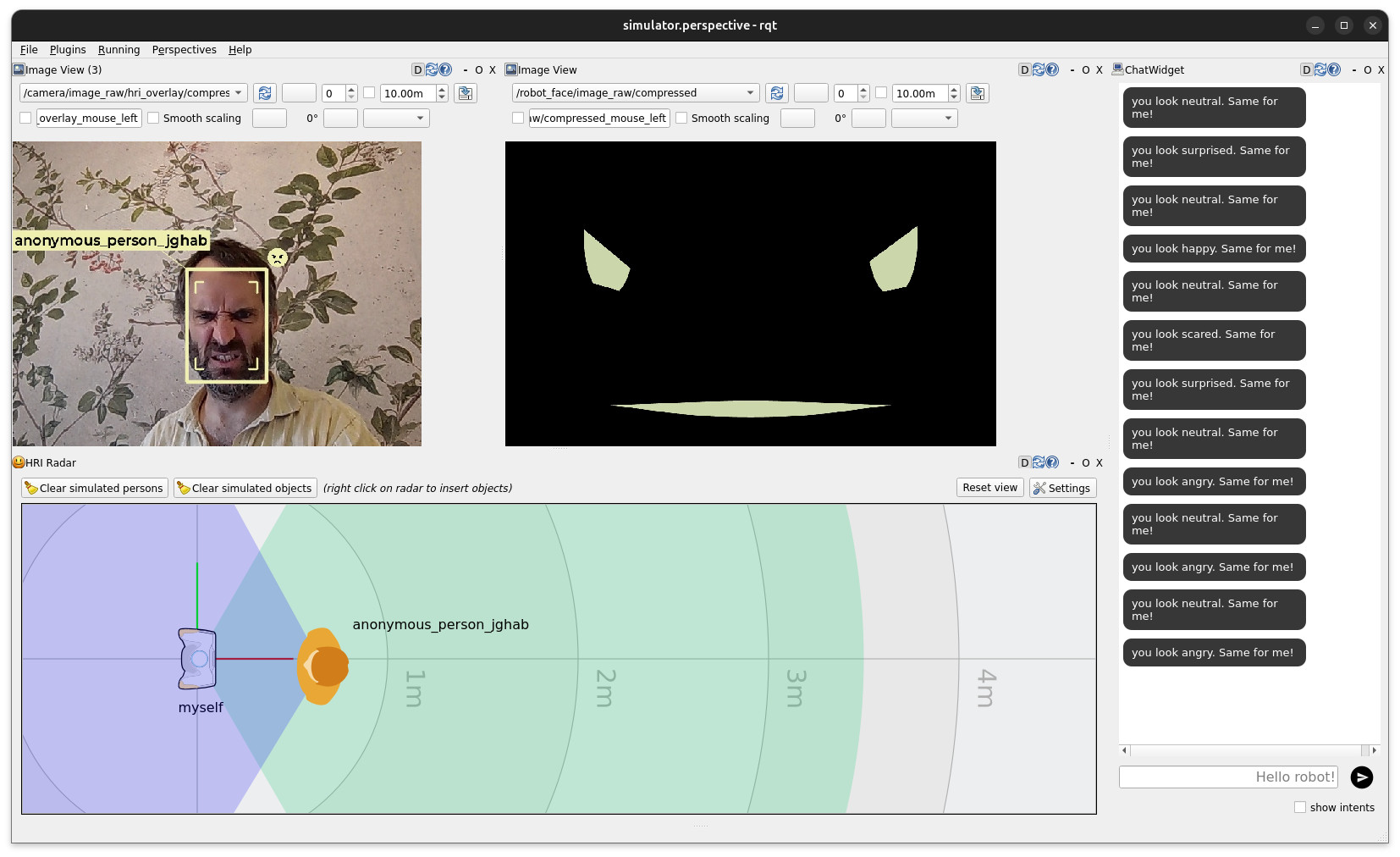

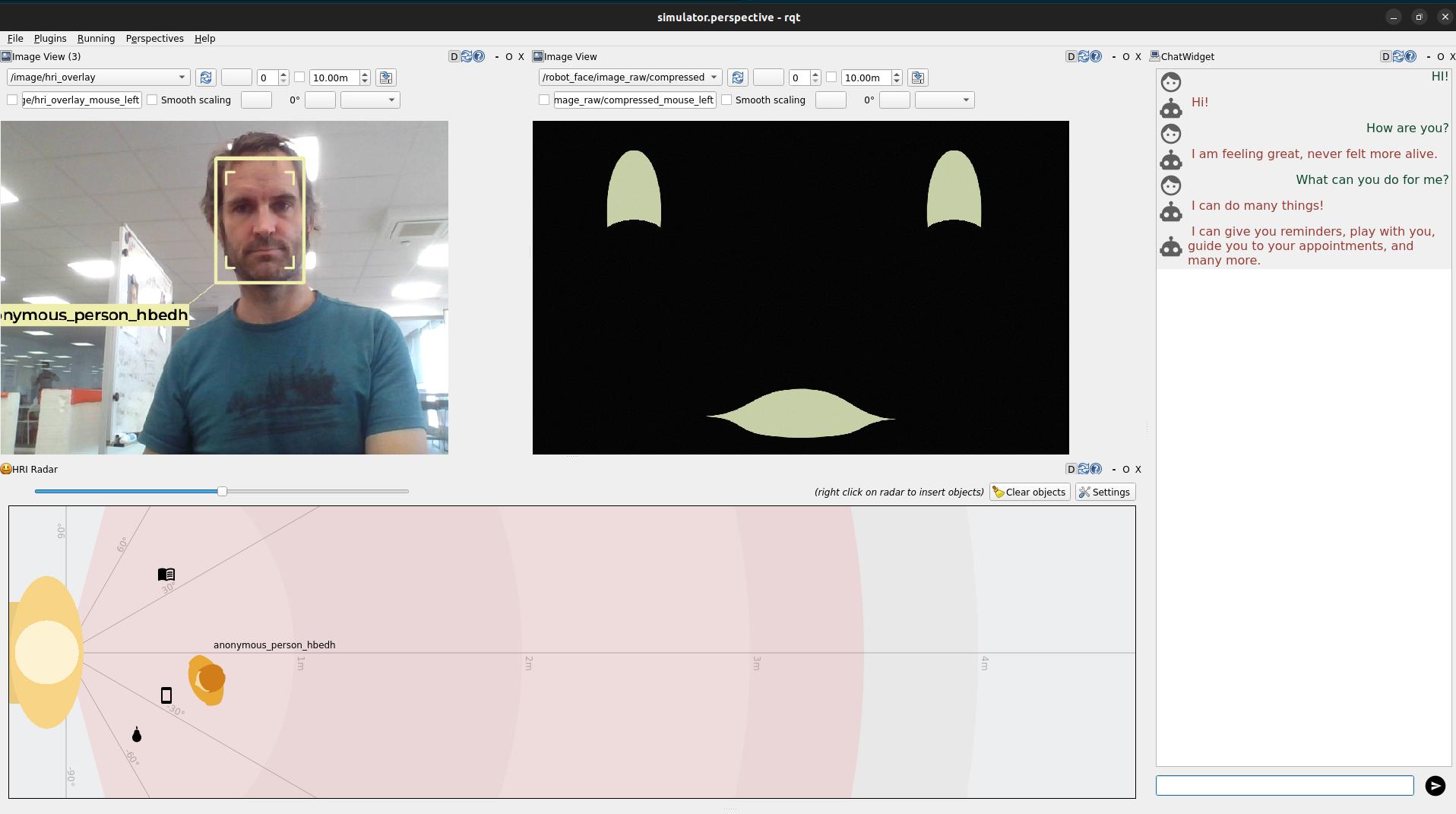

PAL’s social interaction simulator

PART 0: Preparing your environment

Pre-requisites

To follow the tutorial hands-on, you will need to be able to run a Docker container on your machine, with access to an X server (to display graphical applications like rviz and rqt). We will also use your computer's webcam.

Any recent Linux distribution should work, as well as macOS (with XQuartz installed).

The tutorial also assumes that you have a basic understanding of ROS 2 concepts (topics, nodes, launch files, etc.). If you are not familiar with ROS 2, you can check the official ROS 2 tutorials.

Get the public PAL tutorials Docker image

Fetch the PAL tutorials public Docker image:

docker pull palrobotics/public-tutorials-alum-devel:latest

Then, run the container, with access to your webcam and your X server.

xhost +
mkdir ros4hri-exchange
docker run -it --name ros4hri \
    --device /dev/video0:/dev/video0 \
    -e DISPLAY=$DISPLAY \
    -v /tmp/.X11-unix:/tmp/.X11-unix \
    -v `pwd`/ros4hri-exchange:/home/user/exchange \
    --net=host \
    palrobotics/public-tutorials-alum-devel:latest bash

Note

The --device option is used to pass the webcam to the container, and the -e DISPLAY=$DISPLAY and -v /tmp/.X11-unix:/tmp/.X11-unix options are used to display graphical applications on your screen.

PART 1: Warm-up with face detection

Start the webcam node

First, let’s start a webcam node to publish images from the webcam to ROS.

In the terminal, type:

ros2 run gscam gscam_node --ros-args -p gscam_config:='v4l2src device=/dev/video0 ! video/x-raw,framerate=30/1 ! videoconvert' \
    -p use_sensor_data_qos:=True \
    -p camera_name:=camera \
    -p frame_id:=camera \
    -p camera_info_url:=package://interaction_sim/config/camera_info.yaml

Note

The gscam node is a ROS 2 node that captures images from a webcam and publishes them on a ROS topic. The gscam_config parameter is used to specify the webcam device to use (/dev/video0), and the camera_info_url parameter is used to specify the camera calibration file. We use a default calibration file that works reasonably well with most webcams.

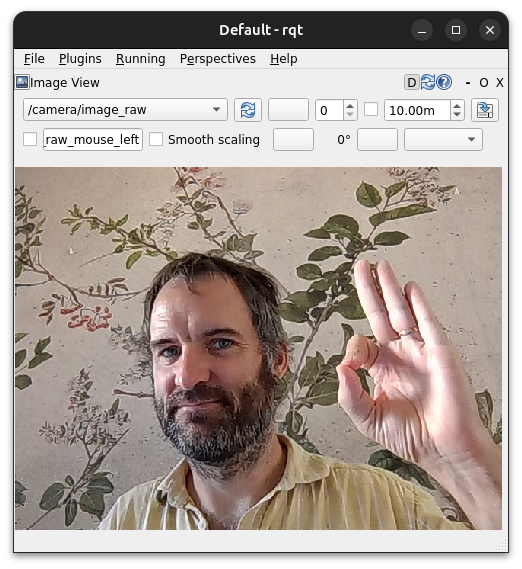

You can open rqt to check that the images are indeed published:

rqt

Note

If you need to open another Docker terminal, run

docker exec -it -u user ros4hri bash

Then, in the Plugins menu, select Visualization > Image View, and choose the topic /camera/image_raw:

rqt image view

Face detection

hri_face_detect is an open-source ROS 1/ROS 2 node, compatible with ROS4HRI, that detects faces in images. This node is installed by default on all PAL robots.

It is already installed in the Docker container.

By default, hri_face_detect expects images on the /image topic. Before starting the node, we need to configure topic remapping:

mkdir -p $HOME/.pal/config

nano $HOME/.pal/config/ros4hri-tutorials.yml

Then, paste the following content:

/hri_face_detect:
  remappings:
    image: /camera/image_raw
    camera_info: /camera/camera_info

Press Ctrl+O to save, then Ctrl+X to exit.
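If you would rather script this step than edit the file by hand, a minimal sketch could look like the following. The helper name `write_remapping_config` is hypothetical (it is not part of any PAL tool); the file name and YAML content are the ones given above. Note that the two levels of indentation in the YAML are significant.

```python
from pathlib import Path

# Same content as the configuration shown above; YAML indentation matters.
REMAPPING_CONFIG = """\
/hri_face_detect:
  remappings:
    image: /camera/image_raw
    camera_info: /camera/camera_info
"""


def write_remapping_config(config_dir: Path) -> Path:
    """Create config_dir if needed and write ros4hri-tutorials.yml into it.

    Hypothetical helper, for illustration only.
    """
    config_dir.mkdir(parents=True, exist_ok=True)
    config_file = config_dir / "ros4hri-tutorials.yml"
    config_file.write_text(REMAPPING_CONFIG)
    return config_file
```

Inside the container, you would call it as `write_remapping_config(Path.home() / ".pal" / "config")`.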

Then, you can launch the node:

ros2 launch hri_face_detect face_detect.launch.py

You should see on your console which configuration files are used:

$ ros2 launch hri_face_detect face_detect.launch.py
[INFO] [launch]: All log files can be found below /home/user/.ros/log/2024-10-16-12-39-10-518981-536d911a0c9c-203
[INFO] [launch]: Default logging verbosity is set to INFO
[INFO] [launch.user]: Loaded configuration for <hri_face_detect>:
- System configuration (from lower to higher precedence):
    - /opt/pal/alum/share/hri_face_detect/config/00-defaults.yml
- User overrides (from lower to higher precedence):
    - /home/user/.pal/config/ros4hri-tutorials.yml
[INFO] [launch.user]: Parameters:
- processing_rate: 30
- confidence_threshold: 0.75
- image_scale: 0.5
- face_mesh: True
- filtering_frame: camera_color_optical_frame
- deterministic_ids: False
- debug: False
[INFO] [launch.user]: Remappings:
- image -> /camera/image_raw
- camera_info -> /camera/camera_info
[INFO] [face_detect-1]: process started with pid [214]
...

Note

This way of managing launch parameters and remappings is not part of base ROS 2: it is an extension (available in ROS 2 Humble) provided by PAL Robotics to simplify the configuration of ROS 2 nodes.

See for instance the launch file of hri_face_detect to understand how it is used.

You should immediately see on the console that some faces are indeed detected.

Let’s visualise them. Start rviz2:

rviz2

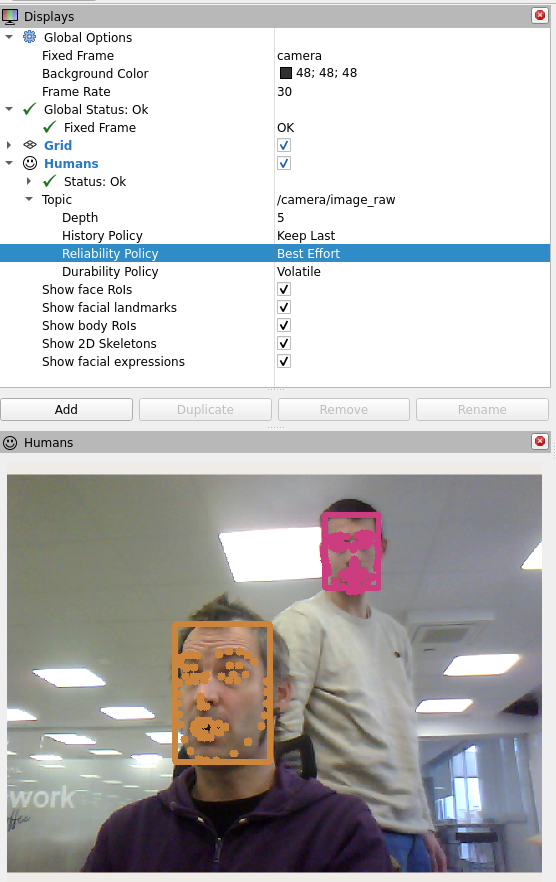

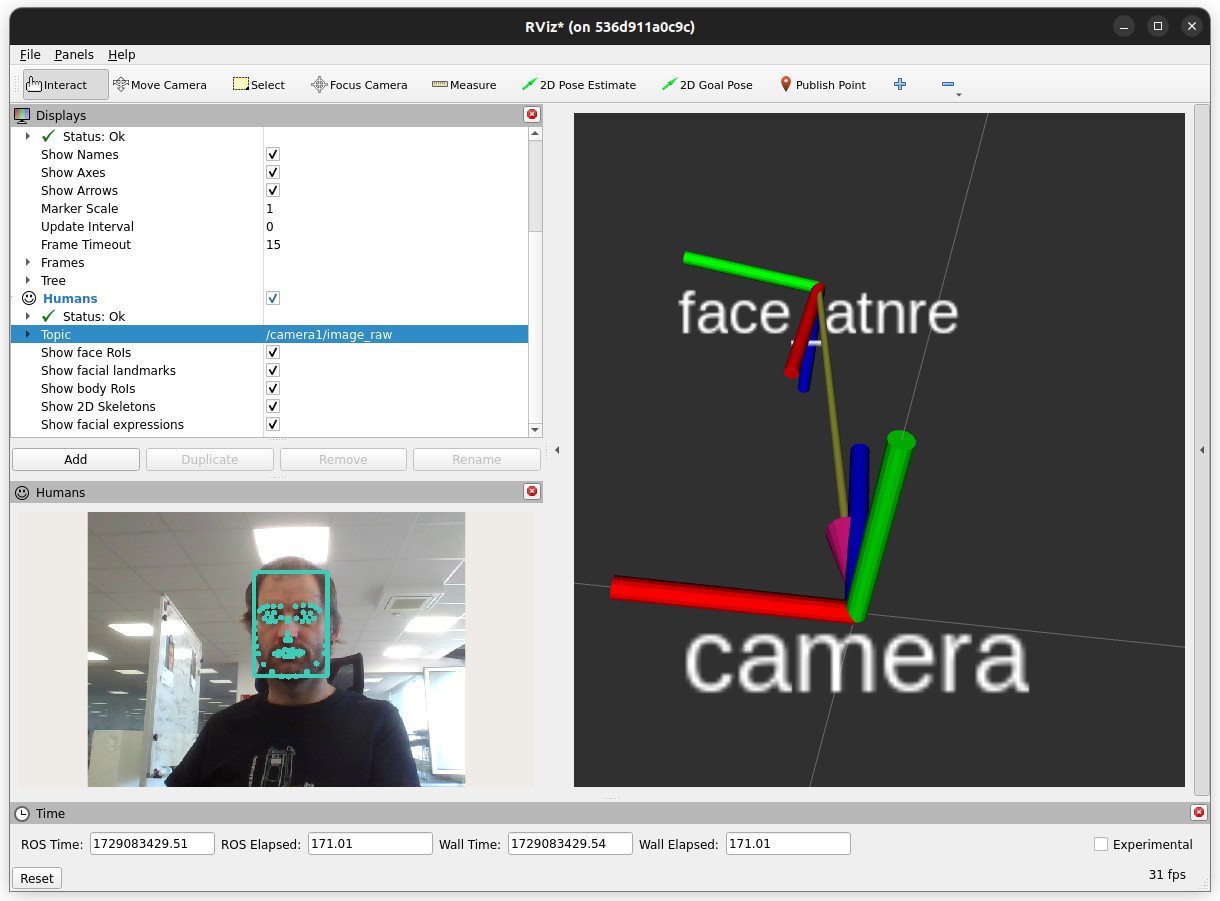

In rviz, visualize the detected faces by adding the Humans plugin, which you can find in the hri_rviz plugins group. The plugin setup requires you to specify the image stream you want to use to visualize the detection results, in this case /camera/image_raw. You can also find the plugin as one of those available for the /camera/image_raw topic.

Important

Set the quality of service (QoS) of the /camera/image_raw topic to Best Effort, otherwise no image will be displayed:

Set the QoS of the /camera/image_raw topic to Best Effort

In rviz, also enable the tf plugin, and set the fixed frame to camera. You should now see a 3D frame representing the position and orientation of your face.

rviz showing a 3D face frame

📚 Learn more

This tutorial does not go much further with exploring the ROS4HRI tools and nodes. However, you can find more information:

in the 👥 Social perception section of this documentation

in the ROS4HRI wiki page

You can also check the ROS4HRI Github organisation and the original paper.

PART 4: Integration with LLMs

Adding a chatbot

Step 1: creating a chatbot

Use rpk to create a new chatbot skill using the basic chatbot intent extraction template:

$ rpk create -p src intent
ID of your application? (must be a valid ROS identifier without spaces or hyphens. eg 'robot_receptionist')
chatbot
Full name of your skill/application? (eg 'The Receptionist Robot' or 'Database connector', press Return to use the ID. You can change it later)

Choose a template:
1: basic chatbot template [python]
2: complete intent extraction example: LLM bridge using the OpenAI API (ollama, chatgpt) [python]

Your choice? 1

What robot are you targeting?
1: Generic robot (generic)
2: Generic PAL robot/simulator (generic-pal)
3: PAL ARI (ari)
4: PAL TIAGo (tiago)
5: PAL TIAGo Pro (tiago-pro)
6: PAL TIAGo Head (tiago-head)

Your choice? (default: 1: generic) 2

Compile the chatbot:

colcon build

source install/setup.bash

Then, start your chatbot:

ros2 launch chatbot chatbot.launch.py

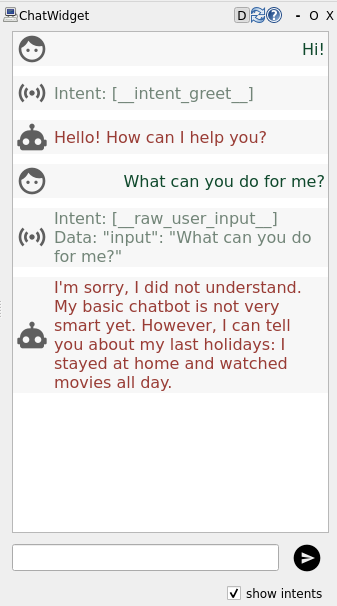

If you now type a message in the rqt_chat plugin, you should see the chatbot responding to it:

Chatbot responding to a message

If you tick the checkbox at the bottom, you can also see in the chat window the intents that the chatbot has identified in the user input. For now, our basic chatbot only recognises the __intent_greet__ intent when you type Hi or Hello.

Step 2: integrating the chatbot with the mission controller

To fully understand the intent pipeline, we will modify the chatbot to recognise a ‘pick up’ intent, and the mission controller to handle it.

Open chatbot/node_impl.py and modify your chatbot to check whether incoming speech matches [please] pick up [the] <object>:

import re

class BasicChatbot(Node):

    # [...]

    def contains_pickup(self, sentence):
        sentence = sentence.lower()

        # matches sentences like '[please] pick up [the] <object>'
        # and returns <object> (or None if the sentence does not match)
        pattern = r"(?:please\s+)?pick\s+up\s+(?:the\s+)?(\w+)"
        match = re.search(pattern, sentence)
        if match:
            return match.group(1)
        return None
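To get a feel for what this pattern accepts before wiring it into the node, you can try it in a plain Python interpreter. The sketch below reuses the same regular expression in a standalone function (the function name `extract_pickup_object` is only for illustration):

```python
import re

# Same pattern as in contains_pickup above
PICKUP_PATTERN = re.compile(r"(?:please\s+)?pick\s+up\s+(?:the\s+)?(\w+)")


def extract_pickup_object(sentence: str):
    """Return the object named in a 'pick up' request, or None."""
    match = PICKUP_PATTERN.search(sentence.lower())
    return match.group(1) if match else None


for sentence in ["Please pick up the cup", "pick up bottle", "Hello robot"]:
    print(f"{sentence!r} -> {extract_pickup_object(sentence)!r}")
```

Because the pattern captures a single word (`\w+`), a request like "pick up the red cup" yields `red` rather than `cup`. This is one of the limitations that motivates moving to an LLM later in this tutorial.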

Then, in the on_dialogue_interaction method, check if the incoming speech matches the pattern, and if so, return a __intent_grab_object__ (see Intents for more details about intents and their expected parameters):

# requires `import json` at the top of the file

def on_dialogue_interaction(self, request, response):

    #...

    pick_up_object = self.contains_pickup(input)

    if pick_up_object:
        self.get_logger().warn(f"I think the user wants to pick up a {pick_up_object}. Sending a GRAB_OBJECT intent")
        intents.append(Intent(intent=Intent.GRAB_OBJECT,
                              data=json.dumps({"object": pick_up_object}),
                              source=user_id,
                              modality=Intent.MODALITY_SPEECH,
                              confidence=.8))
        suggested_response = f"Sure, let me pick up this {pick_up_object}"

    # elif ...

Note

The Intent message is defined in the hri_actions_msgs package, and contains the intent, the data associated with the intent, the source of the intent (here, the current user_id), the modality (here, speech), and the confidence of the recognition.

Check the Intents documentation for details, or directly the Intent.msg definition.
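To make the shape of an intent concrete without a ROS installation, here is a rough sketch using a plain dataclass. `IntentSketch` is a hypothetical stand-in for the real message, not the actual `hri_actions_msgs` type; the field names are the ones listed above, and the modality string is an assumed value (in the node, use the `Intent.MODALITY_SPEECH` constant).

```python
import json
from dataclasses import dataclass


@dataclass
class IntentSketch:
    """Hypothetical stand-in for hri_actions_msgs/Intent, for illustration only."""
    intent: str
    data: str = ""          # JSON-encoded parameters of the intent
    source: str = ""        # who expressed the intent (here, a user id)
    modality: str = ""      # how it was expressed
    confidence: float = 0.0


def make_grab_intent(obj: str, user_id: str) -> IntentSketch:
    """Build the equivalent of the GRAB_OBJECT intent created in the chatbot."""
    return IntentSketch(
        intent="__intent_grab_object__",
        data=json.dumps({"object": obj}),
        source=user_id,
        modality="__modality_speech__",  # assumed value of Intent.MODALITY_SPEECH
        confidence=0.8,
    )


print(make_grab_intent("cup", "user1"))
```

The key point is that `data` is a JSON string, not a dictionary: the receiver has to decode it before accessing fields like `object`.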

Test your updated chatbot by recompiling the workspace (colcon build) and relaunching the chatbot.

If you now type pick up the cup in the chat window, you should see the chatbot recognising the intent and sending a GRAB_OBJECT intent to the mission controller.

Finally, modify the mission controller function that handles inbound intents so that it manages the GRAB_OBJECT intent. Open emotion_mirror/mission_controller.py and modify the on_intent method to handle the GRAB_OBJECT intent:

def on_intent(self, msg):
    #...
    # note: `data` is assumed to have been decoded from msg.data
    # (a JSON string) in the elided code above

    if msg.intent == Intent.GRAB_OBJECT:
        # on a real robot, you would call here a manipulation skill
        goal = Say.Goal()
        goal.input = f"<set expression(tired)> That {data['object']} is really heavy...! <set expression(neutral)>"
        self.say.send_goal_async(goal)

    # ...

Re-compile and re-run the mission controller. If you now type pick up the cup in the chat window, you should see the mission controller reacting to it.
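The decoding step on the mission controller side can be sketched in isolation as follows. This is a simplified, ROS-free illustration: the function name `say_text_for_intent` is hypothetical, and it only assumes what the chatbot code above establishes, namely that the intent payload is a JSON string with an `object` field.

```python
import json


def say_text_for_intent(intent: str, raw_data: str):
    """Return the sentence to say for a given intent, or None if unhandled."""
    # the payload is a JSON string; an empty string means "no parameters"
    data = json.loads(raw_data) if raw_data else {}

    if intent == "__intent_grab_object__":
        # fall back to a generic word if the payload has no 'object' field
        obj = data.get("object", "object")
        return (f"<set expression(tired)> That {obj} is really heavy...! "
                "<set expression(neutral)>")
    return None


print(say_text_for_intent("__intent_grab_object__", '{"object": "cup"}'))
```

Using `data.get(...)` rather than `data['object']` avoids a `KeyError` if a chatbot sends the intent without the expected parameter.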

📚 Learn more

In this example, we directly use the say skill to respond to the user.

When developing a full application, you usually want to split your architecture into multiple nodes, each responsible for a specific task.

The PAL application model, based on the RobMoSys methodology, encourages the development of a single mission controller, and a series of tasks and skills that are orchestrated by the mission controller.

You can read more about this model here: 📝 Developing robot apps.

Integrating with a Large Language Model (LLM)

Next, let’s integrate with an LLM.

Step 1: install ollama

ollama is an open-source tool that provides a simple REST API to interact with a variety of LLMs. It makes it easy to install different LLMs, and to call them using the same REST API as, e.g., OpenAI’s ChatGPT.

To install ollama on your machine, follow the instructions on the official repository:

Note

For convenience, we recommend installing ollama outside of your Docker environment, directly on your host machine.

curl -fsSL https://ollama.com/install.sh | sh

Once it is installed, you can start the ollama server with:

ollama serve

Open a new terminal, and run the following command to download a first model and check it works:

ollama run gemma3:1b

Note

Visit the ollama model page to see the list of available models.

Depending on the size of the model and your computer configuration, the response time can be quite long.

Having a fast GPU helps :-)

Step 2: calling ollama from the chatbot

ollama can be accessed from your code either by calling the REST API directly, or by using the ollama Python binding. While the REST API is more flexible (and makes it possible to easily use other OpenAI-compatible services, like ChatGPT), the Python binding is very easy to use.

Note

If you are curious about the REST API, use the rpk LLM chatbot template to generate an example of a chatbot that calls ollama via the REST API.
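For reference, a direct REST call can be sketched with the Python standard library alone. This sketch is not the code generated by the rpk template; it targets ollama's native `/api/chat` endpoint on the default local address, and the helper names `build_chat_request` and `chat` are hypothetical.

```python
import json
import urllib.request

# default address of a local `ollama serve`; adjust if ollama runs elsewhere
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_chat_request(messages, model="gemma3:1b", url=OLLAMA_URL):
    """Prepare (but do not send) a non-streaming chat request."""
    payload = {"model": model, "messages": messages, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(messages, model="gemma3:1b", timeout=30.0):
    """Send the request and return the assistant's text.

    Requires a running ollama server with the model already pulled.
    """
    request = build_chat_request(messages, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["message"]["content"]
```

With the server running, `chat([{"role": "user", "content": "Hello"}])` returns the model's answer as a string. Setting `"stream": False` asks for a single JSON document instead of a stream of partial responses, which keeps the parsing trivial.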

Install the ollama Python binding inside your Docker image:

pip install ollama

Modify your chatbot to connect to ollama, using a custom prompt. Open chatbot/chatbot/node_impl.py and make the following changes:

# add to the imports
from ollama import Client

# ...

class BasicChatbot(Node):

    # modify the constructor:
    def __init__(self) -> None:
        # ...

        self._ollama_client = Client()
        # if ollama does not run on the local host, you can specify the host and
        # port. For instance:
        # self._ollama_client = Client("x.x.x.x:11434")

        # dialogue history
        self.messages = [
            {"role": "system",
             "content": """
                You are a helpful robot, always eager to help.
                You always respond with concise and to-the-point answers.
             """
             }]

    # ...

    # modify on_dialogue_interaction:
    def on_dialogue_interaction(self,
                                request: DialogueInteraction.Request,
                                response: DialogueInteraction.Response):

        # we can have multiple dialogues: we use the dialogue_id to
        # identify to which conversation this new input belongs
        target_dialogue_id = request.dialogue_id

        user_id = request.user_id
        input = request.input

        # response_expected might be False if, for instance, the robot
        # itself is saying something. It might be useful to add it to
        # the dialogue history with the user, but we do not need to
        # generate a response.
        response_expected = request.response_expected
        suggested_response = ""
        intents = []

        self.get_logger().info(f"input from {user_id} to dialogue {target_dialogue_id}: {input}")
        self._nb_requests += 1

        self.messages.append({"role": "user", "content": input})

        llm_res = self._ollama_client.chat(
            messages=self.messages,
            model="gemma3:1b"
        )

        content = llm_res.message.content

        self.get_logger().info(f"The LLM answered: {content}")

        self.messages.append({"role": "assistant", "content": content})

        response.response = content
        response.intents = []
        return response

As you can see, calling ollama is as simple as creating a Client object and calling its chat method with the messages to send to the LLM and the model to use.

In this example, we append to the chat history (self.messages) the user input and the LLM response after each interaction, thus building a complete dialogue.
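The way the history grows can be seen without any LLM at all. In the sketch below, `fake_llm` is a stand-in that simply echoes the last user message, and `DialogueHistory` is a hypothetical helper that mirrors what on_dialogue_interaction does with self.messages:

```python
def fake_llm(messages):
    """Stand-in for the LLM call: echoes the last user message."""
    return f"You said: {messages[-1]['content']}"


class DialogueHistory:
    """Keeps the system prompt plus every user/assistant turn, in order."""

    def __init__(self, system_prompt: str):
        self.messages = [{"role": "system", "content": system_prompt}]

    def exchange(self, user_input: str, llm=fake_llm) -> str:
        # the LLM always receives the full history, including the new input
        self.messages.append({"role": "user", "content": user_input})
        answer = llm(self.messages)
        self.messages.append({"role": "assistant", "content": answer})
        return answer


history = DialogueHistory("You are a helpful robot.")
history.exchange("Hello")
history.exchange("What can you do?")
print([m["role"] for m in history.messages])
```

After two exchanges, the history holds five messages: the system prompt followed by two user/assistant pairs. Since the whole list is sent on every call, long conversations make each request progressively larger.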

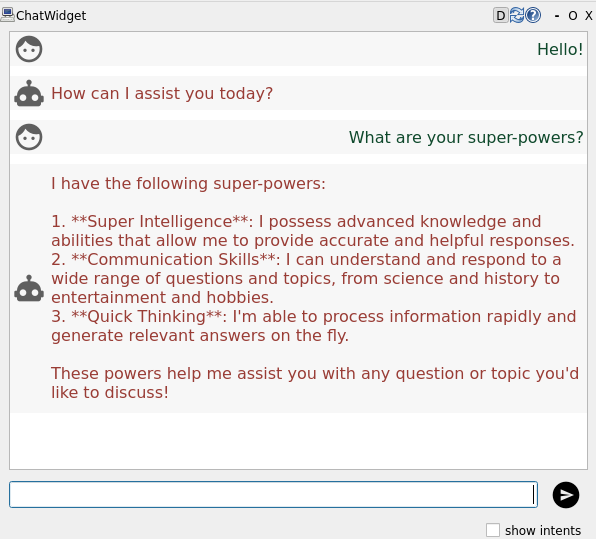

Recompile and restart the chatbot. If you now type a message in the chat window, you should see the chatbot responding with a text generated by the LLM:

Example of a chatbot response generated by an LLM (in this example, Phi4)

Attention

Depending on the LLM model you use, the response time can be quite long. By default, after 10s, communication_hub will time out. In that case, the chatbot answer will not be displayed in the chat window.
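One possible way to cope with slow models is to bound the time spent waiting for the LLM and return a short fallback sentence instead. The sketch below is not part of the tutorial's chatbot; `call_with_deadline` is a hypothetical helper built on the standard library, and `slow_llm` merely simulates a slow model.

```python
import concurrent.futures
import time


def call_with_deadline(fn, deadline_s: float, fallback: str) -> str:
    """Run fn() in a worker thread; return fallback if it takes too long."""
    executor = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = executor.submit(fn)
    try:
        return future.result(timeout=deadline_s)
    except concurrent.futures.TimeoutError:
        return fallback
    finally:
        # do not block on the call if it is still running
        executor.shutdown(wait=False)


def slow_llm():
    time.sleep(0.5)  # simulates a slow model
    return "late answer"


print(call_with_deadline(slow_llm, 0.1, "Let me think about it..."))
print(call_with_deadline(lambda: "quick answer", 1.0, "Let me think about it..."))
```

Note that the slow call is not cancelled: it keeps running in the background and its result is discarded. In the chatbot, you would pick a deadline somewhat below the 10s limit mentioned above.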

Step 3: extract user intents

To recognise intents from the LLM response, we can use a combination of prompt engineering and LLM structured output.

To generate structured output (i.e., a JSON-structured response that includes the recognised intents), we first need to write a Python object that corresponds to the expected output of the LLM:

from pydantic import BaseModel
from typing import Literal
from hri_actions_msgs.msg import Intent

# Define the data models for the chatbot response and the user intent
class IntentModel(BaseModel):
    type: Literal[Intent.BRING_OBJECT,
                  Intent.GRAB_OBJECT,
                  Intent.PLACE_OBJECT,
                  Intent.GUIDE,
                  Intent.MOVE_TO,
                  Intent.SAY,
                  Intent.GREET,
                  Intent.START_ACTIVITY,
                  ]
    object: str | None
    recipient: str | None
    input: str | None
    goal: str | None

class ChatbotResponse(BaseModel):
    verbal_ack: str | None
    user_intent: IntentModel | None

Here, we use the type BaseModel from the pydantic library so that we can generate the formal model corresponding to this Python object (using the JSON schema specification).

Then, modify the chatbot to force the LLM to return a JSON-structured response that includes the recognised intents:

    # ...
    def on_dialogue_interaction(self,
                                request: DialogueInteraction.Request,
                                response: DialogueInteraction.Response):

        target_dialogue_id = request.dialogue_id
        user_id = request.user_id
        input = request.input
        response_expected = request.response_expected
        suggested_response = ""
        intents = []

        self.get_logger().info(f"input from {user_id} to dialogue {target_dialogue_id}: {input}")
        self._nb_requests += 1

        self.messages.append({"role": "user", "content": input})

        llm_res = self._ollama_client.chat(
            messages=self.messages,
            model="gemma3:1b",
            format=ChatbotResponse.model_json_schema()  # <- the magic happens here
        )

        json_res = ChatbotResponse.model_validate_json(llm_res.message.content)

        self.get_logger().info(f"The LLM answered: {json_res}")

        verbal_ack = json_res.verbal_ack
        if verbal_ack:
            # if we have a verbal acknowledgement, add it to the dialogue history,
            # and send it to the user
            self.messages.append({"role": "assistant", "content": verbal_ack})
            response.response = verbal_ack

        user_intent = json_res.user_intent
        if user_intent:
            response.intents = [Intent(
                intent=user_intent.type,
                data=json.dumps(user_intent.model_dump())
            )]

        return response

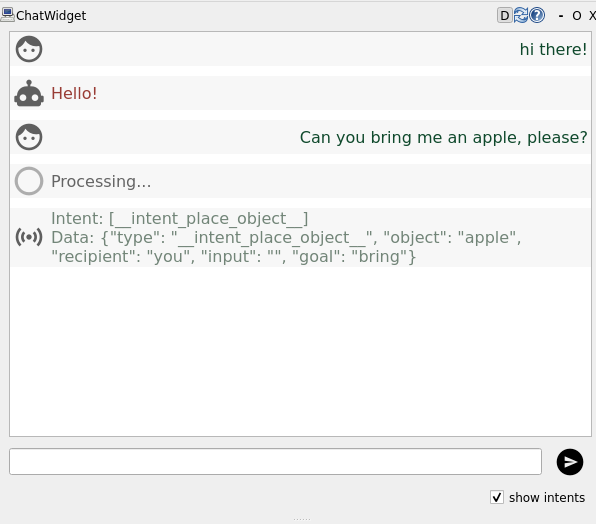

Now, the LLM will always return a JSON-structured response that includes an intent (if one was recognised), and a verbal acknowledgement. For instance, when asking the robot to bring an apple, it returns an intent PLACE_OBJECT with the object apple:

Example of a structured LLM response
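To see what the validation step amounts to, here is a dependency-free approximation written with the standard library only. It is a simplified sketch, not a replacement for pydantic: `parse_llm_response` is a hypothetical helper, and `KNOWN_INTENTS` lists only the intent names that appear literally in this tutorial.

```python
import json

# only the intent names spelled out in this tutorial; the real list is longer
KNOWN_INTENTS = {
    "__intent_greet__",
    "__intent_say__",
    "__intent_grab_object__",
    "__intent_start_activity__",
}


def parse_llm_response(raw: str):
    """Return (verbal_ack, user_intent) from the LLM's JSON text.

    Malformed JSON and unknown intents are dropped rather than raising.
    """
    try:
        res = json.loads(raw)
    except json.JSONDecodeError:
        return None, None
    if not isinstance(res, dict):
        return None, None

    intent = res.get("user_intent")
    if not isinstance(intent, dict) or intent.get("type") not in KNOWN_INTENTS:
        intent = None
    return res.get("verbal_ack"), intent


raw = '{"verbal_ack": "Sure", "user_intent": {"type": "__intent_grab_object__", "object": "apple"}}'
print(parse_llm_response(raw))
```

Unlike this sketch, `ChatbotResponse.model_validate_json` raises an exception on invalid input, so in the chatbot you may want to catch that exception and answer with a generic sentence.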

Step 4: prompt engineering to improve intent recognition

To improve the intent recognition, we can use prompt engineering: we can provide the LLM with a prompt that will guide it towards generating a response that includes the intents we are interested in.

One key trick is to provide the LLM with examples of the intents we are interested in.

Here is an example of a longer prompt that would yield better results:

PROMPT = """
You are a friendly robot called $robot_name. You try to help the user to the best of your abilities.
You are always helpful, and ask further questions if the desires of the user are unclear.
Your answers are always polite yet concise and to-the-point.

Your aim is to extract the user goal.

Your response must be a JSON object with the following fields (both are optional):
- verbal_ack: a string acknowledging the user request (like 'Sure', 'I'm on it'...)
- user_intent: the user overall goal (intent), with the following fields:
  - type: the type of intent to perform (e.g. "__intent_say__", "__intent_greet__", "__intent_start_activity__", etc.)
  - any thematic role required by the intent. For instance: `object` to
    relate the intent to the object to interact with (e.g. "lamp",
    "door", etc.)

Importantly, `verbal_ack` is meant to be a *short* acknowledgement sentence,
unconditionally uttered by the robot, indicating that you have understood the request -- or that we need more information.
For more complex verbal actions, return a `__intent_say__` instead.

However, for answers to general questions that do not require any action
(eg: 'what is your name?'), the 'user_intent' field can be omitted, and the
'verbal_ack' field should contain the answer.

The user_id of the person you are talking to is $user_id. Always use this ID when referring to the person in your responses.

Examples
- if the user says 'Hello robot', you could respond:
{
    "user_intent": {"type": "__intent_greet__", "recipient": "$user_id"}
}

- if the user says 'What is your name?', you could respond:
{
    "verbal_ack": "My name is $robot_name. What is your name?"
}

- if the user says 'take a fruit', you could respond (assuming an object 'apple1' of type 'Apple' is visible):
{
    "user_intent": {
        "type": "__intent_grab_object__",
        "object": "apple1"
    },
    "verbal_ack": "Sure"
}

- if the user says 'take a fruit', but you do not know about any fruit, you could respond:
{
    "verbal_ack": "I haven't seen any fruits around. Do you want me to check in the kitchen?"
}

- if the user says 'clean the table', you could respond:
{
    "user_intent": {
        "type": "__intent_start_activity__",
        "object": "cleaning_table"
    },
    "verbal_ack": "Sure, I'll get started"
}

If you are not sure about the intention of the user, return an empty user_intent and ask for confirmation with the verbal_ack field.
"""

This prompt uses Python’s string.Template placeholders ($robot_name, $user_id) to include the robot’s name and the user’s ID in the prompt.

You can use this prompt in your script by substituting the variables with the actual values:

from string import Template

actual_prompt = Template(PROMPT).safe_substitute(robot_name="Robbie", user_id="Alice")
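The choice of safe_substitute over substitute matters here. The short, self-contained example below (with a made-up one-line prompt and a deliberately unfilled `$unknown_field` placeholder) shows the difference between the two methods of `string.Template`:

```python
from string import Template

prompt = Template("You are $robot_name. The user is $user_id. Extra: $unknown_field.")

# safe_substitute leaves placeholders it has no value for untouched
filled = prompt.safe_substitute(robot_name="Robbie", user_id="Alice")
print(filled)

# substitute is stricter: a missing value raises KeyError
try:
    prompt.substitute(robot_name="Robbie", user_id="Alice")
    strict_error = None
except KeyError as err:
    strict_error = err
print(f"substitute() failed on: {strict_error}")
```

With safe_substitute, a stray `$` followed by a word in a long prompt will not crash the node at startup; the downside is that a misspelled placeholder silently ends up in the text sent to the LLM.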

Then, you can use this prompt in the ollama call:

# ...

def __init__(self) -> None:

    # ...

    self.messages = [
        {"role": "system",
         "content": Template(PROMPT).safe_substitute(robot_name="Robbie", user_id="user1")
         }]

    # ...

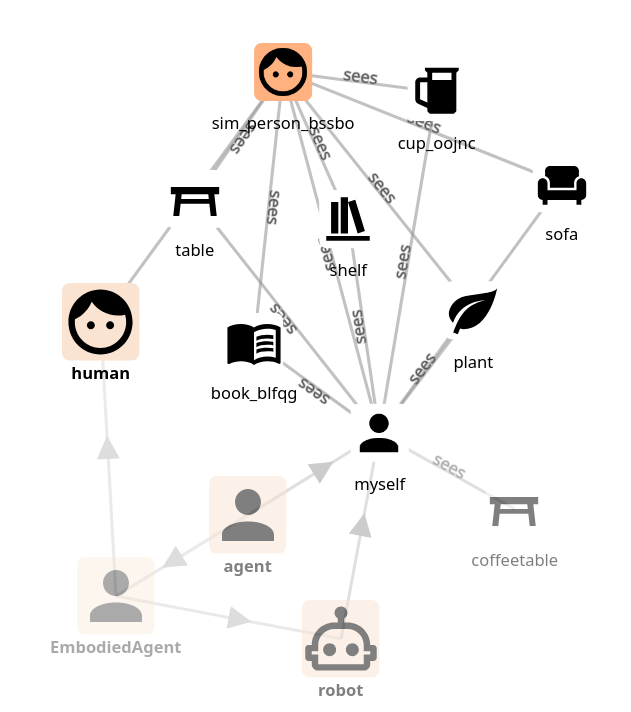

Closing the loop: integrating LLM and symbolic knowledge representation

Finally, we can use the knowledge base to improve the intent recognition.

For instance, if the user asks the robot to bring the apple, we can use the knowledge base to check whether an apple is in the field of view of the robot.

Note

It is often convenient to have a Python interpreter open to quickly test knowledge base queries.

Open ipython3 in a terminal from within your Docker image, and then:

from knowledge_core.api import KB; kb = KB()
kb["* sees *"] # etc.

First, let’s query the knowledge base for all the objects that are visible to the robot:

from knowledge_core.api import KB

# ...

def __init__(self) -> None:

    # ...

    self.kb = KB()


def environment(self) -> str:
    """ fetch all the objects and humans visible to the robot,
    get for each of them their class and label, and return a string
    that lists them all.

    A more advanced version could also include the position of the objects
    and spatial relations between them.
    """

    environment_description = ""

    seen_objects = self.kb["myself sees ?obj"]
    for obj in [item["obj"] for item in seen_objects]:
        details = self.kb.details(obj)
        label = details["label"]["default"]
        classes = details["attributes"][0]["values"]
        class_name = None
        if classes:
            class_name = classes[0]["label"]["default"]
            environment_description += f"- I see a {class_name} labeled {label}.\n"
        else:
            environment_description += f"- I see {label}.\n"

    self.get_logger().info(
        f"Environment description:\n{environment_description}")
    return environment_description

Note

The kb.details method returns a dictionary with details about a given knowledge concept. The attributes field contains e.g. the class of the object (if known or inferred by the knowledge base).
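To experiment with this logic outside the robot, you can feed it hand-written dictionaries. The data below is hypothetical: it only reproduces the fields that environment() accesses (`label`, `attributes`, `values`), and the actual dictionaries returned by kb.details may contain more. The function `describe` is likewise an illustration, with a small guard for concepts that have no attributes at all.

```python
def describe(details: dict) -> str:
    """Turn one kb.details()-like dictionary into a line of text."""
    label = details["label"]["default"]
    # guard against a missing or empty 'attributes' list
    attributes = details.get("attributes") or [{}]
    classes = attributes[0].get("values", [])
    if classes:
        class_name = classes[0]["label"]["default"]
        return f"- I see a {class_name} labeled {label}."
    return f"- I see {label}."


# hypothetical data, shaped like the fields accessed in environment() above
apple = {
    "label": {"default": "apple1"},
    "attributes": [{"values": [{"label": {"default": "Apple"}}]}],
}
unknown = {"label": {"default": "thing42"}, "attributes": []}

print(describe(apple))
print(describe(unknown))
```

Keeping the formatting in a pure function like this makes it easy to unit-test the text that will be sent to the LLM.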

📚 Learn more

To inspect the knowledge base in detail, we recommend using Protégé, an open-source tool to explore and modify ontologies.

The ontology used by the robot (and the interaction simulator) is stored in /opt/pal/alum/share/oro/ontologies/oro.owl. Copy this file to your ~/exchange folder to access it from your host and inspect it with Protégé.

We can then use this information to ground the user intents in the physical world of the robot.

Add an environment update before each call to the LLM:

def on_dialogue_interaction(self,
                            request: DialogueInteraction.Request,
                            response: DialogueInteraction.Response):

    # ...

    self.messages.append({"role": "system", "content": self.environment()})
    self.messages.append({"role": "user", "content": input})

    # ...

Re-compile and restart your chatbot. You can now ask the robot e.g. what it sees.
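With the approach above, one environment message is added to the history at every turn, so older (possibly outdated) descriptions accumulate. One possible refinement, shown here only as a sketch, is to keep just the latest description. The `ENV_TAG` marker and the `refresh_environment` helper are hypothetical and not part of the tutorial's code.

```python
ENV_TAG = "[environment]"  # hypothetical marker used to recognise these messages


def refresh_environment(messages: list, description: str) -> list:
    """Return a copy of messages holding only the latest environment message."""
    kept = [
        m for m in messages
        if not (m["role"] == "system" and m["content"].startswith(ENV_TAG))
    ]
    kept.append({"role": "system", "content": f"{ENV_TAG}\n{description}"})
    return kept


messages = [{"role": "system", "content": "You are a friendly robot."}]
messages = refresh_environment(messages, "- I see a Apple labeled apple1.")
messages.append({"role": "user", "content": "What do you see?"})
messages = refresh_environment(messages, "- I see a Cup labeled cup3.")
print(len(messages))
```

In the chatbot, this would replace the first `self.messages.append(...)` line with `self.messages = refresh_environment(self.messages, self.environment())`. Whether a model copes better with one fresh description or with the full trail of past ones is something to check experimentally with the model you use.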

The final chatbot code should look like:

1import json

2import time

3from ollama import Client

4

5from knowledge_core.api import KB

6

7from rclpy.lifecycle import Node

8from rclpy.lifecycle import State

9from rclpy.lifecycle import TransitionCallbackReturn

10from rcl_interfaces.msg import ParameterDescriptor

11from rclpy.action import ActionServer, GoalResponse

12from hri_actions_msgs.msg import Intent

13from i18n_msgs.action import SetLocale

14from i18n_msgs.srv import GetLocales

15from diagnostic_msgs.msg import DiagnosticArray, DiagnosticStatus, KeyValue

16from chatbot_msgs.action import Dialogue

17from chatbot_msgs.srv import DialogueInteraction

18from rclpy.action import CancelResponse

19from rclpy.action.server import ServerGoalHandle

20from rclpy.callback_groups import ReentrantCallbackGroup

21

22from pydantic import BaseModel

23from typing import Literal

24from hri_actions_msgs.msg import Intent

25from string import Template

26

27PROMPT = """

28You are a friendly robot called $robot_name. You try to help the user to the best of your abilities.

29You are always helpful, and ask further questions if the desires of the user are unclear.

30Your answers are always polite yet concise and to-the-point.

31

32Your aim is to extract the user goal.

33

34Your response must be a JSON object with the following fields (both are optional):

35- verbal_ack: a string acknowledging the user request (like 'Sure', 'I'm on it'...)

36- user_intent: the user overall goal (intent), with the following fields:

37 - type: the type of intent to perform (e.g. "__intent_say__", "__intent_greet__", "__intent_start_activity__", etc.)

38 - any thematic role required by the intent. For instance: `object` to

39 relate the intent to the object to interact with (e.g. "lamp",

40 "door", etc.)

41

42Importantly, `verbal_ack` is meant to be a *short* acknowledgement sentence,

43unconditionally uttered by the robot, indicating that you have understood the request -- or that we need more information.

44For more complex verbal actions, return a `__intent_say__` instead.

45

46However, for answers to general questions that do not require any action

47(eg: 'what is your name?'), the 'user_intent' field can be omitted, and the

48'verbal_ack' field should contain the answer.

49

50The user_id of the person you are talking to is $user_id. Always use this ID when referring to the person in your responses.

51

52Examples

53- if the user says 'Hello robot', you could respond:

54{

55 "user_intent": {"type": "__intent_greet__", "recipient": "$user_id"}

56}

57

58- if the user says 'What is your name?', you could respond:

59{

60 "verbal_ack":"My name is $robot_name. What is your name?"

61}

62

63- if the user say 'take a fruit', you could respond (assuming a object 'apple1' of type 'Apple' is visible):

64{

65 "user_intent": {

66 "type":"__intent_grab_object__",

67 "object":"apple1",

68 },

69 "verbal_ack": "Sure"

70}

71

72- if the user say 'take a fruit', but you do not know about any fruit. You could respond:

73{

74 "verbal_ack": "I haven't seen any fruits around. Do you want me to check in the kitchen?"

75}

76

77- the user says: 'clean the table'. You could return:

78{

79 "user_intent": {

80 "type":"__intent_start_activity__",

81 "object": "cleaning_table"

82 },

83 "verbal_ack": "Sure, I'll get started"

84}

85

86If you are not sure about the intention of the user, return an empty user_intent and ask for confirmation with the verbal_ack field.

87"""

88

89

90# Define the data models for the chatbot response and the user intent

91class IntentModel(BaseModel):

92 type: Literal[Intent.BRING_OBJECT,

93 Intent.GRAB_OBJECT,

94 Intent.PLACE_OBJECT,

95 Intent.GUIDE,

96 Intent.MOVE_TO,

97 Intent.SAY,

98 Intent.GREET,

99 Intent.START_ACTIVITY,

100 ]

101 object: str | None

102 recipient: str | None

103 input: str | None

104 goal: str | None

105

106

107class ChatbotResponse(BaseModel):

108 verbal_ack: str | None

109 user_intent: IntentModel | None

110##################################################

111

112

113class BasicChatbot(Node):

114

115 def __init__(self) -> None:

116 super().__init__('intent_extractor_chatbot')

117

118 # Declare ROS parameters. Should mimick the one listed in config/00-defaults.yaml

119 self.declare_parameter(

120 'my_parameter', "my_default_value.",

121 ParameterDescriptor(

122 description='Important parameter for my chatbot')

123 )

124

125 self.get_logger().info("Initialising...")

126

127 self._dialogue_start_action = None

128 self._dialogue_interaction_srv = None

129 self._get_supported_locales_server = None

130 self._set_default_locale_server = None

131

132 self._timer = None

133 self._diag_pub = None

134 self._diag_timer = None

135

136 self.kb = KB()

137

138 self._nb_requests = 0

139 self._dialogue_id = None

140 self._dialogue_result = None

141

142 self._ollama_client = Client()

143 # if ollama does not run on the local host, you can specify the host and

144 # port. For instance:

145 # self._ollama_client = Client("x.x.x.x:11434")

146

147 self.messages = [

148 {"role": "system",

149 "content": Template(PROMPT).safe_substitute(robot_name="Robbie", user_id="user1")

150 }]

151

152 self.get_logger().info('Chatbot chatbot started, but not yet configured.')

153

154 def environment(self) -> str:

155 environment_description = ""

156

157 seen_objects = self.kb["myself sees ?obj"]

158 for obj in [item["obj"] for item in seen_objects]:

159 details = self.kb.details(obj)

160 label = details["label"]["default"]

161 classes = details["attributes"][0]["values"]

162 class_name = None

163 if classes:

164 class_name = classes[0]["label"]["default"]

165 environment_description += f"- I see a {class_name} labeled {label}.\n"

166 else:

167 environment_description += f"- I see {label}.\n"

168

169 self.get_logger().info(

170 f"Environment description:\n{environment_description}")

171 return environment_description

172

173 def on_dialog_goal(self, goal: Dialogue.Goal):

174 # Here we check if the goal is valid and the node is able to accept it

175 #

176 # For simplicity, we allow only one dialogue at a time.

177 # You might want to change this to allow multiple dialogues at the same time.

178 #

179 # We also check if the dialogue role is supported by the chatbot.

180 # In this example, we only support only the "__default__" role.

181

182 if self._dialogue_id or goal.role.name != '__default__':

183 return GoalResponse.REJECT

184 return GoalResponse.ACCEPT

185

186 def on_dialog_accept(self, handle: ServerGoalHandle):

187 self._dialogue_id = tuple(handle.goal_id.uuid)

188 self._dialogue_result = None

189 handle.execute()

190

191 def on_dialog_cancel(self, handle: ServerGoalHandle):

192 if self._dialogue_id:

193 return CancelResponse.ACCEPT

194 else:

195 return CancelResponse.REJECT

196

197 def on_dialog_execute(self, handle: ServerGoalHandle):

198 id = tuple(handle.goal_id.uuid)

199 self.get_logger().warn(

200 f"Starting '{handle.request.role.name}' dialogue with id {id}")

201

202 try:

203 while handle.is_active:

204 if handle.is_cancel_requested:

205 handle.canceled()

206 return Dialogue.Result(error_msg='Dialogue cancelled')

207 elif self._dialogue_result:

208 if self._dialogue_result.error_msg:

209 handle.abort()

210 else:

211 handle.succeed()

212 return self._dialogue_result

213 time.sleep(1e-2)

214 return Dialogue.Result(error_msg='Dialogue execution interrupted')

215 finally:

216 self.get_logger().warn(f"Dialogue with id {id} finished")

217 self._dialogue_id = None

218 self._dialogue_result = None

219

    def on_dialogue_interaction(self,
                                request: DialogueInteraction.Request,
                                response: DialogueInteraction.Response):

        target_dialogue_id = request.dialogue_id
        user_id = request.user_id
        input = request.input
        response_expected = request.response_expected
        suggested_response = ""
        intents = []

        self.get_logger().info(
            f"input from {user_id} to dialogue {target_dialogue_id}: {input}")
        self._nb_requests += 1

        self.messages.append({"role": "system", "content": self.environment()})
        self.messages.append({"role": "user", "content": input})

        llm_res = self._ollama_client.chat(
            messages=self.messages,
            # you can also try with more powerful models, see https://ollama.com/models
            model="gemma3:1b",
            format=ChatbotResponse.model_json_schema()
        )

        json_res = ChatbotResponse.model_validate_json(llm_res.message.content)

        self.get_logger().info(f"The LLM answered: {json_res}")

        verbal_ack = json_res.verbal_ack
        if verbal_ack:
            # if we have a verbal acknowledgement, add it to the dialogue history,
            # and send it to the user
            self.messages.append({"role": "assistant", "content": verbal_ack})
            response.response = verbal_ack

        user_intent = json_res.user_intent
        if user_intent:
            response.intents = [Intent(
                intent=user_intent.type,
                data=json.dumps(user_intent.model_dump())
            )]

        return response

    def on_get_supported_locales(self, request, response):
        response.locales = []  # list of supported locales; empty means any
        return response

    def on_set_default_locale_goal(self, goal_request):
        return GoalResponse.ACCEPT

    def on_set_default_locale_exec(self, goal_handle):
        """Change here the default locale of the chatbot."""
        result = SetLocale.Result()
        goal_handle.succeed()
        return result

    #################################
    #
    # Lifecycle transitions callbacks
    #
    def on_configure(self, state: State) -> TransitionCallbackReturn:

        # configure and start diagnostics publishing
        self._nb_requests = 0
        self._diag_pub = self.create_publisher(
            DiagnosticArray, '/diagnostics', 1)
        self._diag_timer = self.create_timer(1., self.publish_diagnostics)

        # start advertising supported locales
        self._get_supported_locales_server = self.create_service(
            GetLocales, "~/get_supported_locales", self.on_get_supported_locales)

        self._set_default_locale_server = ActionServer(
            self, SetLocale, "~/set_default_locale",
            goal_callback=self.on_set_default_locale_goal,
            execute_callback=self.on_set_default_locale_exec)

        self.get_logger().info("Chatbot chatbot is configured, but not yet active")
        return TransitionCallbackReturn.SUCCESS

    def on_activate(self, state: State) -> TransitionCallbackReturn:
        """
        Activate the node.

        You usually want to do the following in this state:
        - Create and start any timers performing periodic tasks
        - Start processing data, and accepting action goals, if any

        """
        self._dialogue_start_action = ActionServer(
            self, Dialogue, '/chatbot/start_dialogue',
            execute_callback=self.on_dialog_execute,
            goal_callback=self.on_dialog_goal,
            handle_accepted_callback=self.on_dialog_accept,
            cancel_callback=self.on_dialog_cancel,
            callback_group=ReentrantCallbackGroup())

        self._dialogue_interaction_srv = self.create_service(
            DialogueInteraction, '/chatbot/dialogue_interaction', self.on_dialogue_interaction)

        # Define a timer that fires every second to call the run function
        timer_period = 1  # in sec
        self._timer = self.create_timer(timer_period, self.run)

        self.get_logger().info("Chatbot chatbot is active and running")
        return super().on_activate(state)

    def on_deactivate(self, state: State) -> TransitionCallbackReturn:
        """Stop the timer to stop calling the `run` function (main task of your application)."""
        self.get_logger().info("Stopping chatbot...")

        self.destroy_timer(self._timer)
        self._dialogue_start_action.destroy()
        self.destroy_service(self._dialogue_interaction_srv)

        self.get_logger().info("Chatbot chatbot is stopped (inactive)")
        return super().on_deactivate(state)

    def on_shutdown(self, state: State) -> TransitionCallbackReturn:
        """
        Shutdown the node, after a shutting-down transition is requested.

        :return: The state machine either invokes a transition to the
            "finalized" state or stays in the current state depending on the
            return value.
            TransitionCallbackReturn.SUCCESS transitions to "finalized".
            TransitionCallbackReturn.FAILURE remains in current state.
            TransitionCallbackReturn.ERROR or any uncaught exceptions to
            "errorprocessing"
        """
        self.get_logger().info('Shutting down chatbot node.')
        self.destroy_timer(self._diag_timer)
        self.destroy_publisher(self._diag_pub)

        self.destroy_service(self._get_supported_locales_server)
        self._set_default_locale_server.destroy()

        self.get_logger().info("Chatbot chatbot finalized.")
        return TransitionCallbackReturn.SUCCESS

    #################################

    def publish_diagnostics(self):

        arr = DiagnosticArray()
        msg = DiagnosticStatus(
            level=DiagnosticStatus.OK,
            name="/intent_extractor_chatbot",
            message="chatbot chatbot is running",
            values=[
                KeyValue(key="Module name", value="chatbot"),
                KeyValue(key="Current lifecycle state",
                         value=self._state_machine.current_state[1]),
                KeyValue(key="# requests since start",
                         value=str(self._nb_requests)),
            ],
        )

        arr.header.stamp = self.get_clock().now().to_msg()
        arr.status = [msg]
        self._diag_pub.publish(arr)

    def run(self) -> None:
        """
        Background task of the chatbot.

        For now, we do not need to do anything here, as the chatbot is
        event-driven, and the `on_dialogue_interaction` callback is called
        when a new user input is received.
        """
        pass
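
In the listing above, `ChatbotResponse.model_validate_json` raises an exception if the LLM returns text that does not match the expected schema. The sketch below illustrates one possible way to degrade gracefully instead. It is not part of the tutorial code: it uses only the standard `json` module in place of the pydantic model, and the helper `parse_llm_reply`, the constant `FALLBACK_ACK` and the `greet` intent type are hypothetical names chosen for illustration. Only the field names `verbal_ack`, `user_intent` and `type` are taken from the listing.

```python
import json
from typing import Optional, Tuple

# Sentence returned when the reply cannot be parsed (hypothetical wording).
FALLBACK_ACK = "Sorry, I did not understand."


def parse_llm_reply(raw: str) -> Tuple[str, Optional[dict]]:
    """Return (verbal_ack, user_intent) from the raw JSON text of an LLM reply.

    Falls back to a fixed sentence and no intent if the text is not a JSON object.
    """
    try:
        reply = json.loads(raw)
    except json.JSONDecodeError:
        return FALLBACK_ACK, None
    if not isinstance(reply, dict):
        return FALLBACK_ACK, None

    verbal_ack = reply.get("verbal_ack") or ""
    user_intent = reply.get("user_intent")
    # Keep only intents that carry a 'type', since the listing reads user_intent.type
    if not (isinstance(user_intent, dict) and user_intent.get("type")):
        user_intent = None
    return verbal_ack, user_intent


if __name__ == "__main__":
    # 'greet' is a hypothetical intent type used only for this demonstration
    print(parse_llm_reply('{"verbal_ack": "Sure!", "user_intent": {"type": "greet"}}'))
    print(parse_llm_reply("not json"))
```

In the chatbot node, a similar effect could be obtained by wrapping the `model_validate_json` call in a `try`/`except` block and returning a fallback response when validation fails.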

Next steps¶

Interaction simulator architecture¶

We have completed a simple social robot architecture, with a mission controller that can react to user intents, and a chatbot that can extract intents from user input.

You can now:

Extend the mission controller: add more intents, more complex behaviours, etc;

Structure your application: split your mission controller into tasks and skills, and orchestrate them: 📝 Developing robot apps;

Integrate navigation and manipulation, using the corresponding navigation skills and manipulation skills;

Finally, deploy your application on your robot: Deploying ROS 2 packages on your robot.
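
As a starting point for extending the mission controller with more intents, the following minimal sketch shows one way to dispatch on the intent type. It is plain Python with no ROS dependency, and it is only an illustration: the `HANDLERS` registry, the `handles` decorator, the `on_intent` function and the `greet` and `bring_object` intent types are all hypothetical. It assumes that each intent arrives as a type string plus a JSON-encoded data payload, mirroring the `Intent(intent=..., data=json.dumps(...))` message built by the chatbot.

```python
import json
from typing import Callable, Dict

# Registry mapping an intent type to the function that handles it.
HANDLERS: Dict[str, Callable[[dict], str]] = {}


def handles(intent_type: str):
    """Decorator registering a handler for one intent type."""
    def register(func):
        HANDLERS[intent_type] = func
        return func
    return register


@handles("greet")  # hypothetical intent type
def greet(data: dict) -> str:
    return "Hello!"


@handles("bring_object")  # hypothetical intent type
def bring_object(data: dict) -> str:
    return f"Fetching the {data.get('object', 'object')}."


def on_intent(intent_type: str, data_json: str) -> str:
    """Decode the JSON payload and dispatch to the matching handler."""
    handler = HANDLERS.get(intent_type)
    if handler is None:
        return f"Unsupported intent: {intent_type}"
    return handler(json.loads(data_json) if data_json else {})
```

With this layout, supporting a new intent only requires adding one decorated function; in a real mission controller, each handler would trigger a task or skill rather than return a string.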

See also¶

📝 Developing robot apps: learn more about the PAL application model

Intents: learn more about the intent messages

PAL Interaction simulator: learn more about the interaction simulator

💡 Knowledge and reasoning: learn more about the knowledge base and reasoning

Return to the Tutorials main page