1 TIAGo++ handbook¶

2 Package contents¶

2.1 Overview¶

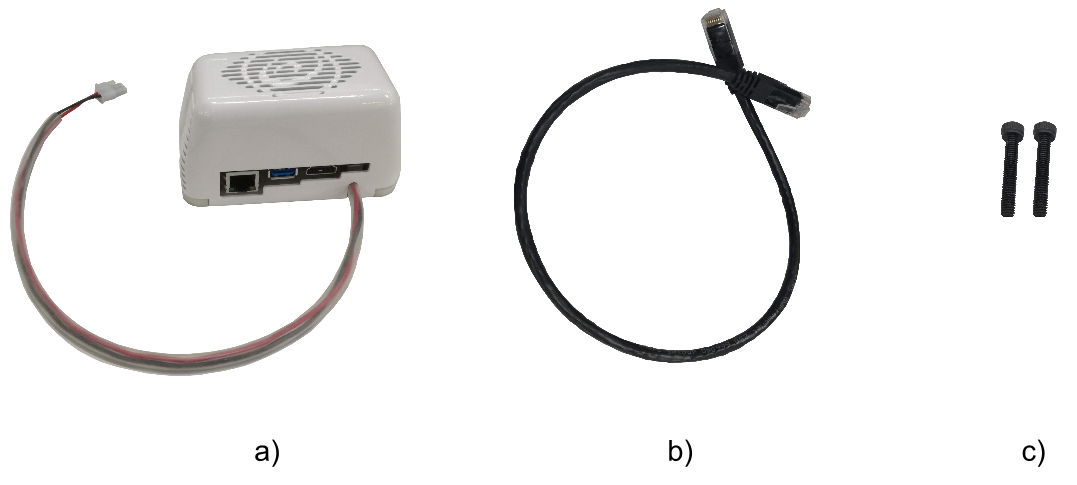

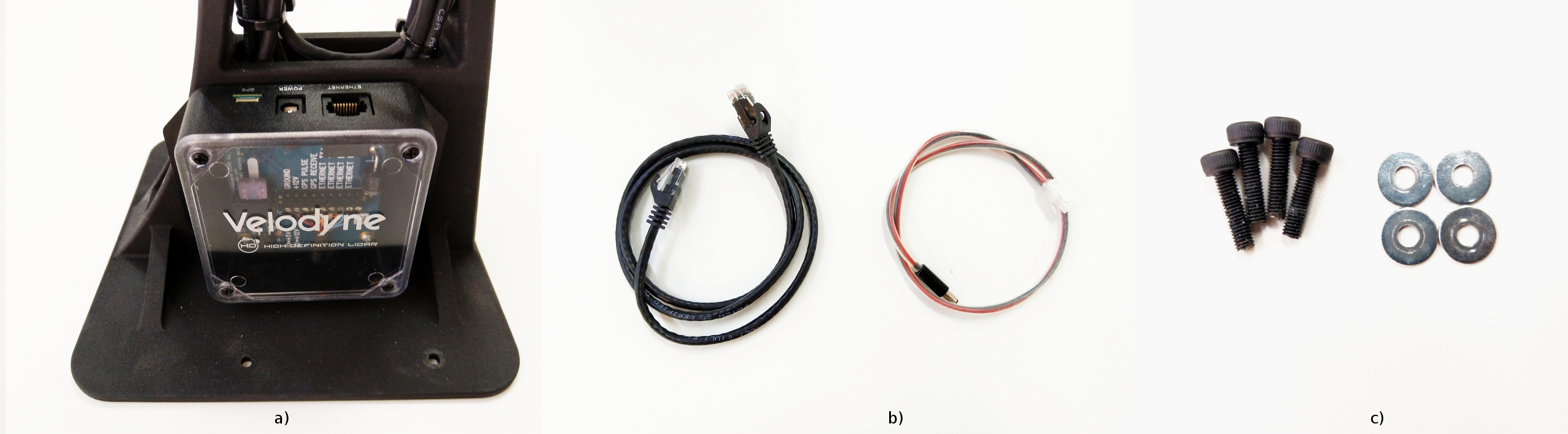

This section includes a list of items and accessories that come with TIAGo++. Make sure they’re all present:

Figure: Components inside transportation box¶

3 Specifications¶

3.1 Robot overview¶

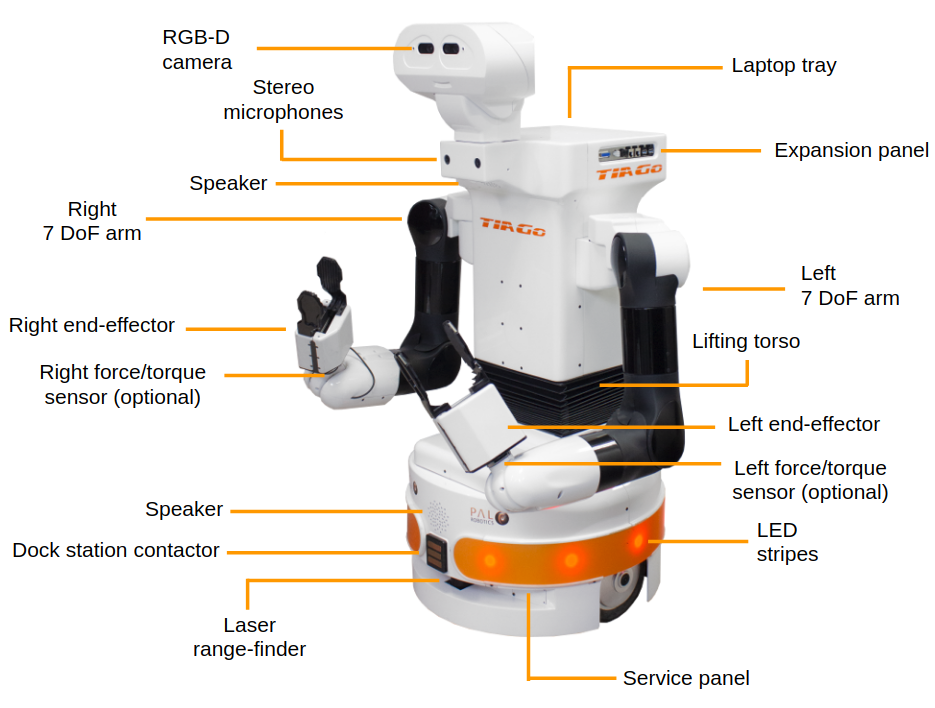

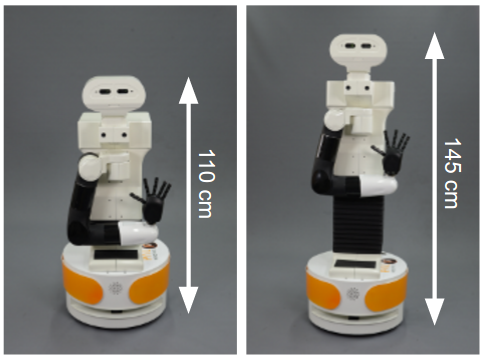

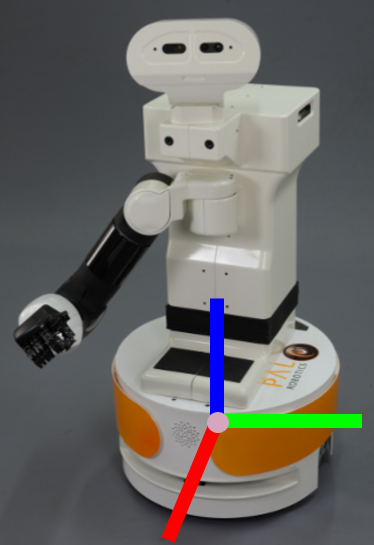

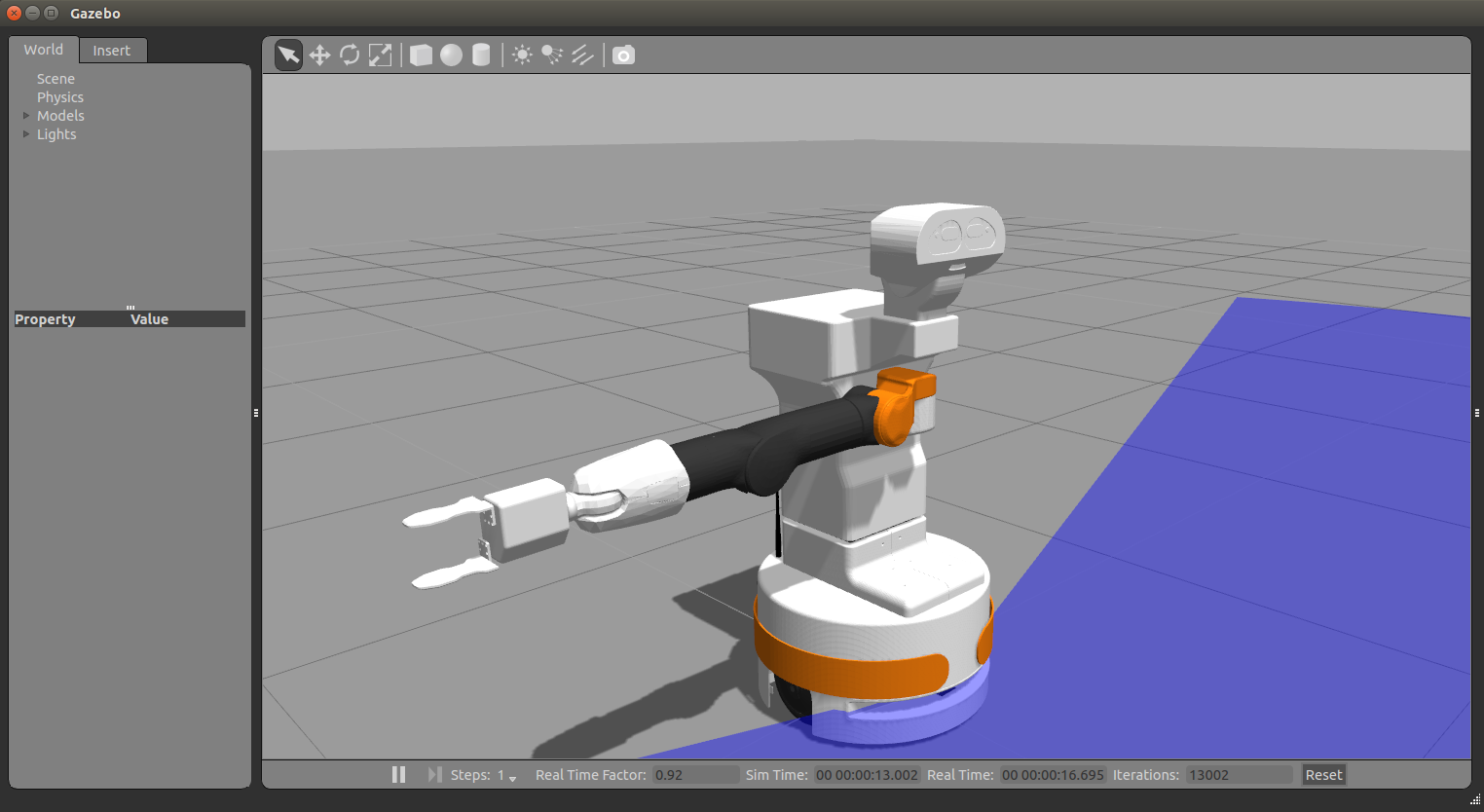

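TIAGo++’s main parts are depicted in the figure below, and its main specifications are summarized in the table below:

Figure: TIAGo++’s main components

Robot’s main specifications:

| Group | Item | Value |
|---|---|---|
| Physical | Height | 110 – 145 cm |
| Physical | Weight | 72 kg |
| Physical | Base footprint | Ø 54 cm |
| Degrees of freedom | Mobile base | 2 |
| Degrees of freedom | Torso lift | 1 |
| Degrees of freedom | Arm | 4 |
| Degrees of freedom | Wrist | 3 |
| Degrees of freedom | Head | 2 |
| Degrees of freedom | Hey5 hand | 19 (3 actuated) |
| Degrees of freedom | PAL gripper | 2 |
| Mobile base | Drive system | Differential |
| Mobile base | Max speed | 1 m/s |
| Torso | Lift stroke | 35 cm |
| Arm | Payload | 2 kg |
| Arm | Reach | 87 cm |
| Electrical | Battery | 36 V, 20 Ah |
| Sensors | Base | Laser range-finder, sonars, IMU |
| Sensors | Torso | Stereo microphones |
| Sensors | Arm | Motors current feedback |
| Sensors | Wrist | Force/Torque |
| Sensors | Head | RGB-D camera |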

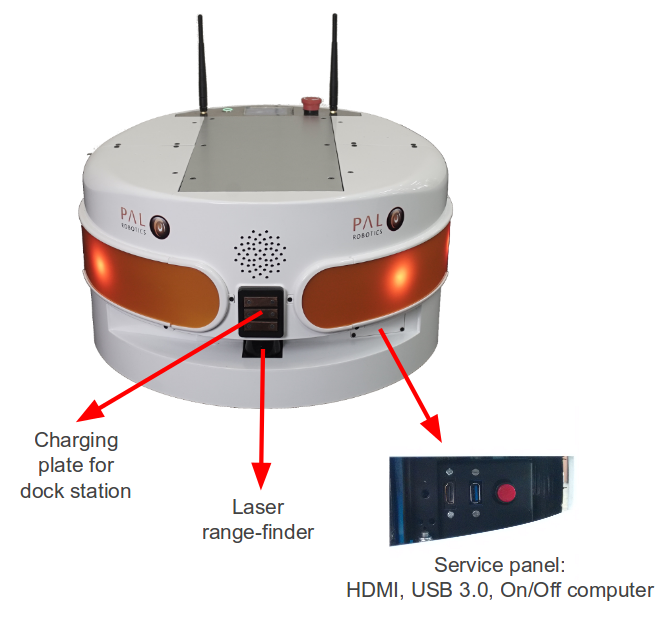

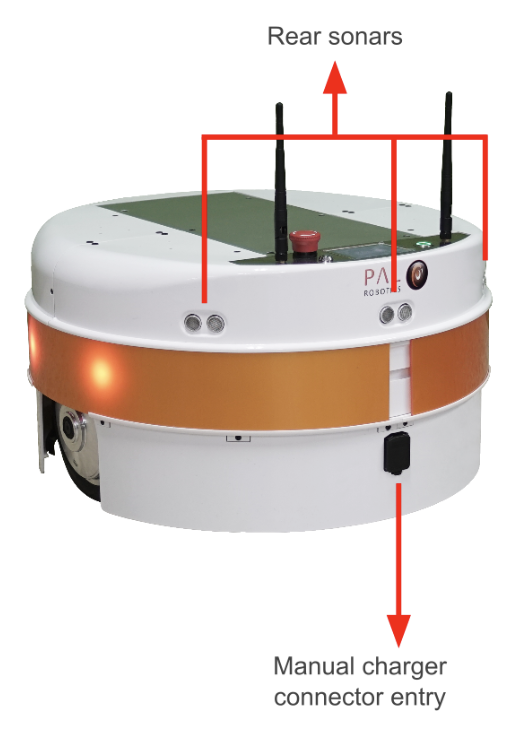

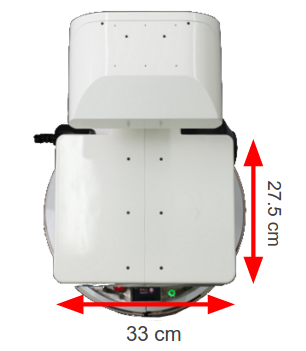

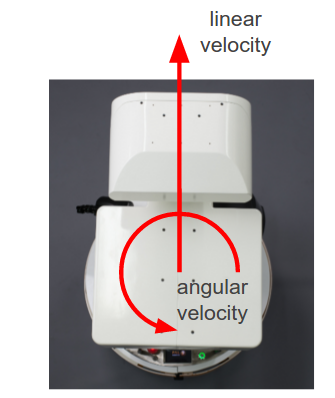

3.2 Mobile base¶

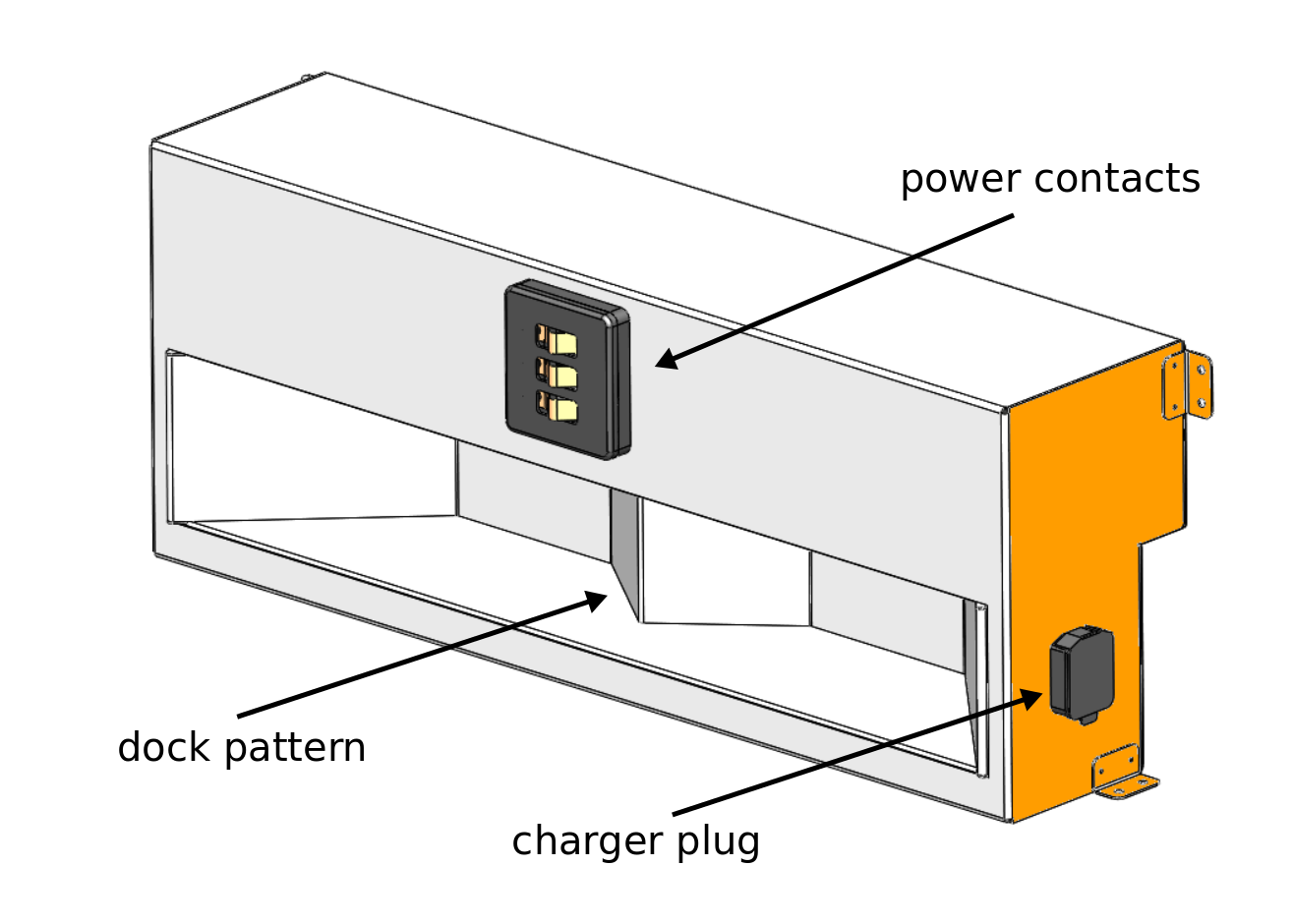

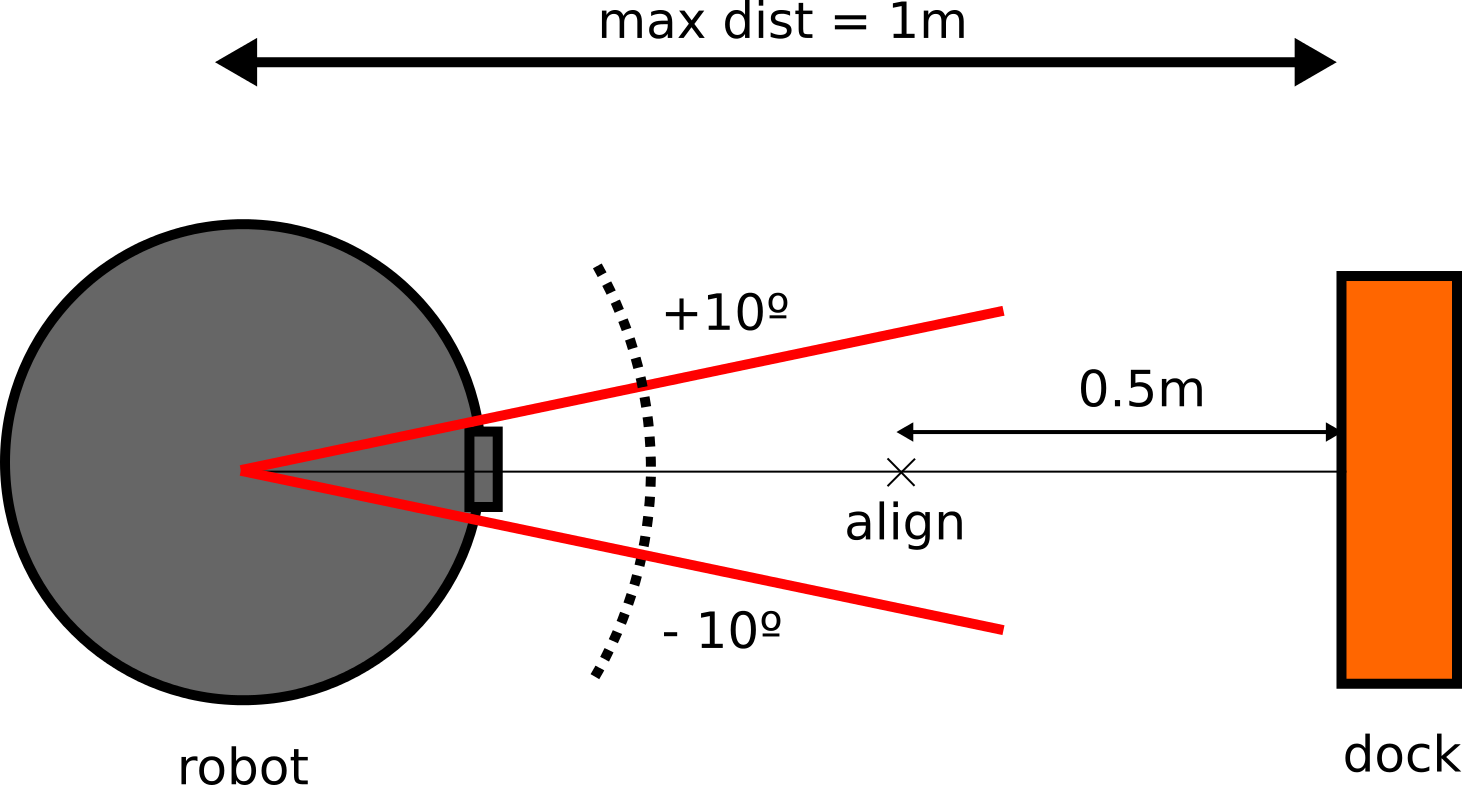

TIAGo++’s mobile base is provided with a differential drive mechanism and contains an onboard computer, batteries, power connector, laser-range finder, three rear sonars, a user panel, a service panel and two WiFi networks to ensure wireless connectivity. Furthermore, the version of TIAGo with a docking station has a charging plate on the front

Figure: Mobile base front view¶

Figure: Mobile base rear view¶

3.2.1 Onboard computer¶

The specifications of TIAGo ’s onboard computer depends on the configuration options you have ordered. The different possibilities are shown in table below:

Component |

Description |

|---|---|

CPU |

Intel i5 / i7 |

RAM |

8 / 16 GB |

Hard disk |

250 / 500 GB SSD |

Wi-Fi |

802.11 a/b/g/n/ac |

Bluetooth |

Smart 4.0 Smart Ready |

3.2.2 Battery

The specifications of the battery supplied with TIAGo++ are shown in the table below:

| Parameter | Value |
|---|---|
| Type | Li-Ion |
| V_nominal | 36.0 V |
| V_max | 42.0 V |
| V_cutoff | 30.0 V |
| Nominal capacity | 20 Ah |
| Nominal energy | 720 Wh |
| Max. continuous discharge current | 20 A |
| Pulse discharge current | 60 A |
| Max. charging current | 15 A |
| Charging method | CC/CV |
| Weight | 7.5 kg |

TIAGo++ can be equipped with two batteries. In this case, the total nominal energy is 1440 Wh.
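The nominal energy in the table is the product of nominal voltage and nominal capacity. With two batteries installed, the energy doubles. The worked arithmetic below only re-derives the tabulated figures:

```latex
\begin{aligned}
E_{\text{nominal}} &= V_{\text{nominal}} \times Q_{\text{nominal}} = 36.0\,\mathrm{V} \times 20\,\mathrm{Ah} = 720\,\mathrm{Wh} \\
E_{\text{total}} &= 2 \times E_{\text{nominal}} = 2 \times 720\,\mathrm{Wh} = 1440\,\mathrm{Wh}
\end{aligned}
```

These are nominal values. The energy actually usable depends on load, temperature and the 30.0 V cutoff.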

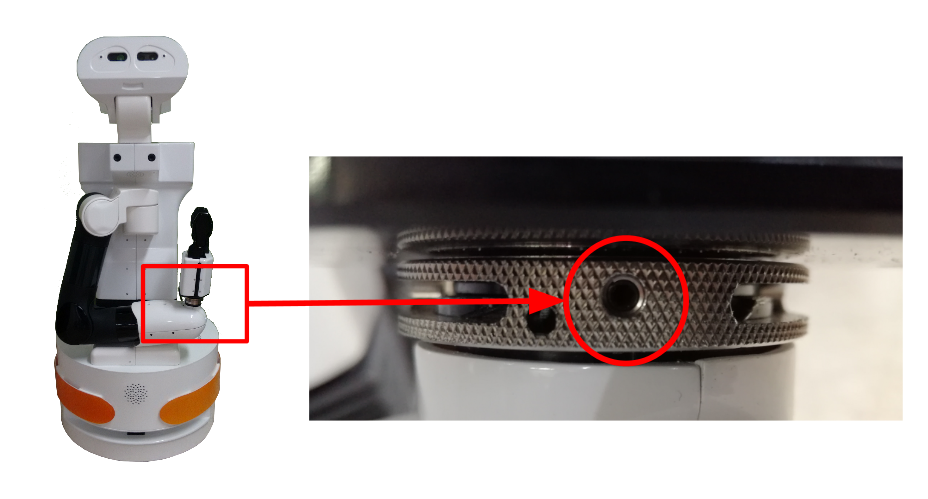

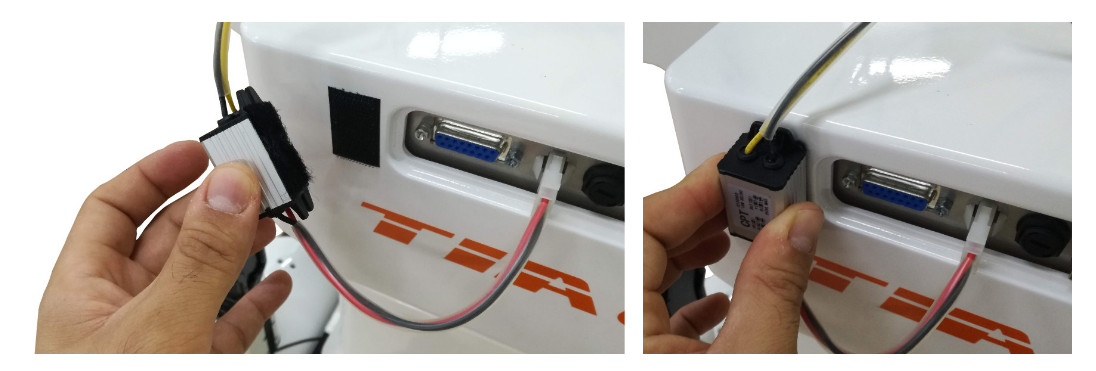

3.2.3 Power connector¶

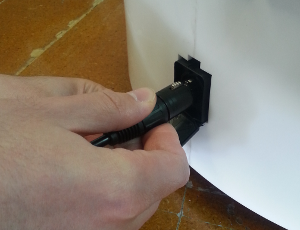

TIAGo++ must be charged only with suplied charger. To insert the charger connector, open the lid located on the rear part.

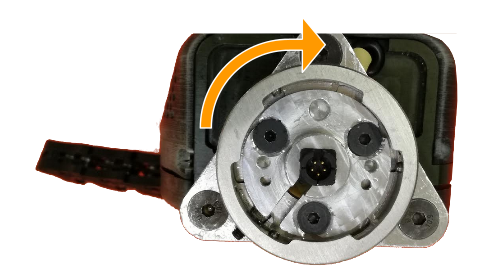

Figure: Charging connector entry¶

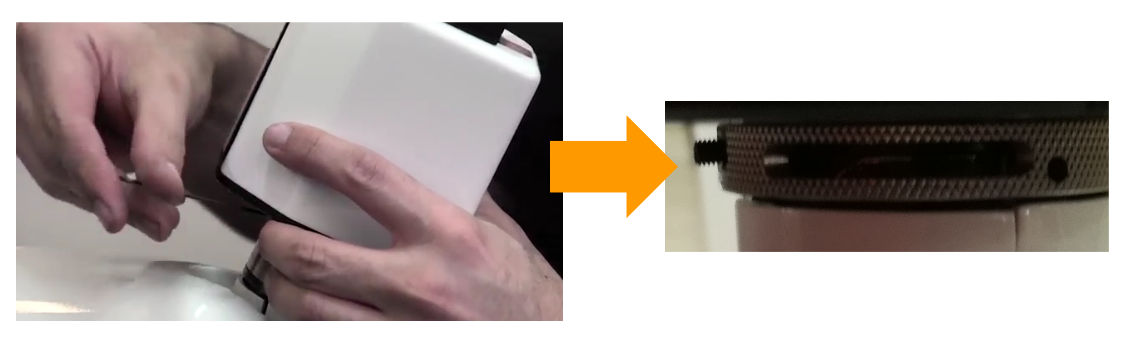

Connection Insert charging connector with metal lock facing up, push it until you hear a ’click’.

Figure: Charger connector insertion procedure¶

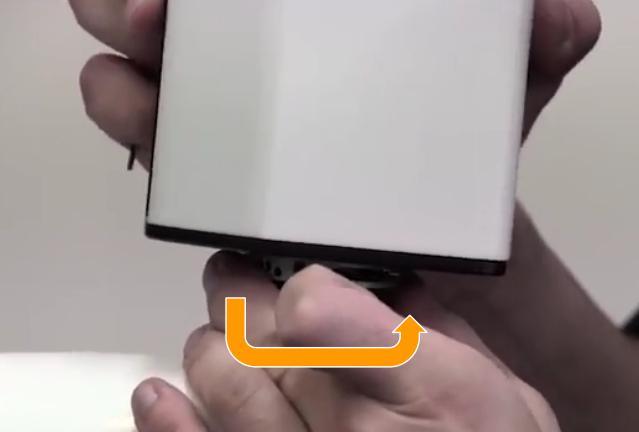

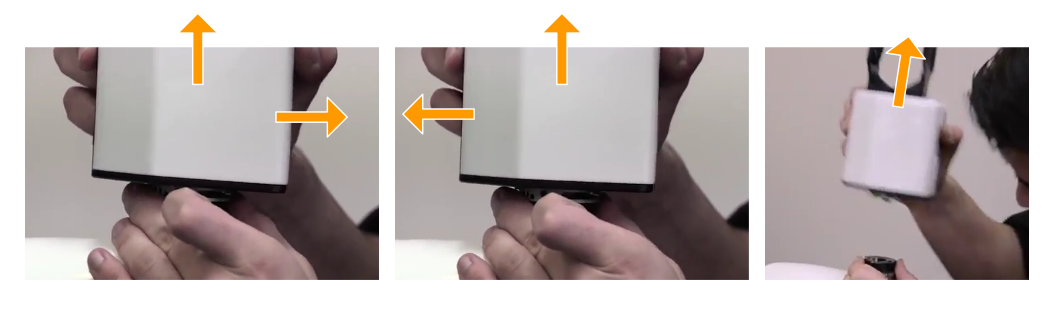

Disconnection Once charge is completed, connector can be removed. In order to do so, press metal lock and pull firmly the connector (see the figure below).

Figure: Charger connector removal procedure¶

3.2.4 Laser range-finder

The specifications of the laser on the front part of the mobile base depend on the configuration options you have ordered. The supported lasers are listed in the table below:

| Manufacturer | Hokuyo | SICK | SICK |
|---|---|---|---|
| Model | URG-04LX-UG01 | TIM561-2050101 | TIM571-2050101 |
| Range | 0.02 - 5.6 m | 0.05 - 10 m | 0.05 - 25 m |
| Frequency | 10 Hz | 15 Hz | 15 Hz |
| Field of view | 180 degrees | 180 degrees | 180 degrees |
| Step angle | 0.36 degrees | 0.33 degrees | 0.33 degrees |

3.2.5 Sonars

The rear part of the mobile base has three ultrasound sensors, here referred to as sonars. One is centered and the other two are placed at 30º to the left and right. See the table below for the sonars’ specifications:

| Parameter | Value |
|---|---|
| Manufacturer | Devantech |
| Model | SRF05 |
| Frequency | 40 kHz |
| Measurement distance | 0.03 - 1 m |

3.2.6 IMU

The Inertial Measurement Unit (IMU) is mounted at the center of the mobile base and may be used to monitor inertial forces and attitude. Its specifications are presented in the table below:

| Parameter | Value |
|---|---|
| Manufacturer | InvenSense |
| Model | MPU-6050 |
| Gyroscope | 3-axis |
| Accelerometer | 3-axis |

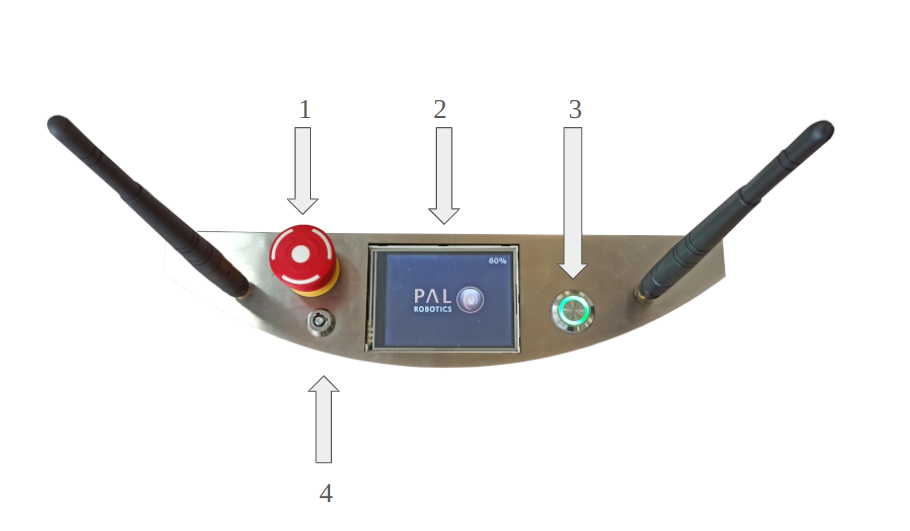

3.2.7 User panel

The user panel is on the top rear part of TIAGo’s mobile base. It provides the buttons to power up and shut down the robot, and a screen that gives visual feedback on the robot’s status. All the elements of the user panel are shown in the figure below, and each element is described in the table:

Figure: User Panel

| Number | Name / Short description |
|---|---|
| 1 | Emergency stop |
| 2 | Information display |
| 3 | On / Off button |
| 4 | Electric switch |

Electric switch: The electric switch is the main power control switch. Before turning TIAGo on, first make sure that this switch is ON, i.e. that its red light indicator is on. When TIAGo is not going to be used for a long period, press the switch so that its red light indicator turns off. Do not turn this switch OFF before using the On/Off button to shut down the robot’s onboard computer. Turning this switch OFF instantly cuts the power supply to all the robot’s components, including the onboard computer. Do not use this switch as an emergency stop. For the emergency stop, refer to the next paragraph.

Emergency stop: When pushed, the motors are stopped and disconnected. The green indicator blinks fast to signal the emergency state.

To resume normal operation, a two-step validation must be performed. First, release the emergency button by rotating it clockwise. Then press the On/Off button for 1 second. The green light then changes to a fixed state.

Information display: 320x240 color TFT display that shows the battery level in the top-right corner.

On / Off button: Standby control button. It is a push button with a green light that indicates the current system status.

| Light | State | Name / Short description |
|---|---|---|
| Off | Fixed | Standby |
| On | Fixed | Running |
| On | Slow-Blink | System in process of shutdown |
| On | Fast-Blink | Emergency state |

After main power is connected, i.e. the electric switch is ON (see Figure: User Panel), press this button for 1 second to start TIAGo.

To put the running system back into standby mode, press the button again. The green light blinks slowly during the shutdown procedure and turns off when standby mode is reached.

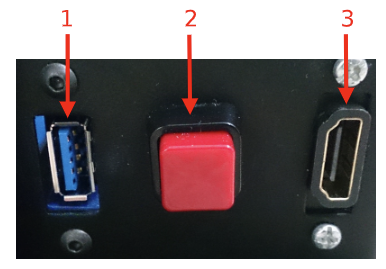

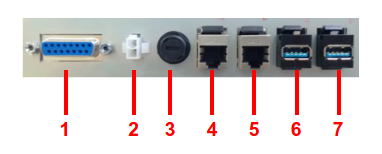

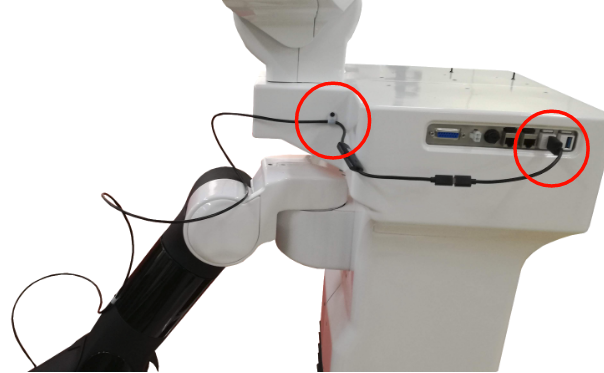

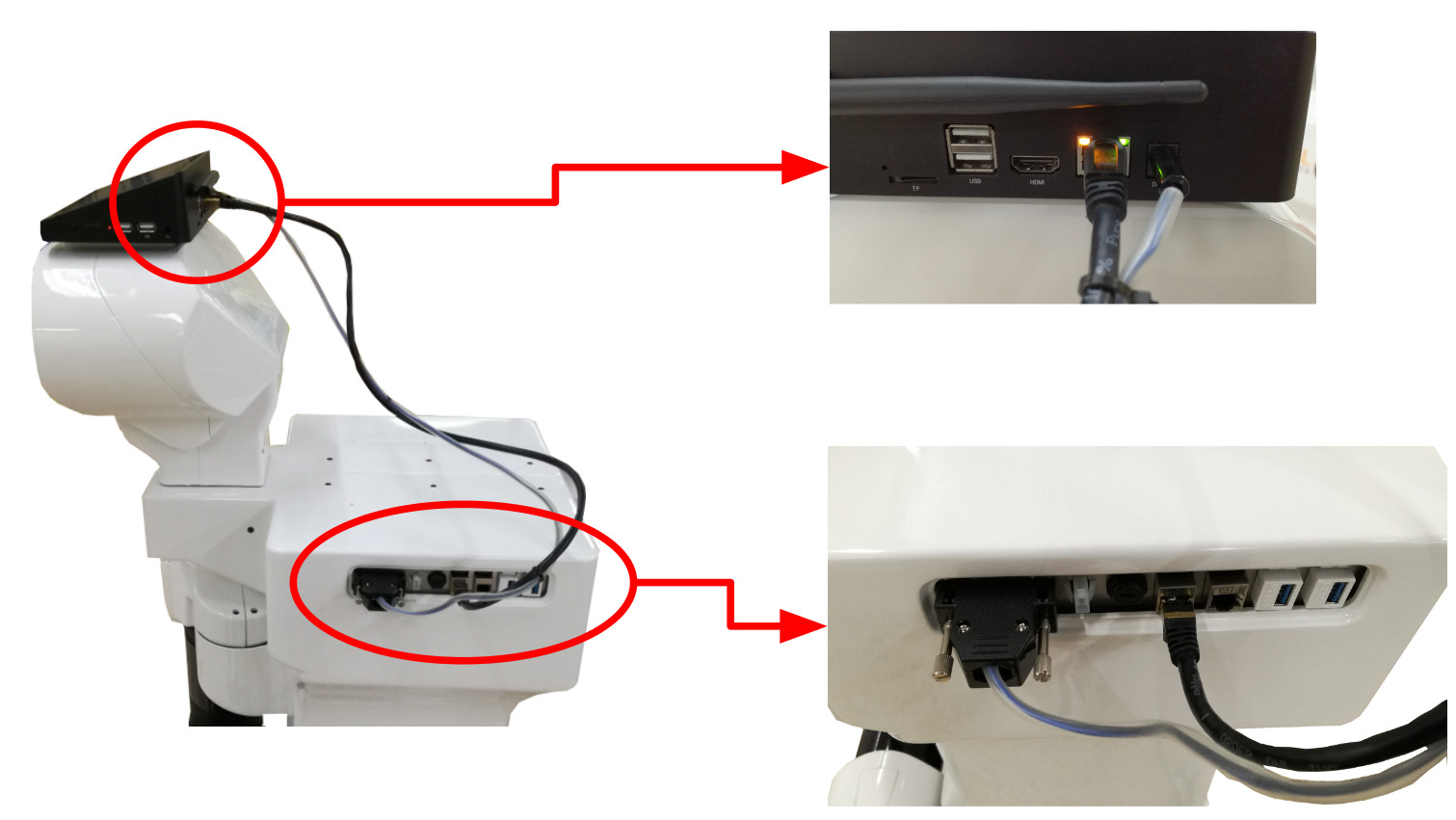

3.2.8 Service panel

The service panel can be accessed by removing the cover behind the laser (see Figure: Service panel).

The service panel gives access to the video output, a USB port and the on/off button of the robot’s computer. It can be used for reinstallation or debugging purposes.

Figure: Service panel

| Number | Name / Short description |
|---|---|
| 1 | USB 3.0 |
| 2 | On/Off button computer |
| 3 | HDMI (not in TIAGo Lite) |

3.2.9 Connectivity

TIAGo is equipped with a dual-band wireless 802.11b/g/n/ac interface, plus Bluetooth 4.0 and a WiFi antenna. When the WiFi interface is configured as an access point, it operates as an 802.11g interface.

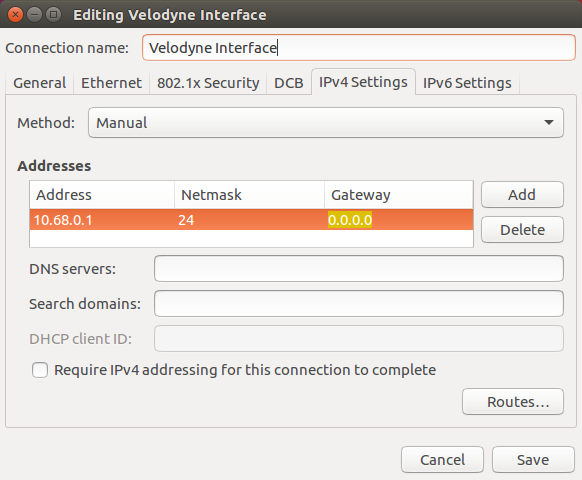

There are two Gigabit Ethernet ports, ports 4 and 5 in Figure: Expansion panel, that can be used to connect to the robot’s internal network. The IP address range 10.68.0.0/24 is reserved for this network. The IP addresses used in the building network MUST NOT fall within this range, because doing so can interfere with the robot’s services.
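Before assigning addresses in the building network, it can help to check them against the reserved range. The following is a minimal Bash sketch, not a PAL-provided tool. The function name `in_robot_range` is hypothetical. It checks single IPv4 addresses only and does not test whether a whole building subnet overlaps the reserved one.

```bash
#!/usr/bin/env bash
# Sketch: check whether an IPv4 address falls inside 10.68.0.0/24,
# the range reserved for the robot's internal network.

# Returns 0 if $1 is a valid dotted-quad address in 10.68.0.0/24,
# and 1 otherwise (including malformed input).
in_robot_range() {
  local a b c d extra octet
  # Split on dots; a non-empty fifth field means too many octets.
  IFS=. read -r a b c d extra <<< "$1"
  [[ -z "$extra" ]] || return 1
  for octet in "$a" "$b" "$c" "$d"; do
    # Each octet must be 1-3 decimal digits with a value of at most 255.
    [[ "$octet" =~ ^[0-9]{1,3}$ ]] || return 1
    (( 10#$octet <= 255 )) || return 1
  done
  # A /24 network is identified by its first three octets.
  (( 10#$a == 10 && 10#$b == 68 && 10#$c == 0 ))
}
```

For example, `in_robot_range 10.68.0.42 && echo "reserved"` prints `reserved`, while an address such as 192.168.1.10 returns a non-zero status.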

3.3 Torso

TIAGo++’s torso is the structure that supports the robot’s arms and head. It is equipped with an internal lifter mechanism that allows the user to change the height of the robot. Furthermore, it features an expansion panel and a laptop tray.

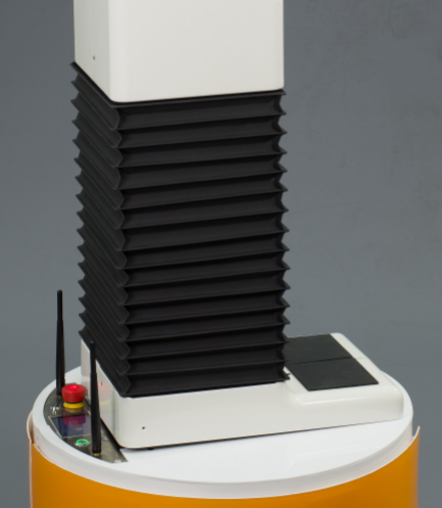

3.3.1 Lifter

The lifter mechanism is placed underneath the industrial bellows, shown in Figure: Industrial bellows of the lifting torso. The lifter moves at 50 mm/s and has a stroke of 350 mm. The minimum and maximum heights of the robot are shown in Figure: Height range of the robot.

Figure: Industrial bellows of the lifting torso

Figure: Height range of the robot
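As a rough check, assume the lifter travels at its rated 50 mm/s over the full stroke and ignore acceleration and deceleration. A full traverse then takes about seven seconds. The 35 cm stroke also accounts for the difference between the minimum and maximum heights listed in the specifications table:

```latex
\begin{aligned}
t_{\text{full stroke}} &\approx \frac{350\,\mathrm{mm}}{50\,\mathrm{mm/s}} = 7\,\mathrm{s} \\
h_{\max} &= h_{\min} + \text{stroke} = 110\,\mathrm{cm} + 35\,\mathrm{cm} = 145\,\mathrm{cm}
\end{aligned}
```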

3.3.2 Expansion Panel

The expansion panel is located on the top left part of the torso. The connectors it exposes are shown in the figure below and specified in the table.

Figure: Expansion panel

| Number | Name / Short description |
|---|---|
| 1 | CAN service connector |
| 2 | Mini-Fit power supply, 12 V and 5 A |
| 3 | Fuse, 5 A |
| 4 | GigE port |
| 5 | GigE port |
| 6 | USB 2.0 port |
| 7 | USB 3.0 port |

The CAN service connector is reserved for maintenance purposes and must not be used.

3.3.3 Laptop tray¶

The laptop tray is the flat surface on top of the torso just behind the robot’s head, see Figure: Laptop tray dimensions. It has mounting points to add new equipment, supporting to 5 kg, or it can be used to place a laptop in order to work in place with the robot making use of the WiFi connectivityor using one of the ethernet ports in the expansion panel.

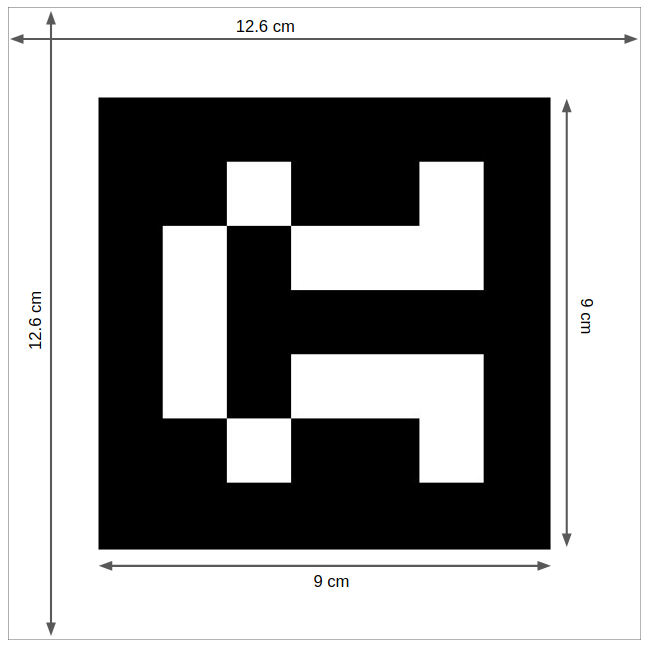

Figure: Laptop tray dimensions¶

Figure: Laptop placed on the rear tray of the robot¶

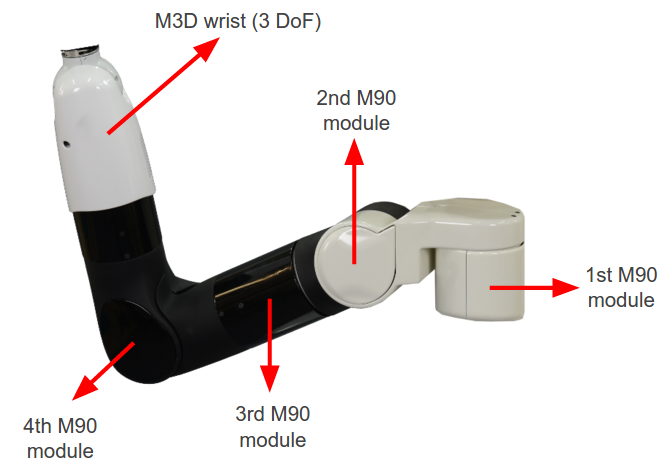

3.4 Arm¶

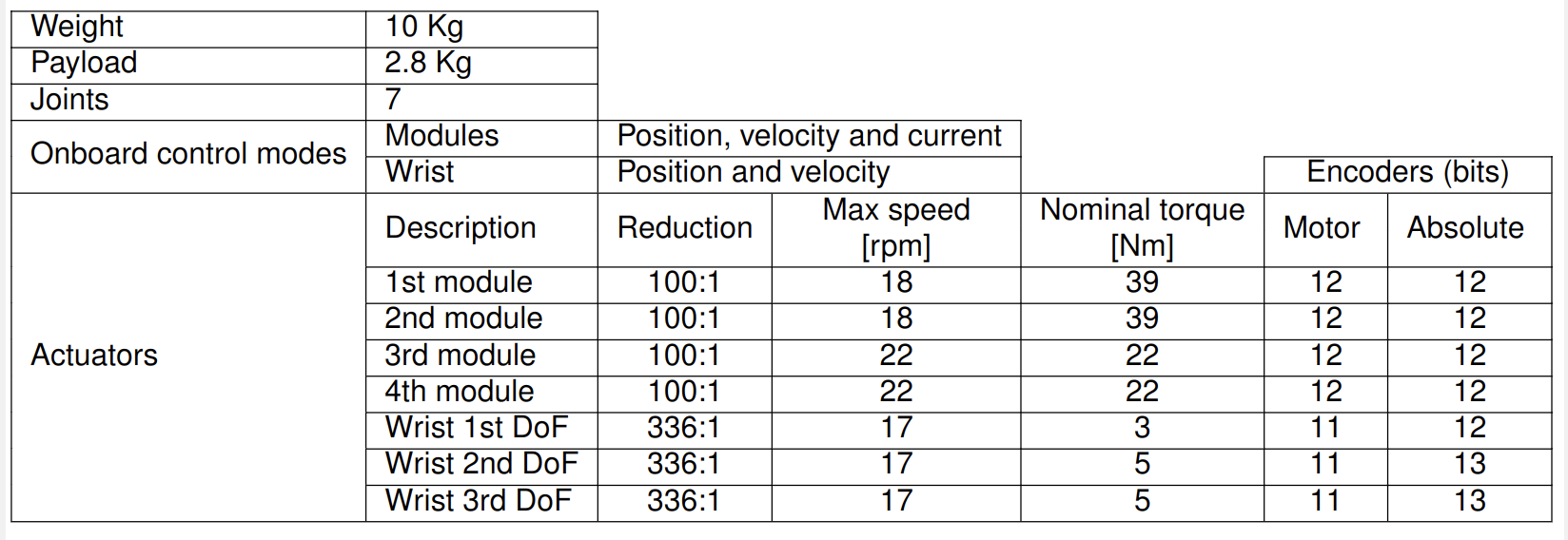

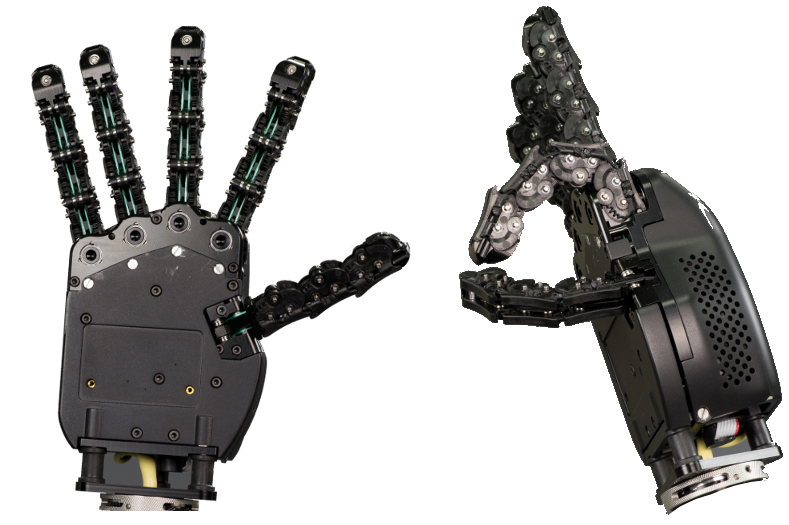

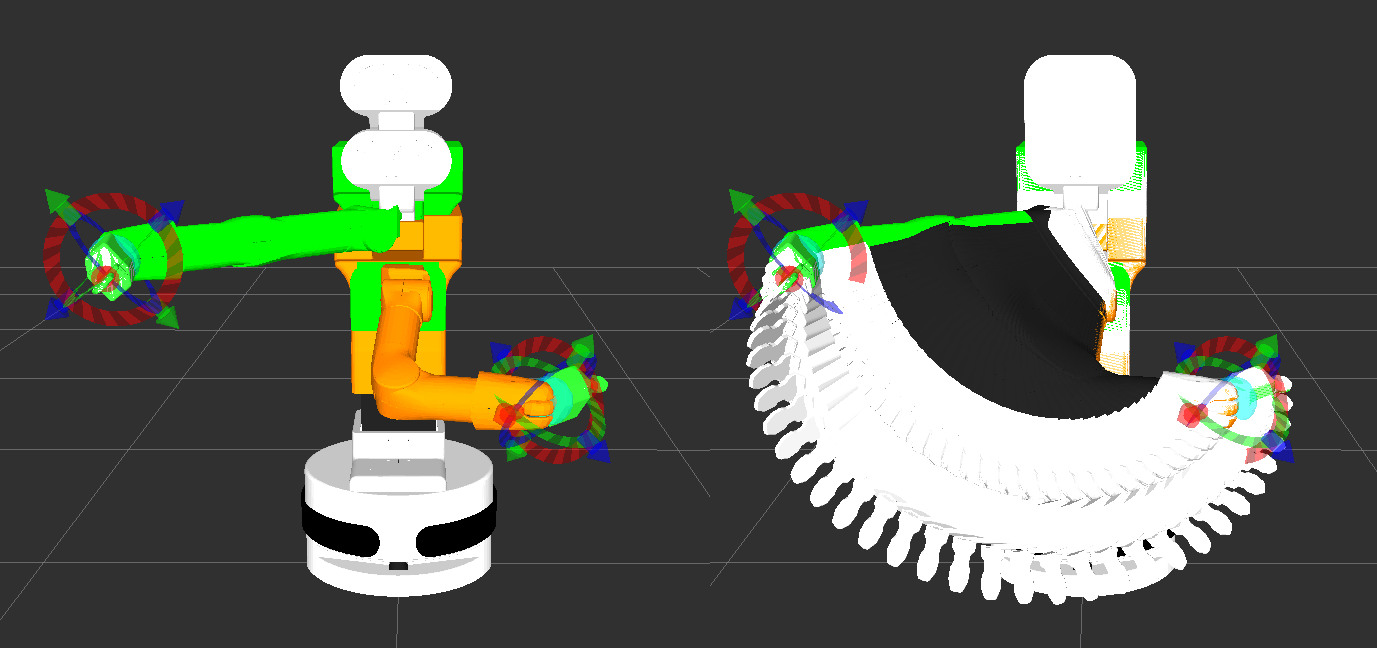

TIAGo++’s arm is composed of four M90 modules and one 3 DoF wrist, M3D, as shown in figure below. The main specifications of the arm and wrist are shown in table:

Figure: Arm components¶

Figure: Arm and wrist specifications¶

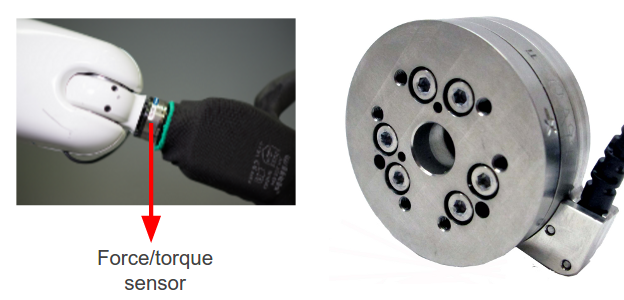

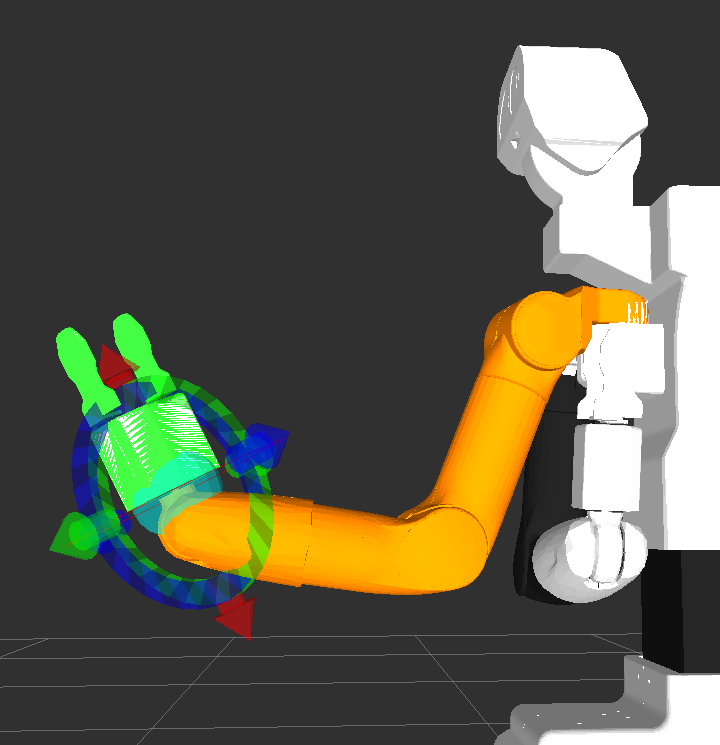

3.5 Force/Torque sensor

The Force/Torque sensor integrated on the end-point of the wrist is an ATI Mini45 (see the figure below). The main specifications of the sensor are summarized in the table below.

Figure: Force/torque sensor placement and close view

Main specifications of the force/torque sensor

3.6 End-effector

TIAGo++’s end-effector is one of the modular features of the robot. TIAGo++ can be used with six interchangeable end-effectors: the Hey5 hand, the PAL parallel gripper, the Schunk WSG32 industrial gripper, the Robotiq 2F-85 gripper, the Robotiq 2F-140 gripper and the Robotiq EPick vacuum gripper.

Warning

Since September 2019, the Schunk WSG32 gripper is no longer available, as the manufacturer has discontinued this product. Documentation about this end-effector is kept in this handbook as a reference for customers who already own it.

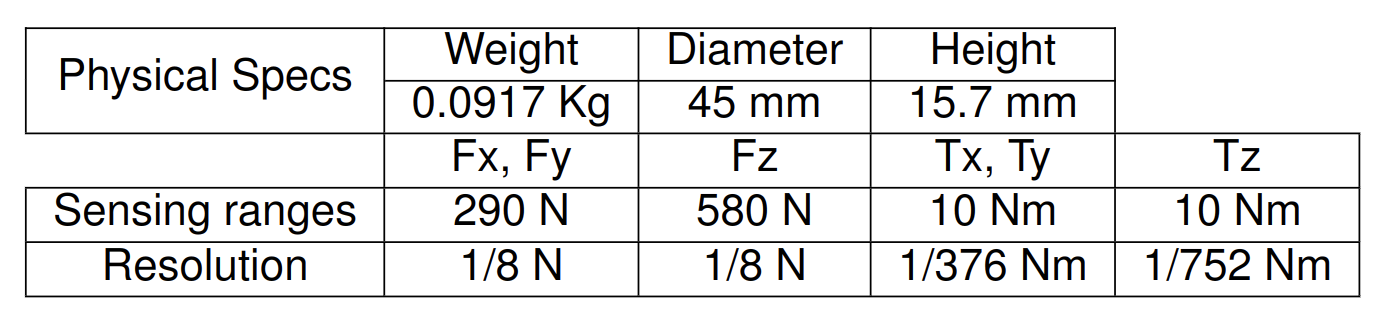

3.6.1 Hey5 hand

The Hey5 hand is shown in the figure below. The main specifications of this underactuated, self-contained hand are summarized in the tables below.

Figure: Hey5 hand

| Parameter | Value |
|---|---|
| Weight | 720 g |
| Payload | 1 kg |
| Joints | 19 |

| Description | Max speed [rpm] | Max torque [Nm] |
|---|---|---|
| Thumb | 32 | 0.23 |
| Index | 32 | 0.23 |
| Middle+ring+little | 34 | 0.45 |

Credits and attribution

The Hey5 hand has been developed by PAL Robotics Inc., with contributions from QBrobotics srl.

The Hey5 hand is a derivative of the Pisa/IIT SoftHand open source project by M. G. Catalano, G. Grioli, E. Farnioli, A. Serio, C. Piazza and A. Bicchi.

The Pisa/IIT SoftHand project is distributed under Creative Commons Attribution 4.0 International License and is available at NaturalMachineMotionInitiative.com.

3.6.2 PAL gripper¶

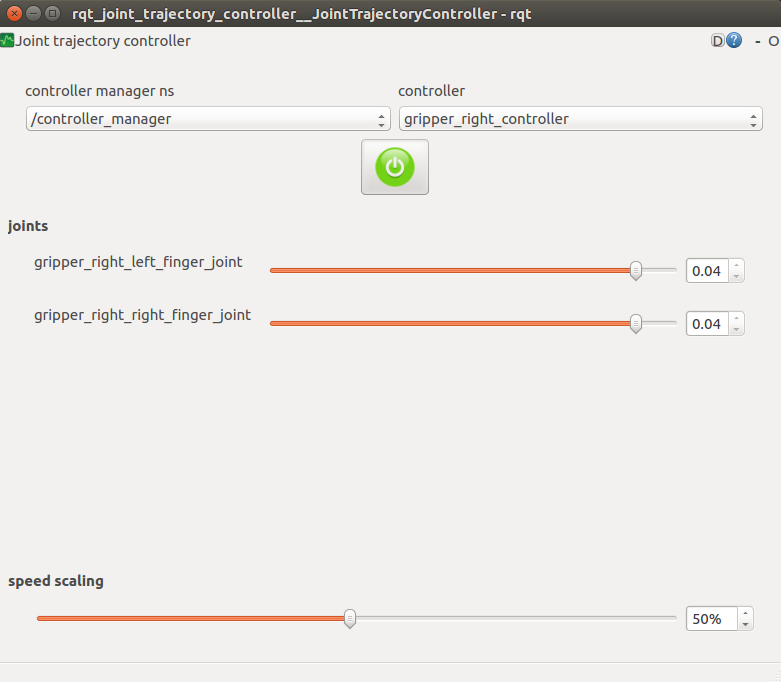

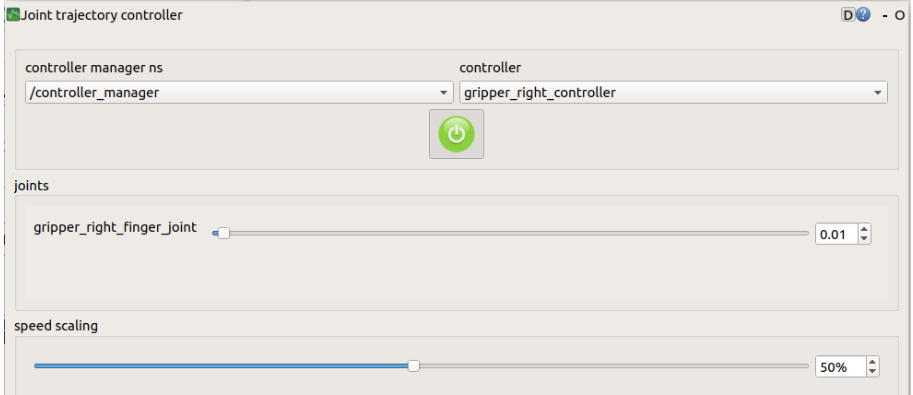

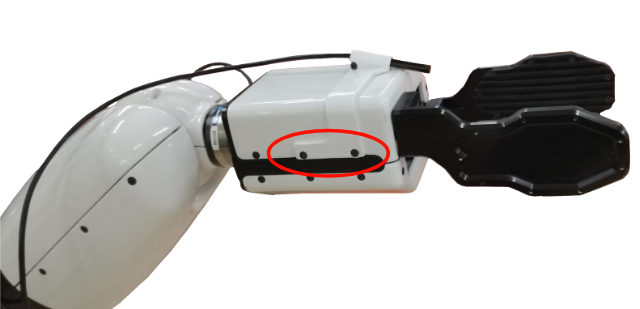

The PAL parallel gripper is shown in figure below. The gripper contains two motors, each controlling one of the fingers. Each finger has a linear range of 4 cm.

Figure: PAL gripper¶

Weight |

800 g |

Payload |

2 Kg |

interchangeable fingers |

Yes |

Description |

Reduction |

Max speed [rpm] |

Max troque [Nm] |

Absolute encoder |

|---|---|---|---|---|

Left finger |

193:1 |

55 |

2.5 |

12 bits |

Right finger |

193:1 |

55 |

2.5 |

12 bits |

3.6.3 Robotiq 2F-85/140 gripper

The Robotiq 2F-85 and 2F-140 adaptive grippers are shown in the figure below. Their respective specifications are listed in the table below.

Figure: Robotiq 2F-85/140 grippers

| Model | 2F-85 | 2F-140 |
|---|---|---|
| Weight | 900 g | 1000 g |
| Form-fit grip payload | 5 kg | 2.5 kg |
| Gripper size | 85 mm | 140 mm |
| Grip force | 20 to 235 N | 10 to 125 N |
| Closing speed | 20 to 150 mm/s | 30 to 250 mm/s |

Note

Since the arm without an end-effector has a maximum payload of 3 kg, the effective payloads with the 2F-85 and the 2F-140 are 2.1 kg and 2.0 kg, respectively.
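The effective payloads in the note follow from subtracting each gripper’s weight, from the table above, from the arm’s 3 kg payload without an end-effector:

```latex
\begin{aligned}
m_{\text{payload}}^{\text{2F-85}} &= 3\,\mathrm{kg} - 0.9\,\mathrm{kg} = 2.1\,\mathrm{kg} \\
m_{\text{payload}}^{\text{2F-140}} &= 3\,\mathrm{kg} - 1.0\,\mathrm{kg} = 2.0\,\mathrm{kg}
\end{aligned}
```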

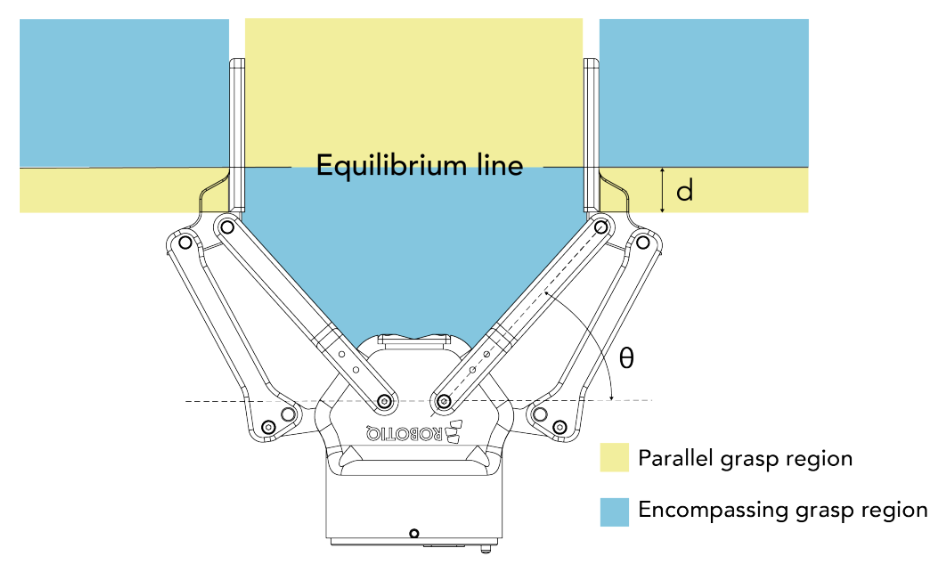

The gripper equilibrium line is the grasping region that separates the encompassing grasp from the parallel grasp. When grasping an object close enough to the inside (palm) of the gripper, the encompassing grasp will occur (unless the object size or shape is not adequate) and the fingers will close around the object.

If grasped above the equilibrium line, the same object will be picked up in a parallel grasp by the fingertips and the fingers will close with a parallel motion. The figure below shows the encompassing grasp region, the equilibrium line, and the parallel grasp region on the 2-Finger Adaptive Gripper.

Figure: Robotiq 2F-85/140 gripper equilibrium line

3.6.4 Robotiq EPick Vacuum gripper

The Robotiq EPick vacuum gripper is shown in the figure below. It uses a suction cup to create a vacuum that grasps an object without an external air supply, making it suitable for mobile robots. Its mechanical specifications are listed in the table below.

Figure: Robotiq EPick vacuum gripper

| Model | EPick vacuum gripper |
|---|---|
| Energy source | Electricity |
| Weight | 820 g |
| Payload | 2 kg |
| Maximum vacuum level | 80 % |
| Maximum vacuum flow | 12 L/min |
| Operating ambient temperature | 5 to 40 °C |

3.7 Head¶

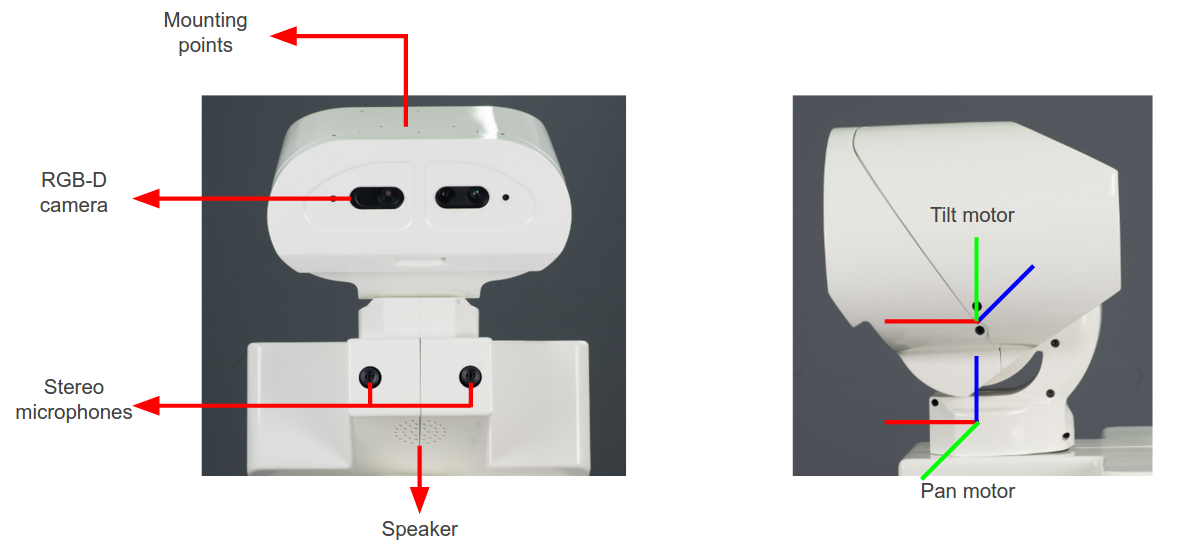

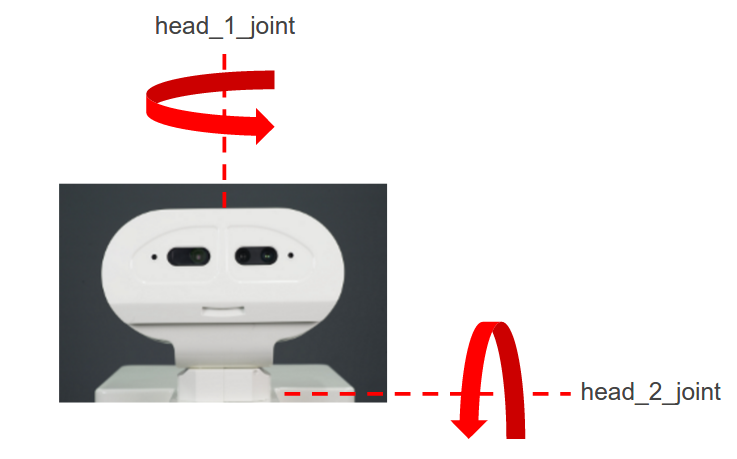

TIAGo++’s head is equipped with a pan-tilt mechanism, i.e. 2 DoF, and is equipped with stereo microphones, a speaker and an RGB-D camera. Furthermore, on top of the head there is a flat surface with mounting points to allow the user to add new sensors or equipment. Note that the head has a payload of 0.5 kg when adding new equipment. The figure below shows the location of each component and the two joints of the pan-tilt mechanism.

Figure: Head parts and components¶

3.7.1 Pan-tilt mechanism¶

Head pan-tilt main specifications:

Description |

Reduction |

Max speed [rpm] |

Max troque [Nm] |

Absolute encoder |

|---|---|---|---|---|

Pan motor |

200:1 |

63 |

6 |

12 bits |

Tilt motor |

200:1 |

63 |

6 |

12 bits |

3.7.2 Speaker

The speaker specified in the table below is located under the robot’s head.

| Parameter | Value |
|---|---|
| Manufacturer | VISATON |
| Model | FRS 5 |
| Rated power | 5 W |
| Max power | 8 W |
| Nominal impedance Z | 8 Ohm |
| Frequency response | 150-20000 Hz |

3.7.3 Stereo microphones¶

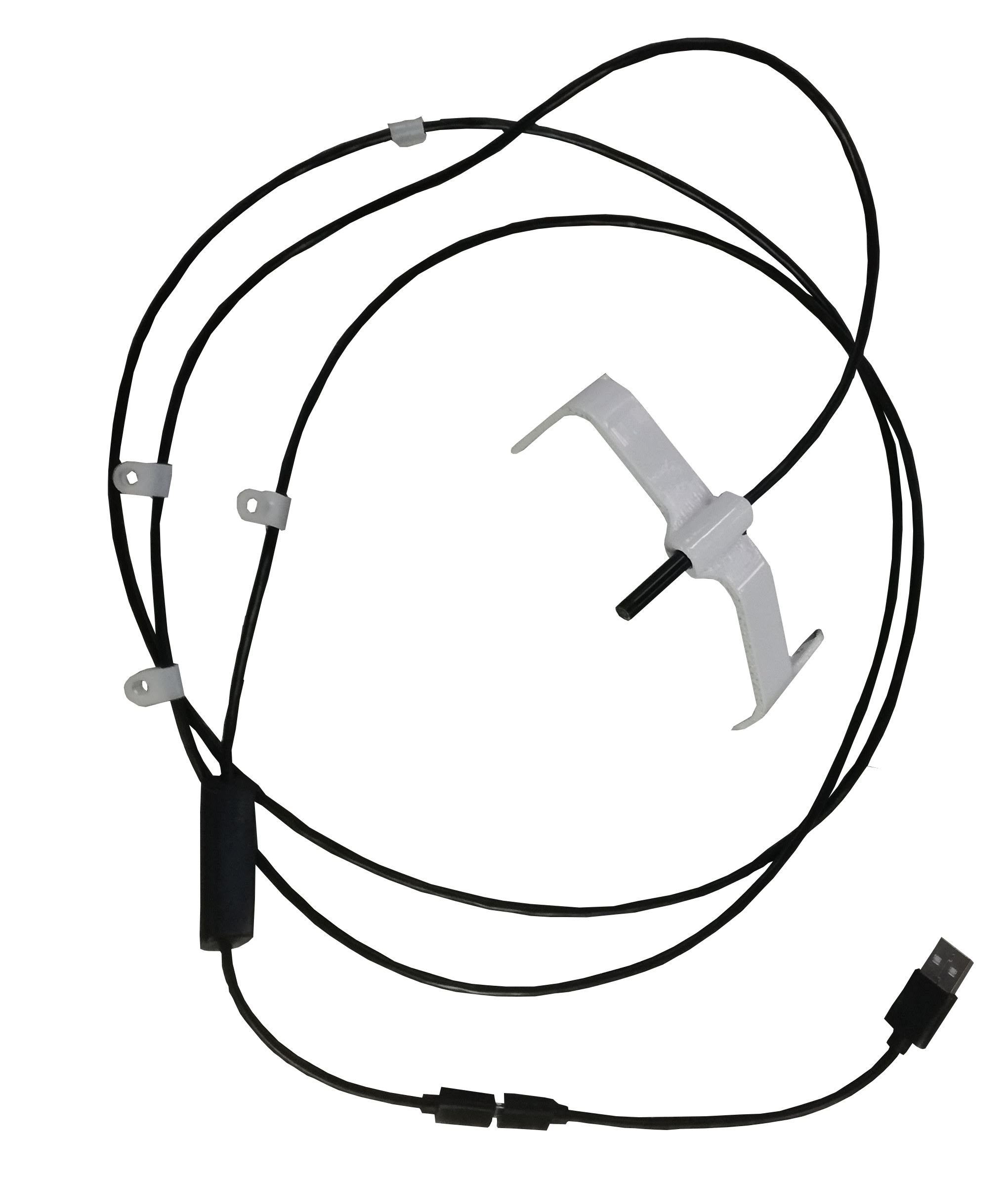

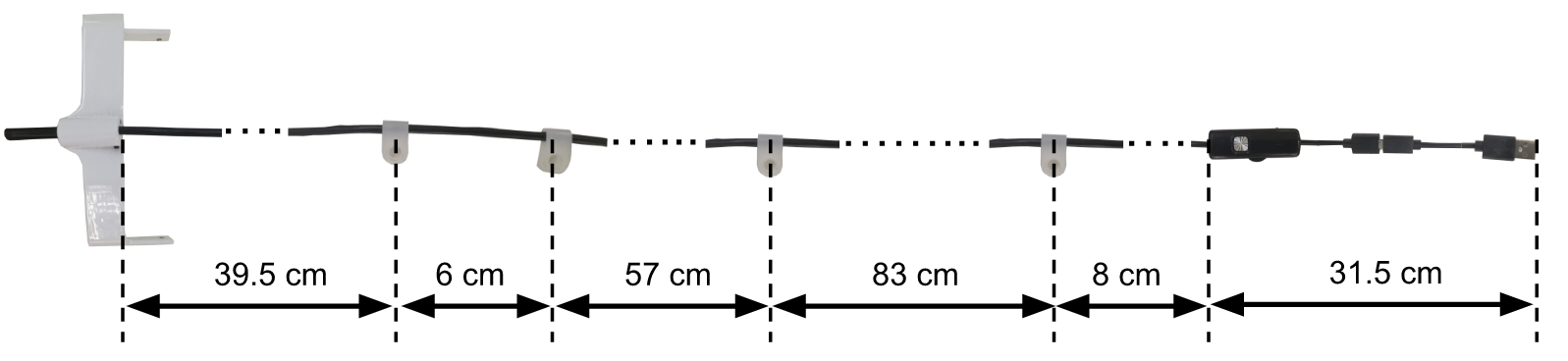

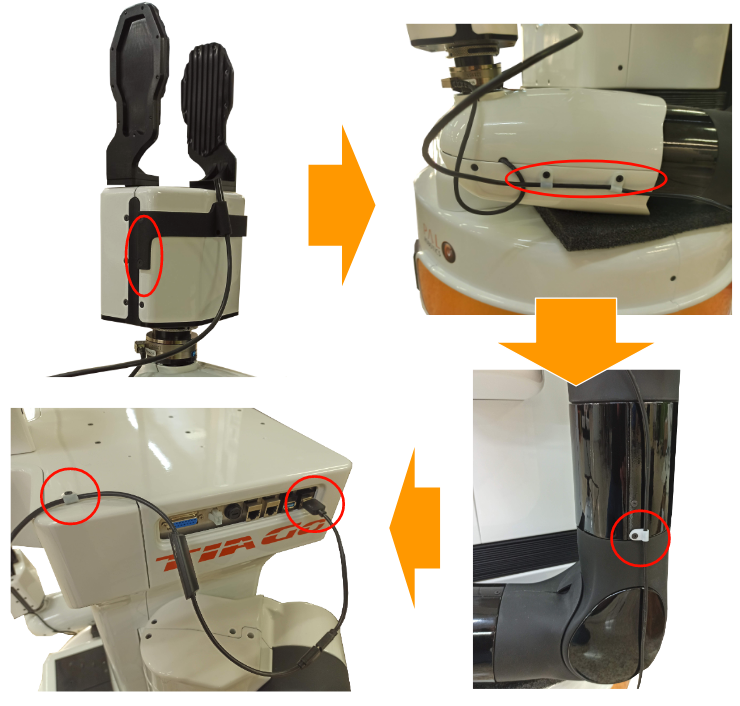

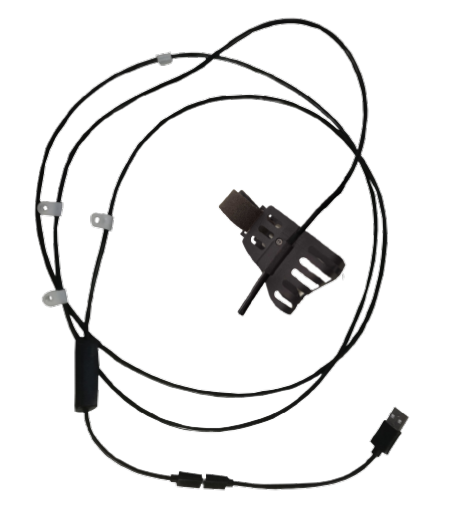

The Andrea SuperBeam Stereo Array Microphone is integrated just below the head of the robot. The specifications of the audio card are presented in the first table and the specifications of the Stereo Array Microphone are detailed in the second table:

Manufacturer |

Andrea Electronics |

Model |

SuperBeam Stereo Array Microphone |

Mic supply voltage |

1.4-5.0 VDC |

Supply bias resistor |

2.2k-39.9k Ohm |

Operating current (each channel) |

0.5 mA |

Output impedance at 1 kHz |

200 Ohm |

Max input sound level at 1 kHz, 3% THD |

115 dB |

Output signal level at THD < 3% @ 1 kHz |

24-120 mVrms |

Sensitivity at 1 kHz (0 dB = 1 V/Pa Vdc=1.6 V) |

-40 to -37 dBV |

Frequency response at 3 dB variation noise |

20 uVrms |

Operating temperature |

0-70C◦C |

Recommended operating distance |

30.5-122 cm |

Acoustic signal reduction at 1 kHz outside of 30◦beamform |

15-30 dB |

Noise reduction |

20-25 dB |

Manufacturer |

Andrea Electronics |

Model |

PureAudio USB-SA |

Supply voltage |

4.5 - 5.5 VDC |

Total power consumption |

120 mA |

A/D conversion resolution |

16 bit |

THD + N |

-84 dB |

Supply bias resistor |

2.2 kOhm @ 3.3 VDC |

Frequency response |

20-20000 Hz |

Input range |

0 - 1.25 Vrms |

Dynamic range |

95 dB |

Record gain range |

-6 to 33 dB |

3.7.4 RGB-D camera

TIAGo++’s head includes an RGB-D camera, specified below:

| Parameter | Value |
|---|---|
| Manufacturer | Orbbec |
| Model | Astra S |
| Field of view | 60° H, 49.5° V, 73° D |
| Interface | USB 2.0 |
| Color stream modes | QVGA 320x240 @ 30 fps, VGA 640x480 @ 30 fps, 1280x960 @ 10 fps |
| Depth stream modes | QVGA 320x240 @ 30 fps, VGA 640x480 @ 30 fps, 160x120 @ 30 fps |
| Depth sensor range | 0.4 - 2 m |

3.8 Electrical parts and components

Neither TIAGo++ nor any of its electrical components or mechanical parts are connected to external ground. The chassis and all electromechanical components are physically isolated from the ground by the isolation rubber under its feet. Avoid touching any metal parts directly to prevent discharges and damage to TIAGo++’s electromechanical parts.

Electrical power supply and connectors

The power source supplied with TIAGo++ is compliant with the Directive on the restriction of the use of certain hazardous substances in electrical and electronic equipment 2002/95/EC (RoHS) and with the requirements of the applicable EC directives, according to the manufacturer. The power source is connected to the environment ground, whenever the supplied wire is used (Phase-Neutral-Earth).

4 Storage¶

4.1 Overview¶

This section contains information relating to the storage of TIAGo++.

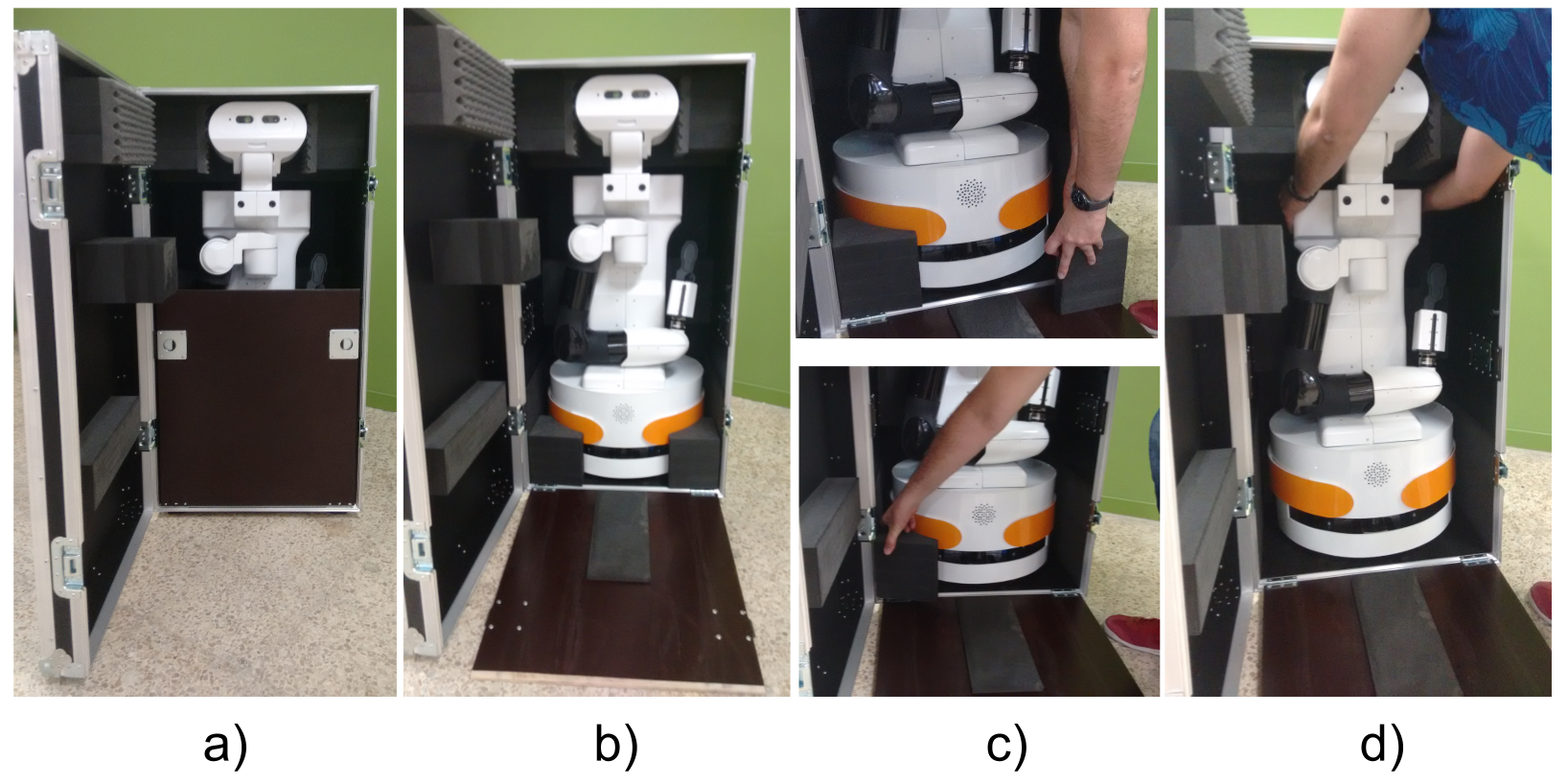

4.2 Unboxing TIAGo++¶

This section explains how to unbox TIAGo++ safely. TIAGo++ is shipped with the flightcase shown in figure:

Figure: TIAGo++ flightcase¶

The flightcase MUST be always transported vertically to ensure the robot’s safety. In order to move the flightcase, pull the handle on the back, as shown in figure below. To place the flightcase in a given location use one of your feet to help you carefully set the flightcase in an upright position.

Figure: Moving the fligthcase¶

Open the door of the crate, see figure below a and unfold the ramp as shown in figure below b. Remove the foam wedges holding the mobile base as shown in figure below c. Finally, pull out TIAGo from the upper part of its torso back and, if necessary, from the bottom part of the shoulder, as shown in figure below d. Do not pull out the the robot from any part of the mobile base cover as damaged could be caused to you or to the robot.

Figure: Unboxing procedure¶

5 Storage cautions

- Always store TIAGo++ in a place where it will not be exposed to weather conditions.
- The storage temperature range for TIAGo++ is 0ºC to +60ºC.
- The storage temperature range for the batteries is +10ºC to +35ºC.
- It is recommended to turn TIAGo++ completely off (i.e. the electric switch’s red light is off) when the storage period exceeds two weeks.
- It is recommended to charge the battery to 50% when storing it for more than two weeks.
- Avoid the use or presence of water near TIAGo++.
- Avoid any generation of dust close to TIAGo++.
- Avoid the use or presence of magnetic devices or electromagnetic fields near TIAGo++.

6 Introdution to safety¶

6.1 Overview¶

Safety is important when working with TIAGo++. This chapter provides an overview of safety issues, general usage guidelines to support safety, and describes some safety-related design features. Before operating the robot all users must read and understand this chapter!

6.2 Intended applications¶

It is important to clarify the intended usage of the robot before any kind of operation.

TIAGo++ is a robotics research and development platform meant to be operated in a controlled environment under supervision by trained staff at all time.

- The hardware and software of TIAGo++ allows to research and develop activities in the following areas:

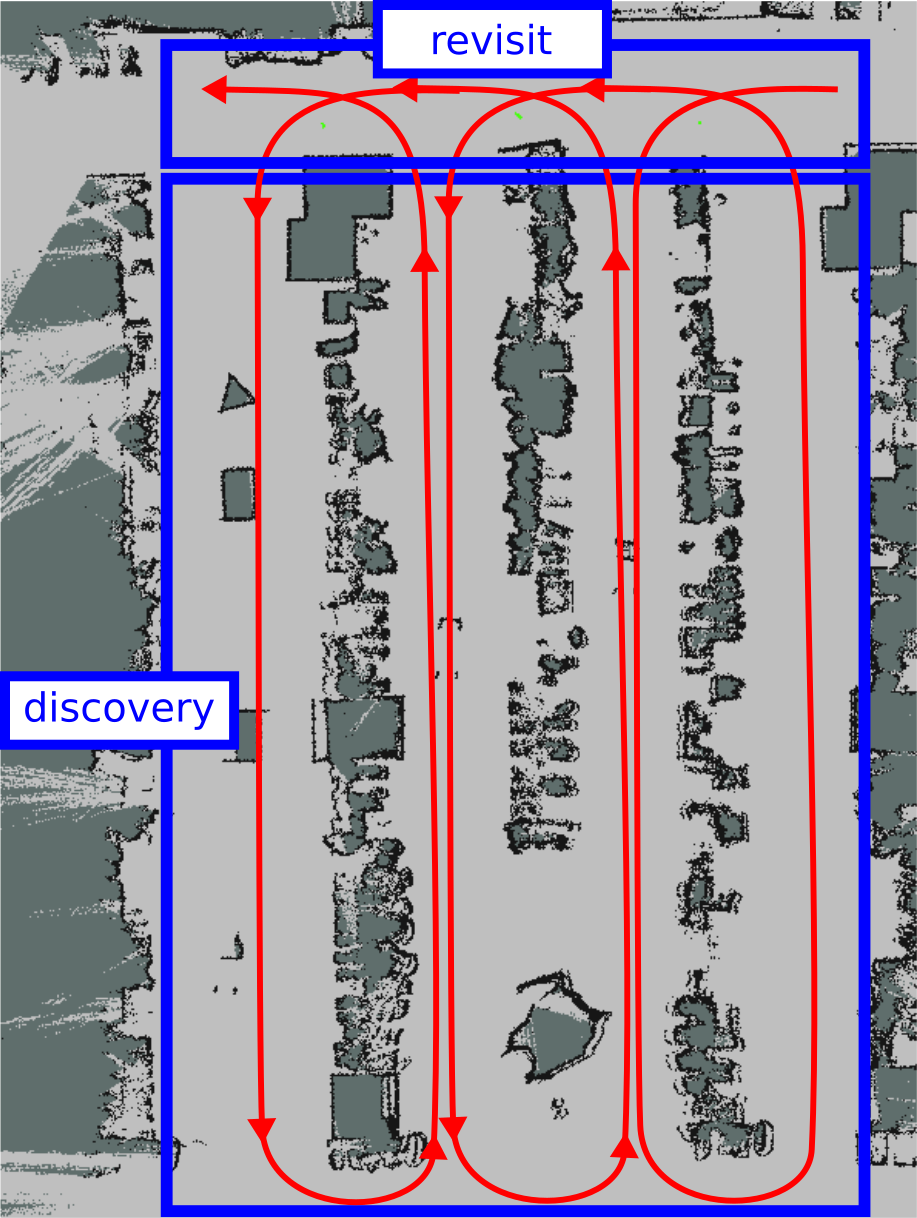

Navigation and SLAM

Manipulation

Perception

Speech recognition

Human-robot interaction

6.3 Working environment and usage guidelines

The working temperatures are:

- Robot: +10ºC to +35ºC

The space where TIAGo++ operates should have a flat floor and be free of hazards. In particular, stairways and other drop-offs can pose an extreme danger. Avoid sharp objects (such as knives), sources of fire, hazardous chemicals, or furniture that could be knocked over.

Maintain a safe environment:

- The terrain where TIAGo++ is used must be capable of supporting the weight of the robot (see the Specifications section). It must be horizontal and flat. Do not use carpets, to avoid tripping.
- Make sure the robot has adequate space for any expected or unexpected operation.
- Make sure the environment is free of objects that could pose a risk if knocked, hit, or otherwise affected by TIAGo++.
- Make sure there are no cables or ropes that could be caught in the covers or wheels; these could pull other objects over.
- Make sure no animals are near the robot.
- Be aware of the location of emergency exits and make sure the robot cannot block them.
- Do not operate the robot outdoors.
- Keep TIAGo++ away from flames and other heat sources.
- Do not allow the robot to come in contact with liquids.
- Avoid dust in the room.
- Avoid the use or presence of magnetic devices near the robot.
- Apply extreme caution with children.

6.4 Battery manipulation

The following guidelines must be respected when handling the robot in order to prevent damage to the robot’s internal batteries.

- Do not expose to fire.
- Do not expose the battery to water or salt water, or allow the battery to get wet.
- Do not open or modify the battery case.
- Do not expose to ambient temperatures above 49ºC for over 24 hours.
- Do not store in temperatures below -5ºC for more than seven days.
- For long-term storage (more than 1 month), charge the battery to 50%.
- Do not use TIAGo++’s batteries for other purposes.
- Do not use any device other than the supplied charger to recharge the battery.
- Do not drop the batteries.
- If any damage or leakage is observed, stop using the battery.

7 Safety measures in practice

Warning

This section presents important information that must be taken into consideration when using the robot. Read the instructions carefully to ensure the safety of the people in the surroundings and to prevent damage to the environment and to the robot. Follow these instructions every time the robot is used.

7.1 Turning the robot on properly¶

Warning

The procedure described in this section requires to have a clearance of about 1.5 m in front of the robot and at each of its sides in order to execute the required movements safely.

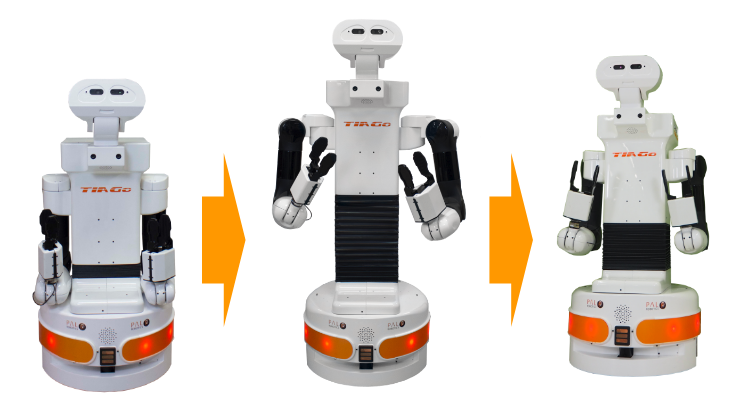

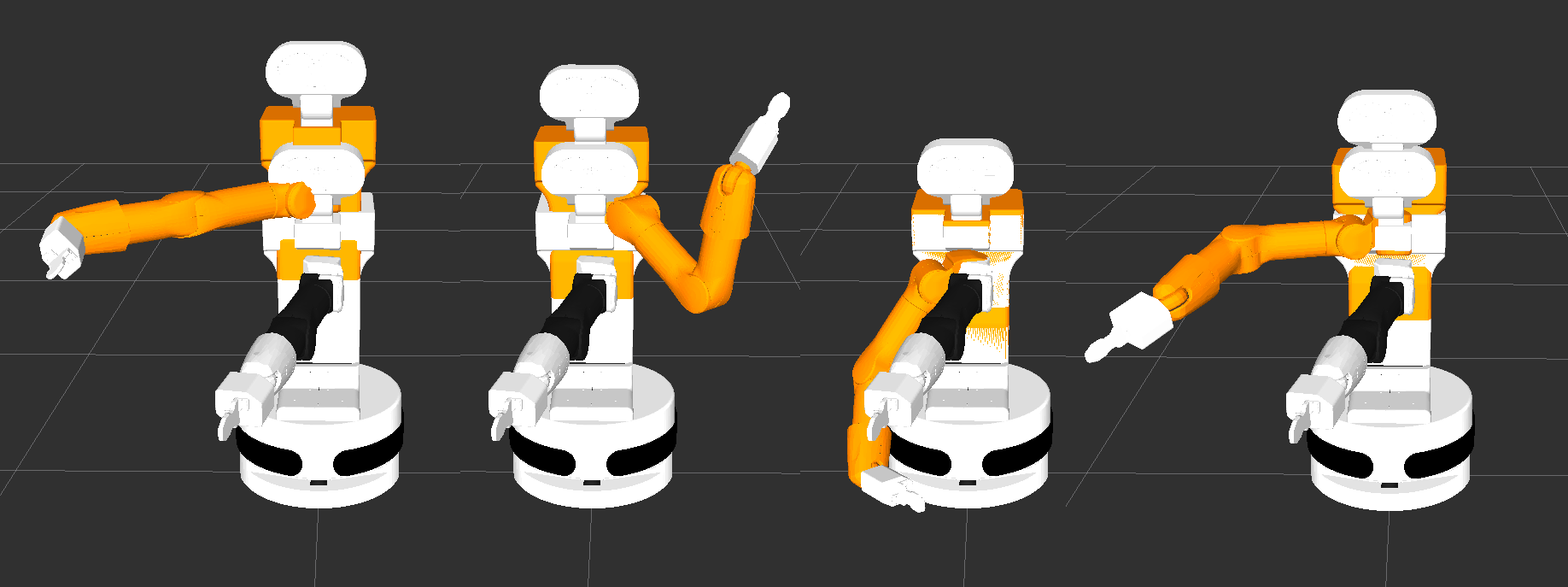

When the robot is started the arms will be lying on the lateral sides of the mobile base. If the robot arms are left in such position after turning the robot on the heat from the arm motors may be transfered, after some time, to the paint of the robot’s base cover which may end up melting it and causing aesthetical damage to these covers. In order to prevent this follow the procedure depicted in Figure: Procedure to start moving the arm safely and here after explained:

Raise the torso to its maximum height using the joystick. In case the torso does not move either press the button Get out of collision in the Demos tab of the WebCommander, see the section 13 WebCommander, or run the following command line instruction:

export ROS_MASTER_URI=http://tiago-0c:11311

rosservice call /get_out_of_collision

Execute the Offer Both movement using, for instance, the Movements tab of the WebCommander

Exectute the Home motion in order to fold back the arms and the torso into a safe configuration where no contacts occur with the base of the robot.

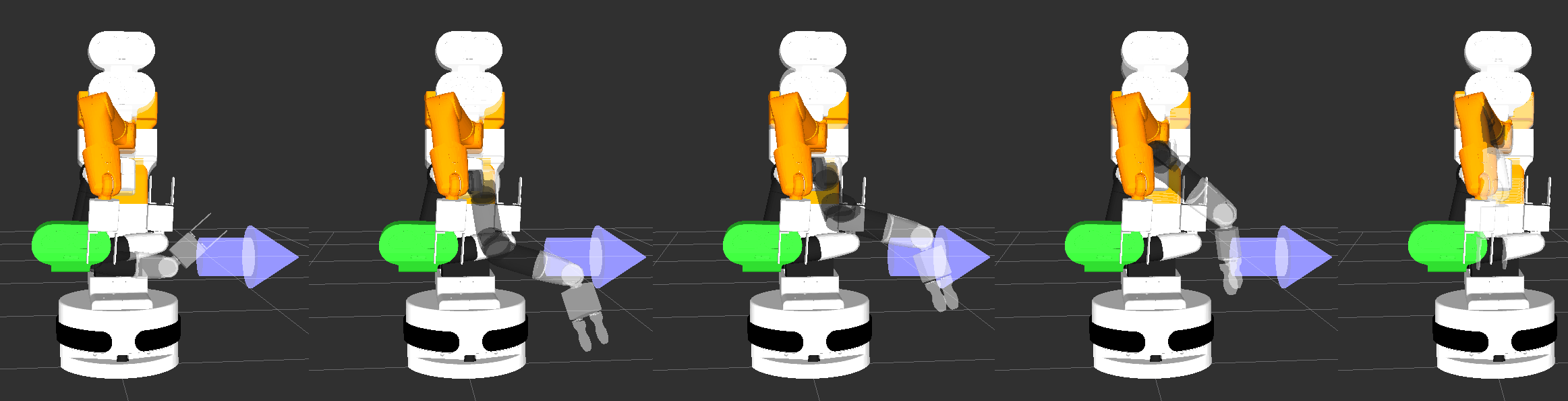

Figure: Procedure to start moving the arm safely¶
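If you use the command-line fallback often, you may want to wrap it in a small Bash function. This sketch assumes that ROS is installed and sourced on the development computer and that the robot’s computer name resolves on your network. The function names `master_uri_for` and `get_out_of_collision` are hypothetical. `ROS_MASTER_URI`, port 11311 and the `/get_out_of_collision` service come from the commands above.

```bash
#!/usr/bin/env bash
# Sketch: point ROS tools at a given robot and call the service that
# moves the torso out of self-collision. Function names are hypothetical.

# Print the ROS master URI for a robot computer name, e.g. tiago-0c.
# 11311 is the ROS master port used in the commands above.
master_uri_for() {
  printf 'http://%s:11311\n' "$1"
}

# Export ROS_MASTER_URI for the robot named in $1, then call the service.
# Returns 2 if no name is given, 1 if rosservice is not on the PATH.
get_out_of_collision() {
  if [[ -z "${1:-}" ]]; then
    echo "usage: get_out_of_collision <robot-computer-name>" >&2
    return 2
  fi
  local host="$1"
  ROS_MASTER_URI="$(master_uri_for "$host")"
  export ROS_MASTER_URI
  if ! command -v rosservice >/dev/null 2>&1; then
    echo "rosservice not found; source your ROS setup.bash first" >&2
    return 1
  fi
  rosservice call /get_out_of_collision
}
```

After sourcing this file, `get_out_of_collision tiago-0c` is equivalent to the two commands above for a robot with serial number 0.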

7.2 Shutting down the robot properly¶

Warning

The procedure described in this section when operating with TIAGo++ requires two people in order to be done properly.

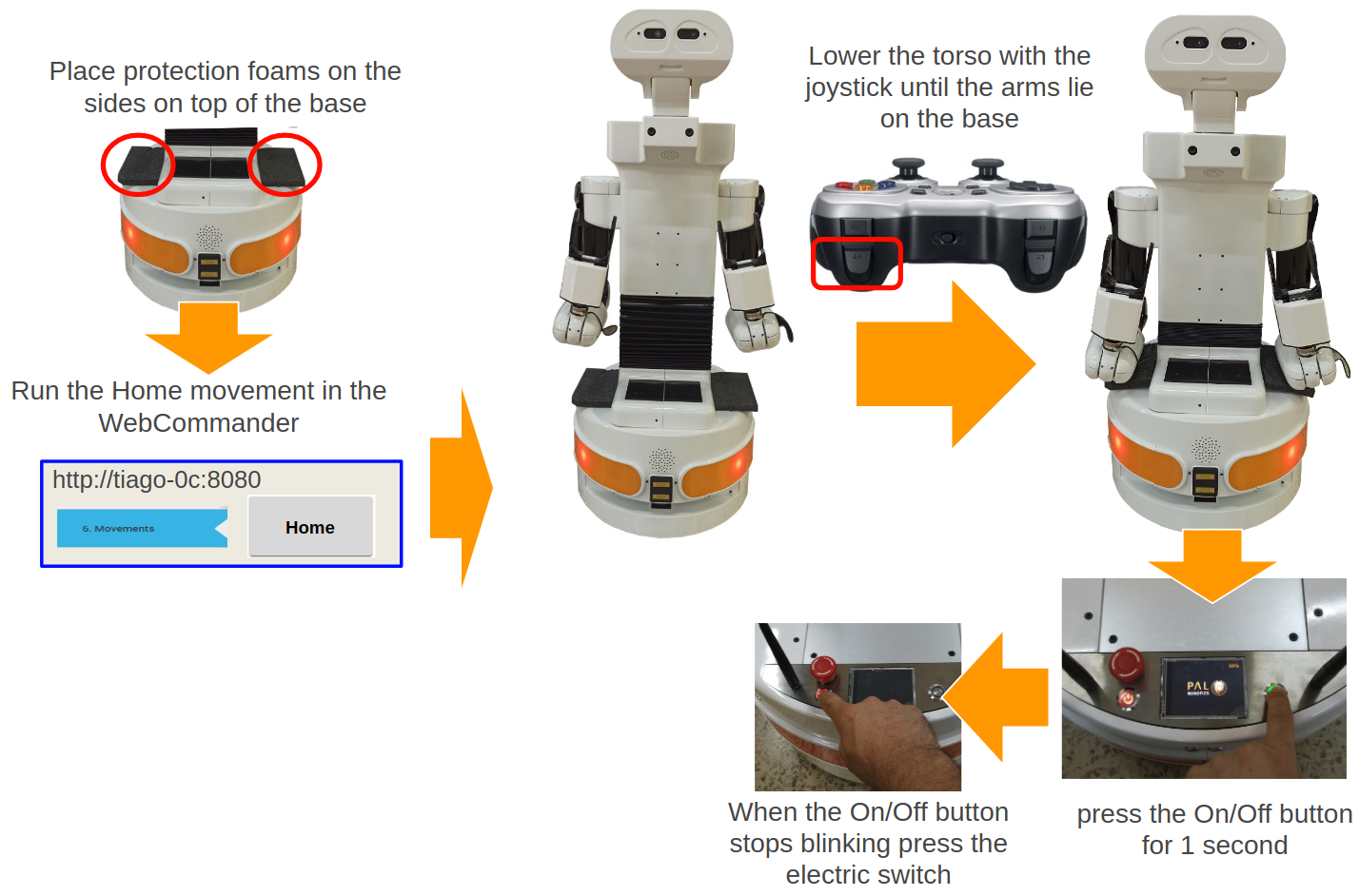

Special care needs to be taken when shutting down or powering off the motors by using the emergency button. In order to avoid bumping the arms against the base of the robot or the floor, the following procedure must be followed, as depicted in figure below:

Figure: Shutdown procedure¶

7.3 Emergency stop

Warning

Two people are recommended to safely operate the emergency stop of TIAGo++.

The emergency stop button is located on the back of the robot, between the power button and the battery level display. As the name implies, this button should be used only in exceptional cases, when an immediate stop of the robot’s motors is required.

To activate the emergency stop, push the button. To deactivate it, rotate the button clockwise, following the indications on the button, until it pops out.

Be careful when using the emergency stop: the motors will be switched OFF and the arms will fall down, while the computer remains on.

After releasing the emergency stop button, restart the robot by pressing the On/Off button until it stops blinking. After this operation, the robot’s status should be restored within a few seconds to what it was before the emergency button was pressed.

7.4 Measures to prevent falls

TIAGo++ has been designed to be statically stable, even when the arms are holding their maximum payload in their most extreme kinematic configuration. Nevertheless, some measures need to be respected in order to prevent the robot from tipping over.

7.4.1 Measure 1

Do not apply external downward forces to the arms when they are extended in the direction shown in the figure below:

Figure: Fall prevention measure 1

7.4.2 Measure 2

Do not navigate when the arms are extended, especially when the torso is also extended (see the figure below):

Figure: Fall prevention measure 2

7.4.3 Measure 3

TIAGo++ has been designed to navigate on flat floors. Do not navigate on floors with unevenness higher than 5% (see the figure below):

Figure: Navigation in ramps is not recommended
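The handbook does not define how the 5% figure is measured. If it is read as a grade (vertical rise over horizontal run), which is an interpretation and not a PAL specification, it corresponds to a rise of 5 cm per 1 m of horizontal travel. That is a slope of just under three degrees:

```latex
\theta = \arctan\!\left(\frac{\Delta h}{\Delta x}\right) = \arctan(0.05) \approx 2.86^\circ
```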

7.4.4 Measure 4¶

Avoid navigating close to downward stairs, as TIAGo++’s laser range-finder will not detect this situation and the robot may fall down the stairs.

7.4.5 Measure 5¶

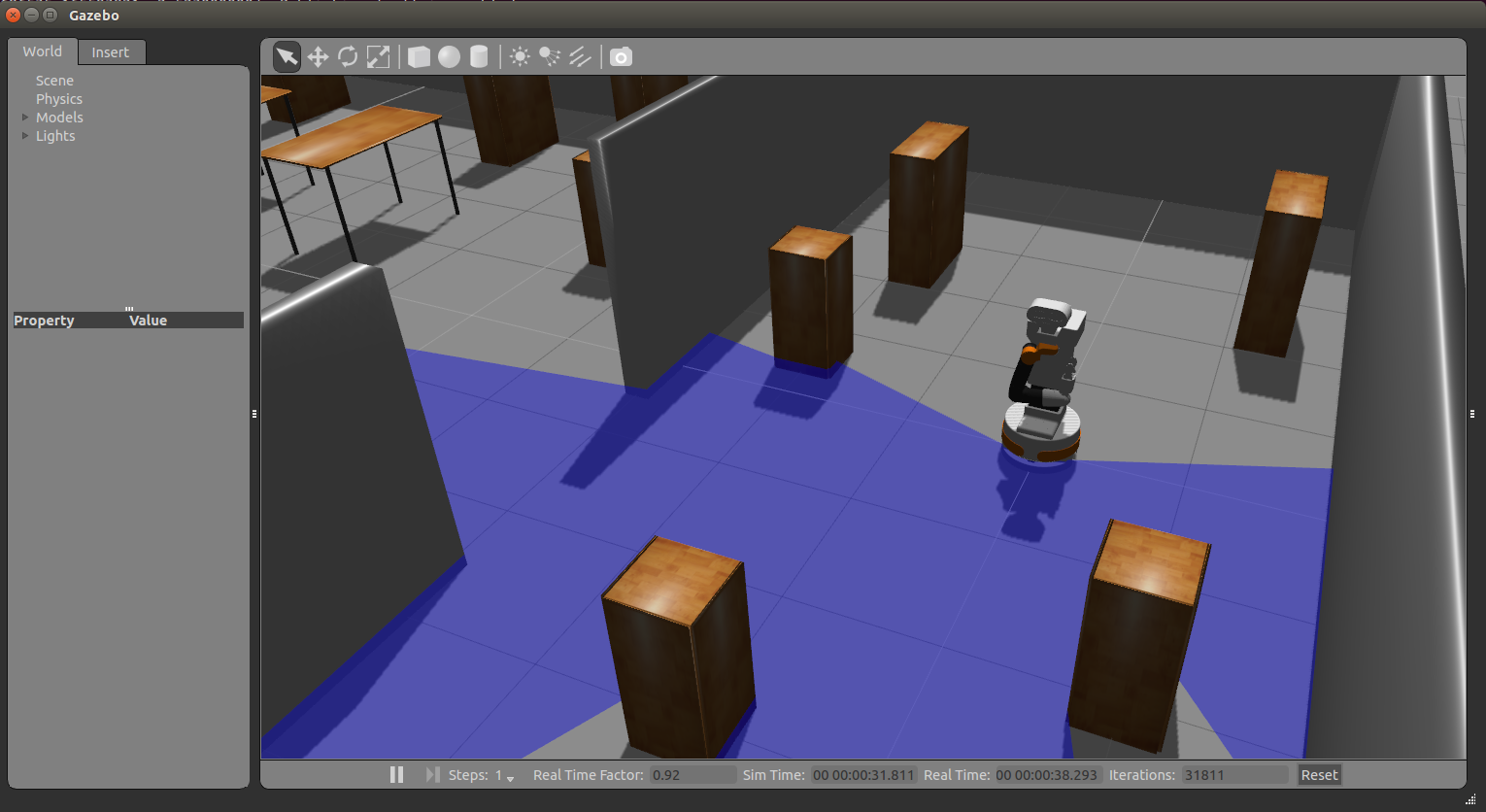

In order to maintain safety, it is highly recommended to navigate with the arms folded and the torso at a low extension, like in the predefined Home configuration, see figure below. This pose provides the following advantages:

Reduces the robot’s footprint, lowering the probability that the arms collide with the environment

Ensures the robot’s stability as the center of mass is close to the center of the robot and it is kept low

Figure: Fall prevention measure 4¶

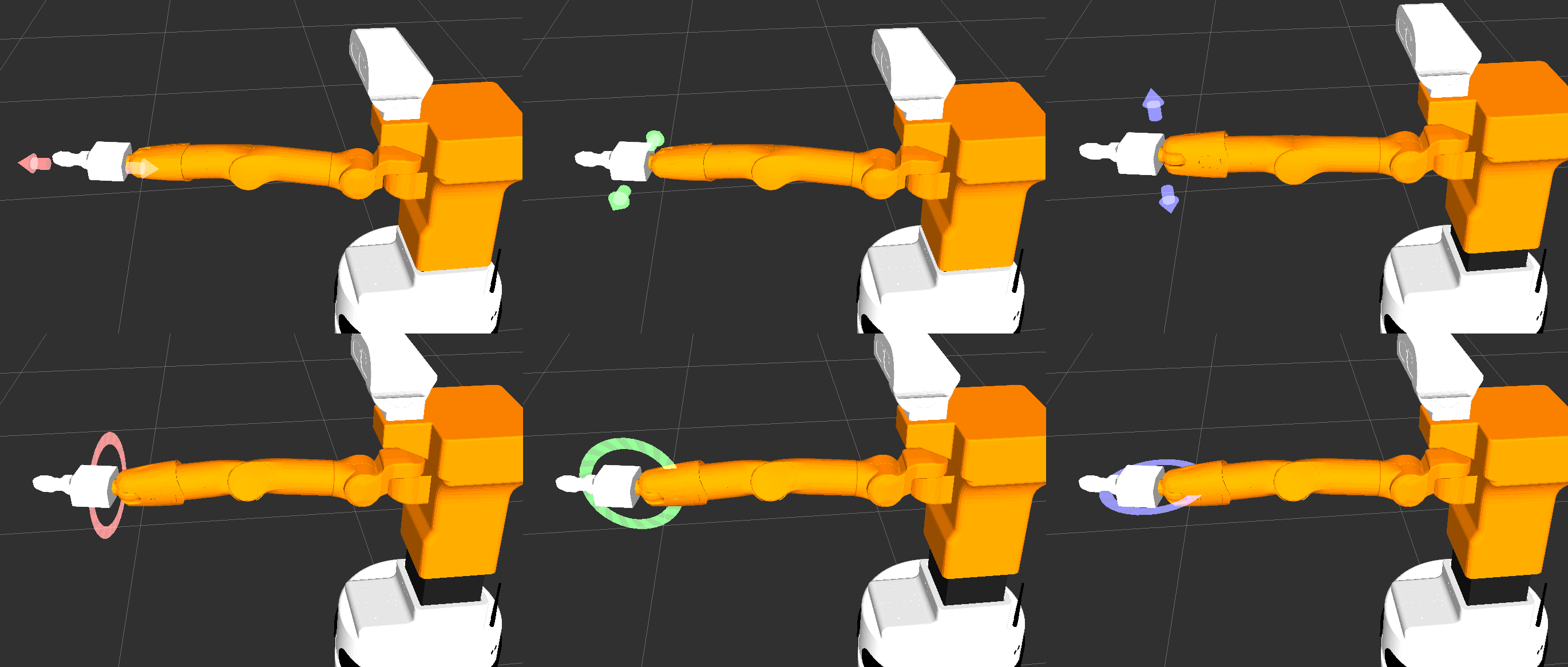

7.5 Measures to prevent collisions

Most collisions occur when moving TIAGo++’s arms. It is important to take the following measures into account in order to minimize the risk of collisions.

7.5.1 Measure 1

Make sure that there are no obstacles in the robot’s surroundings when playing back a predefined motion or moving the joints of the arms. Given that the maximum reach of the arm is 86 cm without the end-effector, a safe way to move the arms is to keep a clearance of about 1.5 m around the robot.

7.5.2 Measure 2

Another active measure that can mitigate damage due to collisions is the collision detector node, found in the startup extras tab of the WebCommander. This node is, in fact, an example of how to implement safety using feedforward current control. When enabled, it monitors the current consumption of the seven joints of each arm, and if a joint consumes more than a given threshold, it stops the motion.

While this node is running, the arms will not be able to handle some heavy objects, because the extra payload can raise the current consumption in some joints and abort the motion. In addition, the gravity compensation mode generates some noise in the current when switching from current to position control. If the collision detector is active, this noise can also abort the motion.

This safety measure is not available when Whole Body Control is running.

This node should not be used as a final safety measure. It is only an example and has not been fully tested as such.
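The threshold logic described above can be illustrated with a short Bash sketch. It is not the collision detector node shipped with the robot. The function name `first_joint_over_limit`, the use of a single limit for all joints, and passing readings as command-line arguments are assumptions made for illustration. The function reports the first joint whose current magnitude exceeds the limit, which is the condition under which the node described above stops the motion.

```bash
#!/usr/bin/env bash
# Sketch of the threshold check behind a current-based collision detector.
# Hypothetical helper; not the node shipped with the robot.

# Usage: first_joint_over_limit <limit> <current_joint1> [<current_joint2> ...]
# Prints the 1-based index of the first joint whose |current| exceeds
# <limit> and returns 0; prints nothing and returns 1 if all are within it.
first_joint_over_limit() {
  local limit="$1"
  shift
  # awk does the floating-point comparison that bash arithmetic cannot.
  awk -v limit="$limit" 'BEGIN {
    for (i = 1; i < ARGC; i++) {
      c = ARGV[i] + 0
      # Compare magnitudes: the sign of a current depends on direction.
      if (c < 0) c = -c
      if (c > limit + 0) { print i; exit 0 }
    }
    exit 1
  }' "$@"
}
```

For example, `first_joint_over_limit 2.0 0.5 1.2 2.5 0.1` prints `3`, because the third reading is the first one above the 2.0 limit. A real detector would typically use a separate limit per joint, tuned to that joint's expected load.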

7.6 How to proceed when an arm collision occurs¶

Warning

As prevention measure, when doing movements with TIAGo++’s arms it is strongly recommended that there are two people overseeing the robot and ready to react in case of need of an emergency stop.

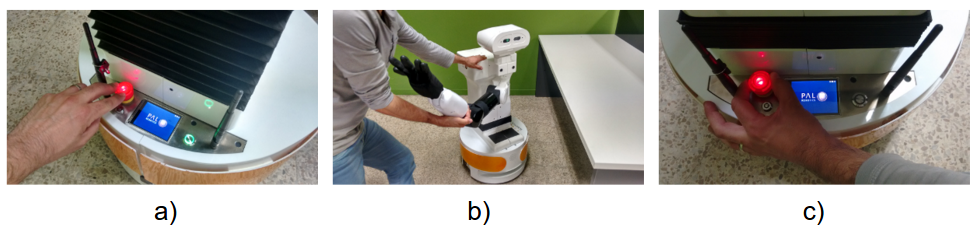

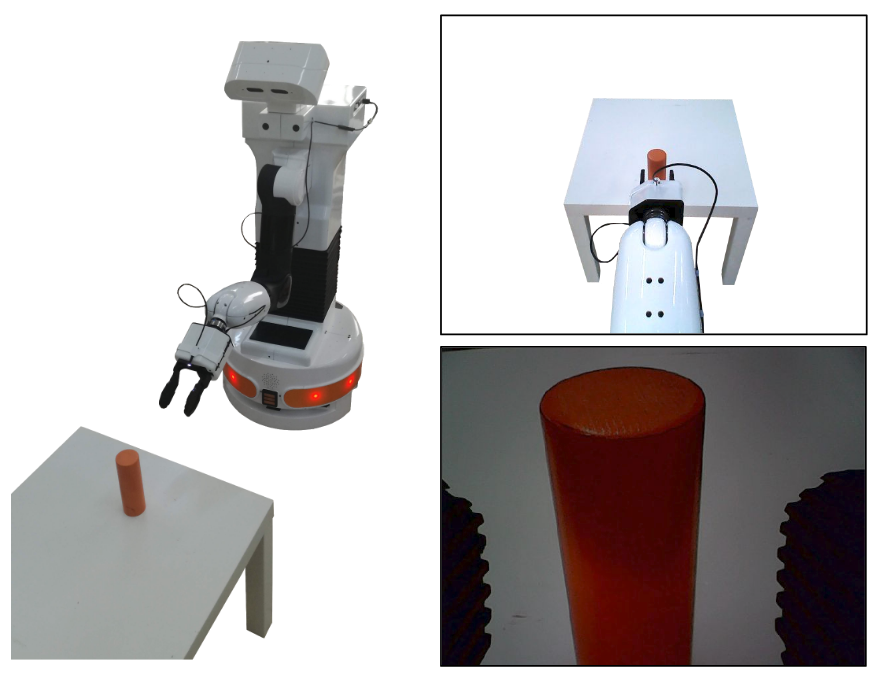

When any of the arms collide with the environment, see Figure: Example of arm collision onto a table, the motors of the arm will continue exerting force, which may cause potential damage to the environment or the arm covers. Serious damage to the motors will not occur, as they have integrated self-protection mechanisms to detect over-heating and overcurrent which switches them off automatically if necessary. Nevertheless, in order to minimize potential harm to the environment and to the robot, the following procedure should be undertaken, as depicted in Figure: How to proceed when a collision occurs:

Press the emergency button to power off the robot’s motors. The arms will fall down, so be ready to hold the wrists while the emergency button remains activated

Move the robot out of the collision by pushing the mobile base and pulling the arm to a safe place

Release the emergency button by rotating it clockwise according to the indications on the button until it pops out. When the On/Off button flashes, press it for 1 second

The power and control mode of the motors are restored and the robot is safe and operational

Figure: Example of arm collision onto a table¶

Figure: How to proceed when a collision occurs¶

7.7 Low battery shutdown

If the battery falls below a certain critical level, the current consumption is progressively reduced so that the arms fall down slowly, avoiding damage to the robot due to a blackout. Nevertheless, we recommend that users avoid working when the battery is very low, because the arms may collide with the environment when they fall, even slowly.

7.8 Firefighting equipment

For correct use of TIAGo++ in a laboratory or location with safety conditions, it is recommended to have a Class C or Class ABC fire extinguisher (based on halogenated products) in place, as these extinguishers are suitable for stifling an electrical fire.

If a fire occurs, please follow these instructions:

- Call the firefighters.
- Push the emergency stop button, as long as you can do so without any risk.
- Only tackle a fire in its very early stages.
- Always put your own and others’ safety first.
- Upon discovering the fire, immediately raise an alarm.
- Make sure the exit remains clear.

Fire extinguishers are only suitable for fighting a fire in its very early stages. Never tackle a fire if it is starting to spread or has spread to other items in the room, or if the room is filling with smoke.

If you cannot stop the fire or if the extinguisher runs out, get yourself and everyone else out of the building immediately, closing all doors behind you as you go. Then ensure the fire brigade are on their way.

7.9 Leakage

The battery is the only component of the robot that can leak. To avoid leakage of any substance from the battery, follow the instructions in section 4 Storage to ensure that the battery is handled and used correctly.

8 Robot Identification¶

The robot is identified by a physical label that can be found close to the power connector.

This label contains:

Business name and full address.

Designation of the machine.

Part Number (P.N.).

Year of construction.

Serial number (S.N.).

Figure: Identification label¶

9 Default network configuration¶

When shipped, or after a fresh re-installation, the robot is configured as an access point. The information about the robot's network is provided in the table below. Note that the SSID ends with the serial number of the robot; in the example below, the serial number is 0.

| Parameter | Value |
|---|---|
| SSID | tiago-0 |
| Channel | 1 |
| Mode key | WPA-PSK |
| Password | |
| Robot IP | 10.68.0.1 |

The address range 10.68.0.0/24 is reserved. The robot's computer name is tiago-Xc, where X is the serial number without leading zeros. The alias control is also defined to refer to the robot's computer when the robot is set as an access point or when using a direct connection, i.e. an Ethernet cable between the robot and the development computer.
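The naming rules above can be expressed as a small Python sketch, for instance to generate host names in scripts that manage several robots. It assumes the serial number is available as a decimal string (e.g. "012") and that the SSID keeps the serial exactly as printed, which the single example in the table cannot confirm. The helper names are hypothetical and are not part of the PAL software.

```python
import ipaddress

# Reserved robot network from the text above, in CIDR notation.
ROBOT_NETWORK = ipaddress.ip_network("10.68.0.0/24")


def robot_hostname(serial: str) -> str:
    """Computer name: 'tiago-' + serial without leading zeros + 'c'."""
    digits = serial.lstrip("0") or "0"  # serial "0" keeps a single zero
    return f"tiago-{digits}c"


def default_ssid(serial: str) -> str:
    """Access-point SSID, assuming the 'tiago-' prefix of the example (hypothetical)."""
    return f"tiago-{serial}"


def in_robot_network(ip: str) -> bool:
    """True if the address lies inside the reserved 10.68.0.0/24 range."""
    return ipaddress.ip_address(ip) in ROBOT_NETWORK
```

For example, `robot_hostname("012")` gives `tiago-12c`. `in_robot_network()` can also be used to check that an address you plan to assign to a development computer does not clash with the reserved range.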

10 Software recovery¶

10.1 Overview¶

This section explains the System and Software reinstall procedure for TIAGo++.

10.2 Robot computer installation¶

To begin the installation process, connect a monitor to the HDMI connector and plug in a USB keyboard. See section 3.2.8 Service panel for information about the service panel.

BIOS configuration: Some options in the BIOS of the control computer must be configured as follows:

Turn on the robot and press F2 repeatedly. Wait until the BIOS menu appears.

Enter Advanced Mode by pressing F7.

- In the Advanced > CPU Configuration menu:

Set Intel Virtualization Technology to Disabled.

- In the Advanced > CPU Configuration > CPU Power Management Configuration menu:

Set Intel(R) SpeedStep (tm) to Disabled.

Set CPU C-states to Disabled.

- In the Boot > Boot Configuration menu:

Set Wait for ‘F1’ if Error to Disabled.

Go to Exit and select Save Changes & Reset.

Shut down the robot.

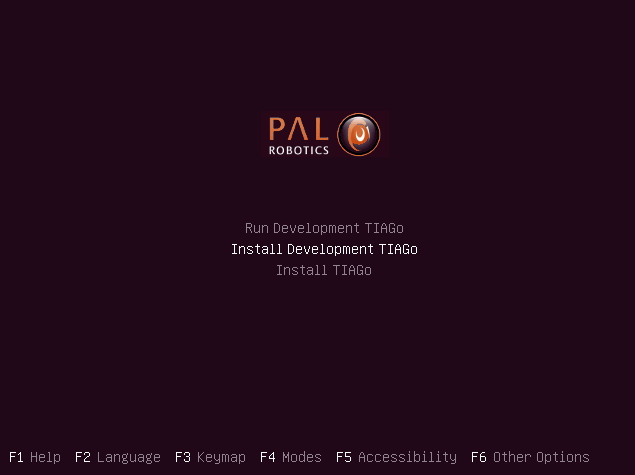

Installation: The installation is performed using the Software USB drive provided with TIAGo++.

Insert the Software USB drive.

Turn on the robot and press F2 repeatedly. Wait until the BIOS menu appears.

Enter the Boot Menu by pressing F8 and select the Software USB drive.

The Language menu will pop up. Select English.

The menu shown in Figure: System installation menu will appear.

Select Install TIAGo++.

Select the keyboard layout by following the instructions.

10.3 Development computer installation¶

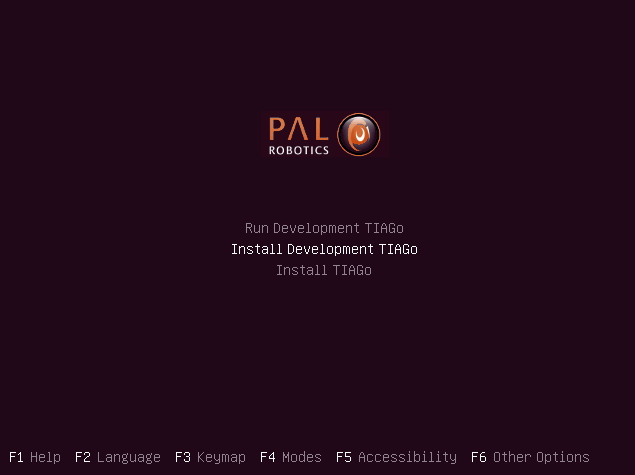

Hardware installation: Connect the computer to the electric plug, the mouse and the keyboard. Internet access is not required as the installation is self-contained.

Figure: System installation menu¶

Software Installation: The installation is performed using the Software USB drive provided with TIAGo++.

Insert the Software USB drive.

Turn on the computer, access the BIOS and boot the Software USB drive.

The Language menu will pop up. Select English.

The menu shown in the figure below will appear.

Figure: System installation menu¶

Choose Run Development TIAGo++ if you wish to run the development computer without installing it or to install it in a specific partition. Using the Install Development TIAGo++ option will delete all partitions in the disk and automatically install the system in a new partition.

Select the keyboard layout by following the instructions.

11 TIAGo++ Robot’s Internal Computers¶

11.1 TIAGo++ LAN¶

The name of TIAGo++’s computer is tiago-0c, where 0 needs to be replaced by the serial number of your robot. For the sake of clarity, hereafter we will use tiago-0c to refer to TIAGo++’s computer name.

In order to connect to the robot, use ssh as follows:

ssh pal@tiago-0c

11.2 File system¶

The TIAGo++ robot’s computer has a protection against power failures that could corrupt the filesystem.

These partitions are created:

/: This is a union partition: the disk is mounted read-only in the /ro directory, and all changes are stored in RAM. Therefore, changes are not persistent between reboots.

/home: This partition is read-write. Changes are persistent between reboots.

/var/log: This partition is read-write. Changes are persistent between reboots.
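Whether a file will survive a reboot in the default read-only mode follows directly from this list. The hypothetical helper below encodes that rule. It assumes absolute, already-normalized paths (no `..` components) and that the robot is not in the `rw` + `chroot /ro` mode described next.

```python
from pathlib import PurePosixPath

# Read-write partitions whose contents survive a reboot (see the list above).
PERSISTENT_MOUNTS = (PurePosixPath("/home"), PurePosixPath("/var/log"))


def survives_reboot(path: str) -> bool:
    """True if a file written at `path` in the default mode is kept after a
    reboot, i.e. it lies under /home or /var/log. Anything else ends up in
    the RAM-backed layer of / and is lost."""
    p = PurePosixPath(path)
    return any(p == mount or mount in p.parents for mount in PERSISTENT_MOUNTS)
```

For example, edits to `/etc/ntp.conf` are lost unless they are made through the `rw` procedure below, whereas `/home/pal/.bashrc` keeps its changes.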

In order to work with the filesystem as read-write do the following:

root@tiago-0c:~# rw

Remounting as rw...

Mounting /ro as read-write

Binding system files...

root@tiago-0c:~# chroot /ro

The rw command remounts all partitions as read-write. Then, after a chroot to /ro, the system is the same as the default one but fully writable. All changes made there are persistent.

In order to return to the previous state do the following:

root@tiago-0c:~# exit

root@tiago-0c:~# ro

Remount /ro as read only

Unbinding system files

The exit command first leaves the chroot. The ro script then remounts the partitions in their default way.

11.3 Internal DNS¶

The control computer has a DNS server that is used for the internal LAN of the TIAGo++ with the domain name reem-lan. This DNS server is used by all the computers connected to the LAN.

When a computer is added to the internal LAN (using the Ethernet connector, for example) it can be added to the internal DNS with the command addLocalDns:

root@tiago-0c:~# addLocalDns -h

-h shows this help

-u DNSNAME dns name to remove

Example: addLocalDns -u terminal

The same command can be used to modify the IP of a name: if the dnsname exists in the local DNS, the IP address is updated.

To remove names from the local DNS, use the command delLocalDns:

root@tiago-0c:~# delLocalDns -h

-h shows this help

-u DNSNAME dns name to remove

Example: delLocalDns -u terminal

These additions and removals in the local DNS are not persistent between reboots.

11.4 NTP¶

Since big jumps in the local time can have undesired effects on the robot applications, NTP is set up when the robot starts, before the ROS master is launched. If no synchronization is possible, for example if the NTP servers are offline, the NTP daemon is stopped after a timeout.

To set up ntp as a client, edit the /etc/ntp.conf file and add your desired NTP servers. You can use your own local time servers or external ones, such as ntp.ubuntu.com. You can also try uncommenting the default servers that are already present.

For example, if the local time server is at 192.168.1.6, add the following to the configuration file:

server 192.168.1.6 iburst
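When several robots or PCs need the same change, the edit can be scripted. The sketch below shows one possible approach and is not a PAL tool. It appends the `server ... iburst` line to the text of `/etc/ntp.conf` only if no active (uncommented) entry for that address already exists, so running it twice is safe. Reading and writing the actual file (as root, on the persistent partition) is left to the caller.

```python
def add_ntp_server(conf_text: str, server: str) -> str:
    """Return ntp.conf contents with 'server <addr> iburst' appended, unless
    an active (uncommented) server line for that address already exists."""
    for line in conf_text.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0] == "server" and fields[1] == server:
            return conf_text  # already configured; leave the text untouched
    if conf_text and not conf_text.endswith("\n"):
        conf_text += "\n"  # make sure the new entry starts on its own line
    return conf_text + f"server {server} iburst\n"
```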

Restart the ntp daemon to test your servers.

systemctl restart ntp.service

Run the ntpq -p command and check that at least one of the configured servers has a nonzero reach value and a nonzero offset value.
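The `ntpq -p` check can also be automated. This parser is a sketch that assumes the classic 10-column layout (`remote refid st t when poll reach delay offset jitter`), one peer per line, and the tally code (`*`, `+`, space, ...) in the first column. The `reach` column is an octal register, so it is parsed with base 8. Very long host names that `ntpq` wraps onto two lines are simply skipped.

```python
from typing import List


def usable_ntp_peers(ntpq_output: str) -> List[str]:
    """Return the peers in `ntpq -p` output with nonzero reach and offset."""
    lines = ntpq_output.splitlines()
    # Peer rows start right after the '=====' separator line.
    sep = next((i for i, line in enumerate(lines) if line.startswith("=")), None)
    if sep is None:
        return []
    peers = []
    for line in lines[sep + 1:]:
        fields = line[1:].split()  # column 0 holds the tally code
        if len(fields) != 10:
            continue  # blank or wrapped line
        remote, reach, offset = fields[0], fields[6], fields[8]
        if int(reach, 8) != 0 and float(offset) != 0.0:
            peers.append(remote)
    return peers
```

The text to parse can be obtained with `subprocess.run(["ntpq", "-p"], capture_output=True, text=True).stdout`. An empty result means none of the configured servers is usable yet.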

The corrected date can be consulted with the date command.

Once the desired configuration is working, make the changes to /etc/ntp.conf persistent (see 11.2 File system) and reboot the robot.

If, on the contrary, you want the robot to act as the NTP server of your network, no changes are needed. The current ntp daemon already acts as server. You will only need to configure NTP for the clients.

To configure NTP on the other clients, such as the development PCs, first check whether the ntp service is installed:

systemctl status ntp.service

If the service is active, follow the previous steps to configure the ntp daemon. Once again, a private or public NTP server can be used. If, instead, you want the robot to act as the server, add this line to /etc/ntp.conf:

server tiago-0c iburst

If the service is not found, ntp is not installed. Either install it with apt-get install ntp or use Ubuntu's default NTP client, timesyncd.

To configure timesyncd, edit the /etc/systemd/timesyncd.conf file and set the appropriate NTP server.
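The timesyncd setting lives in the `[Time]` section under the `NTP=` key, which takes a space-separated list of servers. Below is a minimal sketch that renders such a file with Python's `configparser`. Note that it produces a fresh file rather than merging into the existing one, whose default content is mostly comments. `tiago-0c` is only the example host name used throughout this handbook.

```python
import configparser
import io
from typing import List


def timesyncd_conf(servers: List[str]) -> str:
    """Render a minimal timesyncd.conf that points to the given NTP servers."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep 'NTP' as-is; the default lowercases keys
    parser["Time"] = {"NTP": " ".join(servers)}
    buf = io.StringIO()
    parser.write(buf, space_around_delimiters=False)  # writes 'NTP=...'
    return buf.getvalue()
```

For example, `timesyncd_conf(["tiago-0c"])` yields a `[Time]` section containing `NTP=tiago-0c`, which makes the development PC use the robot as its time server.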

Restart the timesyncd daemon.

systemctl restart systemd-timesyncd.service

Check the corrected date with the date command. The time update can take a few seconds.

11.5 System upgrade¶

To perform a system upgrade, connect to the robot, make sure it has Internet access, and run the pal_upgrade command as the root user.

This will install the latest TIAGo++ software available from the PAL repositories.

Reboot the robot after the upgrade is complete.

11.6 Firmware update¶

To update firmware, use the application described in section 11.5 System upgrade. Check for updates for the pal-ferrum-firmware-* packages and install them.

Before running the script, place the arm in a safe position with a support underneath it, because the arm may fall while the firmware is being installed.

Run the update_firmware.sh script, as shown below. The update will take a few minutes.

pal@tiago-0c:~# rosrun firmware_update_robot update_firmware.sh

Finally, shut the robot down completely, power it off with the electric switch, and then power it up again, as described in 3.2.7 User panel.

11.7 Meltdown and Spectre vulnerabilities¶

Meltdown and Spectre exploit critical vulnerabilities in modern processors.

Fortunately, the Linux kernel has been patched to mitigate these vulnerabilities; this mitigation comes at a slight performance cost.

The PAL Robotics configuration does not interfere with the mitigation: whenever the installed kernel provides mitigation, our software configuration does not disable it.

Below are some guidelines for disabling the mitigation in order to recover the lost performance. PAL Robotics does not recommend this, and it is done at the customer's own risk.

On this website the different tunables for disabling mitigation controls are displayed.

These kernel flags must be added to the GRUB_CMDLINE_LINUX variable in /etc/default/grub. After changing them, run update-grub and reboot the computer.
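Editing `GRUB_CMDLINE_LINUX` by hand is error-prone, so here is a sketch of the text transformation in Python. `mitigations=off` is used only as an example value (it is recognized by Linux 5.2 and later). Check which tunables your kernel actually supports before applying anything, and remember that `update-grub` and a reboot are still required afterwards.

```python
import re

# Matches GRUB_CMDLINE_LINUX="..." but not GRUB_CMDLINE_LINUX_DEFAULT.
CMDLINE = re.compile(r'^GRUB_CMDLINE_LINUX="(.*)"$', re.MULTILINE)


def add_kernel_flag(grub_text: str, flag: str) -> str:
    """Append `flag` to GRUB_CMDLINE_LINUX in /etc/default/grub contents,
    leaving the text unchanged if the flag is already present."""
    match = CMDLINE.search(grub_text)
    if match is None:
        raise ValueError("GRUB_CMDLINE_LINUX line not found")
    flags = match.group(1).split()
    if flag in flags:
        return grub_text
    start, end = match.span(1)  # position of the quoted value
    return grub_text[:start] + " ".join(flags + [flag]) + grub_text[end:]
```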

These changes need to be made in the persistent partition, as indicated in 11.2 File system.

Be extremely careful when performing these changes, since they can prevent the system from booting properly.

12 Development Computer¶

12.1 Overview¶

The operating system used in the SDE Development Computer is based on a Linux Ubuntu distribution. Any documentation related to this specific Linux distribution applies to SDE. This document only points out how the PAL SDE differs from standard Ubuntu.

12.2 Computer requirements¶

A computer with 8 CPU cores is recommended. A powerful graphics card and a display with a resolution of at least 1920x1080 pixels are recommended for a better user experience with visualization tools such as rviz and the Gazebo simulator. The development computer ISO provides support for Nvidia cards. If the kernel of the development computer is upgraded, PAL Robotics cannot ensure proper support for other graphics cards.

12.3 Setting ROS environment¶

In order to use the ROS commands and packages provided in the development ISO, the following commands need to be executed each time a new console is opened:

# Pal distro environment variable

export PAL_DISTRO=gallium

export ROS_DISTRO=noetic

source /opt/pal/${PAL_DISTRO}/setup.bash

If you are using a ROS 2 distribution:

# Pal distro environment variable

export PAL_DISTRO=alum

export ROS_DISTRO=humble

source /opt/pal/${PAL_DISTRO}/setup.bash

To avoid running these commands every time, append them to the /home/pal/.bashrc file.
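The two environment blocks above differ only in the distribution names, so the lines to append to `.bashrc` can be generated. This sketch hard-codes the gallium/noetic and alum/humble pairs given in this section. The helper names are hypothetical. It deliberately refuses to add a second `source` line, so that ROS 1 and ROS 2 setups are not mixed in the same shell.

```python
from pathlib import Path
from typing import List

# Distribution pairs taken from the two examples above.
PAL_DISTROS = {"ros1": ("gallium", "noetic"), "ros2": ("alum", "humble")}
SOURCE_LINE = "source /opt/pal/${PAL_DISTRO}/setup.bash"


def bashrc_lines(ros_version: str) -> List[str]:
    """Return the environment lines for 'ros1' or 'ros2'."""
    pal_distro, ros_distro = PAL_DISTROS[ros_version]
    return [
        "# Pal distro environment variable",
        f"export PAL_DISTRO={pal_distro}",
        f"export ROS_DISTRO={ros_distro}",
        SOURCE_LINE,
    ]


def append_to_bashrc(bashrc: Path, lines: List[str]) -> bool:
    """Append `lines` unless a PAL setup line is already present."""
    existing = bashrc.read_text() if bashrc.exists() else ""
    if SOURCE_LINE in existing.splitlines():
        return False
    with bashrc.open("a") as fh:
        if existing and not existing.endswith("\n"):
            fh.write("\n")
        fh.write("\n".join(lines) + "\n")
    return True
```

A typical call would be `append_to_bashrc(Path("/home/pal/.bashrc"), bashrc_lines("ros1"))`.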

12.4 ROS communication with the robot¶

When developing ROS-based applications for robots, the rosmaster typically runs on the robot's computer, and the development computer runs ROS nodes connected to the robot's rosmaster. This is achieved by setting the following environment variable in each terminal of the development computer that runs ROS nodes:

export ROS_MASTER_URI=http://tiago-0c:11311

Note that in order to successfully exchange ROS messages between different computers, each of them needs to be able to resolve the hostname of the others. This means that the robot computer needs to be able to resolve the hostname of any development computer and vice versa. Otherwise, ROS messages will not be properly exchanged and unexpected behavior will occur.

Do the following checks before starting to work with a development computer running ROS nodes that point to the rosmaster of the robot:

ping tiago-0c

Make sure that the ping command reaches the robot’s computer.

Then do the same from the robot:

ssh pal@tiago-0c

ping devel_computer_hostname

If ping does not reach the development computer, add its hostname to the local DNS of the robot, as explained in 11.3 Internal DNS. Alternatively, you can export the environment variable ROS_IP, set to the IP of the development computer that is visible from the robot. For example, if the robot is set as an access point, and the development computer is connected to it and has been given the IP 10.68.0.128 (use ifconfig to find it), use the following commands in all terminals used to communicate with the robot:

export ROS_MASTER_URI=http://tiago-0c:11311

export ROS_IP=10.68.0.128

All ROS commands sent will then use the computer’s IP rather than the hostname.
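The resolution check and the resulting exports can be scripted on the development computer. This sketch rests on two assumptions. First, it only tests resolution in one direction; the robot must still be able to resolve or reach the development computer, as explained above. Second, the address passed as `dev_ip` is whatever `ifconfig` reports for your machine; `10.68.0.128` is just the example from the text.

```python
import ipaddress
import socket
from typing import Dict, Optional


def resolves(hostname: str) -> bool:
    """True if this machine can resolve `hostname`, as ping does first."""
    try:
        socket.gethostbyname(hostname)
        return True
    except OSError:  # socket.gaierror is a subclass of OSError
        return False


def ros_env(master_host: str, dev_ip: Optional[str] = None, port: int = 11311) -> Dict[str, str]:
    """Build ROS_MASTER_URI and, if given, ROS_IP for a development terminal."""
    env = {"ROS_MASTER_URI": f"http://{master_host}:{port}"}
    if dev_ip is not None:
        ipaddress.ip_address(dev_ip)  # raises ValueError on a malformed address
        env["ROS_IP"] = dev_ip
    return env


def export_lines(env: Dict[str, str]) -> str:
    """Render the variables as shell export commands."""
    return "\n".join(f"export {key}={value}" for key, value in env.items())
```

For instance, `export_lines(ros_env("tiago-0c", "10.68.0.128"))` reproduces the two export commands shown above, in the same order.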

12.5 Compiling software¶

The development computer includes the ROS messages, system headers and our C++ open source headers necessary to compile and deploy software to the robot.

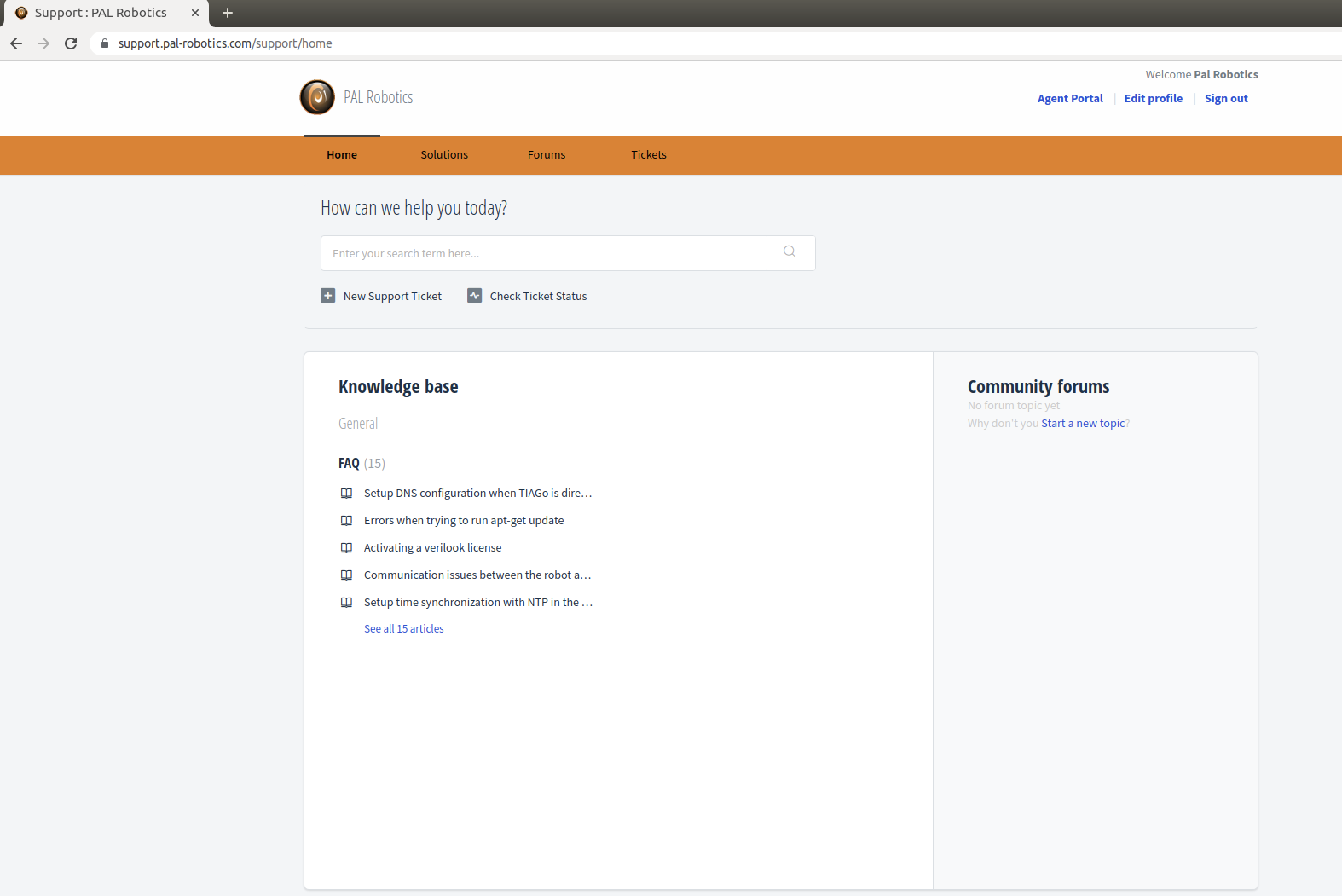

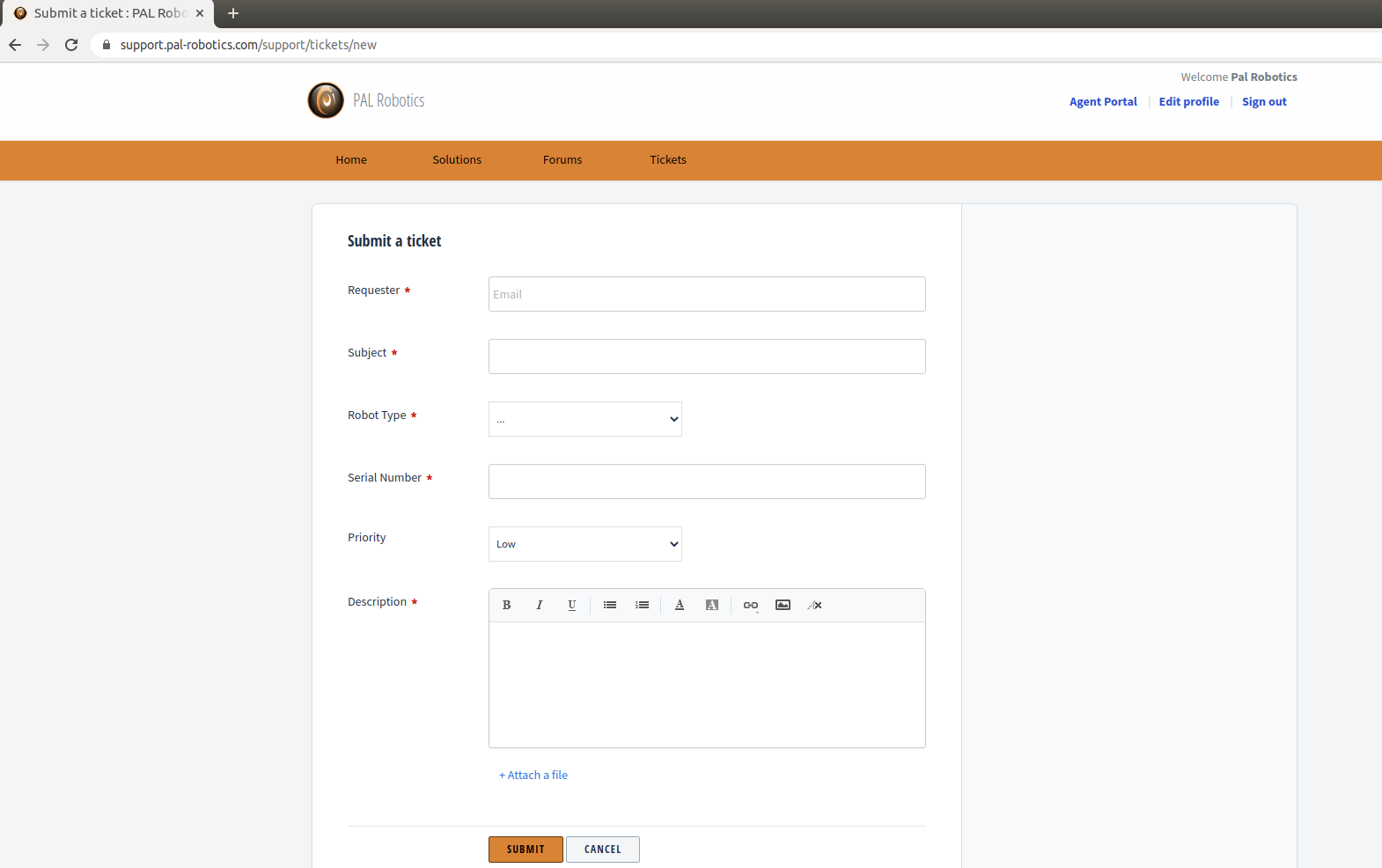

Some of the software APIs that we have developed are proprietary, and their headers are not included by default. If you require them, contact us through our customer service portal; they will be provided after a non-disclosure agreement has been signed. These APIs give access to advanced features that are not available through a ROS API.

12.6 System Upgrade¶

In order to upgrade the software of the development computers, use the pal_upgrade_chroot.sh command. Log in as root and execute:

root@development:~# /opt/pal/${PAL_DISTRO}/lib/pal_debian_utils/pal_upgrade_chroot.sh

Notifications will appear whenever software upgrades are available.

12.7 NTP¶

Please follow the instructions in section 11.4 NTP.

13 WebCommander¶

The WebCommander is a web page hosted by TIAGo++. It can be accessed from any modern web browser that is able to connect to TIAGo++.

It is an entry point for some monitoring tasks, as well as for configuration tasks that require a Graphical User Interface (GUI).

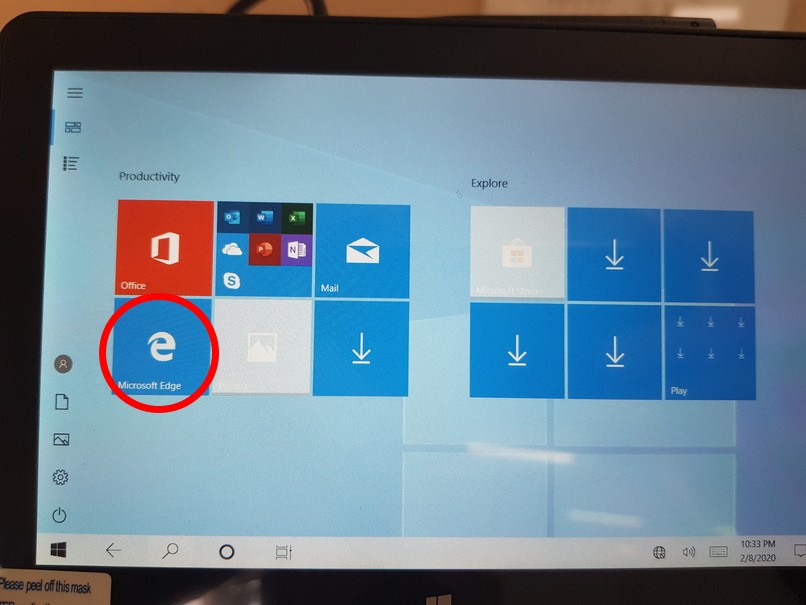

13.1 Accessing the WebCommander website¶

Ensure that the device you want to use to access the website is on the same network and able to connect to TIAGo++.

Open a web browser and type in the address bar the host name or IP address of TIAGo++’s control computer, and try to access port 8080:

If you are connected directly to TIAGo++, which means using robot as access point, you can also use:
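Since the right address depends on how you are connected, a short script can try the likely candidates in turn. In this sketch, the candidates are derived from section 9 Default network configuration (the `control` alias and the default access-point address `10.68.0.1`), plus port 8080. Whether these match your setup is an assumption. Any HTTP answer, even an error status, is taken as proof that the server is up.

```python
import urllib.error
import urllib.request
from typing import List, Optional

WEBCOMMANDER_PORT = 8080


def webcommander_urls(hostname: str, access_point: bool) -> List[str]:
    """Candidate WebCommander URLs, most specific first."""
    hosts = [hostname]
    if access_point:
        # Direct connection: 'control' alias and default AP address (section 9).
        hosts += ["control", "10.68.0.1"]
    return [f"http://{host}:{WEBCOMMANDER_PORT}" for host in hosts]


def first_reachable(urls: List[str], timeout: float = 2.0) -> Optional[str]:
    """Return the first URL that answers over HTTP, or None."""
    for url in urls:
        try:
            with urllib.request.urlopen(url, timeout=timeout):
                return url
        except urllib.error.HTTPError:
            return url  # the server answered, just with an error status
        except OSError:
            continue  # name not resolvable, connection refused, or timed out
    return None
```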

13.2 Overview¶

The WebCommander website contains visualizations of the state of TIAGo++’s hardware, applications and installed libraries, as well as tools to configure parts of its behaviour.

13.3 Default tabs¶

TIAGo++ comes with a set of preprogrammed tabs that are described in this section. These tabs can also be modified and extended, as explained in section 13.4 Tab configuration. Each tab is an instance of a WebCommander plugin.

For each tab, a description and the plugin type used to create it are given.

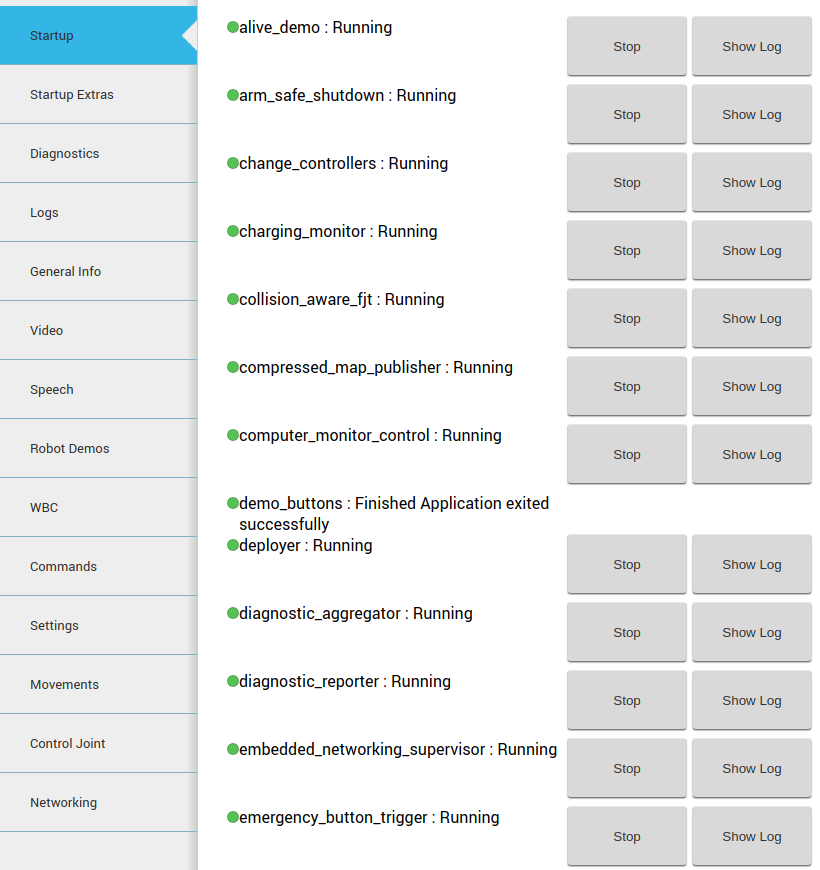

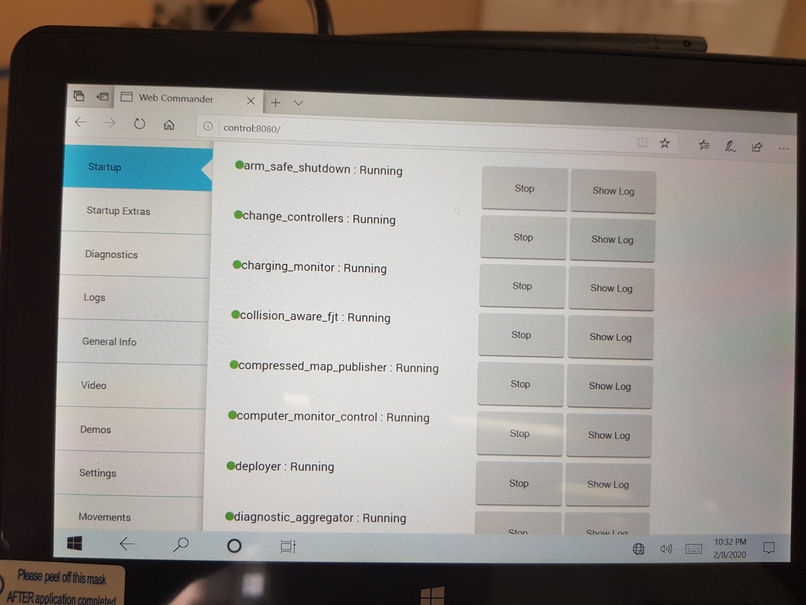

13.3.1 The startup tab¶

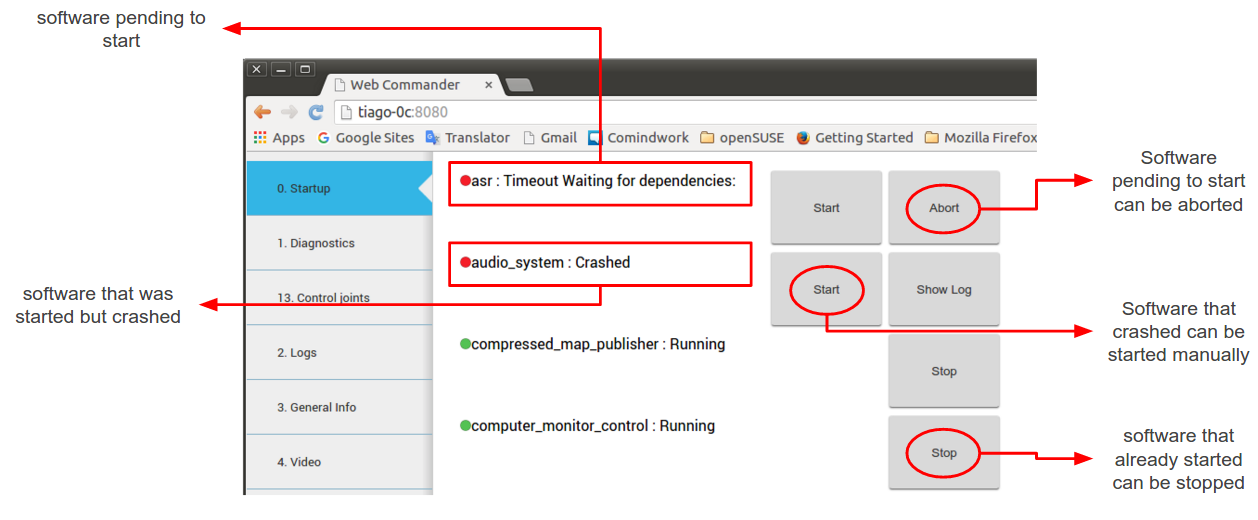

Plugin: Startup

Description: Displays the list of PAL software that is configured to be started in the robot, and whether it has been started or not.

Each application, or group of applications that provide a functionality, can choose to specify a startup dependency on other applications or group of applications. There are three possible states:

Green: All dependencies satisfied, application launched.

Yellow: One or more dependencies missing or in error state, but still within a reasonable time. Application not launched.

Red: One or more dependencies missing or in error state, and the maximum wait time has elapsed. Application not launched.
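The three colours amount to a small decision rule. The sketch below models it with hypothetical names. In particular, the per-application maximum wait time is an assumption about how the rule is parameterized, since this section does not say where that limit is configured.

```python
from enum import Enum
from typing import Dict


class StartupState(Enum):
    GREEN = "launched"
    YELLOW = "waiting for dependencies"
    RED = "gave up waiting for dependencies"


def startup_state(deps_ok: Dict[str, bool], waited_s: float, max_wait_s: float) -> StartupState:
    """deps_ok maps each dependency to True if it is running without errors."""
    if all(deps_ok.values()):
        return StartupState.GREEN
    # Some dependency is missing or failing: wait until the limit, then give up.
    return StartupState.YELLOW if waited_s < max_wait_s else StartupState.RED
```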

Additionally, there are two buttons to the right of each application. If the application is running, a “Stop” button is displayed, which stops the application when pressed. If the application is stopped or has crashed, a “Start” button is displayed instead, which starts the application when pressed. The “Show Log” button displays the log of the application.

Figure: The startup tab displays the launched applications and their dependencies¶

13.3.2 The startup extras tab¶

Plugin: Startup

Description: This tab is optional. If present, it contains a list of PAL software that is not started by default when the robot boots. These are optional features that the user needs to start manually.

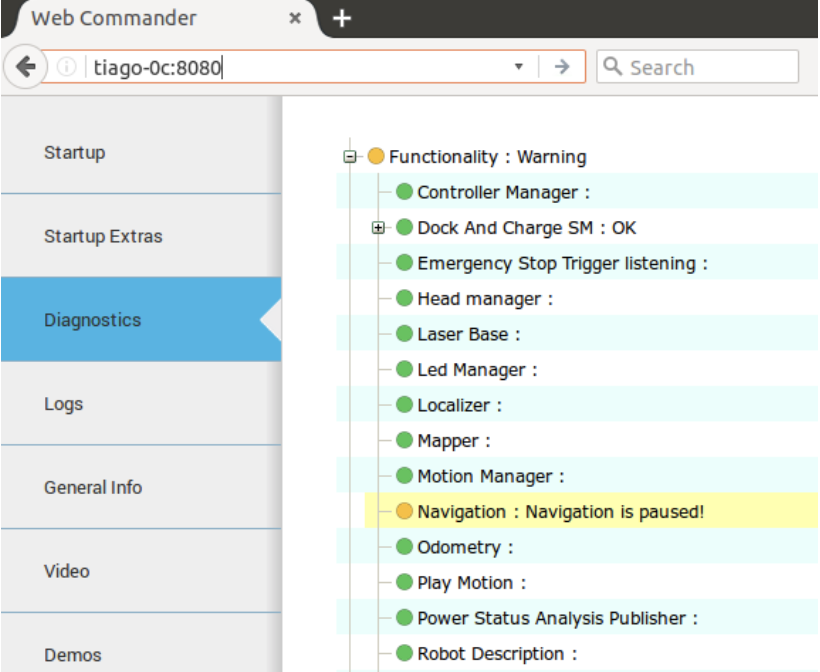

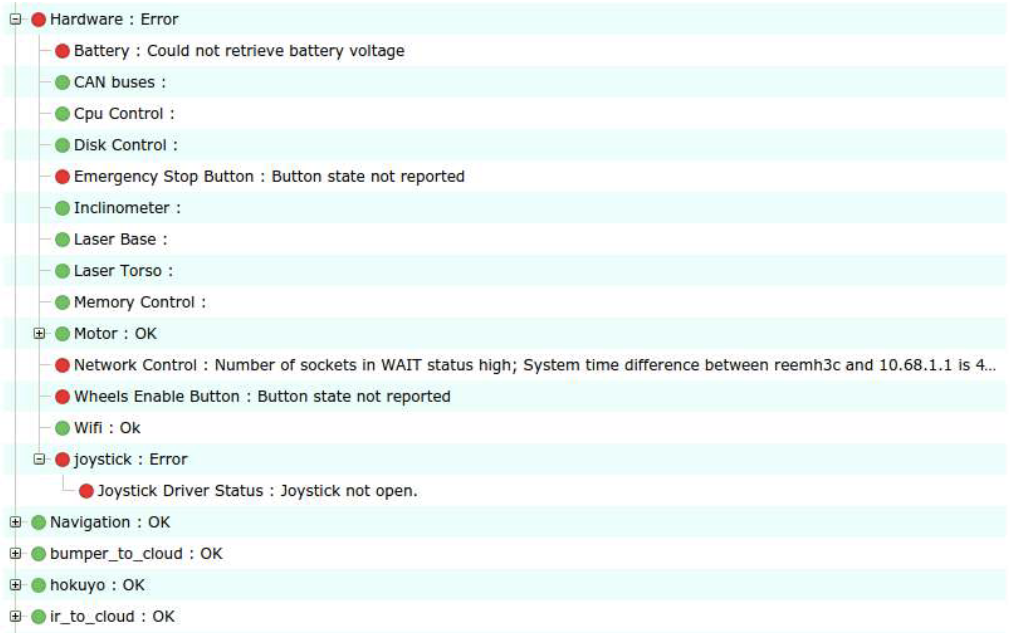

13.3.3 Diagnostics tab¶

Plugin: Diagnostics

Description: Displays the current status of TIAGo++’s hardware and software.

The data is organized in a hierarchical tree. The first level contains the hardware and functionality categories.

The functionalities are the software elements that run in TIAGo++, such as vision or text to speech applications.

Hardware diagnostics contain the hardware’s status, readings and possible errors.

Inside the hardware and functionality categories, there’s an entry for each individual functionality or device. Some devices are grouped together (motors, sonars), but each device can still be seen in detail.

The color of the dots indicates the status of the application or component.

Green: No errors detected.

Yellow: One or more anomalies detected, but they are not critical.

Red: One or more errors were detected which can affect the behaviour of the robot.

Black: Stale, no information about the status is being provided
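These four colours line up with the four levels of the standard ROS `diagnostic_msgs/DiagnosticStatus` message (OK, WARN, ERROR, STALE). The sketch below assumes the tab maps them one-to-one, which is consistent with this section but not stated by it. Under that assumption, a script reading `/diagnostics` could summarise devices the same way. The function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List

# Level values of diagnostic_msgs/DiagnosticStatus.
OK, WARN, ERROR, STALE = 0, 1, 2, 3
DOT_COLOR = {OK: "green", WARN: "yellow", ERROR: "red", STALE: "black"}


def group_by_color(statuses: Dict[str, int]) -> Dict[str, List[str]]:
    """Group device or functionality names by the colour of their dot."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for name, level in sorted(statuses.items()):
        groups[DOT_COLOR[level]].append(name)
    return dict(groups)
```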

An example of this display is shown in the figure below. The status of a particular category can be expanded by clicking on the “+” symbol to the left of the name of the category. This will provide information specific to the device or functionality. If there’s an error, an error code will be shown.

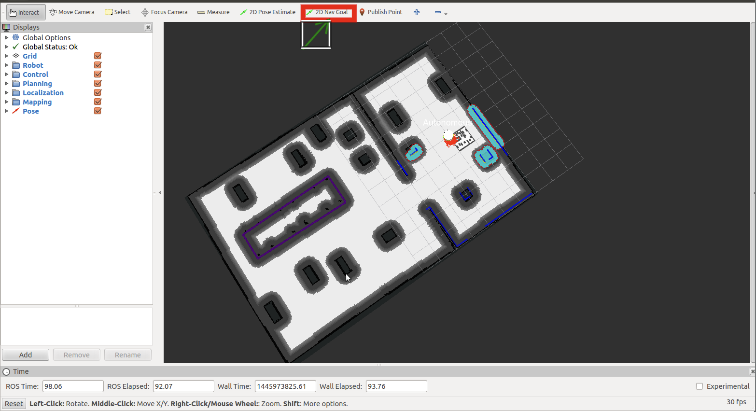

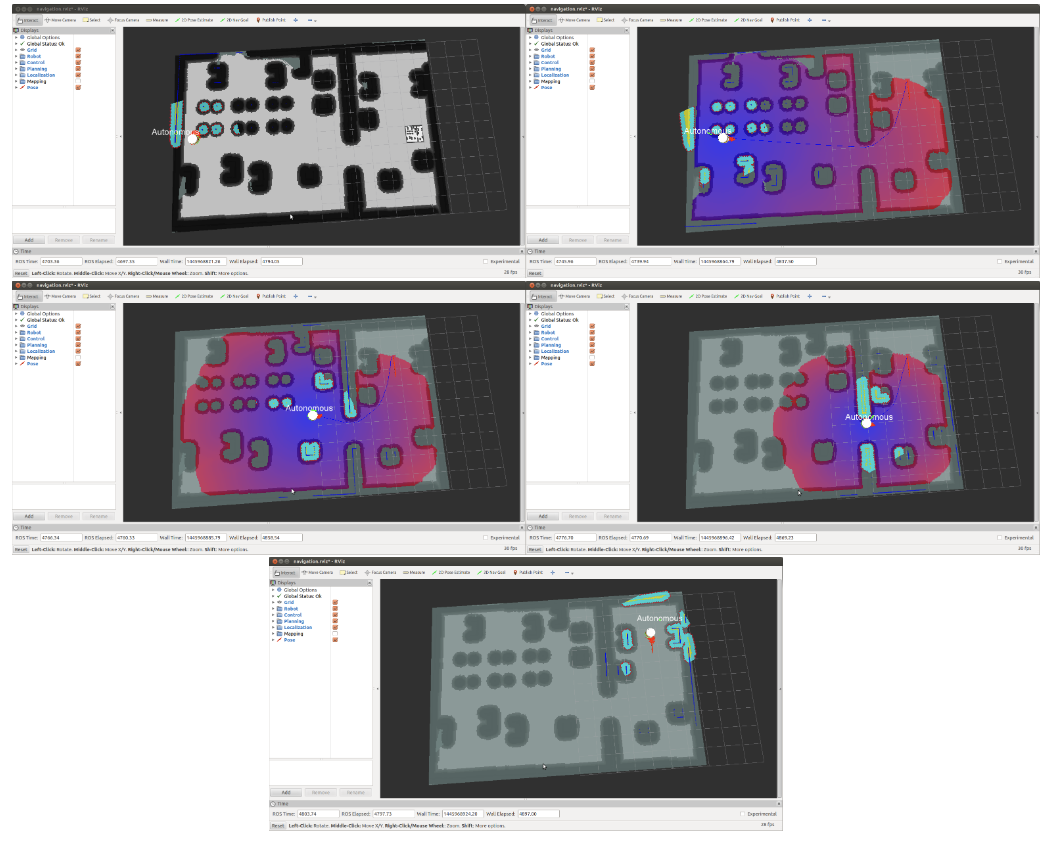

Figure: The Diagnostics tab displays the status of the hardware and software components of TIAGo++¶

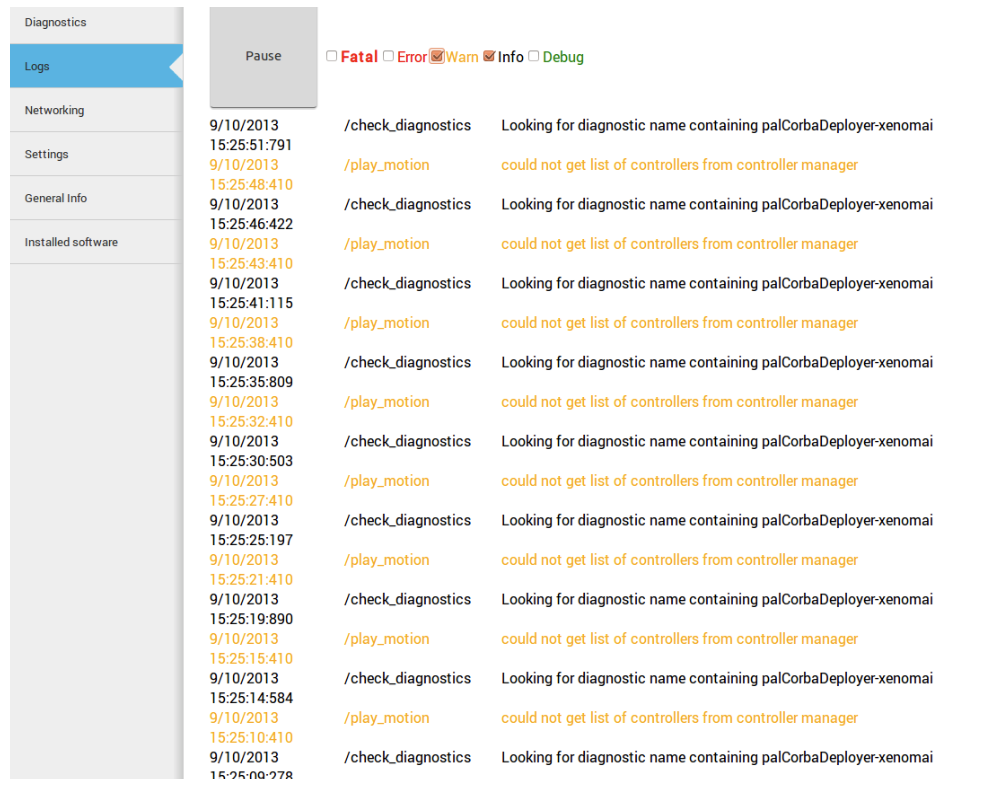

13.3.4 Logs Tab¶

Plugin: Logs

Description: Displays the latest messages printed by the applications’ logging system.

The logs are grouped by severity levels, from high to low: Fatal, Error, Warn, Info and Debug.

The logs are updated in real time, but messages printed before opening the tab can’t be displayed.

The Logs tab has check-boxes to filter the severity of the messages that are displayed. Disabling a severity level also disables all the levels below it, but they can be re-enabled manually. For instance, unchecking Error also unchecks the Warn, Info and Debug levels, but the user can click on any of them to re-enable it.
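The checkbox behaviour described above can be captured in a few lines. This code illustrates the rule; it is not the WebCommander's code, and the function names are hypothetical.

```python
from typing import List, Set, Tuple

SEVERITIES = ["Fatal", "Error", "Warn", "Info", "Debug"]  # high to low


def uncheck(checked: Set[str], level: str) -> Set[str]:
    """Unchecking a level also unchecks every less severe level."""
    return checked - set(SEVERITIES[SEVERITIES.index(level):])


def check(checked: Set[str], level: str) -> Set[str]:
    """Re-enabling a level affects that level only."""
    return checked | {level}


def visible(messages: List[Tuple[str, str]], checked: Set[str]) -> List[Tuple[str, str]]:
    """Keep the (severity, text) messages whose severity is checked."""
    return [msg for msg in messages if msg[0] in checked]
```

Starting with every level checked, `uncheck(..., "Error")` leaves only Fatal, and `check(..., "Info")` then shows Fatal and Info messages but still hides Error and Warn.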

Figure: The Log Tab displays the log messages as they are being published in the robot¶

13.3.5 General Info Tab¶

Plugin: General Info

Description: Displays the robot model, part number and serial number.

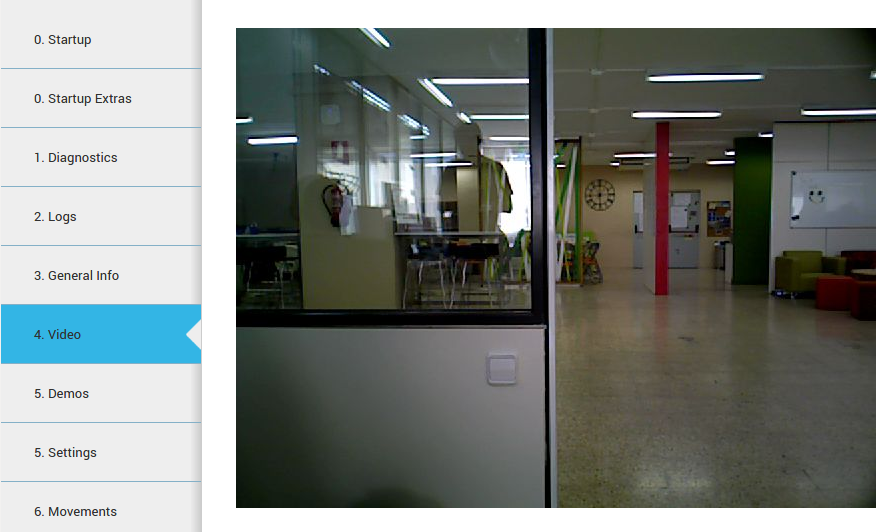

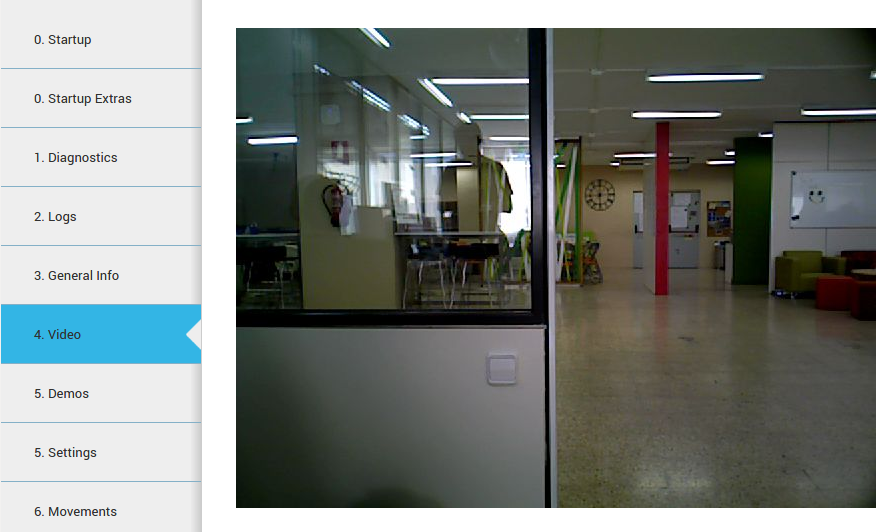

13.3.6 Video Tab¶

Plugin: Video

Description: Displays the images from a ROS topic in the WebCommander.

Figure: The Video Tab displays live video stream from the robot’s camera¶

13.3.7 Speech tab¶

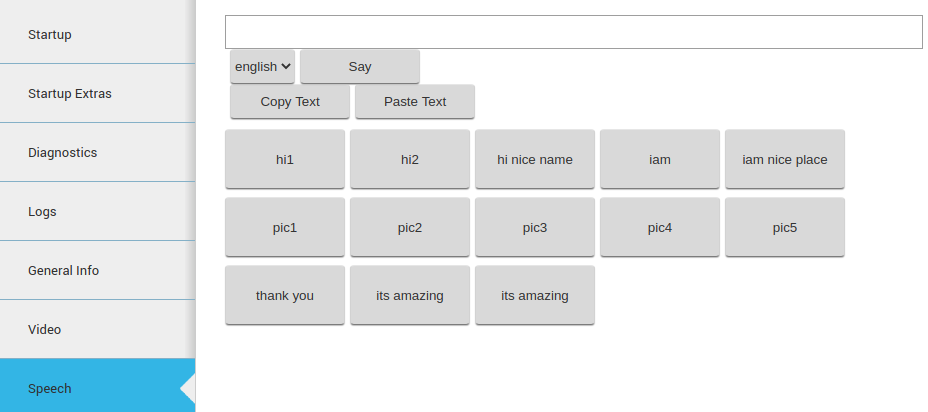

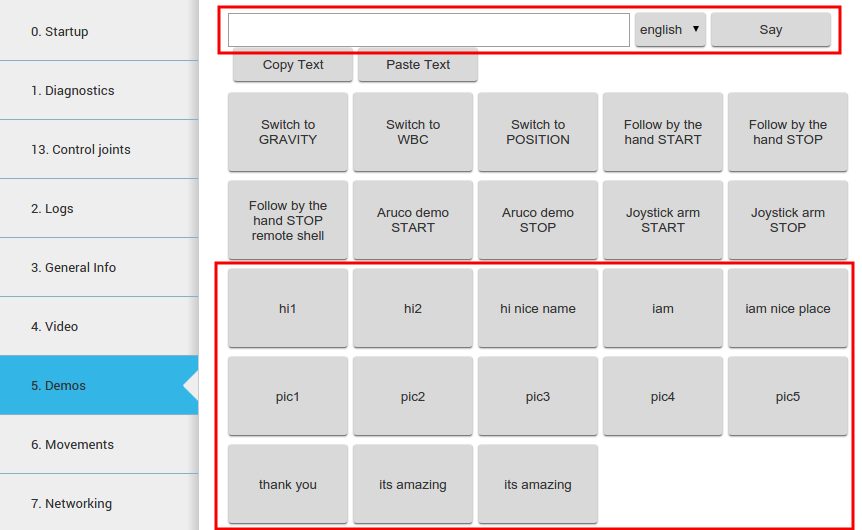

Plugin: Commands

Description: Displays buttons to trigger voice synthesis of some predefined text. In addition, the tab features a text box at the top where the user can write any sentence and have it spoken with the robot's voice in the chosen language by pressing the “Say” button.

Figure: The Speech Tab displays predefined voice sentences¶

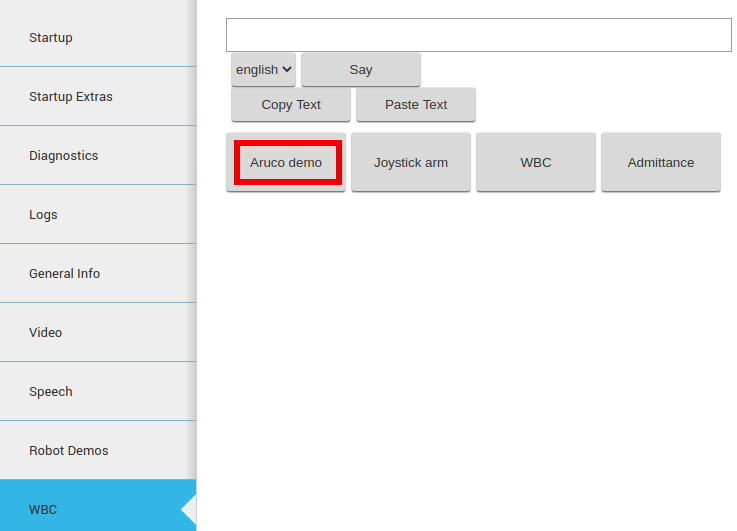

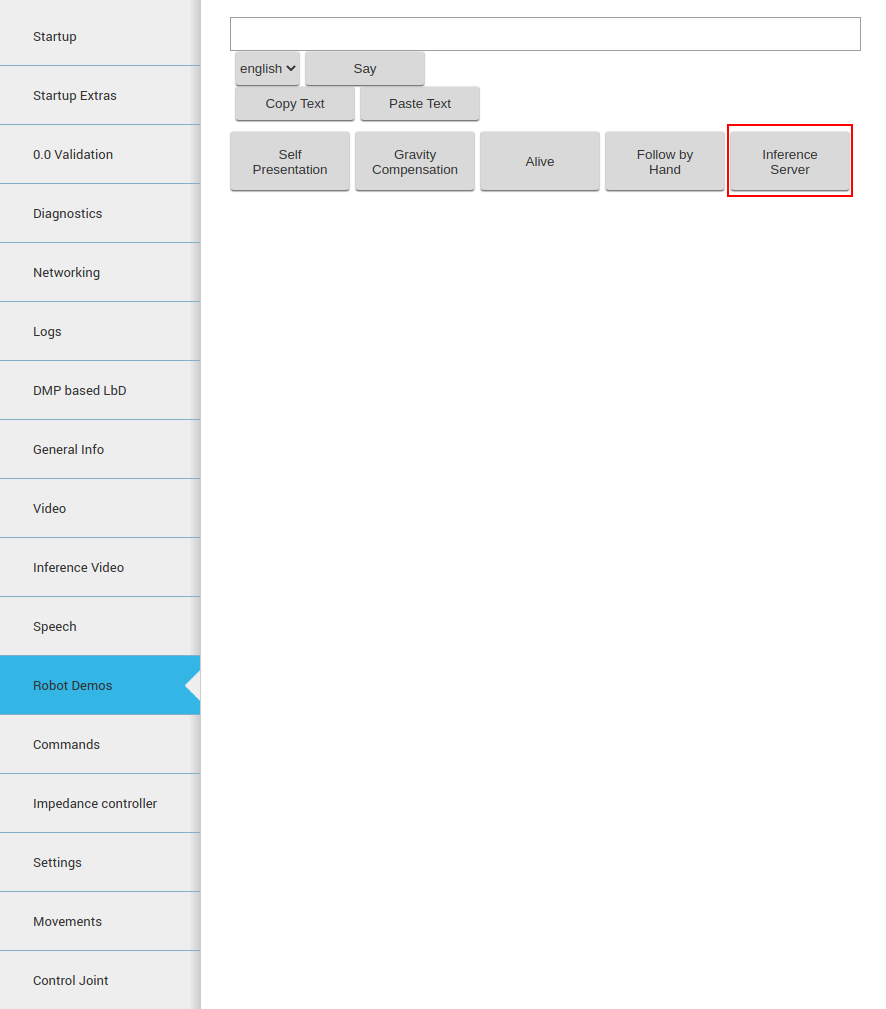

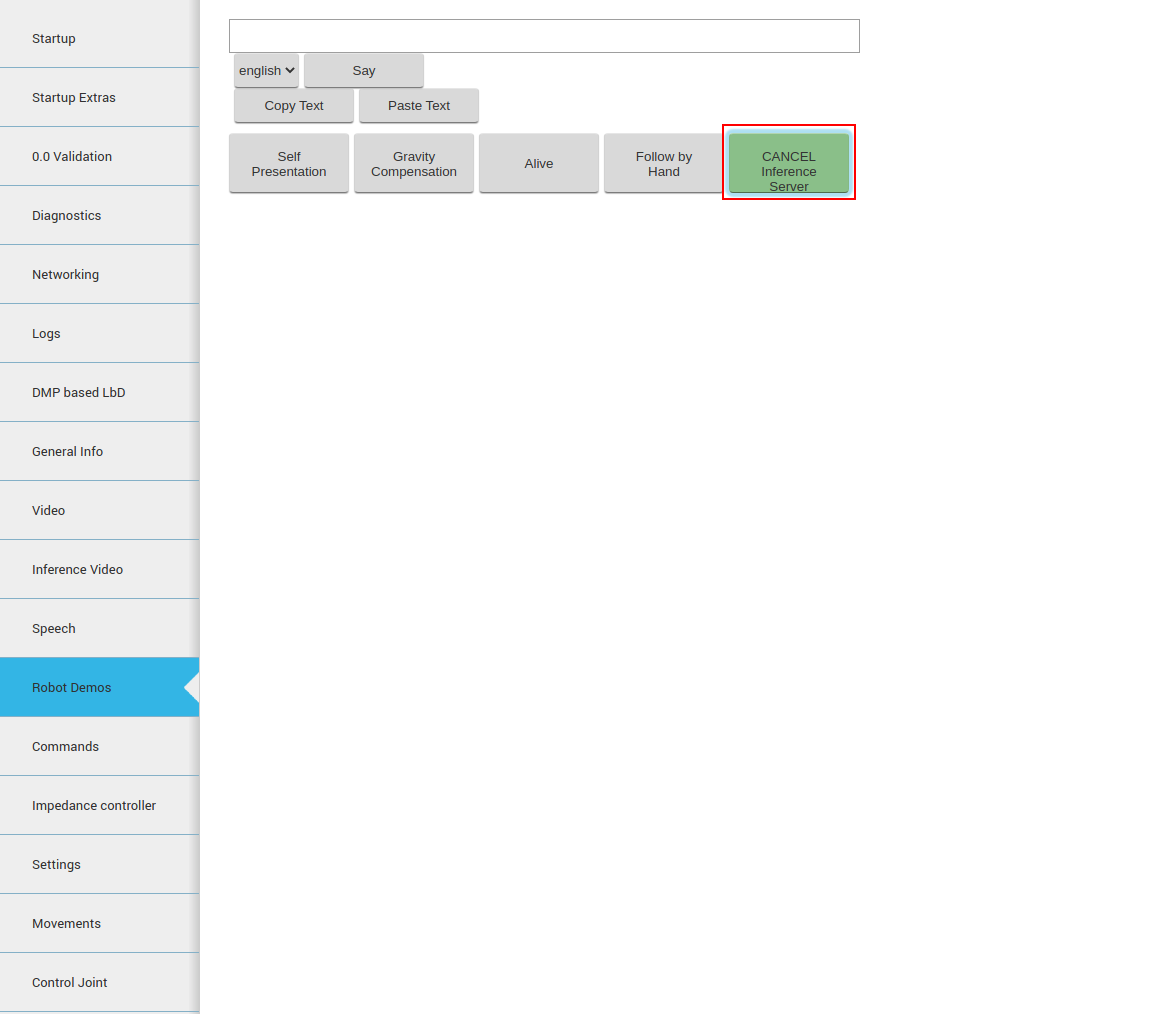

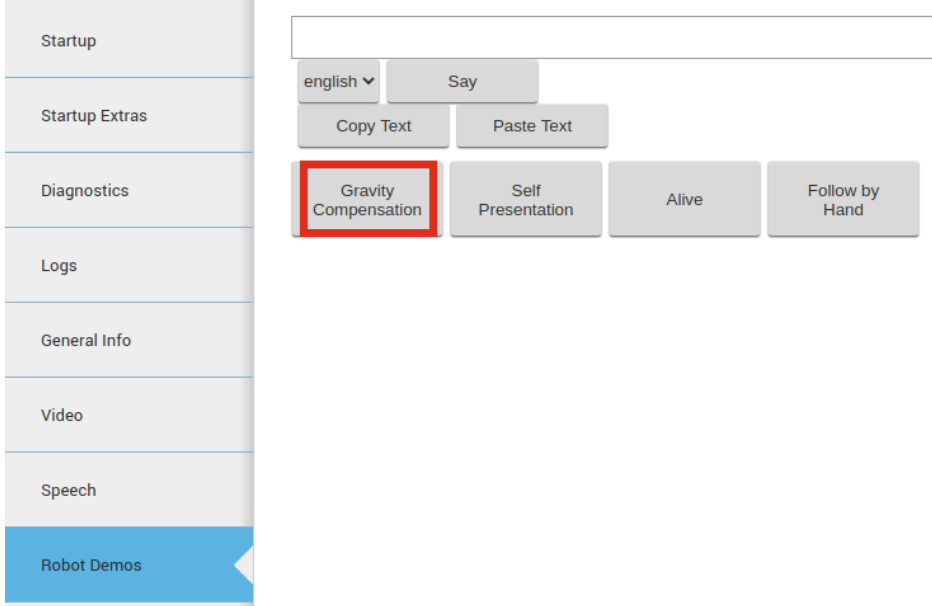

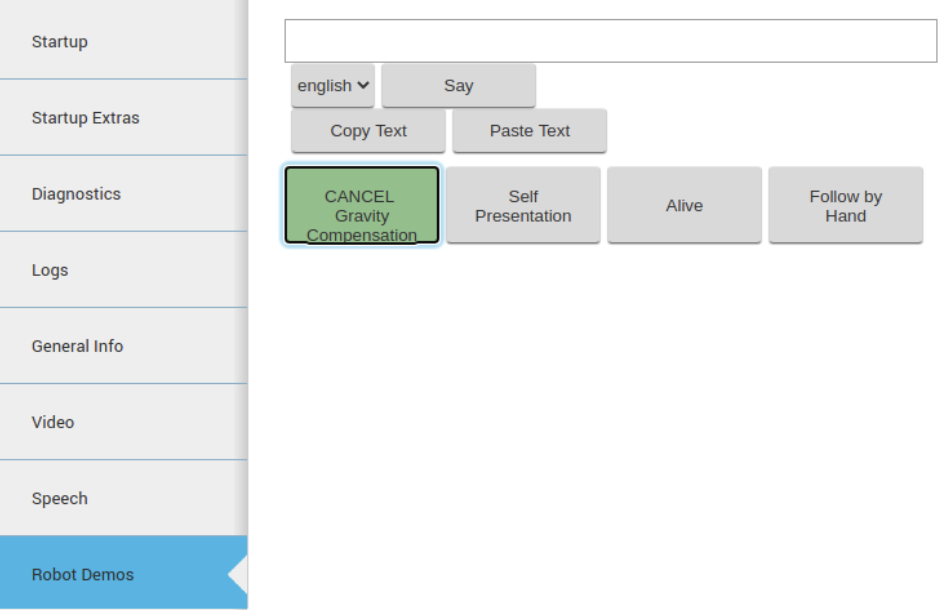

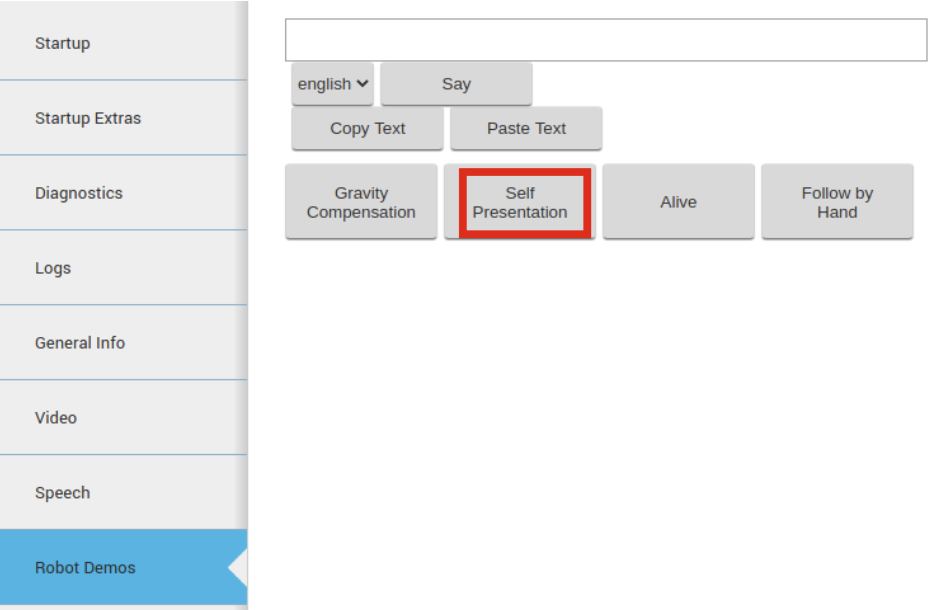

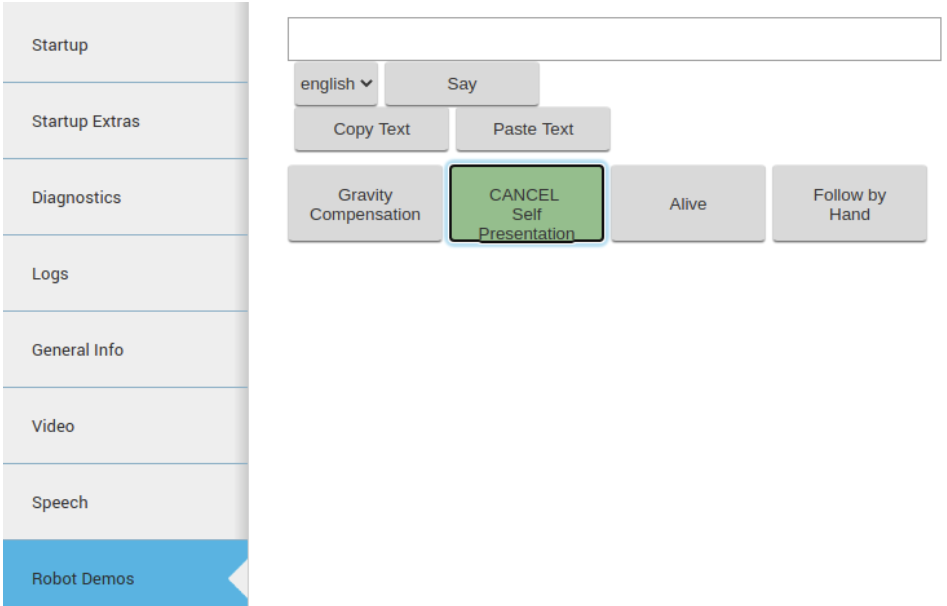

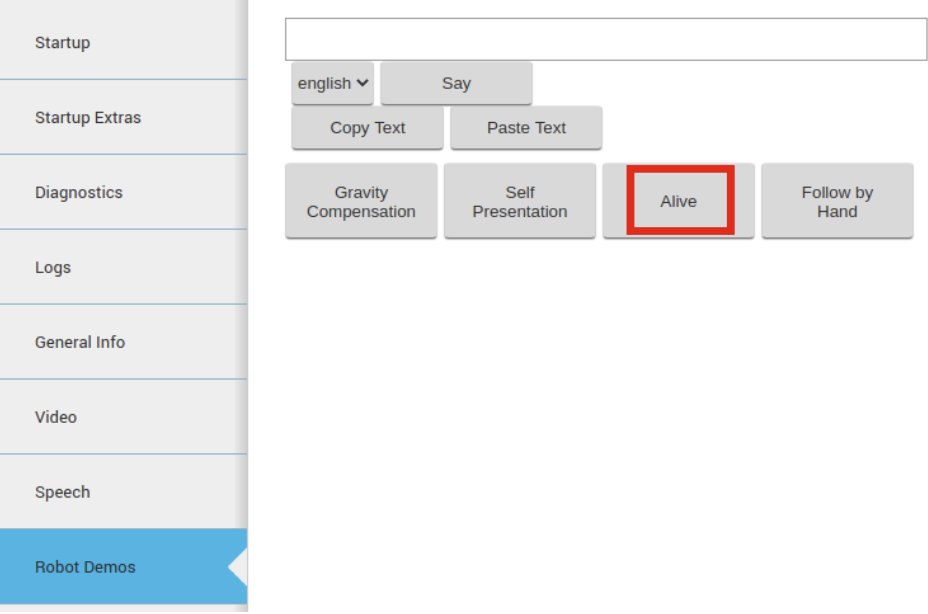

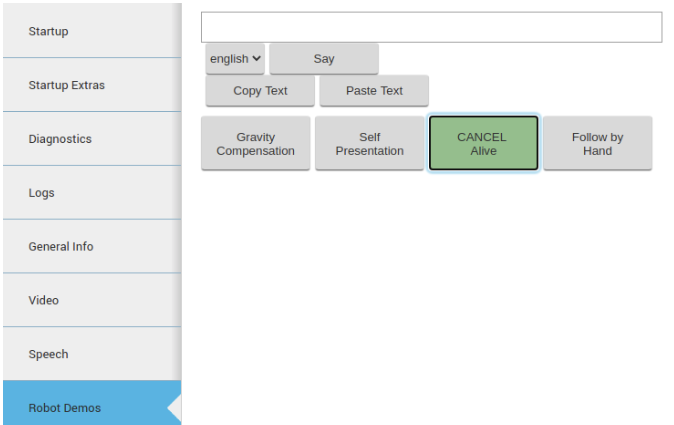

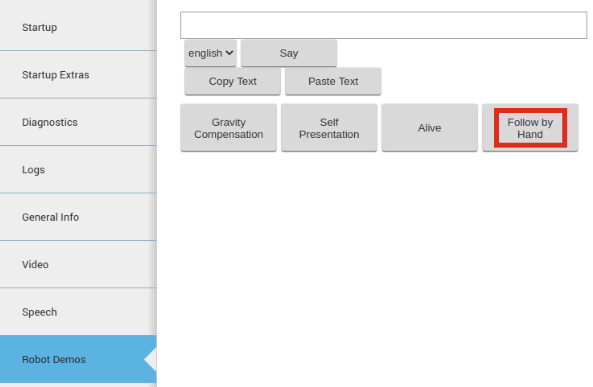

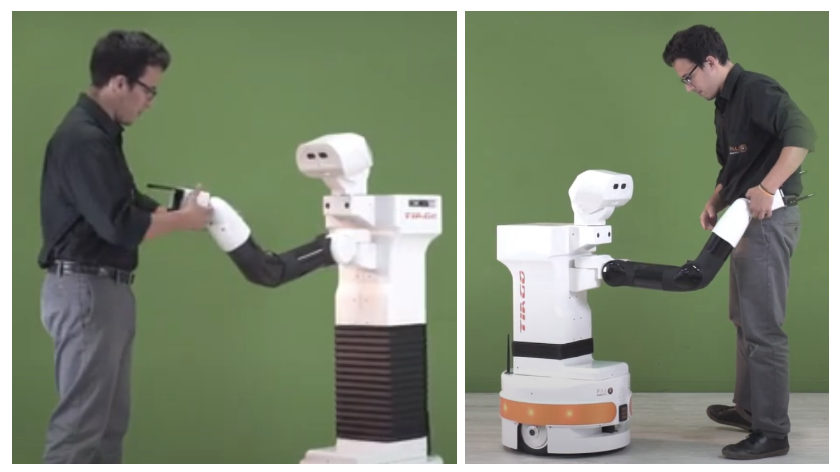

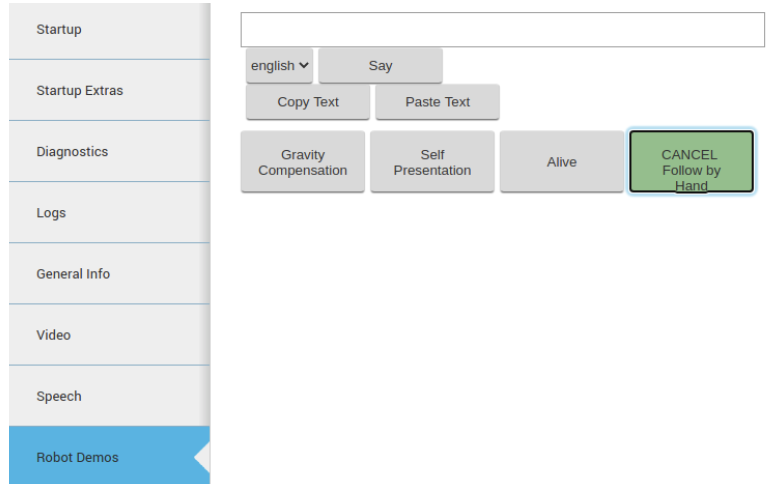

13.3.8 Robot Demos¶

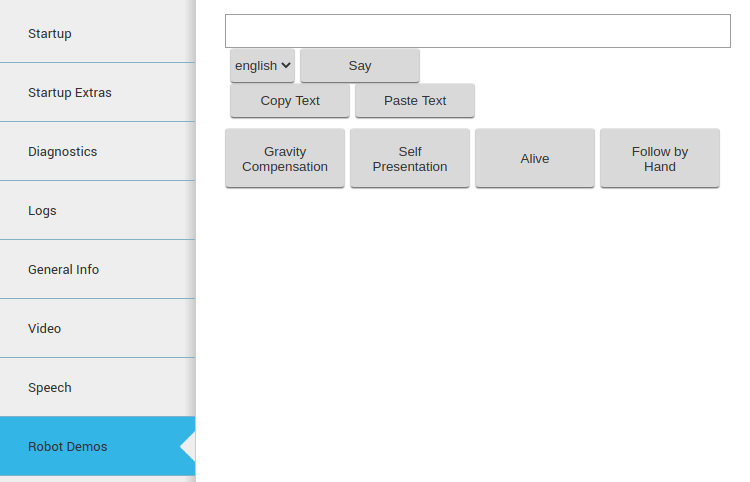

Plugin: Commands

Description: This tab provides several out-of-the-box demos including:

Gravity compensation

Self presentation

Alive demo

Follow by Hand demo

Figure: The Robot Demos Tab allows execution of several demos¶

For details of each demo, please refer to section Demos accessible via WebCommander.

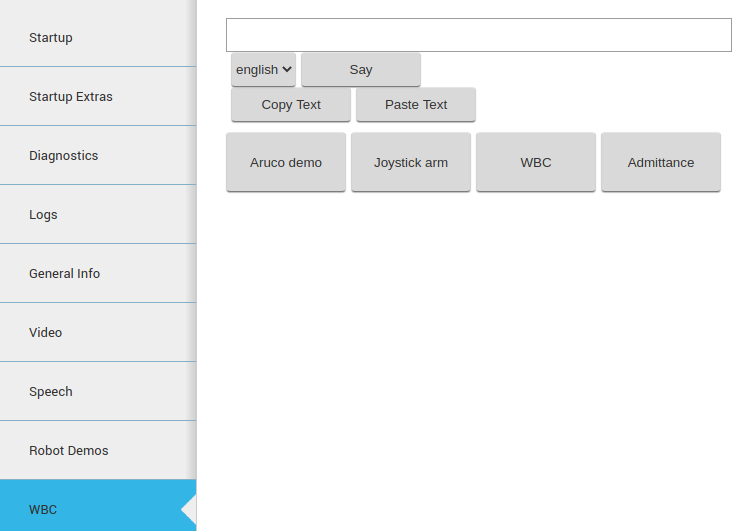

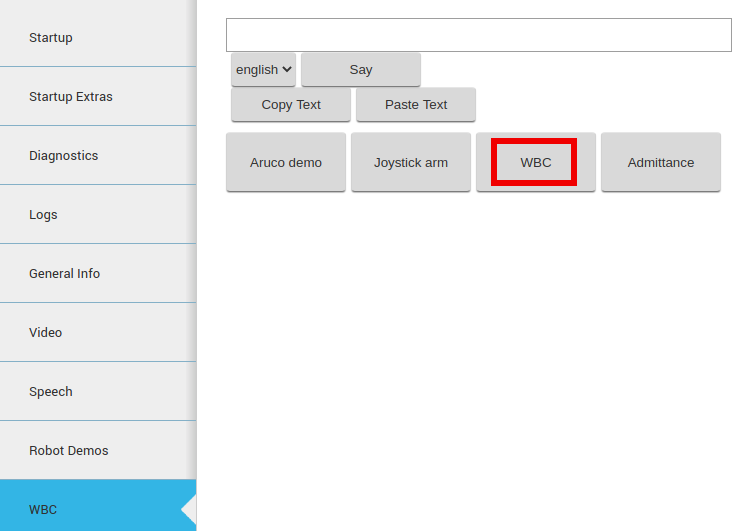

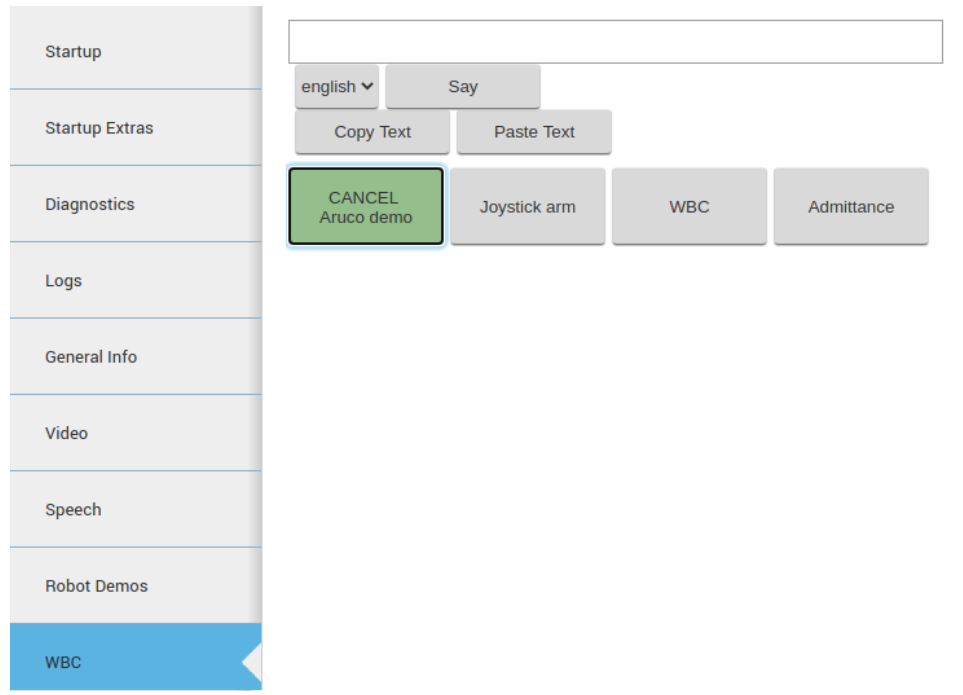

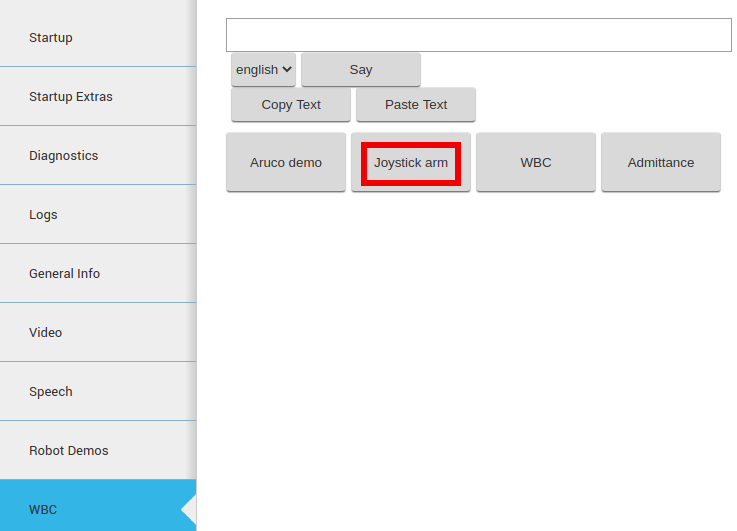

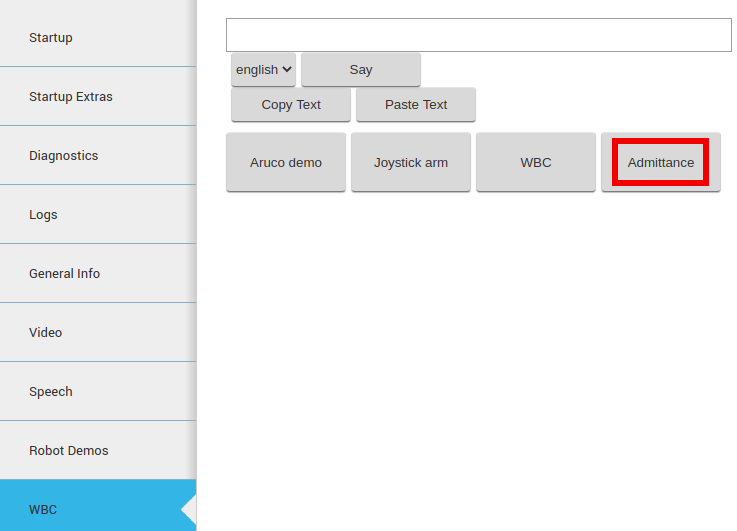

13.3.9 WBC¶

Plugin: Commands

Description: Several demos based on Whole Body Control can be executed if the corresponding Premium Software Package is installed in the robot.

Figure: The WBC Tab displays several demos using Whole Body Control¶

For a comprehensive explanation of how the different demos work, please refer to chapter 41 Change controllers.

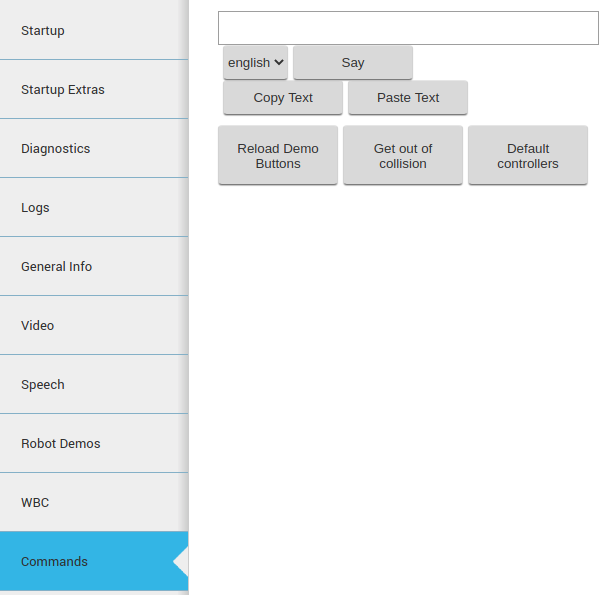

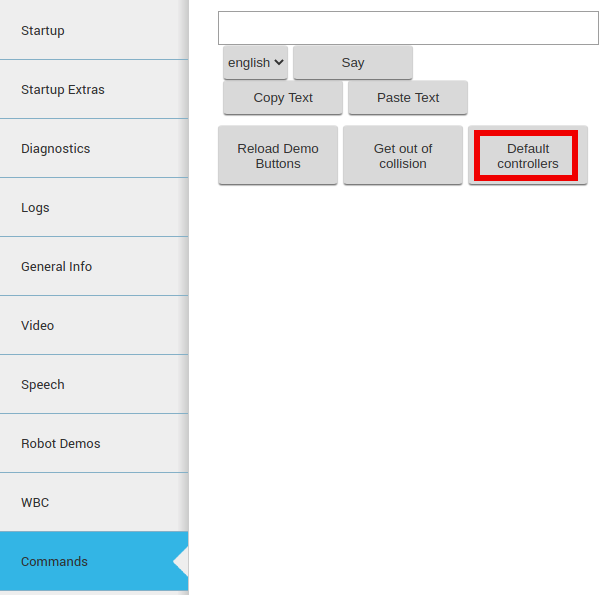

13.3.10 Commands¶

Plugin: Commands

Description: This tab provides several miscellaneous commands like:

Get out of collision: if the robot is in self-collision, or very close to it, this command triggers a small movement that brings the arm out of self-collision.

Default controllers: this button switches back to the default position controllers of the robot in case these have been changed.

Figure: The Commands Tab displays several miscellaneous commands¶

13.3.11 Settings Tab¶

Plugin: Commands

Description: The Settings tab allows the user to change the behaviour of TIAGo++.

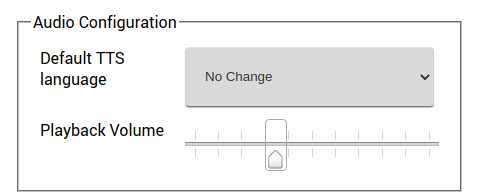

Currently, it allows the user to configure the language of TIAGo++ for speech synthesis by selecting one from a drop-down list. Changing the text-to-speech language changes the default language used when sending sentences to be spoken by TIAGo++ (see section 23 Text-to-Speech synthesis for further details).

Figure: The Settings tab allows to modify the behaviour of TIAGo++¶

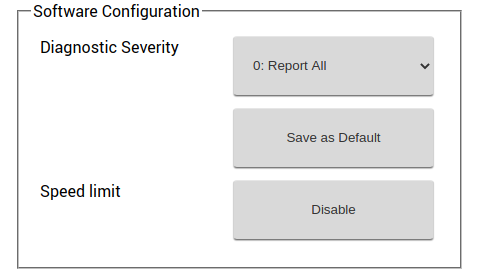

Software Configuration The Settings tab allows the user to configure some of the robot's software. For example, the user can change the Diagnostic Severity reporting level, which determines the errors that the robot reports by means of its LED stripes, voice, etc.

Figure: The Settings tab allows to modify the behaviour of TIAGo++¶

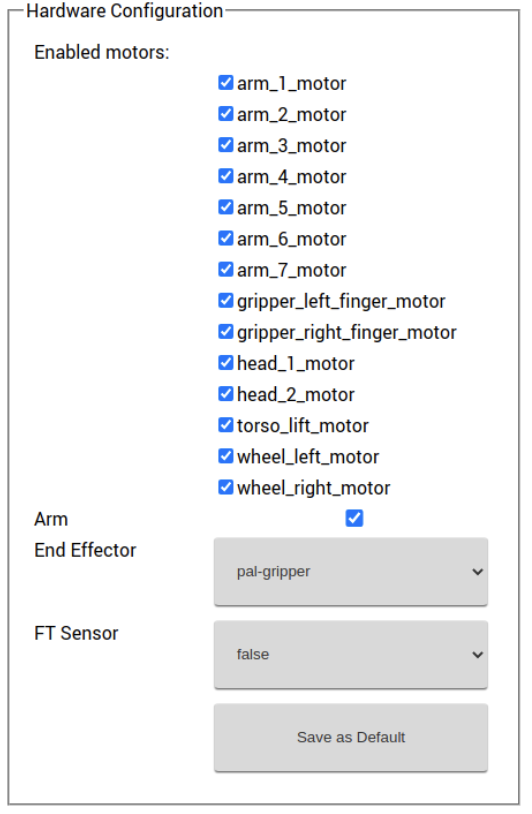

Hardware Configuration The Settings tab allows the user to configure the hardware of the robot. Hardware configuration lets the user enable/disable the different motors, enable/disable the Arm module, choose a different end-effector configuration, and enable/disable the mounted F/T sensor.

Figure: TIAGo++ Hardware Configuration¶

For instance, to disable the “head_1_motor”, untick the head_1_motor checkbox in the “Enabled motors” options. To switch to a different end-effector, select the end-effector that you are going to install in the “End Effector” drop-down and click the “Save as Default” button at the bottom of the section. Reboot the robot for the selected configuration to take effect.

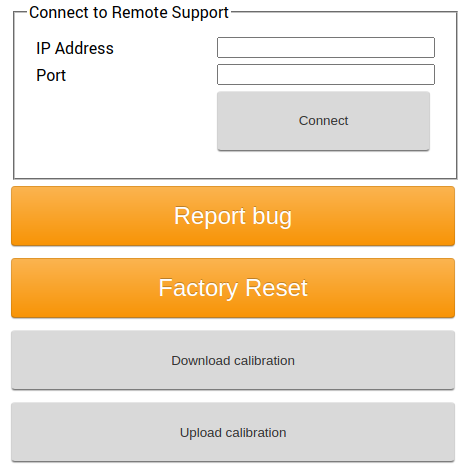

Remote Support The Settings tab is equipped with the remote support connection widget. A technician from PAL Robotics can give remote assistance to the robot by connecting through this widget. Through an issue in the support portal, the PAL technician will provide an IP address and a port. Fill this information into the respective fields of the widget and press the Connect button to allow remote assistance. Remote support is not persistent, so if the robot needs to be rebooted, the customer has to activate it again after each reboot.

Figure: Remote support widget for TIAGo++¶

At any time after the connection has been established, the remote connection can be terminated by clicking the Disconnect button.

Note

If, after clicking Connect, the widget returns to its normal state instead of showing the connection status, either the robot is not connected to the Internet or there is some other network issue.

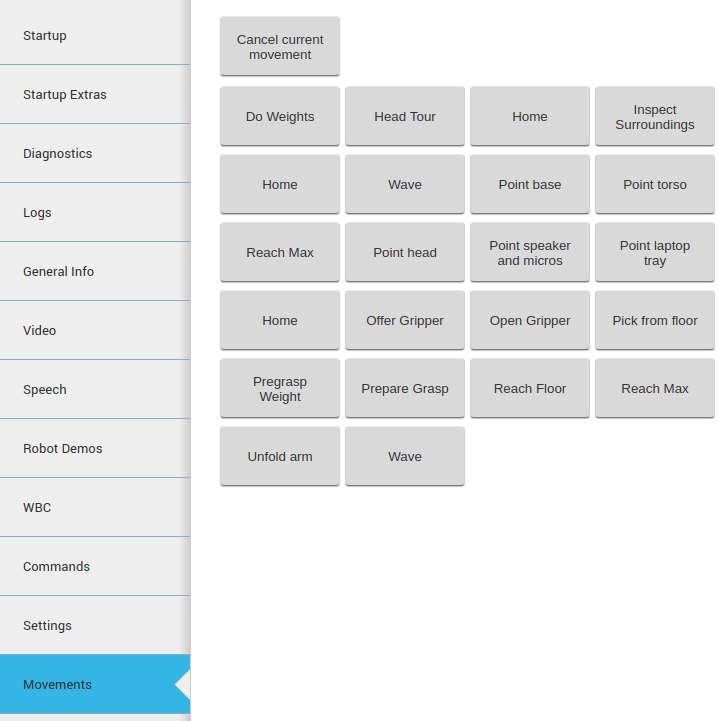

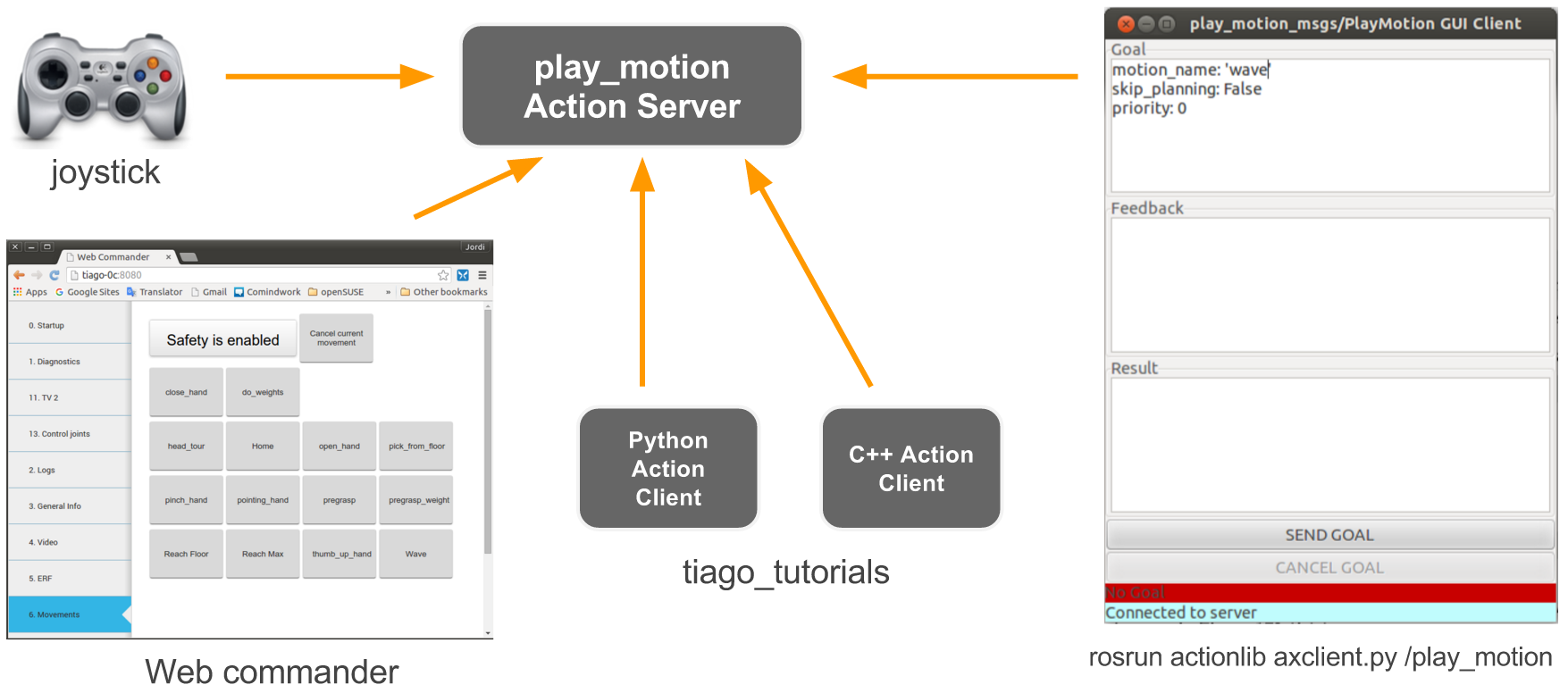

13.3.12 Movements Tab¶

Plugin: Movements

Description: Enables playing pre-recorded motions on TIAGo++.

The movement tab that can be seen in the next figure allows a user to send upper body motion commands to the robot. Clicking on a motion will execute it immediately in the robot. Make sure the arms have enough room to move before sending a movement, to avoid possible collisions.

Figure: The Movement tab allows to send upper body motions to TIAGo++¶

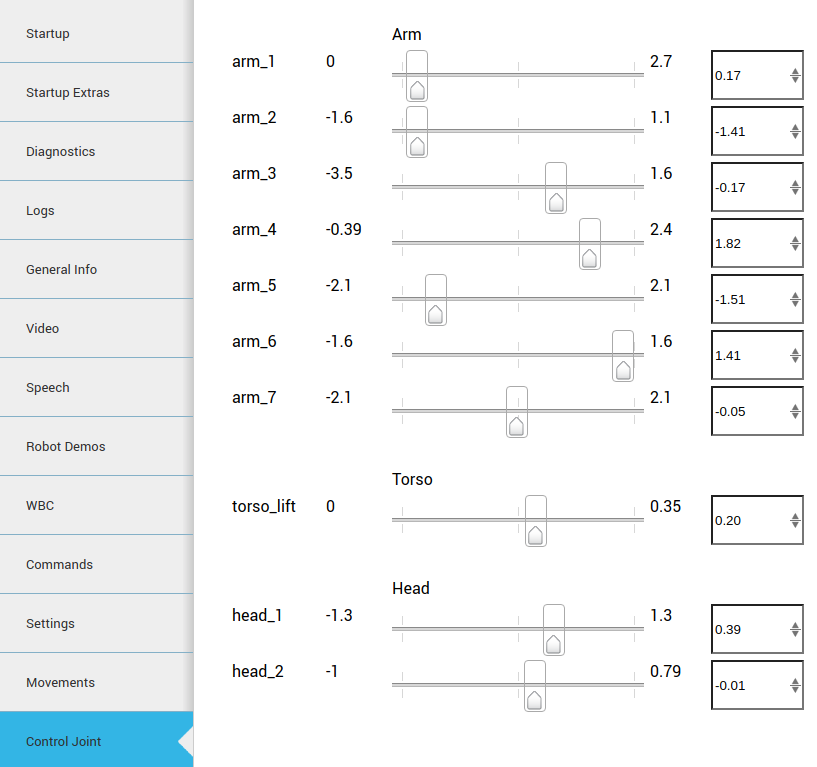

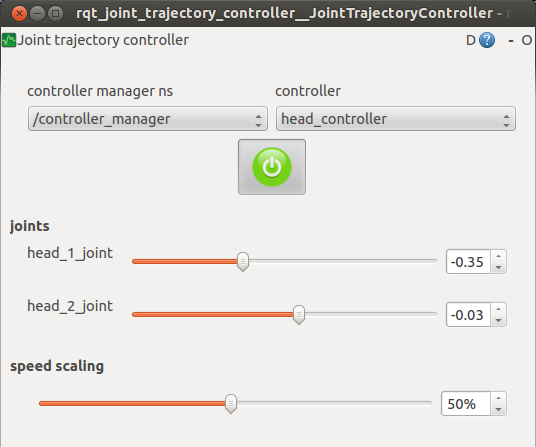

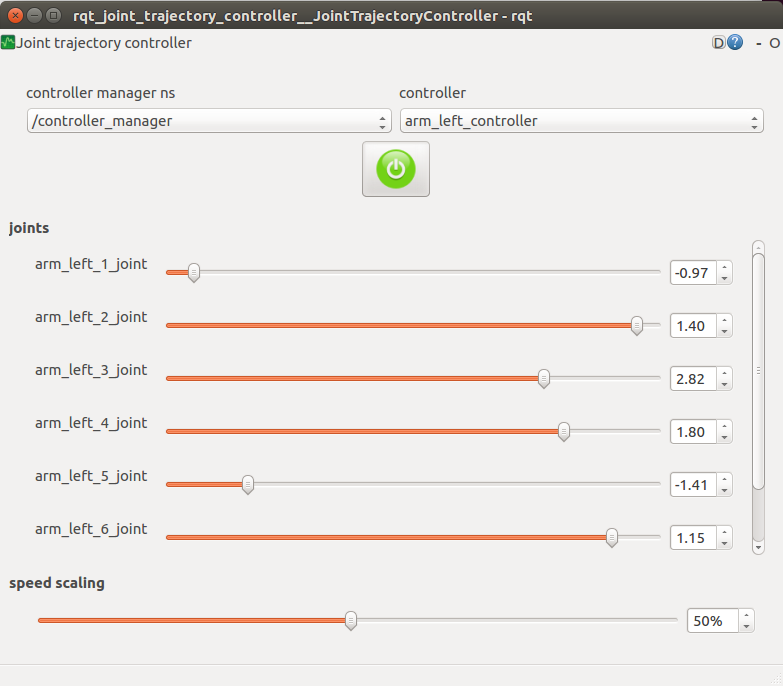

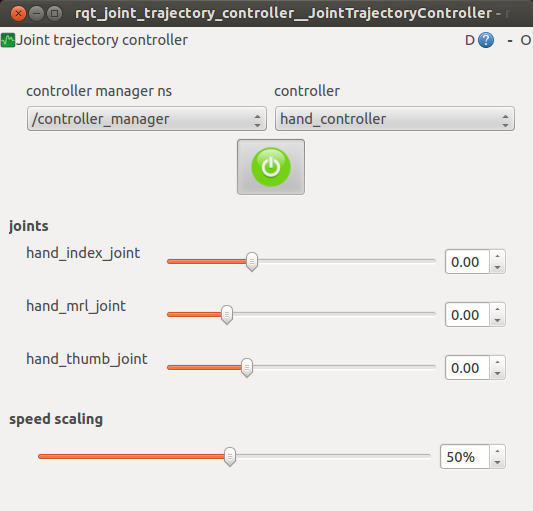

13.3.13 Control Joint Tab¶

Plugin: JointCommander

Description: Enables moving individual joints of TIAGo++ with sliders.

Figure: The Joint Control tab allows moving individual joints of TIAGo++ in position mode¶

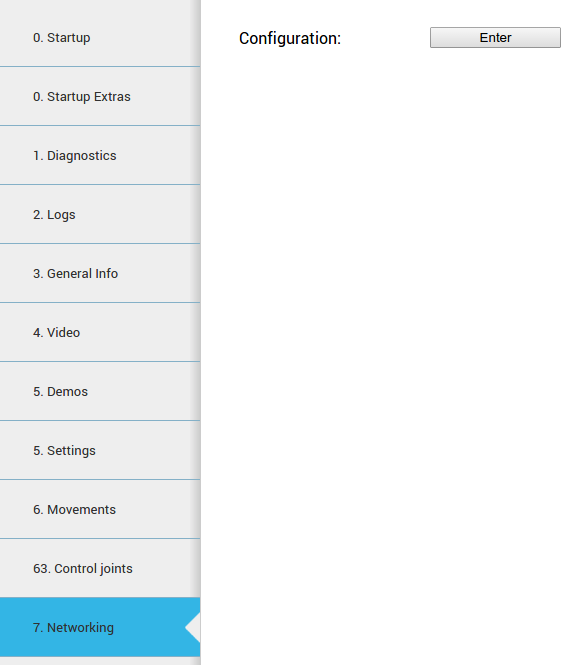

13.3.14 Networking tab¶

Plugin NetworkingEmbedded

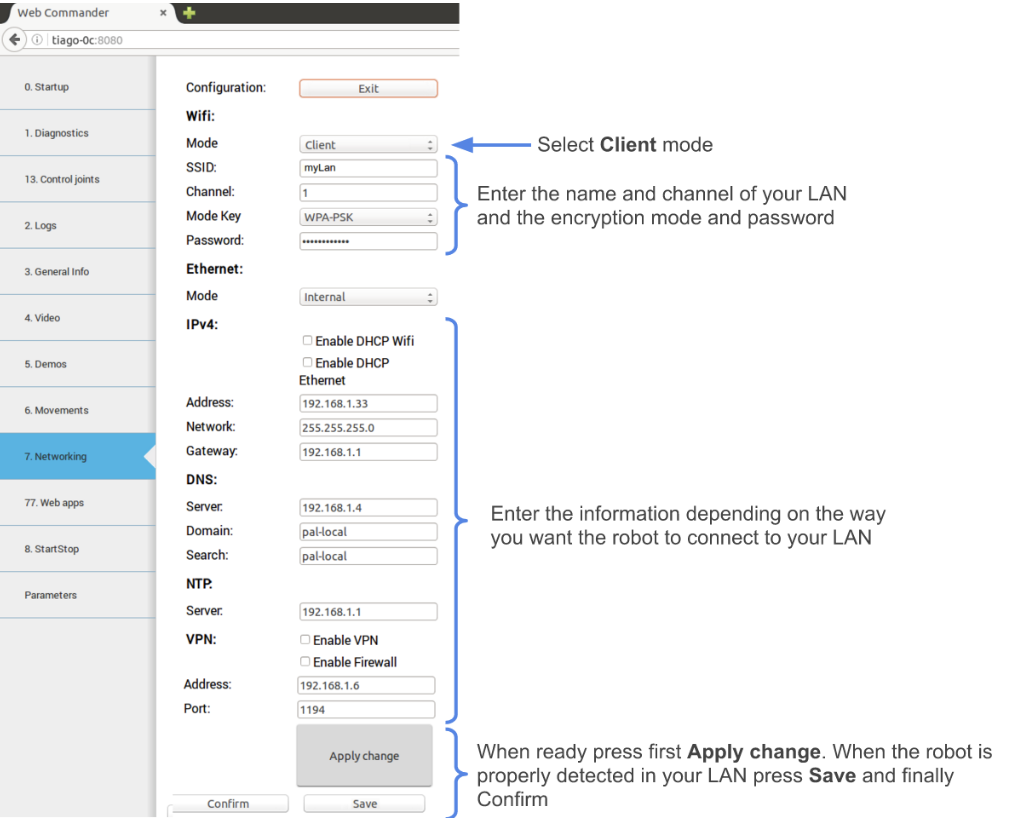

Description The figure below shows the networking tab. By default, the controls for changing the configuration are not visible in order to avoid access by multiple users.

Figure: Networking configuration¶

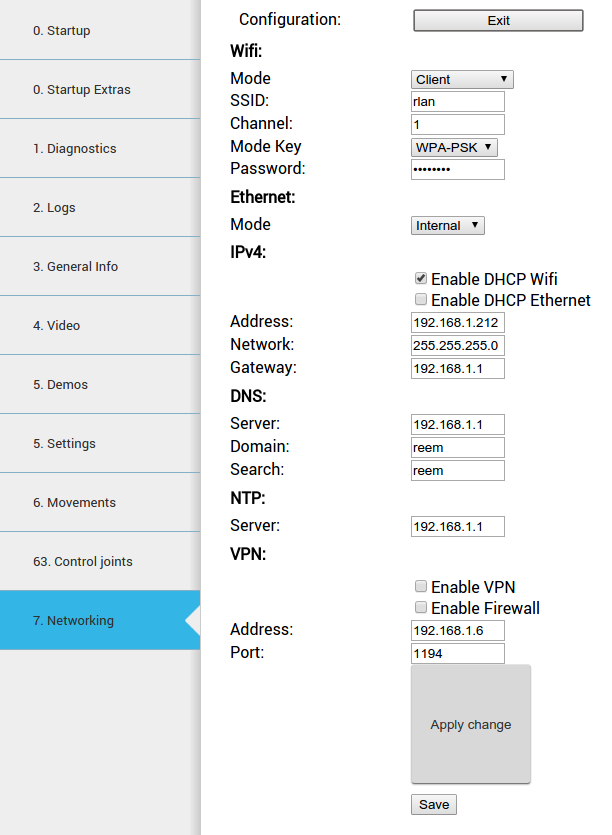

If the Enter button is pressed, the tab connects to the network configuration system and the controls shown in the figure below will appear.

When a user connects to the configuration system, all the current clients are disconnected and a message is shown in the status line.

Figure: Networking configuration controls¶

Configurations are separated in different blocks:

Wifi:

Mode: Selects whether the WiFi connection works as a client or as an access point.

SSID: ID of the Wi-Fi network to connect to in client mode, or to publish in access point mode.

Channel: When the robot is in access point mode, use this channel.

Mode Key: Encryption of the connection. For more specific configurations, select manual. In this case, the file /etc/wpa_supplicant.conf.manual, which can be created manually on the robot, is used.

Password: Password for the WiFi connection.

Ethernet:

Mode: Selects whether the Ethernet connection works as an internal LAN or as an external connection (see Expansion Panel section).

IPv4

Enable DHCP Wifi: Enables DHCP client in WiFi interface.

Enable DHCP Ethernet: Enables DHCP client in the external ethernet port.

Address, Network, Gateway: In client mode, the manual values of the building’s network are used by the Wi-Fi interface. This is the same for the external ethernet port.

DNS

Server: DNS server.

Domain: Domain to use in the robot.

Search: Domain to use in the search.

VPN

Enable VPN: If the customer has a PAL basestation, the robot can be connected to the customer’s VPN.

Enable Firewall: When the VPN is activated, a firewall can be enabled to block incoming connections from outside the VPN.

Address: Building network IP address of the basestation.

Port: Port of the basestation where the VPN server is listening.

No changes are applied until the Apply change button is pressed.

When the Save button is pressed (and confirmed), the current configuration is stored in the hard disk. Be sure to have a correct networking configuration before saving it. A bad configuration can make it impossible to connect to the robot. If this happens, a general reinstallation is needed.

Changes to the WiFi between client and access point could require a reboot of the computer in order to be correctly applied.

Using the diagnostic tab, it is possible to see the current state of the WiFi connection.

Connecting to a LAN In order to connect to your own LAN follow the steps below.

First, access the WebCommander via the URL http://tiago-0c:8080 and go to the Networking tab. Press the Enter button and then follow the instructions shown in Figure: Networking configuration.

Once you have filled in the right configuration and pressed the Apply change button, it is very important to wait until you are able to ping the new robot IP in your own LAN. If this does not happen, you might have to reboot the robot. Because the configuration changes have not been saved yet, the robot will reboot with its previous networking configuration, allowing you to repeat the process properly.

When the new configuration allows you to detect the robot in your own LAN, you may enter the WebCommander again and press the Save button and then the Confirm button.
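Waiting for the robot to appear on the new network can be scripted. Ping relies on ICMP, which usually needs elevated privileges from Python, so this sketch instead polls the WebCommander's TCP port 8080 at the new address. The address you pass is the one you configured. The timeout values are arbitrary defaults, and the function name is hypothetical.

```python
import socket
import time


def wait_until_reachable(host: str, port: int = 8080, timeout_s: float = 60.0,
                         interval_s: float = 2.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval_s):
                return True  # something accepted the connection
        except OSError:
            pass  # refused, unreachable or timed out: try again
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

Only press Save once this returns True for the new address. If it returns False, reboot the robot to fall back to the previous configuration, as described above.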

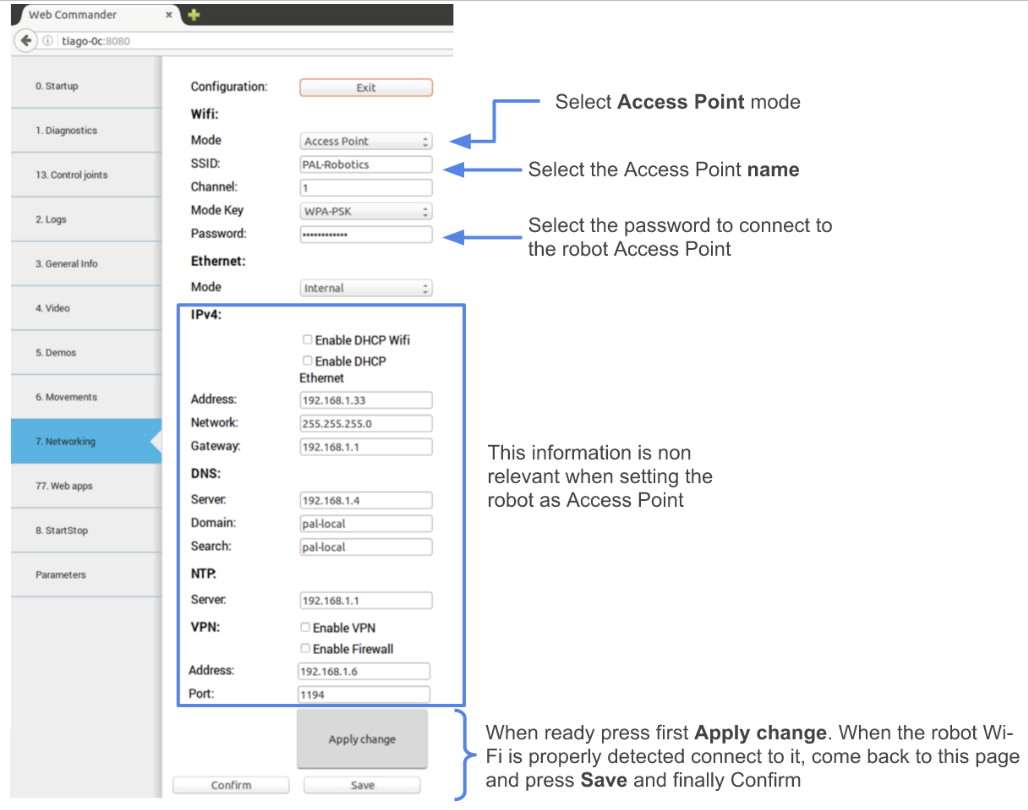

Setting as an Access Point In order to configure TIAGo++ as an access point, open the WebCommander via the URL http://tiago-0c:8080 and go to the Networking tab. Press the Enter button and then follow the instructions shown in the figure below.

Once you have filled in the right configuration and pressed the Apply change button, it is very important to wait until the new Wi-Fi network is detected. A smartphone, a tablet or a computer with a WiFi card can be used for this purpose. If this does not happen, you might have to reboot the robot. Because the configuration changes have not been saved yet, the robot will reboot with its previous networking configuration, allowing you to repeat the process properly.

When the new configuration allows you to detect the robot’s Wi-Fi, connect to the Wi-Fi of the robot, enter the WebCommander and press the Save button and then the Confirm button.

13.4 Tab configuration¶

The WebCommander is a configurable container for different types of content, and it is configured through the /wt parameter in the ROS Parameter Server. On the robot's startup, this parameter is loaded by reading all the configuration files in /home/pal/.pal/wt/. For a file to be loaded, it needs to have a .yaml extension and contain valid YAML syntax describing ROS parameters within the /wt namespace.

Figure: Configuring TIAGo++ to connect to a LAN¶

13.4.1 Parameter format¶

The box below shows an example of a WebCommander configuration. It is a YAML file where /wt is a dictionary, and each key in the dictionary creates a tab on the website, with the key as the title of the tab.

Each element of the dictionary must contain a type key, whose value indicates the type of plugin to load. Additionally, it can have a parameters key with the parameters that the selected plugin requires.

Figure: Configuring TIAGo++ to connect to a LAN¶

wt:
  "0. Startup":
    type: "Startup"
  "1. Diagnostics":
    type: "Diagnostics"
  "2. Logs":
    type: "Logs"
  "3. Behaviour":
    type: "Commands"
    parameters:
      buttons:
        - name: "Say some text"
          say:
            text: "This is the text that will be said"
            lang: "en_GB"
        - name: "Unsafe Wave"
          motion:
            name: "wave"
            safe: False
            plan: True

The configuration in the box above would create four tabs, named “0. Startup”, “1. Diagnostics”, “2. Logs” and “3. Behaviour”, of the types Startup, Diagnostics, Logs and Commands respectively. The first three plugins do not require parameters, but the Commands type does, as explained in 13.4.12 Commands Plugin Configuration.
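Before copying a new file into `/home/pal/.pal/wt/`, its structure can be checked. The sketch below works on the dictionary that a YAML loader such as PyYAML's `yaml.safe_load` would return for the example above; PyYAML itself is not assumed here. It assumes the lowercase `parameters` key and only enforces the rules stated in this section: every tab needs a `type`, and Commands tabs need a list of `buttons`. The function name is hypothetical.

```python
from typing import Any, Dict, List


def check_wt_config(config: Dict[str, Any]) -> List[str]:
    """Return a list of problems found in a WebCommander tab configuration."""
    tabs = config.get("wt")
    if not isinstance(tabs, dict):
        return ["top-level 'wt' key must be a dictionary of tabs"]
    problems = []
    for title, tab in tabs.items():
        if not isinstance(tab, dict) or not isinstance(tab.get("type"), str):
            problems.append(f"{title!r}: missing 'type'")
        elif tab["type"] == "Commands":
            params = tab.get("parameters")
            buttons = params.get("buttons") if isinstance(params, dict) else None
            if not isinstance(buttons, list):
                problems.append(f"{title!r}: Commands tab needs parameters.buttons")
    return problems
```

An empty list means the file at least has the expected shape. It does not guarantee that plugin names or button fields are valid on your robot.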

13.4.2 Startup Plugin Configuration¶

Description: Displays the list of PAL software that is configured to be started in the robot, and whether it has been started or not.

Parameters:

startup_ids A list of strings containing the startup groups handled by this instance of the plugin. See section 17.1.3 Additional startup groups.

13.4.3 Diagnostics Plugin Configuration¶

Description: Displays the current status of TIAGo++’s hardware and software.

Parameters: None required

13.4.4 Logs Plugin Configuration¶

Description: Displays the latest messages printed by the applications’ logging system.

Parameters: None required

13.4.5 JointCommander Plugin Configuration¶

Description: This tab provides sliders to move each joint of TIAGo++’s upper body.

Parameters: A list of the joint groups to be controlled. Each element of the list must be a dictionary containing “name”, “command_topic” and “joints”, where “name” is the name displayed for this group, “command_topic” is the topic on which the joint commands will be published, and “joints” is a list of the names of the joints to be commanded.

Example:

```yaml
"8. Control Joint":
  type: "JointCommander"
  parameters:
    - name: Arm
      command_topic: /arm_controller/safe_command
      joints: [arm_1_joint, arm_2_joint, arm_3_joint, arm_4_joint, arm_5
    - name: Torso
      command_topic: /torso_controller/safe_command
      joints: [torso_lift_joint]
    - name: Head
      command_topic: /head_controller/command
      joints: [head_1_joint, head_2_joint]
```
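The group rules above can be sketched as a small check in Python. This assumes the YAML has already been parsed into lists and dictionaries; `check_joint_groups` is a hypothetical helper, not a WebCommander API, and the sample data reuses only the complete Torso and Head groups from the example.

```python
# Hypothetical checker for JointCommander "parameters"; not PAL code.
REQUIRED_KEYS = ("name", "command_topic", "joints")


def check_joint_groups(groups):
    """Return a list of human-readable problems (empty if the groups look valid)."""
    problems = []
    for index, group in enumerate(groups):
        label = group.get("name", f"group #{index}")
        # Each group must provide a display name, a command topic and joints.
        for key in REQUIRED_KEYS:
            if key not in group:
                problems.append(f"{label}: missing '{key}'")
        # "joints" must be a list of joint names (strings).
        joints = group.get("joints", [])
        if not isinstance(joints, list) or not all(isinstance(j, str) for j in joints):
            problems.append(f"{label}: 'joints' must be a list of joint names")
    return problems


groups = [
    {"name": "Torso",
     "command_topic": "/torso_controller/safe_command",
     "joints": ["torso_lift_joint"]},
    {"name": "Head",
     "command_topic": "/head_controller/command",
     "joints": ["head_1_joint", "head_2_joint"]},
]
print(check_joint_groups(groups))  # → []
```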

13.4.6 General Info Plugin Configuration¶

Description: Displays the robot model, part number and serial number.

Parameters: None required

13.4.7 Installed Software Plugin Configuration¶

Description: Displays the list of all the software packages installed on both of the robot’s computers.

Parameters: None required

13.4.8 Settings Plugin Configuration¶

Description: The Settings tab allows you to change the behaviour of TIAGo++.

Parameters: None required

13.4.9 NetworkingEmbedded Plugin Configuration¶

Description: This tab allows you to change the network configuration.

Parameters: None required

13.4.10 Video Plugin Configuration¶

Description: Displays the images from a ROS topic in the WebCommander.

Parameters:

topic Name of the topic to read images from, for instance: /xtion/rgb/image_raw/compressed

Figure: The Video Tab displays live video stream from the robot’s camera¶

13.4.11 Movements Plugin Configuration¶

Description: Enables playing pre-recorded motions on TIAGo++.

Parameters:

goal_type Either “play_motion” or “motion_manager”. Determines which action server will be used for sending the motions.
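The Startup, Video and Movements plugins each take a single parameter. A minimal Python sketch of checking those values, assuming the YAML is already parsed; `check_plugin_parameters` is a hypothetical helper that enforces only the constraints stated in sections 13.4.2, 13.4.10 and 13.4.11.

```python
# Hypothetical value checks for single-parameter plugins; not PAL code.
GOAL_TYPES = {"play_motion", "motion_manager"}


def check_plugin_parameters(params):
    """Check startup_ids, topic and goal_type when they are present in params."""
    problems = []
    if "startup_ids" in params:
        ids = params["startup_ids"]
        # Startup plugin: a list of startup group names.
        if not isinstance(ids, list) or not all(isinstance(s, str) for s in ids):
            problems.append("startup_ids must be a list of strings")
    # Video plugin: the name of an image topic.
    if "topic" in params and not isinstance(params["topic"], str):
        problems.append("topic must be a topic name")
    # Movements plugin: which action server receives the motions.
    if "goal_type" in params and params["goal_type"] not in GOAL_TYPES:
        problems.append("goal_type must be 'play_motion' or 'motion_manager'")
    return problems


print(check_plugin_parameters({"topic": "/xtion/rgb/image_raw/compressed"}))  # → []
print(check_plugin_parameters({"goal_type": "motion"}))
```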

13.4.12 Commands Plugin Configuration¶

Description: Contains buttons that can be programmed through parameters to perform actions on the robot.

Parameters:

buttons A list of buttons, where each button is a dictionary with two fields. The name field is the text displayed on the button; the key of the second field determines the type of button, and its value is a dictionary with the button’s configuration.

```yaml
wt:
  "Example Buttons":
    type: "Commands"
    parameters:
      buttons:
        - name: "Say some text"
          say:
            text: "This is the text that will be said"
            lang: "en_GB"
        - name: "Greetings"
          say_tts:
            section: "macro"
            key: "greetings"
            lang: ""
        - name: "Wave"
          motion:
            name: "wave"
            safe: True
            plan: True
        - name: "Change to Localization"
          remote_shell:
            cmd: "rosservice call /pal_navigation_sm \"input: 'LOC'\""
            target: "control"
```
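In the remote_shell example above, the inner double quotes of the bash command are escaped with backslashes because the whole command is itself a double-quoted YAML string. The following Python sketch, built around the hypothetical helper `to_cmd_field`, produces such a value from a plain command. The `shlex.split` call is only a rough sanity check for unbalanced quotes: `shlex` follows POSIX shell rules, which are close to, but not identical to, bash's.

```python
import shlex


def to_cmd_field(command):
    """Return `command` as a double-quoted YAML string for a remote_shell cmd field."""
    # The text requires a single-line bash command.
    if "\n" in command or "\r" in command:
        raise ValueError("remote_shell commands must be a single line")
    # Rough check: shlex.split raises ValueError on unbalanced quotes.
    shlex.split(command)
    # Inside a YAML double-quoted scalar, backslashes and double quotes
    # must be escaped with a backslash.
    escaped = command.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


command = "rosservice call /pal_navigation_sm \"input: 'LOC'\""
print("cmd: " + to_cmd_field(command))
# → cmd: "rosservice call /pal_navigation_sm \"input: 'LOC'\""
```

The printed line matches the cmd field of the "Change to Localization" button.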

There are four types of buttons: say, say_tts, motion and remote_shell.

say Sends text to the Text-To-Speech engine. It requires a text field containing the text to be said, and a lang field containing the language in the language_country format specified in RFC 3066.

say_tts Sends text as a section/key pair to the Text-To-Speech engine. It requires a section and a key field, as specified in section 23.2.2 Action interface. The lang field can be left empty, but if it is specified it must be in the RFC 3066 format.

motion Sends a motion to the motion manager engine. It requires a name field specifying the name of the motion, and two boolean fields, plan and safe, which determine whether to check for self-collisions and for collisions with the environment, respectively. For safety reasons, both should always be set to True.

remote_shell Enables the execution of a bash command on one of the robot’s computers. It requires a cmd field containing a properly escaped, single-line bash command, and a target field that is either control or multimedia, indicating whether to execute the command on the robot’s control computer or on its multimedia computer.

Both say and say_tts require that the robot is running PAL Robotics’ TTS software.
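The button rules above can be summarised as a table of required fields per button type. The following Python sketch, with the hypothetical helper `check_button`, checks one parsed button dictionary against those rules. It does not verify that the TTS software is running, that language codes follow RFC 3066, or that the motion name exists on the robot.

```python
# Hypothetical checker for Commands buttons; not PAL code.
# Required fields per button type, as described in the text above.
BUTTON_TYPES = {
    "say": ("text", "lang"),
    "say_tts": ("section", "key"),  # "lang" is optional and may be empty
    "motion": ("name", "plan", "safe"),
    "remote_shell": ("cmd", "target"),
}
REMOTE_TARGETS = {"control", "multimedia"}


def check_button(button):
    """Return a list of problems found in one button dictionary."""
    problems = []
    if "name" not in button:
        problems.append("missing 'name'")
    # The second field's key selects the button type; exactly one is allowed.
    kinds = [key for key in button if key in BUTTON_TYPES]
    if len(kinds) != 1:
        problems.append(f"expected exactly one button type, found {kinds}")
        return problems
    kind = kinds[0]
    config = button[kind]
    for field in BUTTON_TYPES[kind]:
        if field not in config:
            problems.append(f"{kind}: missing '{field}'")
    if kind == "motion":
        # The handbook recommends always enabling both collision checks.
        for flag in ("plan", "safe"):
            if flag in config and config[flag] is not True:
                problems.append(f"motion: '{flag}' should be True")
    if kind == "remote_shell" and "target" in config:
        if config["target"] not in REMOTE_TARGETS:
            problems.append("remote_shell: target must be 'control' or 'multimedia'")
    return problems


wave = {"name": "Wave", "motion": {"name": "wave", "safe": True, "plan": True}}
unsafe = {"name": "Unsafe Wave", "motion": {"name": "wave", "safe": False, "plan": True}}
print(check_button(wave))    # → []
print(check_button(unsafe))  # → ["motion: 'safe' should be True"]
```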

14 Web User Interface¶

14.1 Overview¶

This section explains the use of TIAGo++’s Web User Interface and its different plugins. The Web User Interface is a tool designed to simplify the configuration of the robot and to improve the user experience. It can be accessed via browser at http://tiago_dual-Xc, where X is the serial number of the robot.

14.2 Technical considerations¶

At the moment the Web User Interface supports only the Chrome browser on a laptop or computer. Accessing the Web User Interface from a mobile phone or tablet, or from a different browser, will result in some of the functions not working properly or at all.

14.3 Login screen¶

When accessing the Web User Interface, a username and password are requested. The default username and password are pal / pal. Once the correct credentials are entered, the user is automatically redirected to the page they were accessing.

Sessions are not time-constrained: once logged in, a user stays logged in until they close the browser or the robot is rebooted.

Figure: Login screen of the WebGUI.¶

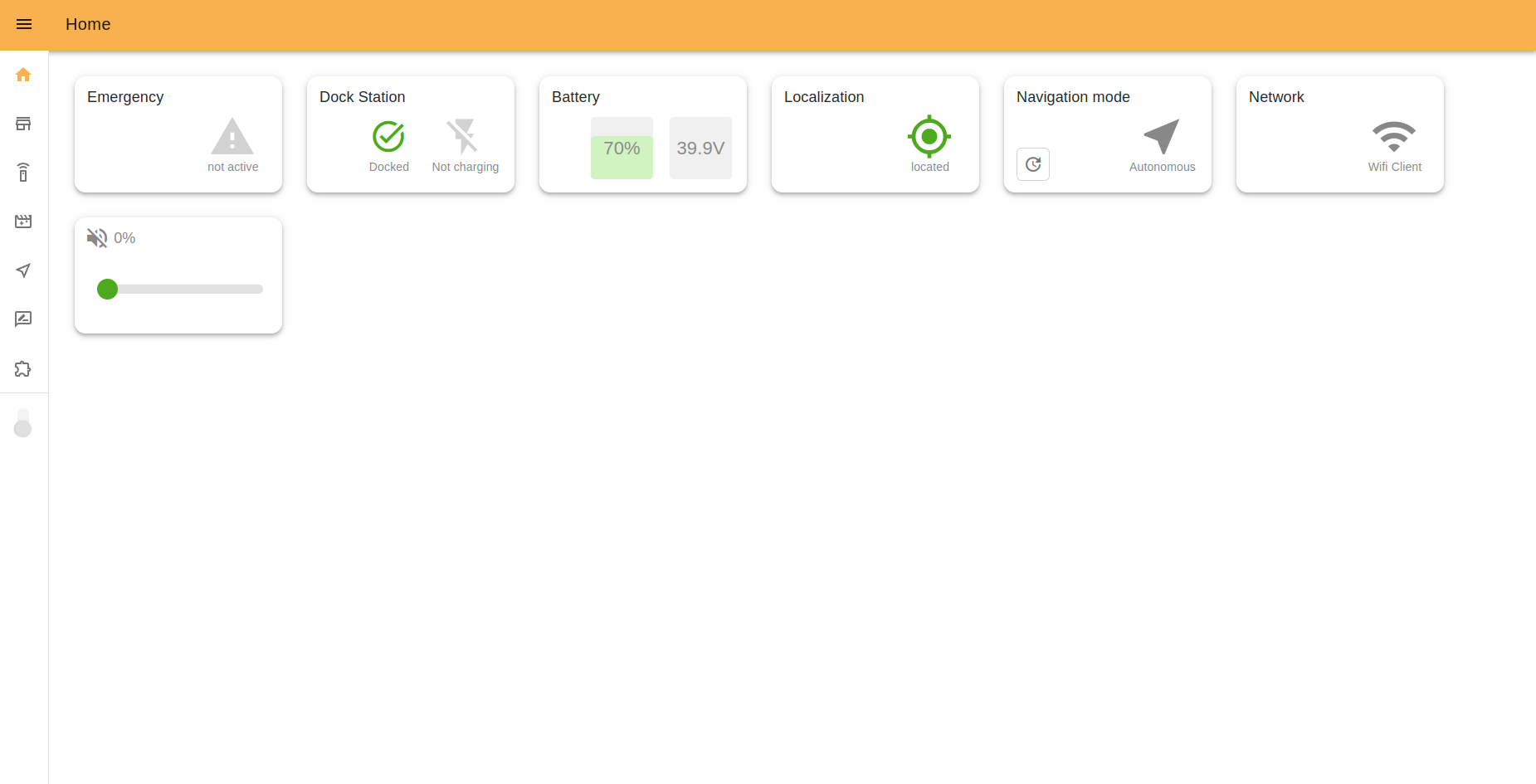

14.5 Information Panel¶

The Information Panel serves to provide visual information on the robot’s current state.

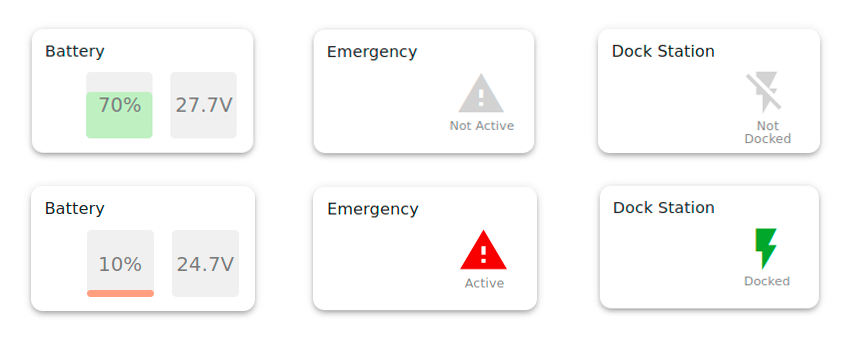

Emergency indicates the status of the emergency button (pressed or not). If it is pressed, the icon turns red.

Dock Station indicates whether the robot is connected to the docking station or to the charging connector.

Battery shows the current battery percentage and voltage. Yellow and red indicate medium and low battery levels, respectively.

Localization shows whether the robot is correctly localized in the navigation map.

Navigation Mode shows how the robot is moving: autonomously, by joystick input, or whether navigation is paused.

Network indicates the currently active connection mode of the robot: either Wi-Fi Client or Access Point.

Volume allows you to manage the robot’s volume and shows the current volume percentage.

Some examples that may be displayed:

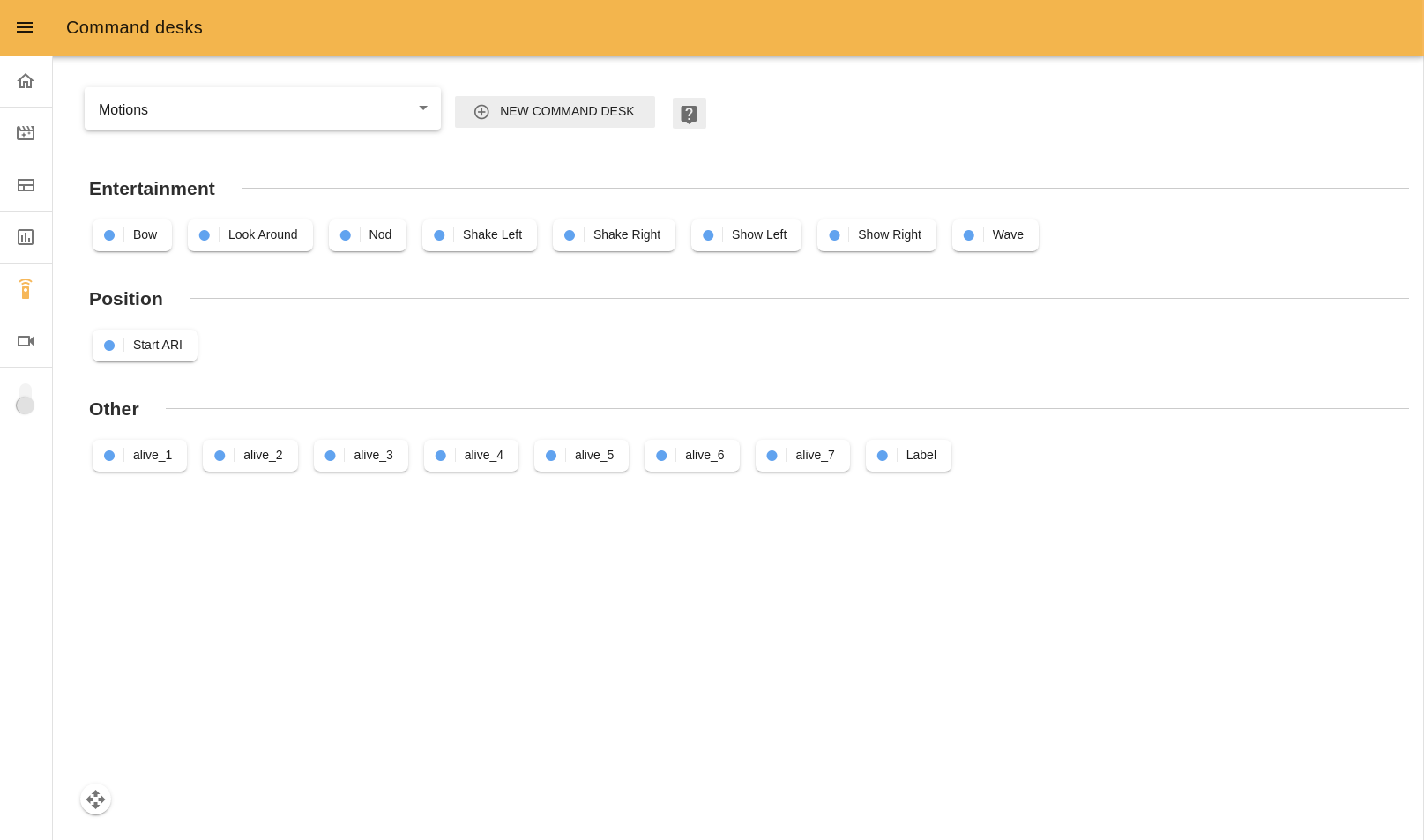

14.6 Command desks¶

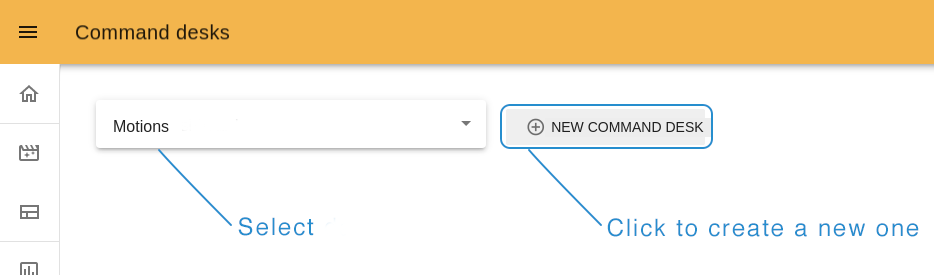

With this app you can teleoperate the robot and prepare it for events or other needs by creating Command desks containing groups of buttons. You then assign to each button the action the robot performs when the button is clicked.

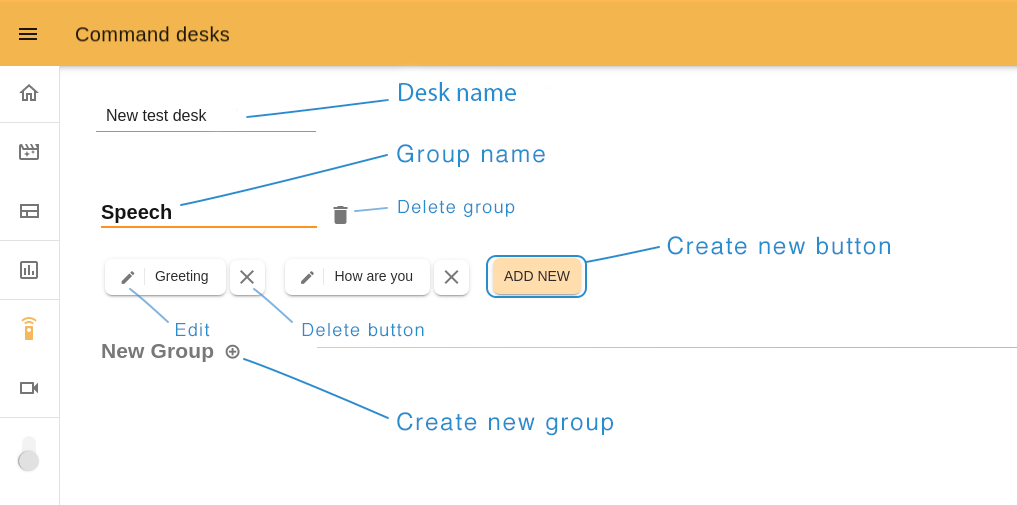

To create a new command desk, click the “NEW COMMAND DESK” button at the top of the page. From the menu next to it, in the top-left corner, you can choose a previously created Command desk.

14.6.1 Create a new desk¶

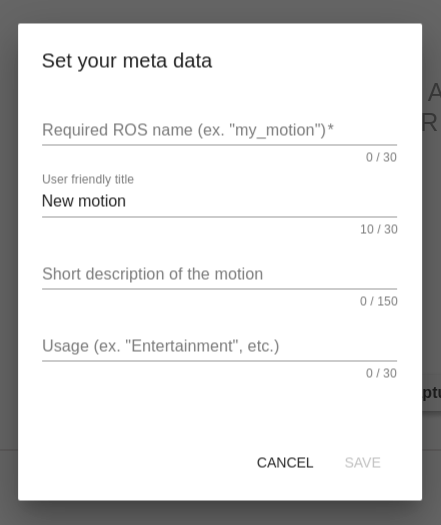

Type the name of your desk and create a first group (you must have at least one) by clicking on the “plus” icon near the “New group” title. To create a new button, click “ADD NEW”.

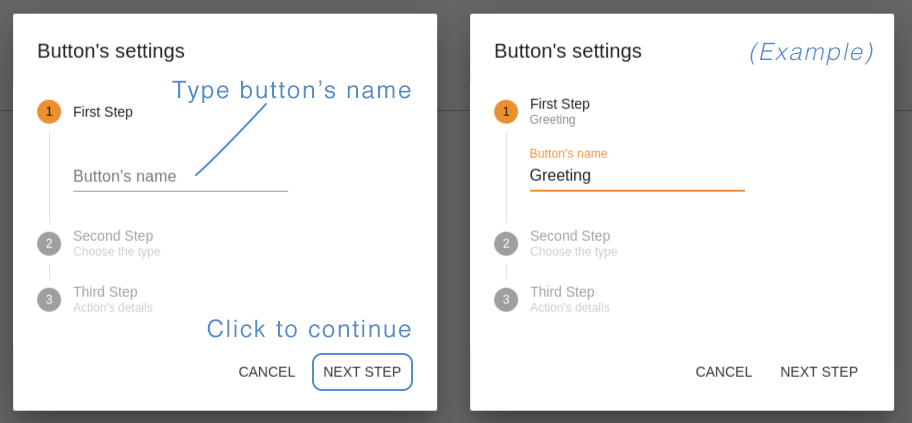

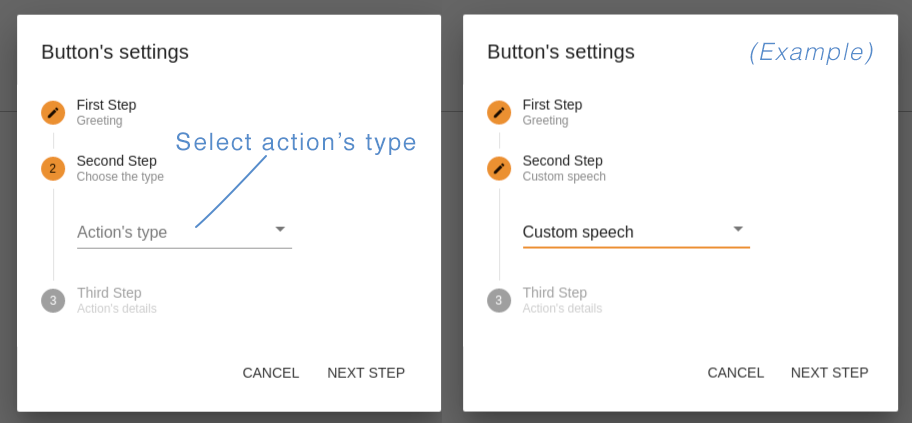

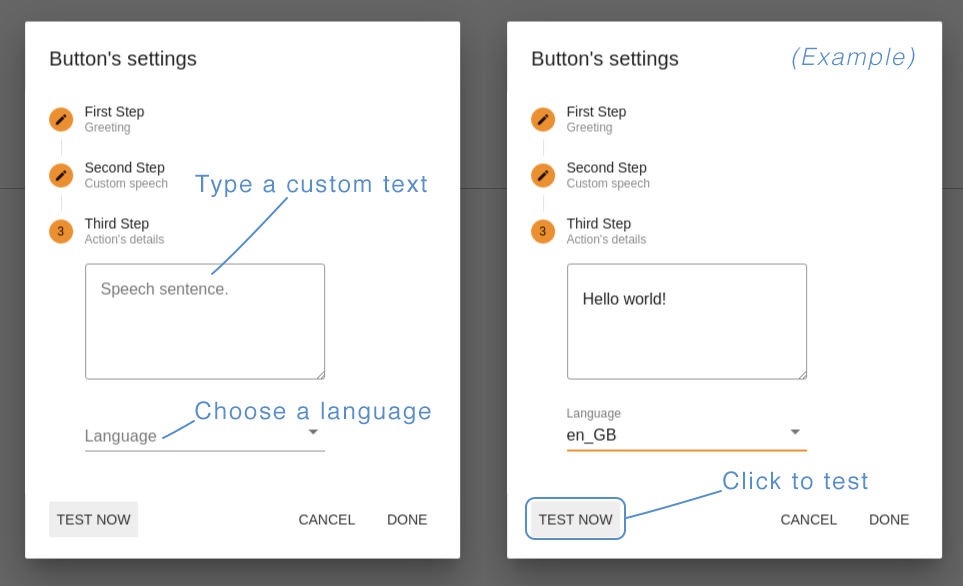

Step 1. Button’s name. Choose a name related to the action this button will perform.

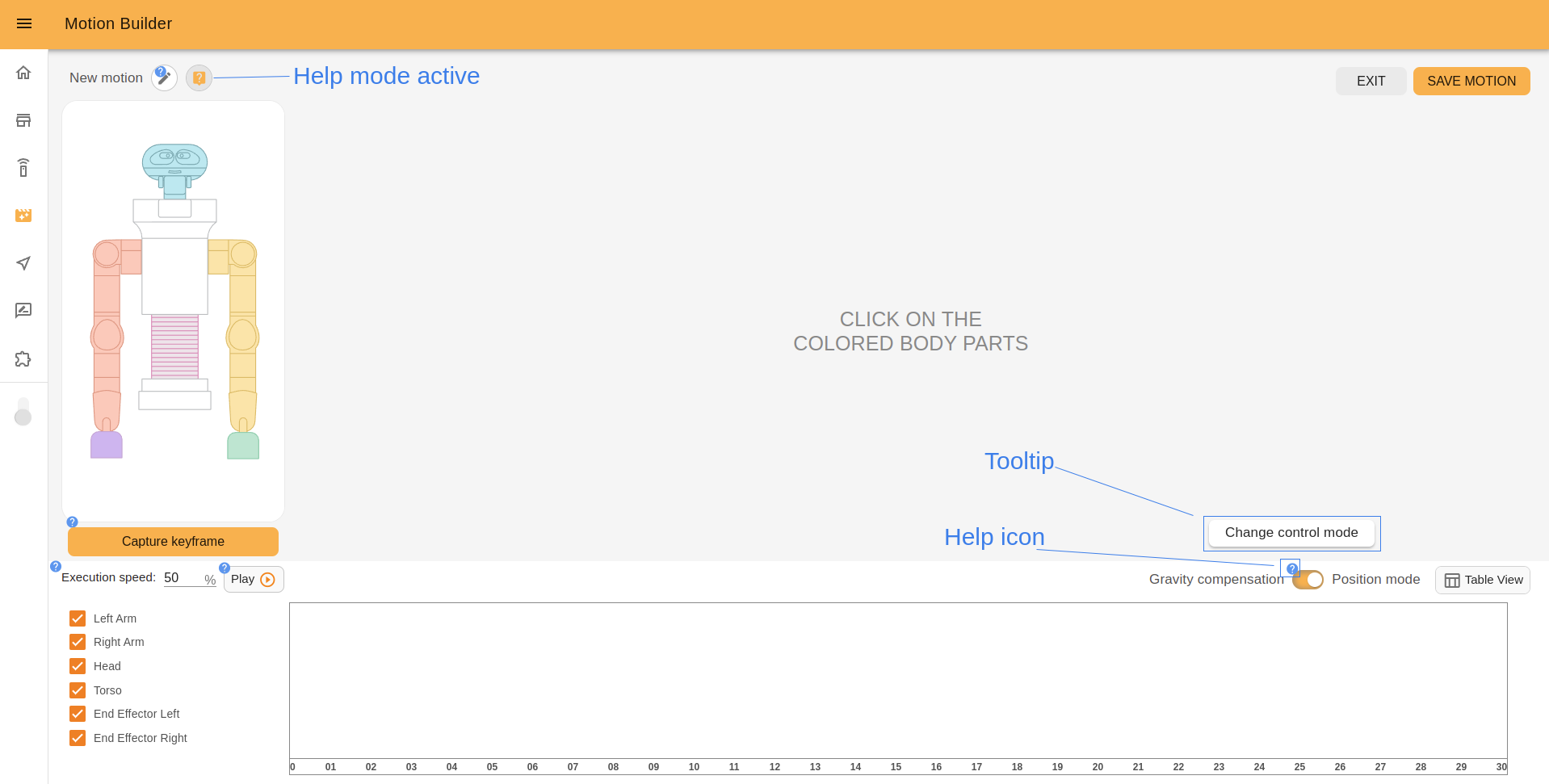

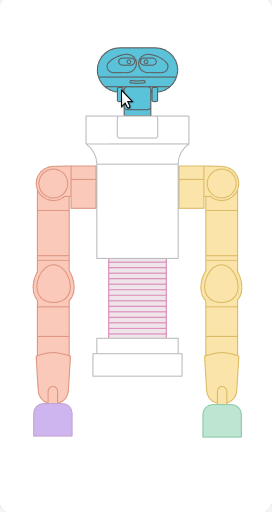

Step 2. Action’s type. You can create buttons of type “Motion” (see 14.8 Motion Builder), shown with a blue circle, to make the robot perform a movement; “Presentation”, shown with a dark brown circle, to execute one of your prerecorded presentations (see 14.7 Presentations); or “Custom speech”, shown with an orange circle, to make the robot say a written text. In this example we chose “Custom speech”.

The list of available action types depends on the installed web apps and on the robot.

Step 3. Action’s details. Here you define what TIAGo++ will do when this button is clicked. For “Motion” or “Presentation”, choose an option from the list; for “Custom speech”, type the text for TIAGo++ to say and choose the corresponding language.

The “TEST NOW” button lets you try your button before saving it. If everything works, click “DONE” to save and close the button editor.

After creating all the buttons you need, click “SAVE” at the top right of the page to exit Edit mode and return to the Command desk page.

To execute a command, press its button.

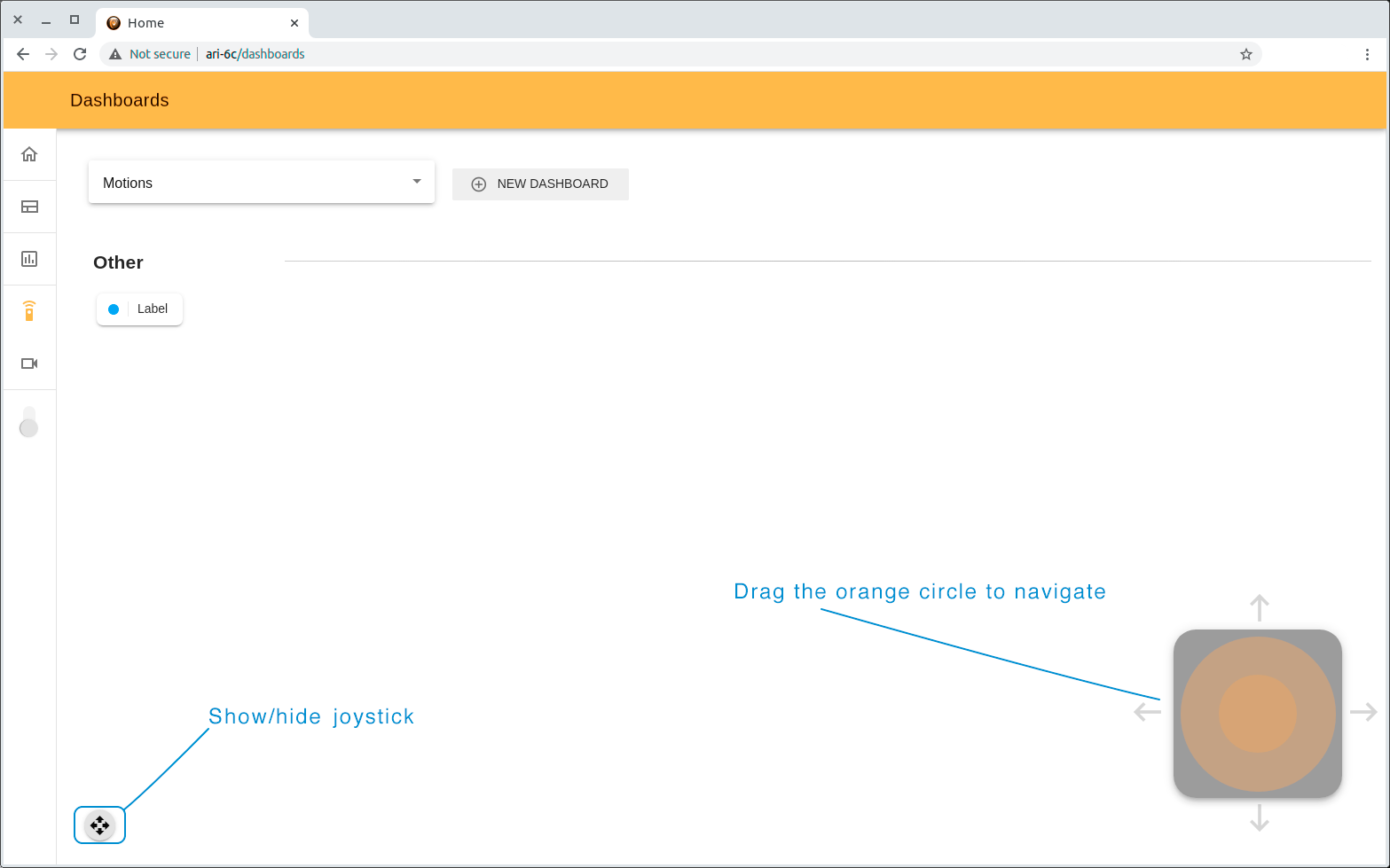

14.6.2 Joystick¶

With this web app you can also drive the robot. The small joystick button at the bottom of the page opens a virtual joystick. Drag the orange circle in the direction you want the robot to move.

14.7 Presentations¶

Presentations is a web app that allows you to create prerecorded presentations (combinations of speech, motions, touchscreen content and LED effects) and execute them.

The features of the Presentations tool include:

Creating, Editing, Deleting presentations and presentation slides

Adding custom text-to-speech in a chosen language

Adding motions (previously created in Motion Builder)

Managing the content on the touchscreen and its duration

Choosing and tuning the LED effects

Managing all possible combinations of presentation elements on a graphical timeline

Storing and executing the presentation

The interface of Presentations consists of a list of the presentations and a user-friendly editor, where you can create, edit and test presentations from your PC or mobile devices.

14.7.1 Homepage interface¶

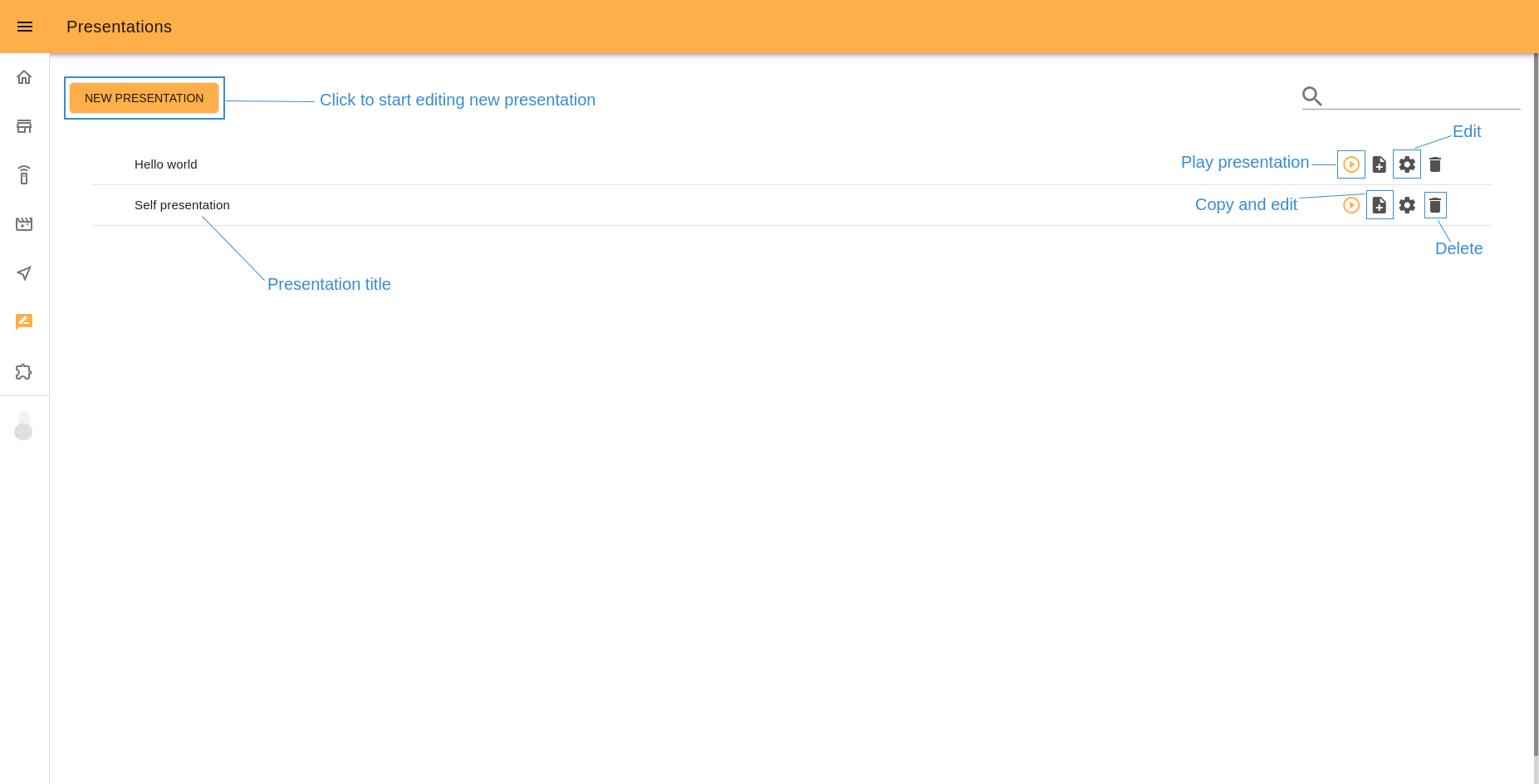

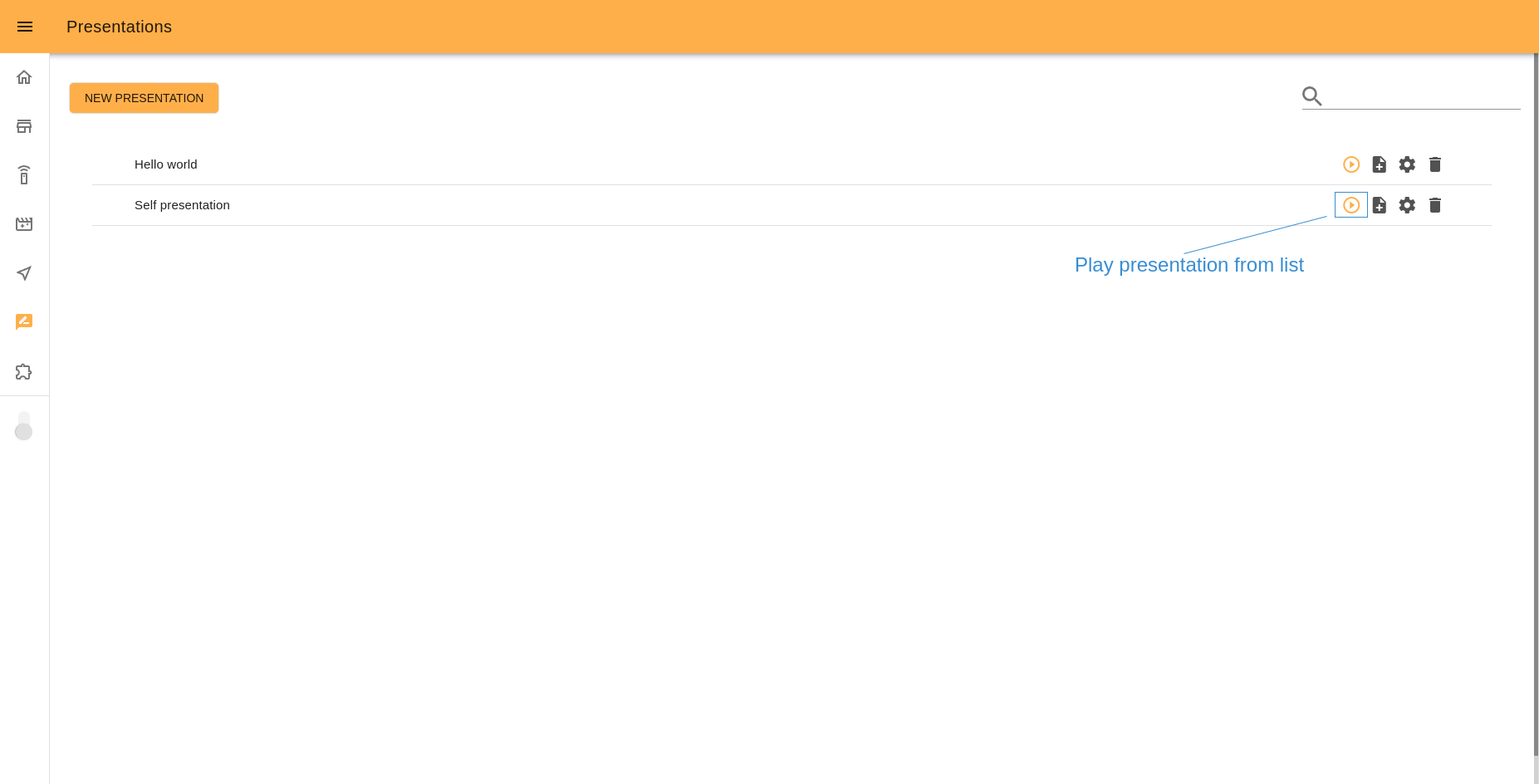

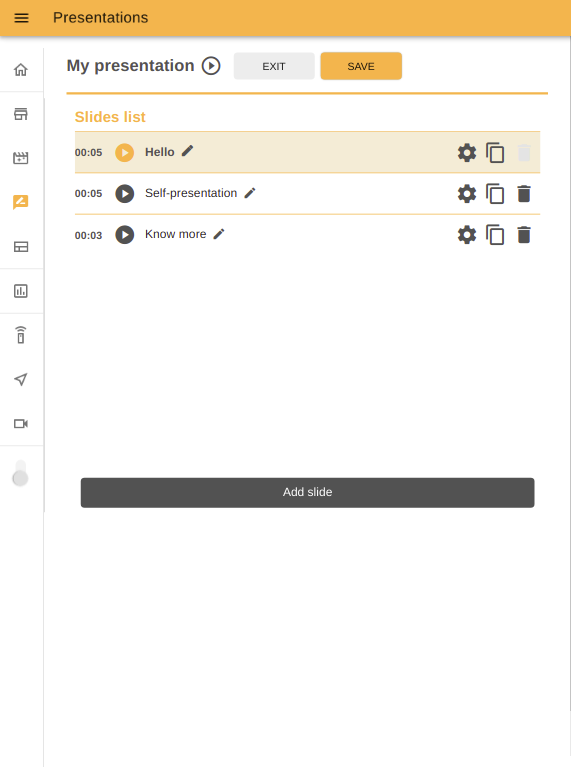

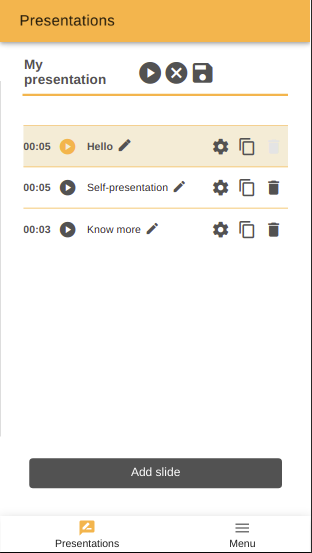

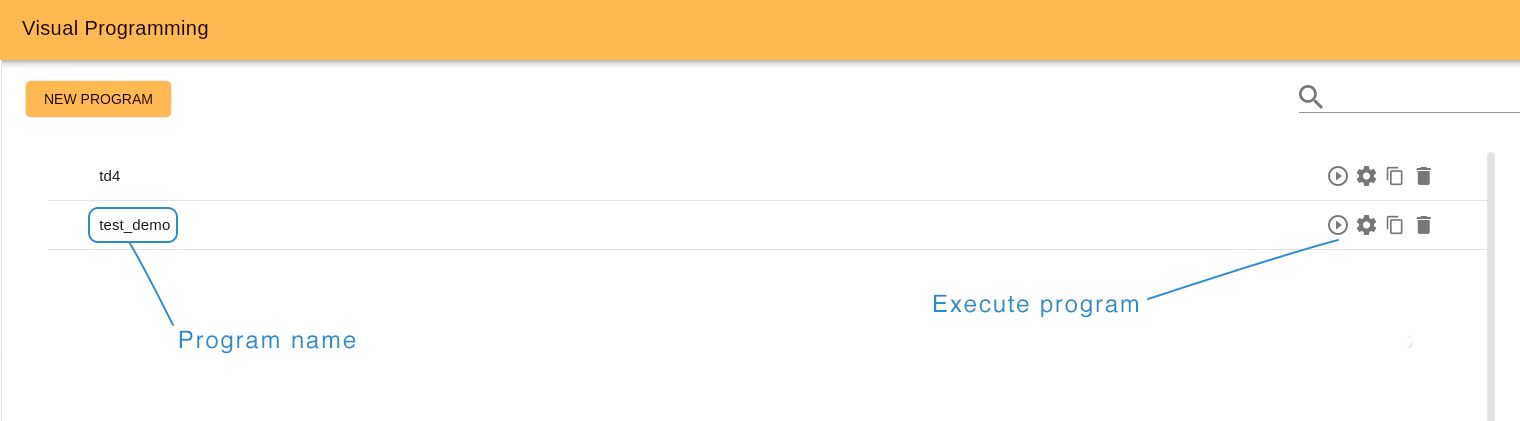

The start page of Presentations not only displays the list of stored presentations but also lets you manage them. From here you can execute, edit or delete any presentation. To find a presentation quickly, use the search-by-title bar.

To open the Presentations editor click the “Create new presentation” button or “Edit” icon of an existing presentation.

14.7.2 Editor interface¶

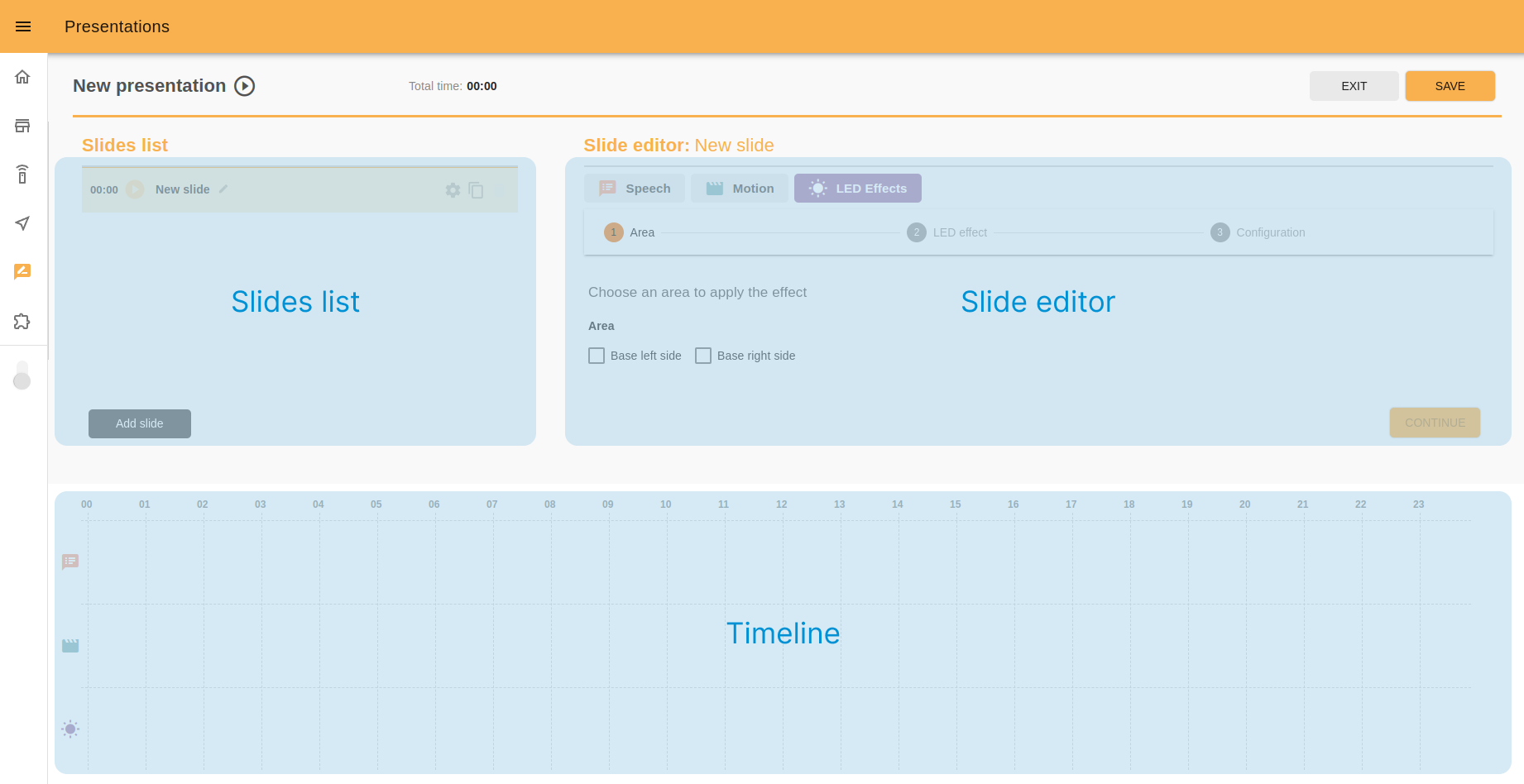

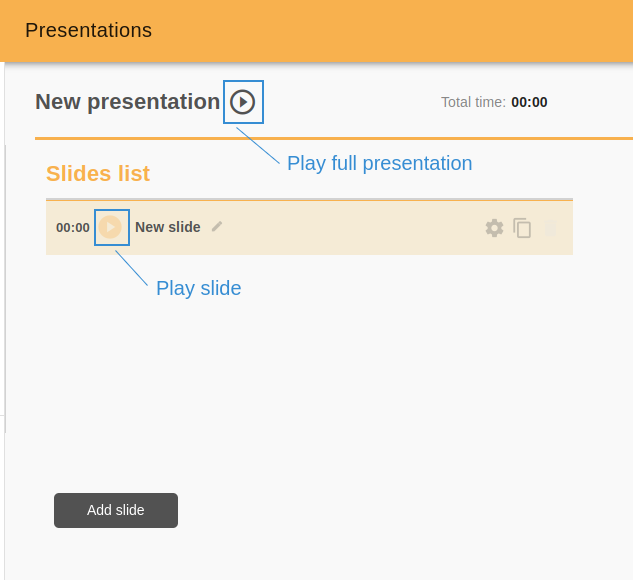

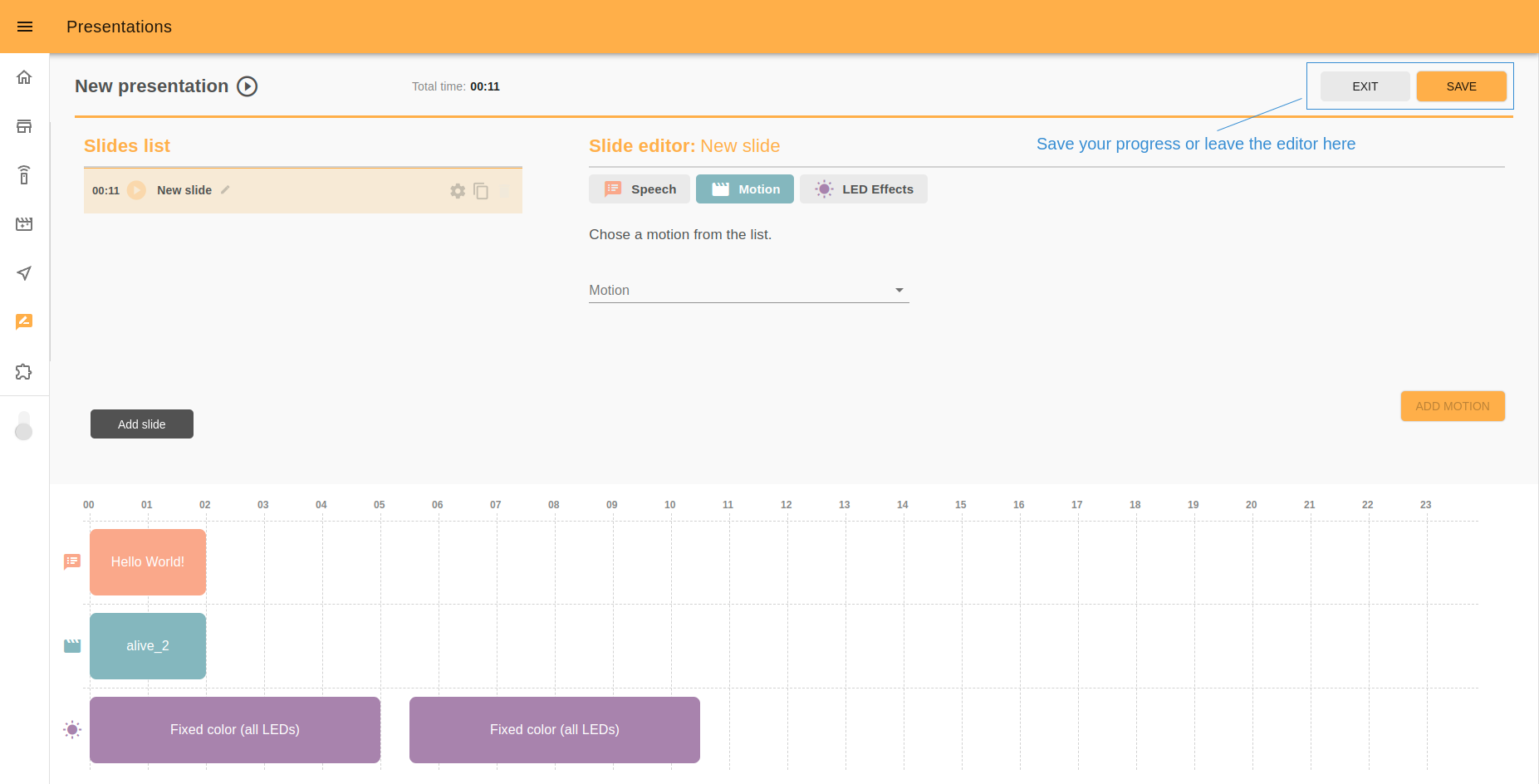

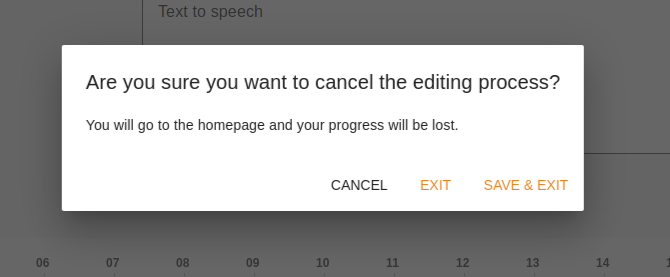

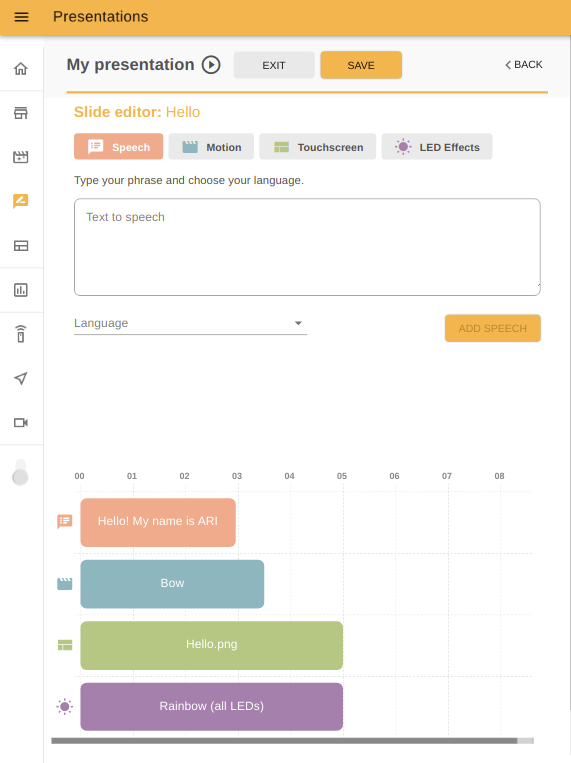

This section describes the Presentations editor interface and how to interact with it. The picture below shows the main parts of the editor: the slides list, the slide editor and the timeline.

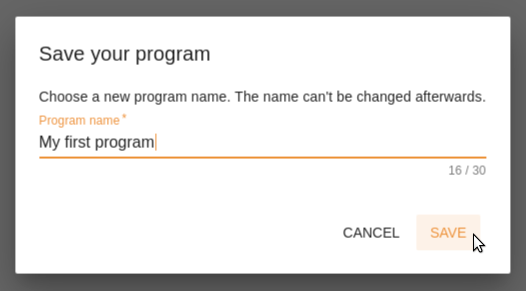

14.7.3 Presentation name¶

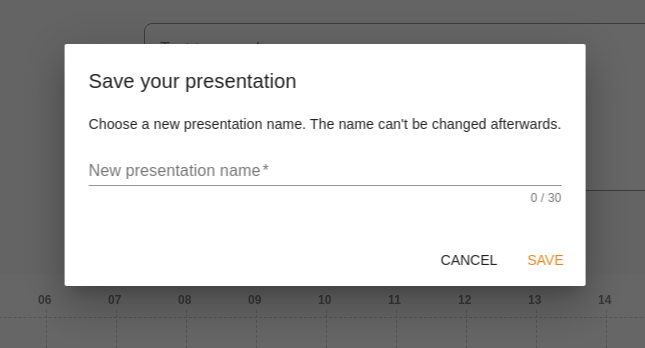

The top-left corner of the page shows the current presentation name, next to the Play presentation icon.

When you click the “Save” button for a new presentation, a popup with a text input asks you to set the presentation title. This step is performed only once: an existing presentation cannot be renamed afterwards.

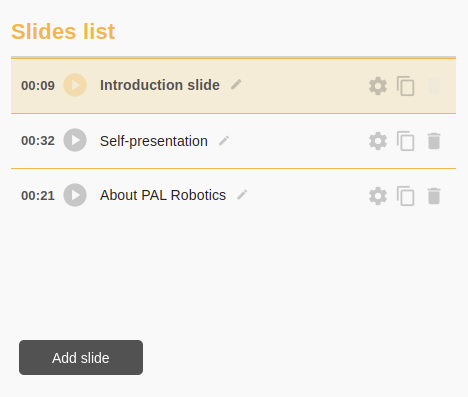

14.7.4 Slides list¶

It is good practice to divide your presentation into slides following the logic of your use case. In the slides list you can add new slides and rename, delete, edit or play slides individually.

The slide you are currently editing is highlighted with a different color style.

14.7.5 Slide editor¶

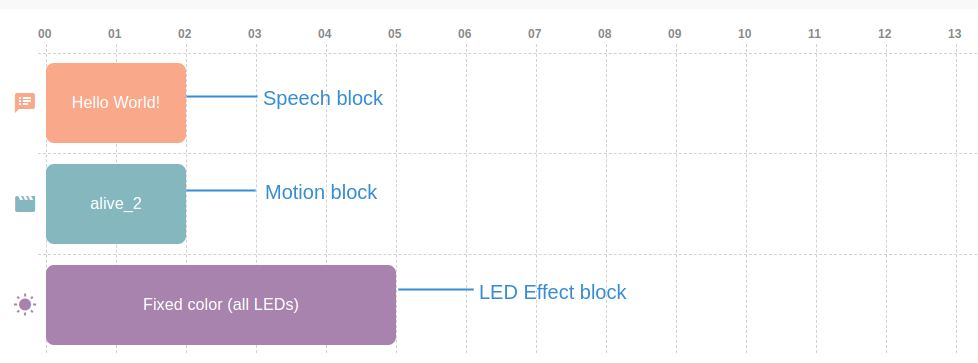

In this panel you can edit the elements you want to include in your presentation. There are currently four tabs: Speech, Motion, Touchscreen and LED effects.

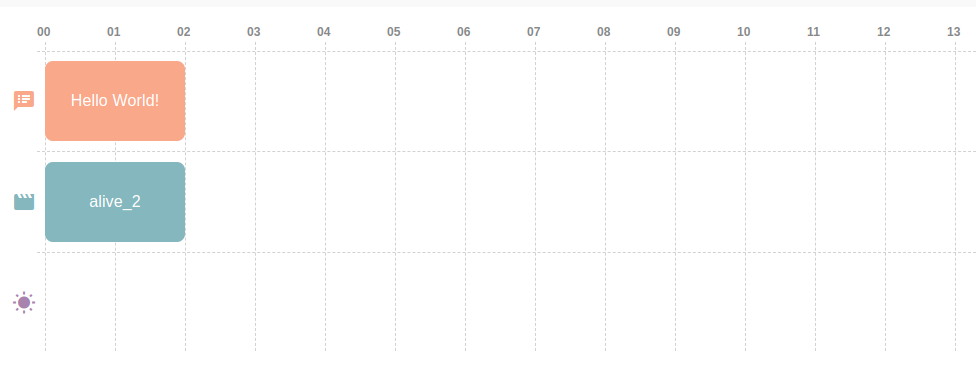

To add text-to-speech, choose the “Speech” tab, type the desired phrase in the text input, choose the corresponding language and click the “ADD” button.

A new block with the same color as the “Speech” tab will appear on the timeline below. The duration of each text block is computed by the robot, so you cannot make TIAGo++’s speech faster or slower. However, you can divide your text into short phrases or individual words, if needed, and add them to the presentation one by one.

To add a motion to your presentation go to the “Motion” tab, choose the needed motion from the list and click the “ADD” button.

A new block with the “Motion” tab color will appear on the second row of the timeline below. The duration of motions and other settings should be edited in the 14.8 Motion Builder app.

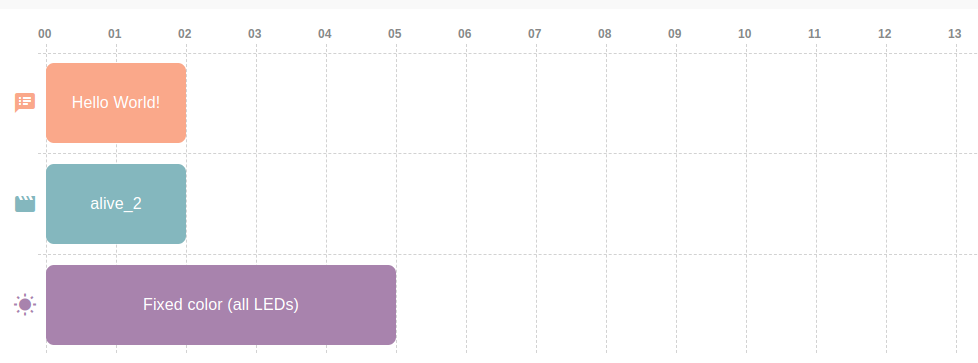

To manage LED effects, use the last tab. First, choose the robot devices that should play the effect.

Second, choose the effect you want; depending on the effect, the third step may differ slightly.

Tune the timing of the effect, set its duration in seconds (the quick-option buttons set a 3 or 5 second duration in one click) and save the changes.

Again, a new block appears on the timeline, this time on the last row.

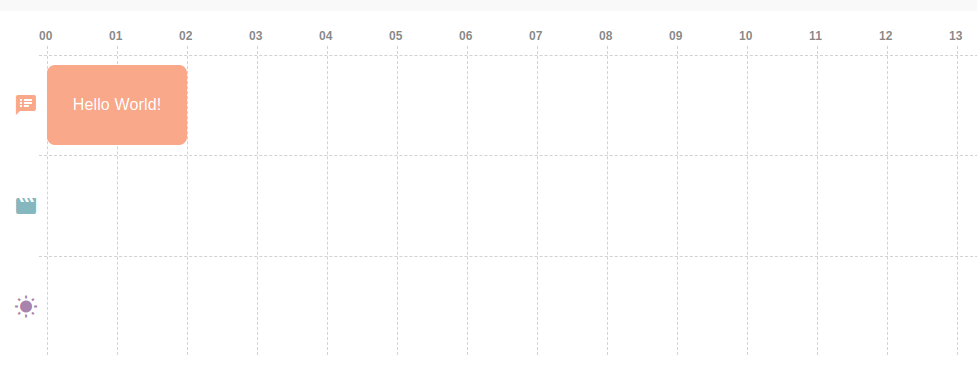

14.7.6 Timeline¶

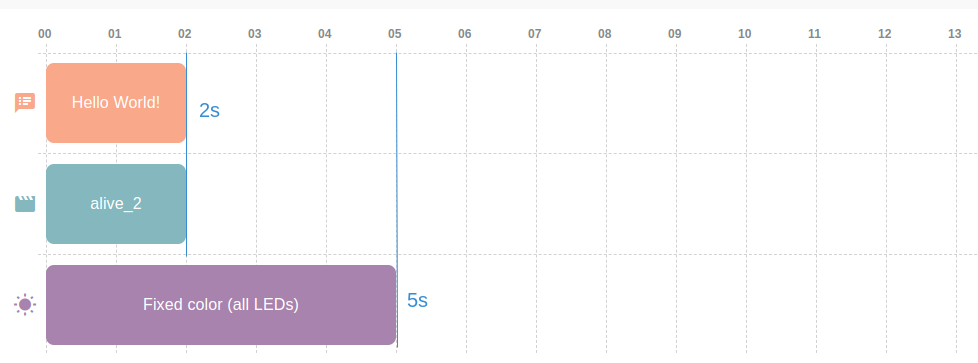

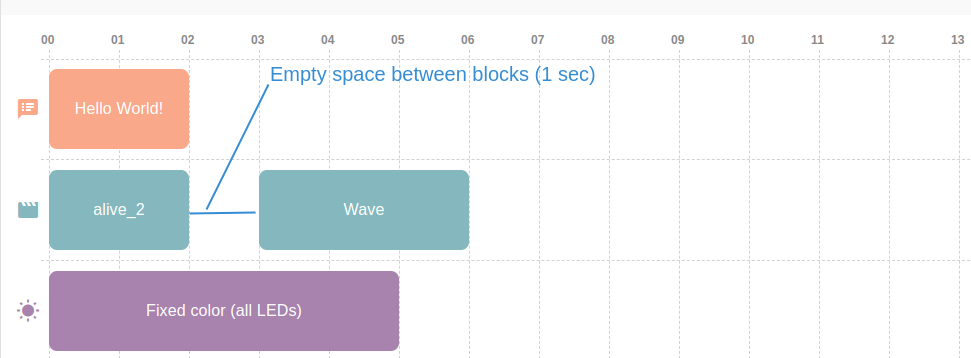

After you add a new element to the presentation, it appears on the timeline as a colored block. The colors match those of the slide editor tabs: for example, pink for speech blocks, blue for motions, etc.

Each type of presentation element has its own row on the timeline: the first row only ever contains pink speech blocks, the second row motions, and the third row LED effects.

The width of each block corresponds to its duration; seconds are marked on the timeline by vertical lines.

You can also leave space between blocks to separate their execution or to delay the start of a block.

You can drag blocks with the cursor, or by touch on tablets, to set the position of each one on the timeline. This way you can make several actions start at the same time (for example, saying “Hello” while making a “Waving” movement) and create pauses between blocks.

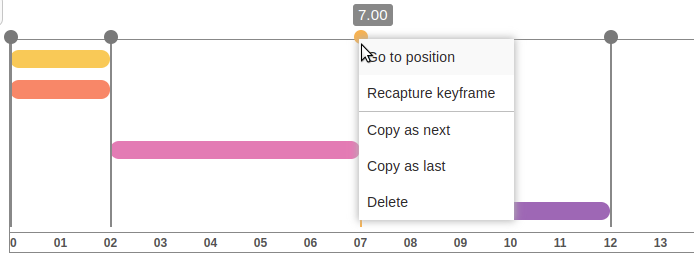

Click a block to select it; its color changes. The settings of the selected block are shown in the slide editor tab, which opens automatically, where you can edit them and save the changes.

You can also copy the selected block, as the next block or as the last one, or delete it, using the small icons that appear at the top of the block when it is selected.
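The timeline rules above (one row per element type, width proportional to duration, gaps acting as pauses, blocks in different rows starting together) can be illustrated with a small Python model. This is a hypothetical sketch for reasoning about timing only: `Block`, `slide_length` and `pauses` are invented names, not the Presentations app's internal data format.

```python
# Hypothetical model of a slide's timeline; not the Presentations app's format.
from dataclasses import dataclass

ROWS = ("speech", "motion", "led")  # first, second and third timeline rows


@dataclass
class Block:
    row: str         # one of ROWS
    label: str
    start: float     # seconds from the start of the slide
    duration: float  # seconds; drawn as the block's width

    def __post_init__(self):
        if self.row not in ROWS:
            raise ValueError(f"unknown row {self.row!r}")


def slide_length(blocks):
    """Time at which the last block finishes."""
    return max((b.start + b.duration for b in blocks), default=0.0)


def pauses(blocks, row):
    """Return the (start, end) gaps in one row, including a delay before its first block."""
    in_row = sorted((b for b in blocks if b.row == row), key=lambda b: b.start)
    gaps = []
    cursor = 0.0
    for block in in_row:
        if block.start > cursor:
            gaps.append((cursor, block.start))
        cursor = max(cursor, block.start + block.duration)
    return gaps


blocks = [
    Block("speech", "Hello", 0.0, 2.0),   # starts together with the wave
    Block("motion", "Waving", 0.0, 4.0),
    Block("led", "Blink", 5.0, 3.0),
    Block("speech", "Goodbye", 6.0, 2.0),
]
print(slide_length(blocks))      # → 8.0
print(pauses(blocks, "speech"))  # → [(2.0, 6.0)]
```

In this model, a gap at the start of a row delays that row's first block, which corresponds to leaving empty space before a block on the timeline.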

14.7.7 Play presentation¶

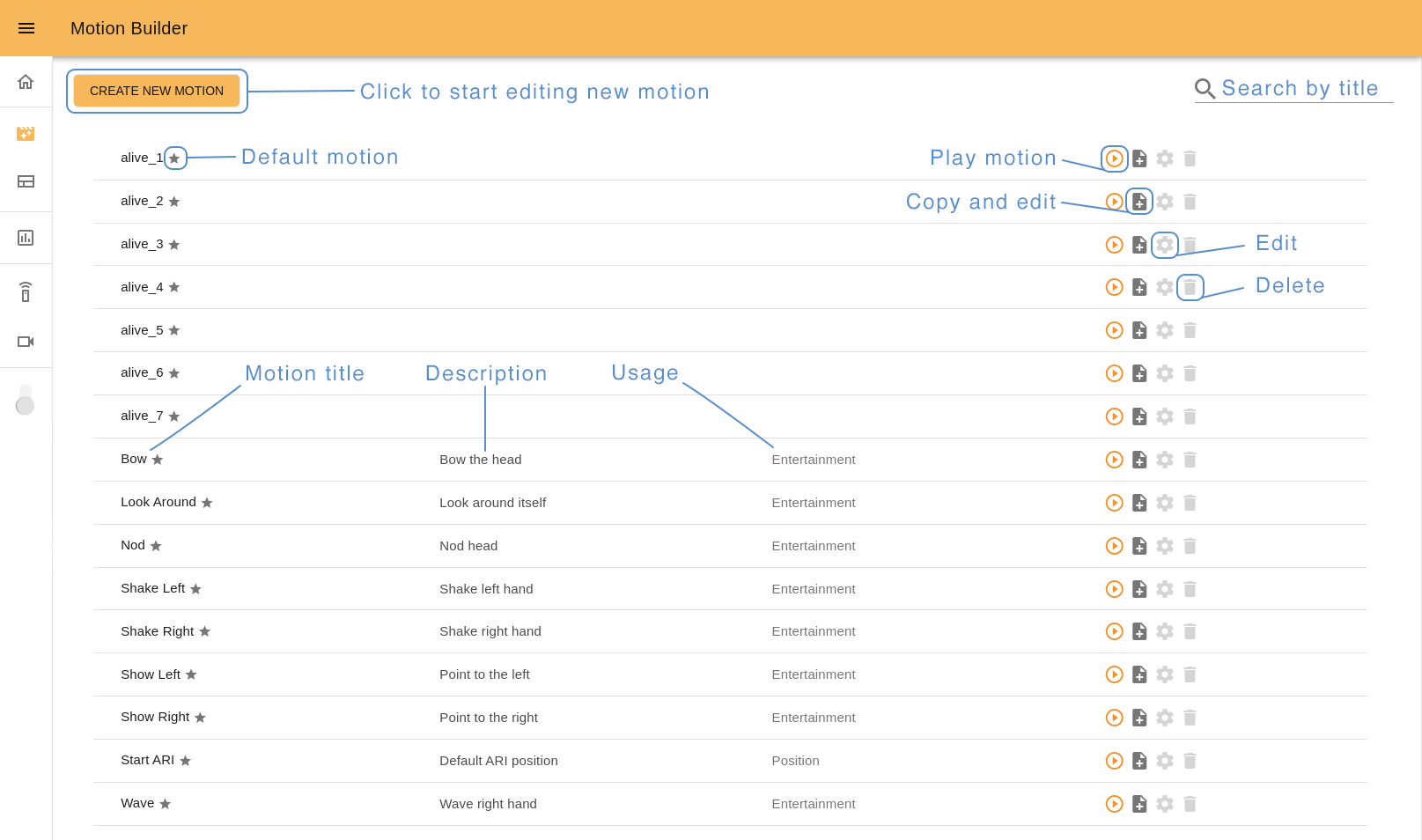

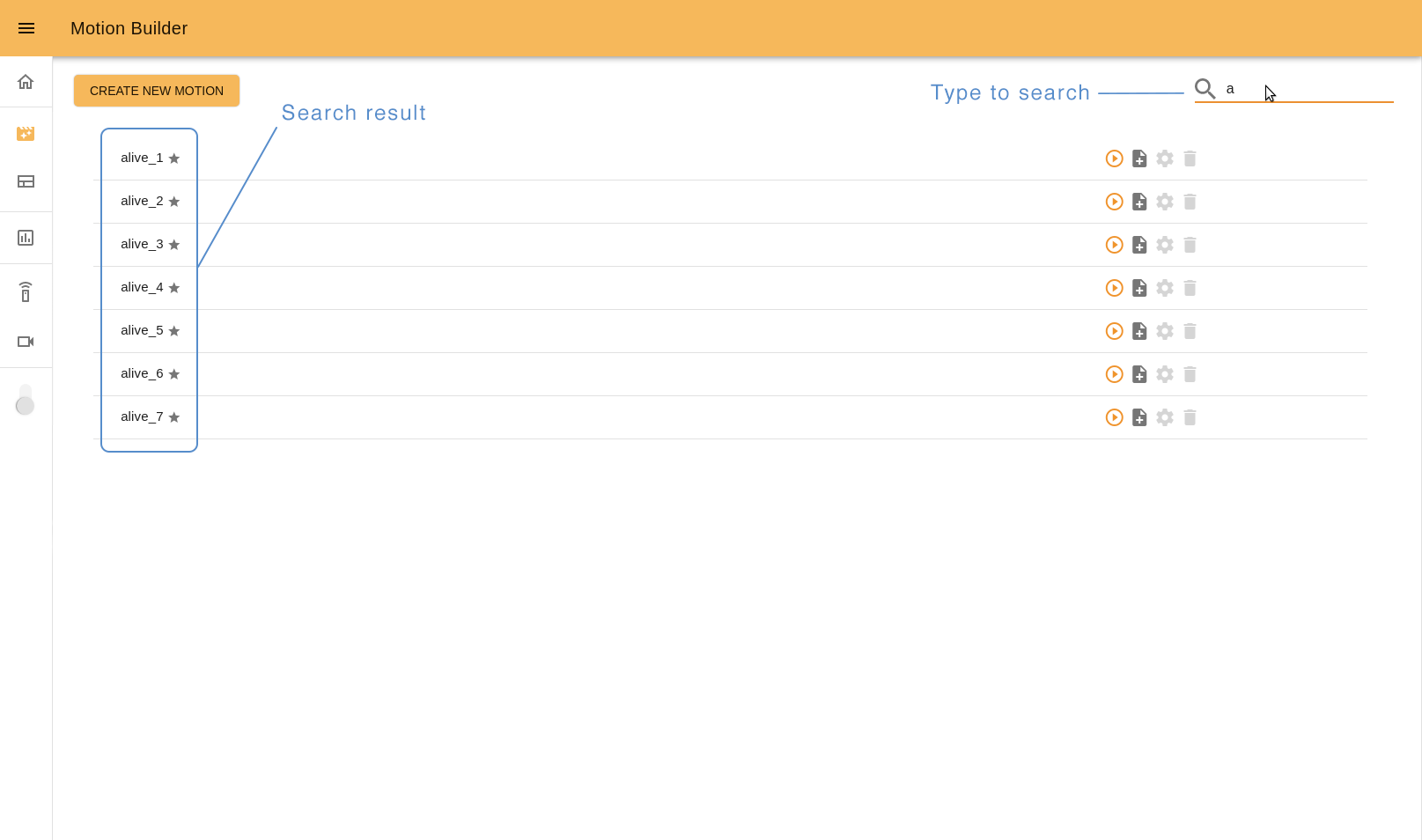

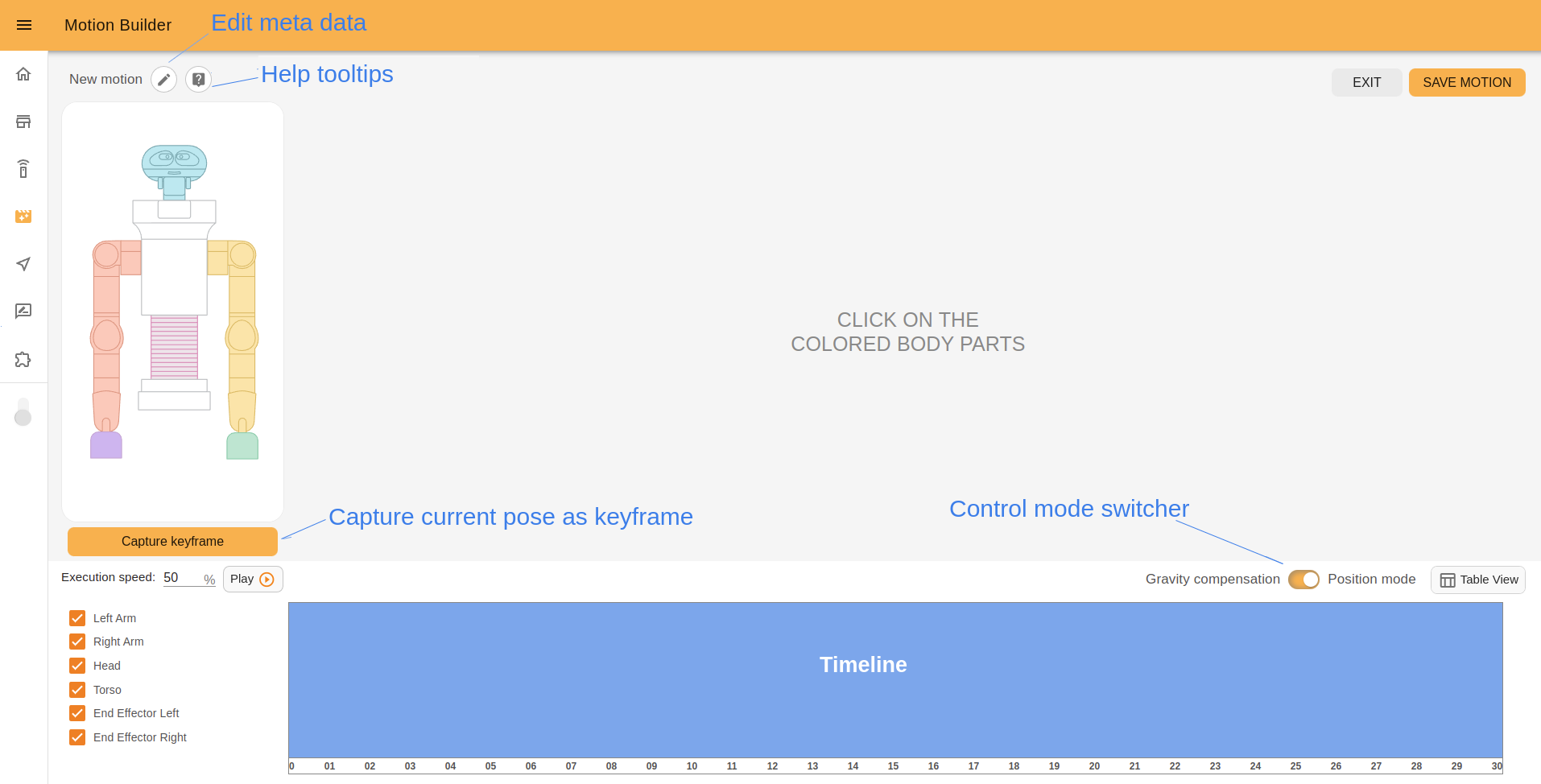

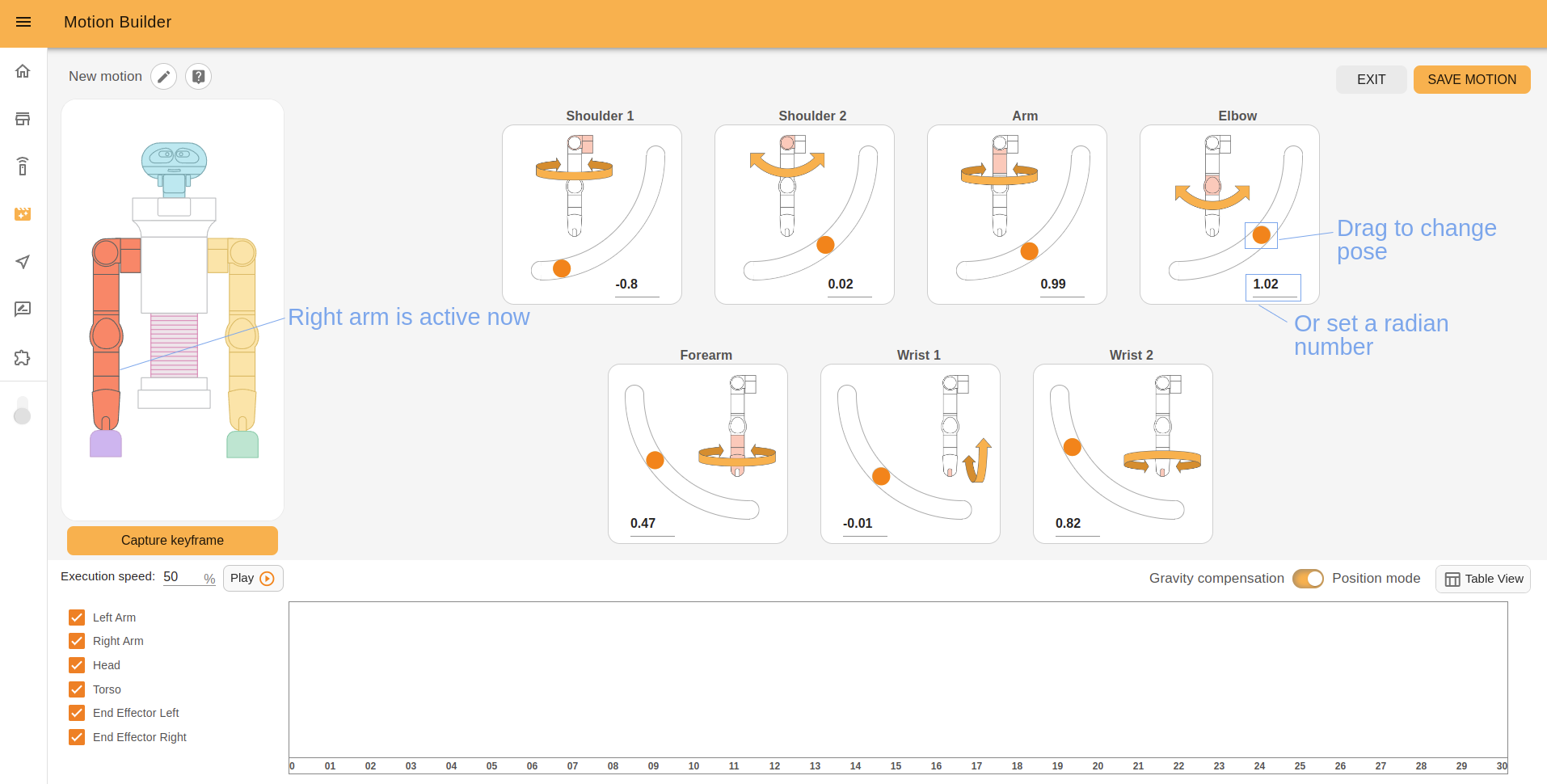

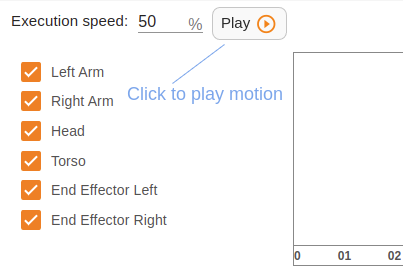

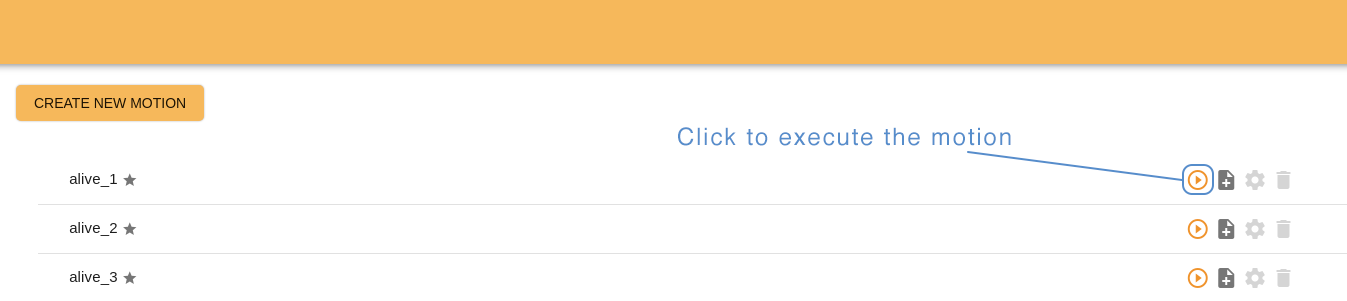

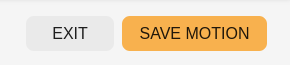

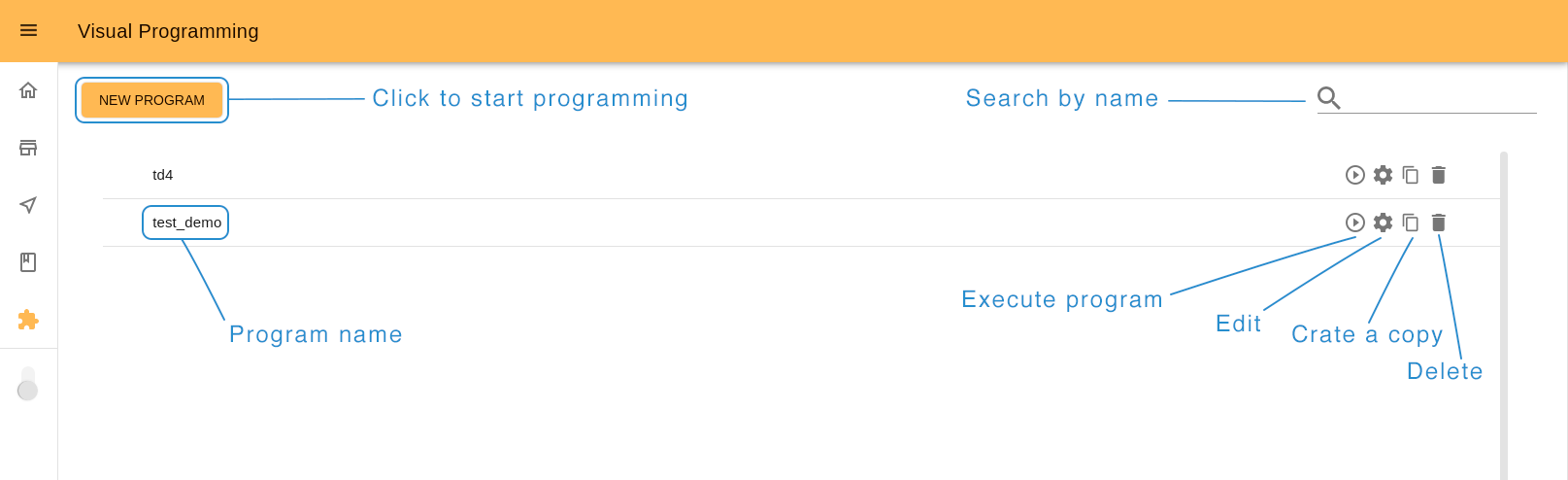

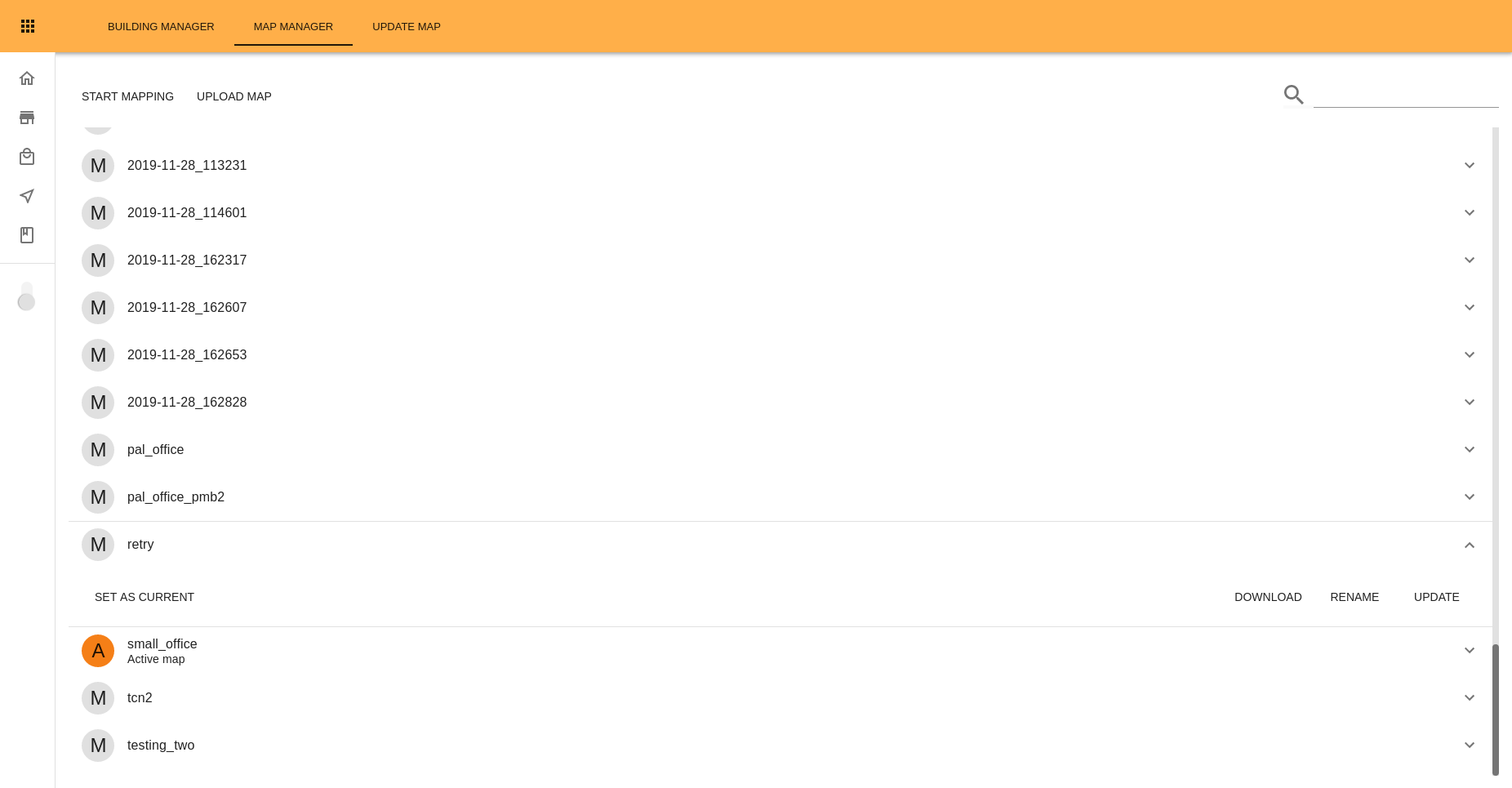

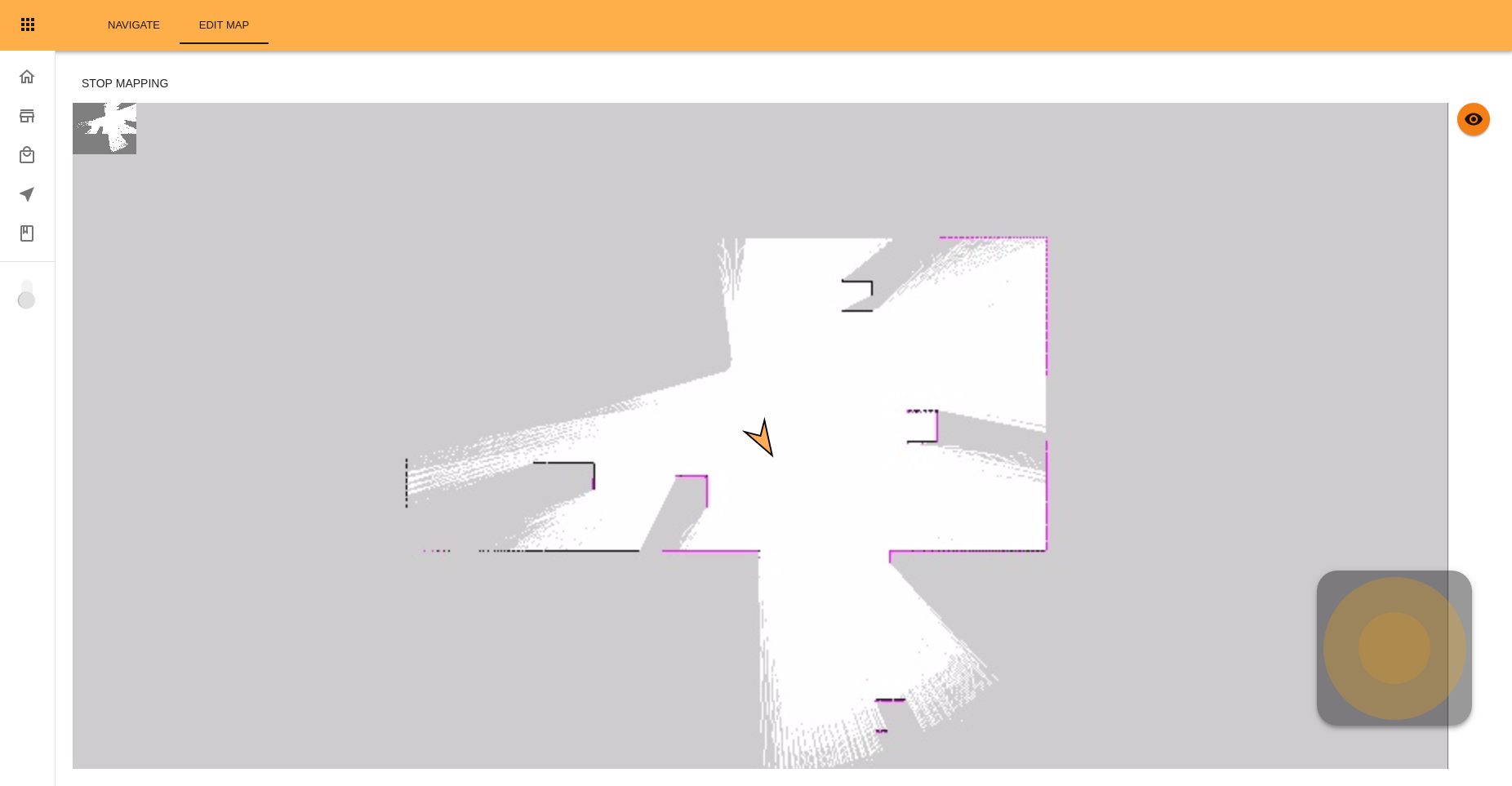

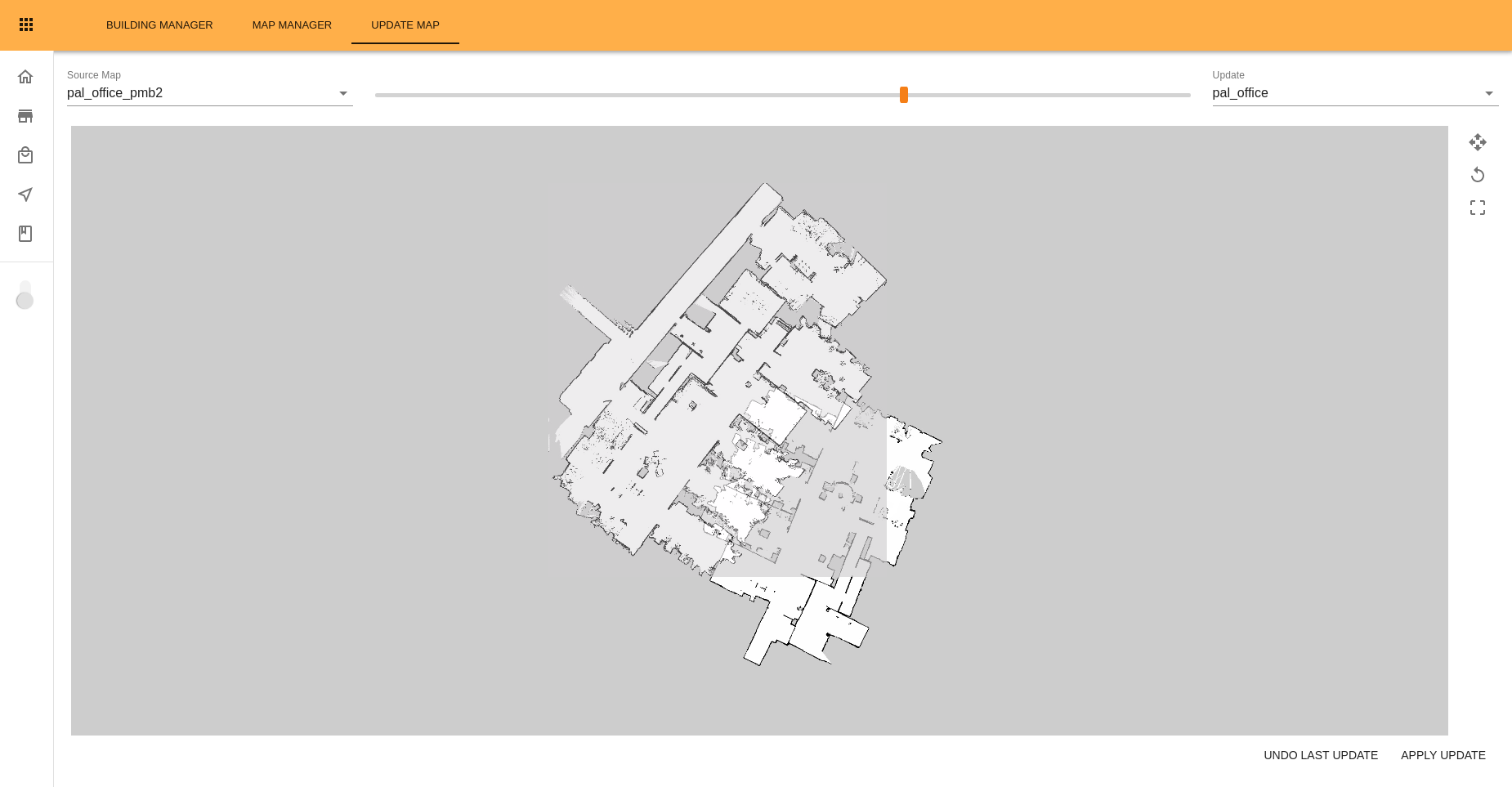

Since a presentation consists of slides, you can play each slide individually while editing the presentation: click the “Play” icon next to the slide title.