1 ARI User Manual

Thank you for choosing PAL Robotics. This User Manual contains information related to the ARI robot developed by PAL Robotics. Every effort has been made to ensure the accuracy of this document. All the instructions must be strictly followed for proper product usage. The software and hardware described in this document may be used or replicated only in accordance with the terms of the license pertaining to the software or hardware. Reproduction, publication, or duplication of this manual, or any part of it, in any manner, physical, electronic or photographic, is prohibited without the explicit written permission of PAL Robotics.

1.1 General Disclaimers

ARI Disclaimer

ARI and its components and accessories are provided “as is” without any representations or warranties, express or implied. PAL Robotics makes no representations or warranties in relation to its products or the information and materials related to them, other than the ones expressly written in this User Manual. In no event shall PAL Robotics be liable for any direct, indirect, punitive, incidental, special or consequential damages to property or life, whatsoever, arising out of or connected with the use or misuse of ARI or the rest of our products.

User Manual Disclaimer

Please note that each product application may be subject to standard of identity or other regulations that may vary from country to country. PAL Robotics does not represent that ARI will comply with such regulations in any country. It is the user’s responsibility to ensure that the incorporation and labeling of ARI complies with the regulatory requirements of their markets.

No warranties

This User Manual is provided “as is” without any representations or warranties, express or implied. PAL Robotics makes no representations or warranties in relation to this User Manual or the information and materials provided herein. Although we make a reasonable effort to include accurate and up to date information, without prejudice to the generality of this paragraph, PAL Robotics does not warrant that the information in this User Manual is complete, true, accurate or non-misleading. The ARI User Manual is provided solely for informational purposes. You should not act upon information without consulting PAL Robotics, a distributor, subsidiary or appropriate professional.

Limitations of liability

PAL Robotics will not be liable (whether under the law of contract, the law of torts or otherwise) in relation to the contents of, or use of, or otherwise in connection with, this User Manual:

to the extent that this User Manual is provided free-of-charge, for any direct loss;

for any indirect, special or consequential loss; or

for any business losses, loss of revenue, income, profits or anticipated savings, loss of contracts or business relationships, or loss of reputation or goodwill.

These limitations of liability apply even if PAL Robotics has been expressly advised of the potential loss.

Exceptions

Nothing in this User Manual Disclaimer will exclude or limit any warranty implied by law that it would be unlawful to exclude or limit; and nothing in this User Manual Disclaimer will exclude or limit PAL Robotics’s liability in respect of any:

personal injury caused by PAL Robotics’s negligence;

fraud or fraudulent misrepresentation on the part of PAL Robotics; or

matter which it would be illegal or unlawful for PAL Robotics to exclude or limit, or to attempt or purport to exclude or limit, its liability.

Reasonableness

By using this User Manual, you agree that the exclusions and limitations of liability set out in this User Manual Disclaimer are reasonable. If you do not think they are reasonable, you must not use this User Manual.

Other parties

You accept that PAL Robotics has an interest in limiting the personal liability of its officers and employees. You agree that you will not bring any claim personally against PAL Robotics’s officers or employees in respect of any losses you suffer in connection with the User Manual. Without prejudice to the foregoing paragraph, you agree that the limitations of warranties and liability set out in this User Manual Disclaimer will protect PAL Robotics’s officers, employees, agents, subsidiaries, successors, assigns and sub-contractors, as well as PAL Robotics.

Unenforceable provisions

If any provision of this User Manual Disclaimer is, or is found to be, unenforceable under applicable law, that will not affect the enforceability of the other provisions of this User Manual Disclaimer.

2 ARI

2.1 What is ARI

ARI is PAL Robotics’ humanoid platform specifically created for Human-Robot Interaction and to perform front-desk activities. ARI is a high-performance robotic platform designed for a wide range of multimodal expressive gestures and behaviors, suitable for Human-Robot Interaction, perception, cognition and navigation. It is important to clarify the intended usage of the robot prior to any kind of operation. ARI is designed to be operated in a controlled environment, under supervision by trained staff at all times. ARI’s hardware and software enable research and development activities in the following areas:

Navigation and SLAM

Manipulation

Perception

Speech recognition

Human-Robot interaction

2.2 Package contents

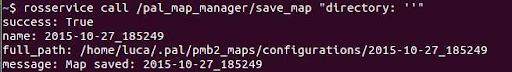

This section includes a list of items and accessories that come with ARI. Make sure the items in the following list are present:

ARI Robot

Battery Charger and Charger Adapter

USB flash drive with installation software

Docking station (optional)

Joystick (optional)

NVIDIA Jetson (optional)

2.3 ARI components

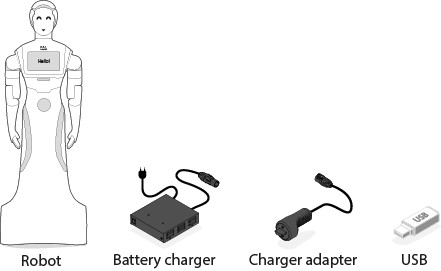

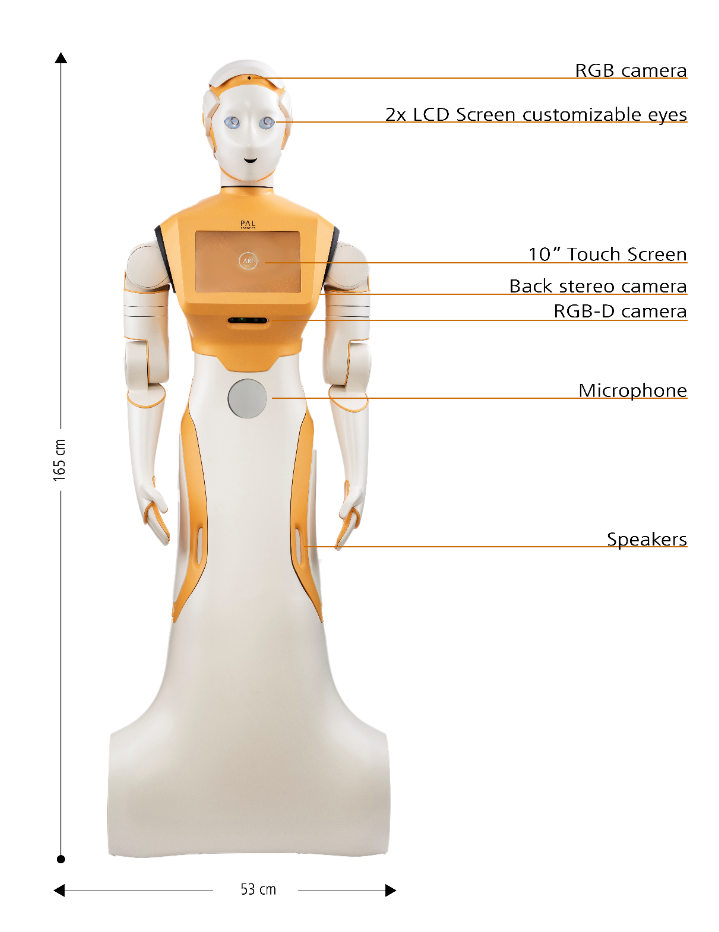

The following is a list of ARI’s components:

Humanoid Torso

2 DoF head

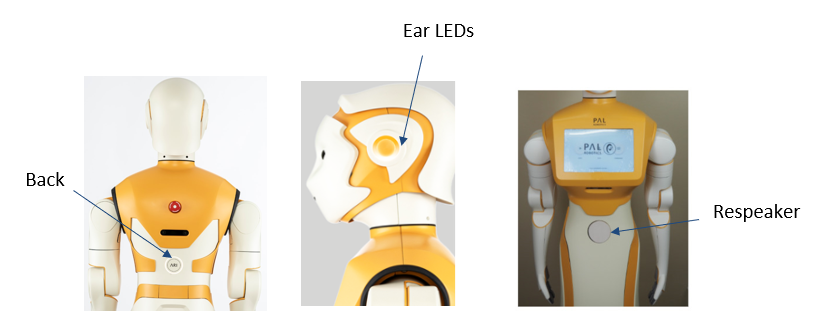

16 RGB LEDs per ear

Eyes LCD screens with custom animations

40 RGB LED ring on the back

Touchscreen 10.1” 1200x800 Projected Capacitive

802.11 a/b/g/n/ac/ad 5 GHz and 2.4 GHz

Ethernet 1000 BaseT

4 x High Performance Digital Microphones array

Optional head camera: Head Camera Sony 8MegaPixel (RGB), Head Intel Realsense D435i (RGB-D).

Torso Camera Intel Realsense D435i (RGB-D)

Torso Back Intel Realsense D265 (stereo-fisheye)

2x HiFi Full-range Speakers

Thermal camera (optional)

2.3.1 Battery

ARI comes with a 40 Ah Li-ion battery that provides eight hours of autonomy before charging is needed. It can be charged manually or by using the docking station. Below are further details on battery options:

Both the manual charger and the docking station can charge ARI from a completely empty battery to fully charged in five hours.

Increased autonomy can be achieved with a 60 Ah battery pack, which lasts 12 hours and charges in eight hours.
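As a rough consistency check on these figures, dividing each pack's nominal capacity by its stated runtime gives the average current drawn, and dividing it by the charging time gives the average charging current. This is a back-of-the-envelope sketch that ignores charging efficiency, the tapering of the charge current near full charge and variations in load, so actual currents will differ:

```latex
\begin{aligned}
\bar{I}_{\text{draw}}   &= \frac{40\ \text{Ah}}{8\ \text{h}} = 5\ \text{A},
  &\qquad \bar{I}_{\text{draw}}   &= \frac{60\ \text{Ah}}{12\ \text{h}} = 5\ \text{A},\\
\bar{I}_{\text{charge}} &= \frac{40\ \text{Ah}}{5\ \text{h}} = 8\ \text{A},
  &\qquad \bar{I}_{\text{charge}} &= \frac{60\ \text{Ah}}{8\ \text{h}} = 7.5\ \text{A}.
\end{aligned}
```

Both packs imply the same average draw, so the 60 Ah pack extends runtime in proportion to its extra capacity.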

2.3.2 Onboard computer

The specifications of ARI’s onboard computer depend on the configuration options that you have requested. The available options are:

| Component | Options |
|---|---|
| CPU | Intel i5/i7 |
| RAM | 8/16/32 GB |
| Hard disk | 250 GB or 500 GB |
| Wi-Fi | Yes |

2.3.3 Electric Switch¶

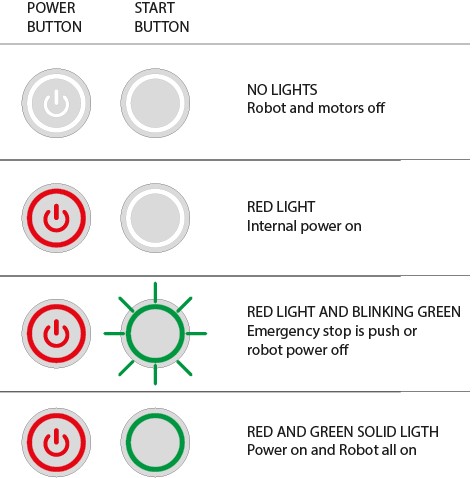

The Power Button is the main power control switch. Before turning ARI ON make sure first that this switch is ON, i.e. its red light indicator is ON.

When ARI is not going to be used for a long period, press the switch so that its red light indicator turns OFF.

Please note: This switch should not be turned OFF before using the ON/OFF button to turn OFF the onboard computer of the robot.

Turning OFF this switch will instantaneously cut the power supply to all the robot components, including the onboard computer. Do not use this switch as an emergency stop. For the emergency stop please refer to Emergency Stop.

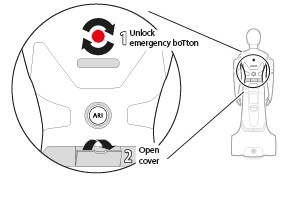

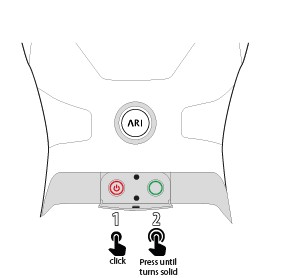

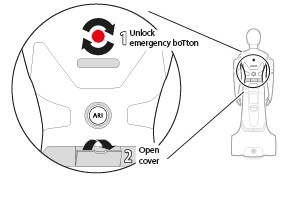

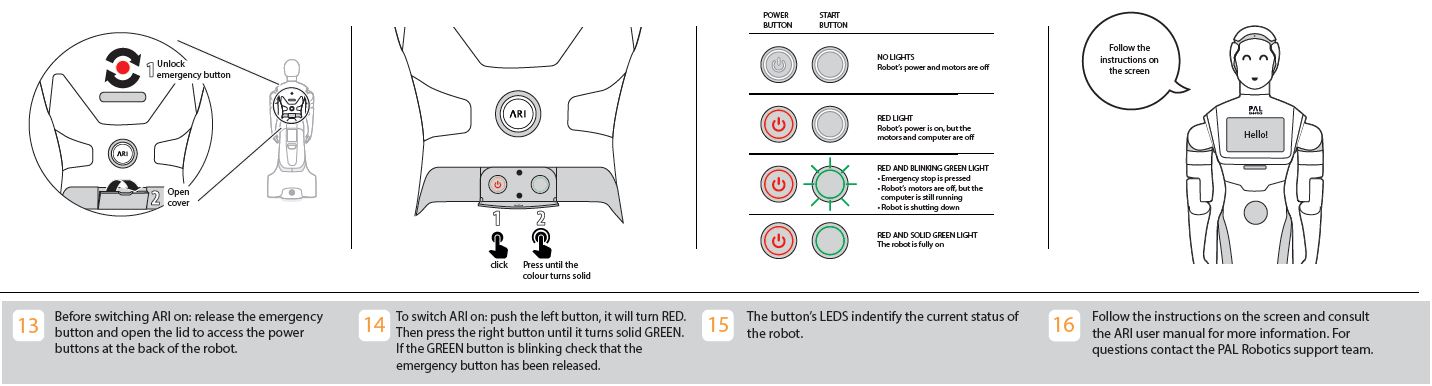

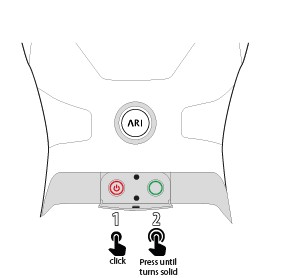

Before switching ARI ON: release the emergency button and open the lid to access the power buttons at the back of the robot.

To switch ARI ON: push the left button; it will turn RED. Then press the right button until it turns solid GREEN. If the GREEN button is blinking, check that the emergency button has been released.

2.3.4 Connectivity

ARI is equipped with a dual-band wireless card with the following specifications: 802.11a/b/g/n/ac, 5 GHz, 867 Mbps, Bluetooth 4.4, LTE capable.

2.4 ARI description

ARI’s main dimensions are summarized in the table below:

| Parameter | Value |
|---|---|
| Height | 165 cm |
| Width | 53 cm |
| Depth | 75 cm |
| DoF head | 2 |
| DoF arms | 4 (×2), optional |
| DoF hands | 1 (×2), optional |
| DoF mobile base | 2 |

2.4.1 Payload

ARI’s arm payload, maximum speed, battery life, computing power, AI, communication and DoF are summarised in the following table:

| Parameter | Value |
|---|---|
| Arm payload | 0.5 kg |
| Max speed | 1.5 m/s |
| Battery life | Up to 12 h |
| Computing power | Intel i5/i7, up to 32 GB RAM |
| AI | NVIDIA® embedded GPU |
| Communication | Wi-Fi, Bluetooth, USB, Ethernet |
| DoF | Up to 14 |

2.4.2 User panel¶

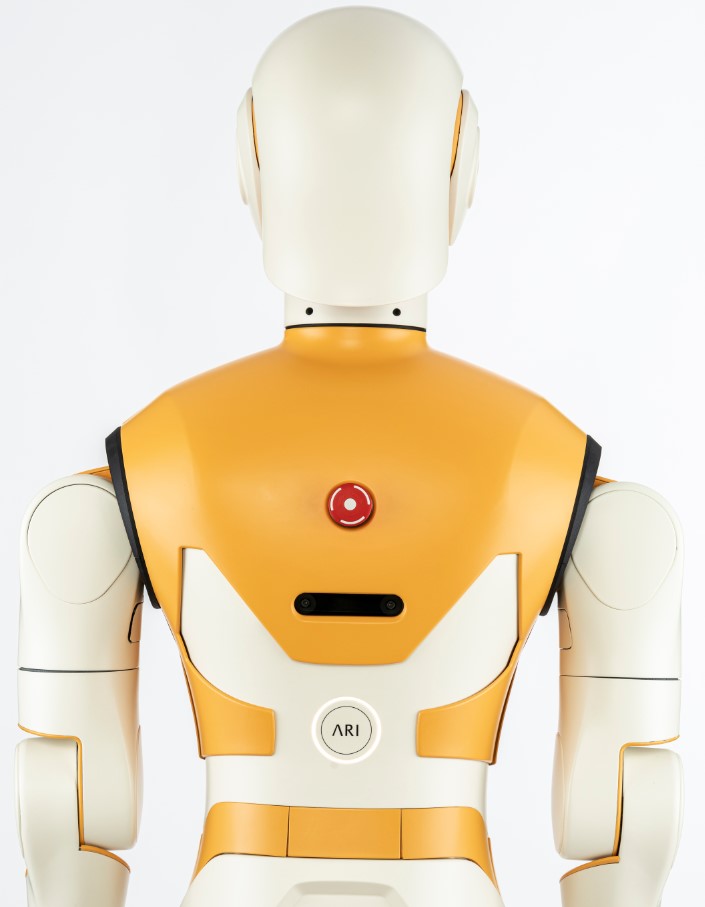

It is possible to access the user panel by removing the cover as shown in the figure below:

2.4.3 Main PC connectors

These are as follows:

1 × Gigabit Ethernet

2 × USB 3.0

HDMI

Audio in/out port

2.4.4 External power connectors

These are as follows:

12 V / 3 A power

2.4.5 NVIDIA GPU embedded PC

The details are as follows:

2 × USB 3.0

Jetson buttons

3 Regulatory

Safety is important when working with ARI. This section provides an overview of safety issues, general usage guidelines to maintain safety, and some safety-related design features. Read these instructions carefully to ensure the safety of people and surroundings and to prevent damage to the environment and to the robot. Follow these instructions every time the robot is used.

3.1 Safety

Read the following safety precautions before setting up, using and maintaining the robot. Incorrect handling of this product could result in personal injury or physical damage to the robot. The manufacturer assumes no responsibility for any damage caused by mishandling or by use beyond the normal usage defined in this manual.

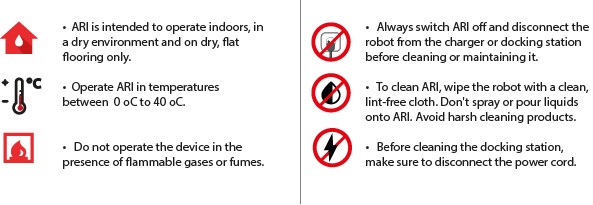

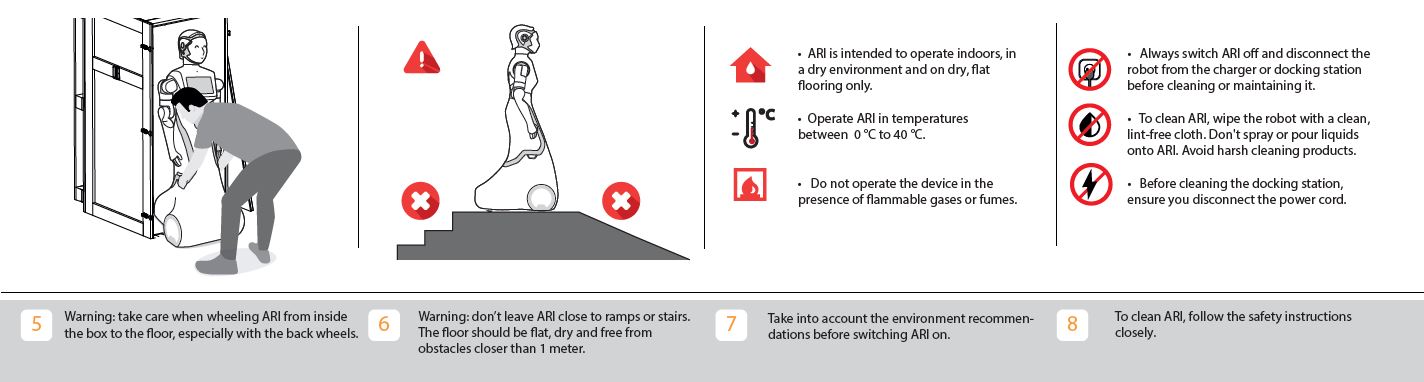

Environment

ARI is intended to operate and be stored indoors, in a dry environment and on dry, flat flooring only. Operating or storing ARI in different conditions may result in damage to the product.

ARI is designed to operate in temperatures between 0 °C and 40 °C (32 °F and 104 °F). Operating the product outside of this temperature range may result in damage to the product.

Do not operate the device in the presence of flammable gases or fumes. Operation of any electrical instrument in such an environment constitutes a definite safety hazard.

Usage

Take care while handling ARI. Do not apply any physical pressure or impact when operating ARI.

When ARI is running, do not place your fingers, hair, or other appendages on or near moving parts such as ARI’s neck, arms, hands, or wheels.

Children under the age of 13 must not use or interact in any way with ARI without adult supervision at all times.

Do not leave pets unattended around ARI.

Do not operate the robot outdoors.

Before using ARI’s autonomous navigation mode (follow, go-to, patrol or telepresence), ensure the floor is clear of small objects.

Do not place hot drinks, liquids in open containers, or flammable or fragile objects on ARI.

When ARI is moving, ensure that all people or pets are at a safe distance of at least 0.5 meters from the device at all times.

Keep ARI away from steep drops such as stairs, ledges and slopes.

Do not intentionally drive ARI into people, animals or objects.

Do not tie anything to ARI or use it to drag objects, pets or people.

Do not accessorize ARI or cover ARI with any fabrics, tapes or paint.

Be wary of driving ARI over wires, thresholds and carpets as they may harm ARI’s wheels or motor.

Do not point direct light or lasers at ARI, as this may impair the performance of the sensors.

Do not manually move ARI while the robot is in autonomous navigation mode (follow, go-to, patrol or telepresence).

Do not break, scratch, paint or draw on ARI’s screen.

Keep ARI on ground surfaces. Do not place ARI on chairs, tables, counters etc.

Keep ARI in an upright position at all times.

Do not use ARI in completely dark settings.

Keep ARI away from flames and other sources of heat.

Do not stand or place anything on the docking station.

The space where ARI operates should have a flat floor and be free of hazards, particularly stairways and other drop-offs.

Avoid sharp objects (such as knives), sources of fire, hazardous chemicals or furniture that could be knocked over.

The terrain must be capable of supporting the weight of the robot. It must be horizontal and flat. Avoid carpets, which could cause the robot to trip.

Make sure the environment is free from objects that could pose a risk if knocked, hit, or otherwise affected by ARI.

Make sure there are no cables or ropes that could be caught in the covers or wheels; these could pull other objects over.

Be aware of the location of emergency exits and make sure the robot cannot block them.

Avoid the use or presence of magnetic devices near the robot.

Power

ARI comes with a regionally approved power supply cord. Do not use any other power supply cord. If the cord or jack is damaged, it must be replaced. For replacement cords, please contact the customer support team.

The docking station is designed to be plugged into a 100-240 V 50/60 Hz standard outlet. Using any power converters will immediately void the warranty.

If you live in an area prone to electrical storms, it is recommended that you use additional surge protection on the power outlet to which the docking station cable is connected.

Use ARI with the installed battery only. Battery replacement is to be performed only by the official ARI customer service team.

Do not disassemble or modify the battery. The battery contains safety and protection devices, which, if damaged, may cause the battery to generate heat, explode or ignite.

Do not immerse the battery pack in any liquid.

Maintenance

Always disconnect ARI from the docking station before cleaning or maintaining the robot.

Do not handle ARI or the docking station with wet hands or fluids.

To clean ARI, wipe the robot with a clean, lint-free cloth. Don’t spray or pour liquids onto ARI. Avoid harsh cleaners.

Before cleaning the docking station, ensure the power cord is disconnected.

Do not disassemble ARI or the docking station. Refer servicing only to qualified and authorized personnel.

If you notice any missing, broken or falling parts, stop using ARI immediately and contact customer support.

Do not use ARI if the product box is open or damaged when you receive it. Contact the customer support team.

3.1.1 Warning: safety measures in practice

Please read our additional product warnings below. Failure to follow these warnings will invalidate our limited warranty.

ATTENTION! PRODUCT WARNINGS ARE LISTED BELOW:

DO NOT OPERATE THE DEVICE IN THE PRESENCE OF FLAMMABLE GASES OR FUMES.

DO NOT PLACE FINGERS, HAIR, OR OTHER APPENDAGES ON OR NEAR MOVING PARTS OF THE PRODUCT.

CHILDREN MUST NOT OPERATE THE DEVICE WITHOUT ADULT SUPERVISION AT ALL TIMES.

DO NOT LEAVE PETS UNATTENDED AROUND ARI.

WARNING Chemical Exposure: Do not allow battery liquid to come into contact with skin or eyes. If contact occurs, wash the affected area with plenty of water and promptly seek medical advice. Immediately contact the customer support team.

WARNING Fire or Explosion Hazard: Do not crush or dismantle battery packs. Do not heat or place the battery pack near any heat source or direct sunlight. Do not incinerate or short-circuit the battery pack. Do not subject batteries to mechanical shock.

WARNING Heat sources and direct sunlight: Do not place ARI’s docking station near any heat source or in direct sunlight. Do not touch or short-circuit the charging contacts on ARI’s docking station.

Contact your local waste management authority for battery recycling and disposal regulations in your area.

3.1.2 Emergency Stop

The emergency stop button can be found on the back of the robot. As the name implies, this button should be used only in exceptional cases where the robot must be stopped immediately. To activate the emergency stop, push the button. To deactivate it, rotate the button clockwise, following the indications on the button, until it pops out.

Pushing the Emergency button turns off the power to ARI’s motors. Be careful when using this emergency stop action, because switching the motors OFF causes the head and arms to drop down.

Computers and sensors will NOT be powered down. To restore normal functioning after releasing the Emergency button, press the green PC button until it stops blinking.

When the button is pushed, motors are stopped and disconnected. The green indicator of the ON/OFF button blinks fast to notify the user of the emergency state. To resume normal behaviour, a two-step validation process must be executed.

First, release the emergency button by rotating it clockwise; then press the ON/OFF button for one second. The green light indicator on the ON/OFF button will then change to a solid state.

3.1.3 Fire fighting equipment

For correct use of ARI in a laboratory or location with safety conditions, it is recommended to have in place a C Class or ABC Class fire extinguisher (based on halogenated products), as these extinguishers are suitable for stifling an electrical fire. If a fire occurs, please follow these instructions:

Call the fire service.

Push the emergency stop button, as long as you can do so without any risk

Only tackle a fire in its very early stages

Always put your own and others’ safety first

Upon discovering the fire, immediately raise an alarm

Make sure the exit remains clear

Fire extinguishers are only suitable for fighting a fire in its very early stages. Never tackle a fire if it is starting to spread or has spread to other items in the room, or if the room is filling with smoke.

If you cannot stop the fire or if the extinguisher runs out, get yourself and everyone else out of the building immediately, closing all doors behind you as you go. Then ensure the fire service is on their way.

3.1.4 Measures to prevent falls¶

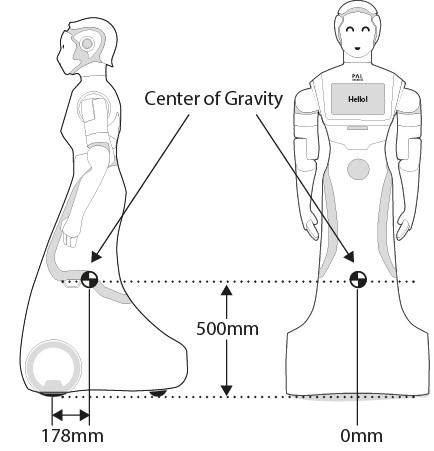

ARI has been designed to be statically stable, due to its low center of mass, fixed torso, and mass distribution with very lightweight arms. even when the arms are holding its maximum payload in its most extreme kinematic configuration. Nevertheless, some measures need to be respected in order to avoid the robot tipping over.

Measure 1

Do not apply external downward forces to the arms when they are extended.

Measure 2

ARI has been designed to navigate in flat floor conditions. Do not navigate on floors with unevenness higher than 5%.
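Measure 2 does not state how the 5% figure is measured. Reading it as a grade (5 cm of rise per 1 m of horizontal run), which is an assumption, the corresponding inclination angle is:

```latex
\theta = \arctan(0.05) \approx 0.0500\ \text{rad} \approx 2.86^\circ
```

Under this reading, any slope steeper than roughly 3° falls outside the flat-floor conditions ARI is designed for.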

Measure 3

Avoid navigating close to downward stairs, as ARI’s RGB-D camera will not detect this situation and the robot may fall down the stairs.

3.1.5 Measures to prevent collisions

Most collisions occur when moving ARI’s arm. It is important to take the following measures into account in order to minimize the risk of collisions.

3.1.6 Battery leakage

The battery is the only component of the robot that is able to leak. To avoid leakage of any substance from the battery, follow the instructions below to ensure that the battery is handled and used correctly.

The following guidelines must be respected when handling the robot in order to prevent damage to the robot’s internal batteries.

Do not expose the robot to fire.

Do not expose the robot to water or salt water, or allow the robot to get wet.

Do not open or modify the robot. Avoid, in all circumstances, opening the internal battery case.

Do not expose the robot to temperatures above 49 °C for over 24 hours.

Do not store the robot at temperatures below -5 °C for more than 7 days.

For long-term storage (more than 1 month) charge the battery to 50%.

Do not use ARI’s batteries for any other purpose.

Do not use any devices except the supplied charger to recharge the battery.

Do not drop the batteries.

If any damage or leakage is observed, stop using the battery.

3.2 Robot Identification (label)

The robot is identified by a physical label. This label contains:

Business name and full address.

Designation of the machine.

Part Number (P.N.).

Year of construction.

Serial number (S.N.).

4 Getting started with ARI

4.1 Unboxing ARI

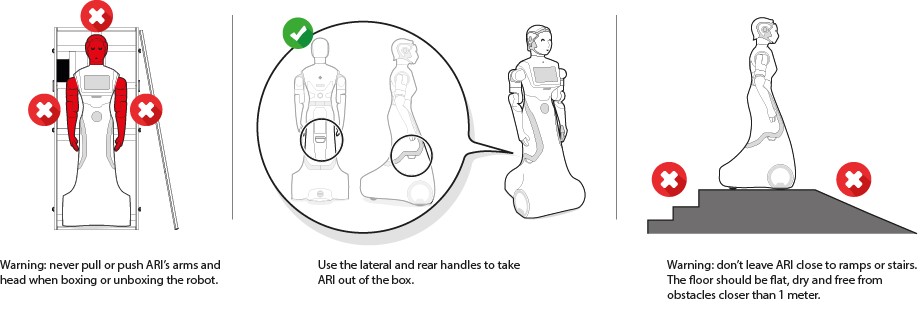

ARI comes with a Quick Start guide. Below are the instructions from the Quick Start guide on how to unbox the robot:

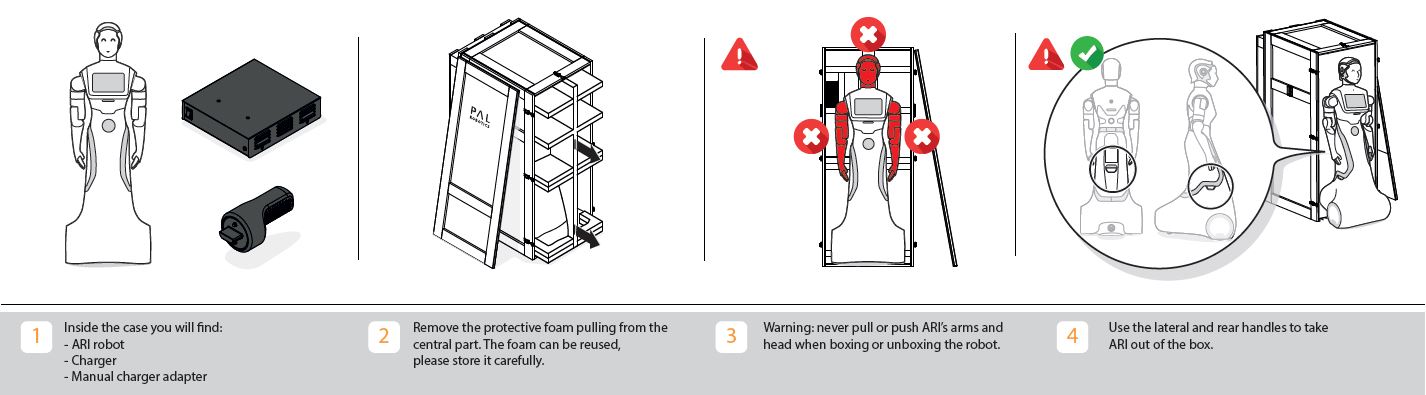

Inside the case you will find the ARI robot, the charger and the manual charger adapter.

Remove the protective foam, pulling from the central part. The foam can be reused, please store it carefully.

Warning: never pull or push ARI’s arms and head when boxing or unboxing the robot.

Use the lateral and rear handles to take ARI out of the box.

Warning: take care when wheeling ARI from inside the box to the floor, especially with the back wheels.

Warning: don’t leave ARI close to ramps or stairs. The floor should be flat, dry and free of obstacles within 1 meter.

Take into account the environment recommendations before switching ARI on.

To clean ARI, follow the safety instructions closely.

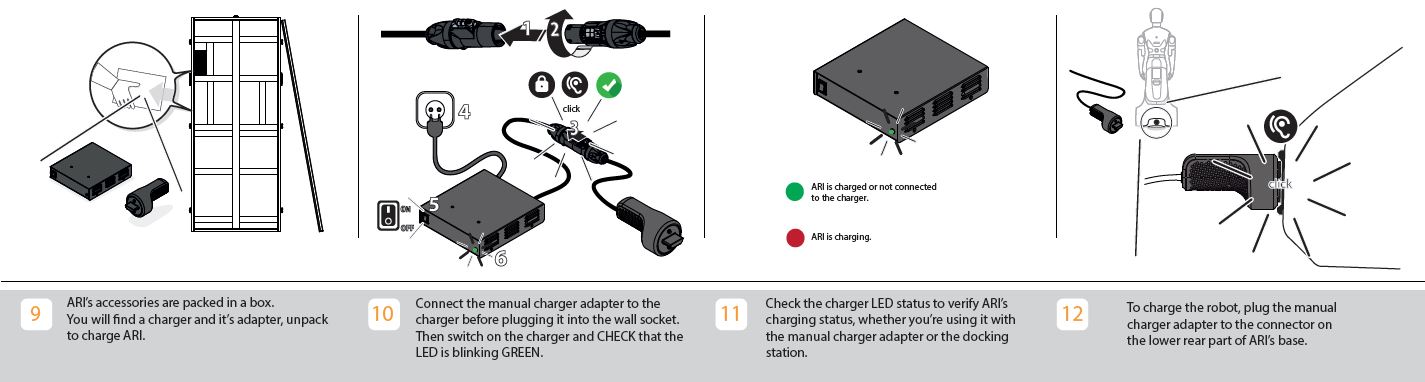

ARI’s accessories are packed in a box containing the charger and its adapter. Unpack them to charge ARI.

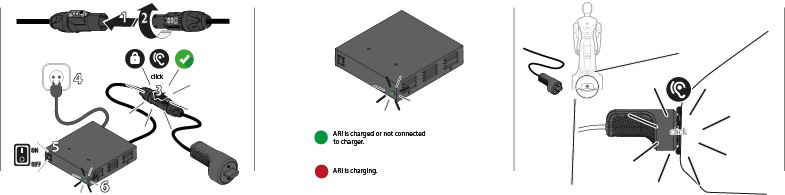

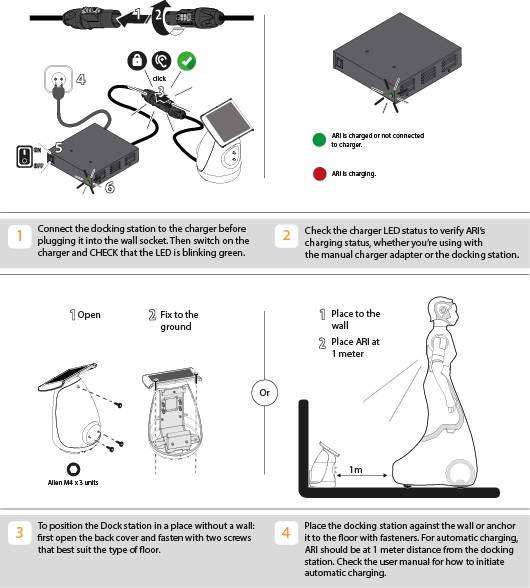

Connect the manual charger adapter to the charger before plugging it into the wall. Then switch on the charger and CHECK that the LED is blinking green.

Check the charger LED status to verify ARI’s charging status, whether you’re using the manual charger adapter or the docking station.

To charge the robot, plug the manual charger adapter into the connector on the lower rear part of ARI’s base.

Before switching ARI on: release the emergency button and open the lid to access the power buttons at the back of the robot.

To switch ARI on: push the left button; it will turn RED. Then press the right button until it turns solid GREEN. If the GREEN button is blinking, check that the emergency button has been released.

Follow the instructions on the screen for more information. For questions contact the PAL Robotics support team.

A QR code is available for accessing the ARI user manual.

4.1.1 What’s in the box

The ARI box will contain the following items:

ARI Robot

Battery Charger and Charger Adapter

USB flash drive with installation software

Docking station (optional)

NVIDIA Jetson (optional)

4.2 Power management

4.2.1 Emergency stop

When the Emergency Stop is pushed, motors are stopped and disconnected. The green indicator of the ON/OFF button will blink fast to notify the user of the emergency state.

To resume normal behaviour, a two-step validation process must be executed: the emergency button must be released by rotating it clockwise, and then the ON/OFF button must be pressed for one second. The green light indicator of the ON/OFF button will change to a solid state.

4.2.2 Charging the robot’s battery

Overview

Depending on the version acquired, your ARI may include a docking station that allows the robot to recharge itself automatically. This section will describe the components of the docking station and how to integrate it with your algorithms.

4.2.3 Charging ARI with cable charger

ARI must only be charged with the provided charger and adapter.

First, connect the manual charger adapter to the charger, then plug the charger into the wall socket. To charge the robot, plug the manual charger adapter into the connector on the lower rear part of ARI’s base. Then switch ON the charger and CHECK the LED indicator: it blinks GREEN if the robot is fully charged or NOT connected to the charger, and it should be RED while ARI is charging.

4.2.4 Charging ARI with dock station¶

The docking station hardware and installation

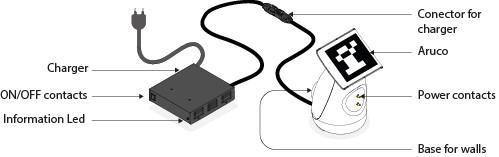

Figure below indicates the components of the docking station.

The dock station itself is composed of an adapter, where there are buttons to switch it on and off, charger and a LED indicating whether the robot is charging or not. The other end contains a base, that should be supported against a wall, power contacts, to establish contact with the robot, and an ARUCo marker, which is what the robot detects in order to dock.

The docking station should be preferably mounted against a hard surface, to avoid the robot displacing it while doing a docking manoeuvre. The power charger must be plugged into the respective plug. The user must also assure that no objects are present in the docking station surroundings, that could interfere with the docking manoeuvre.

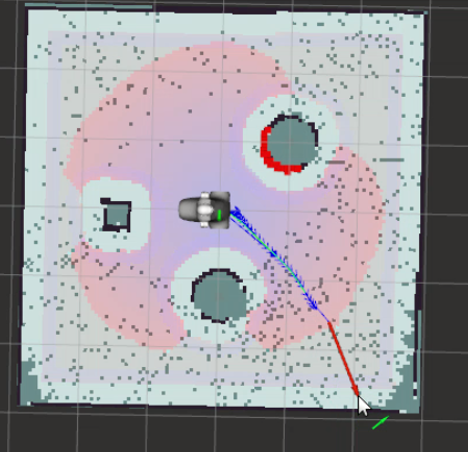

Docking algorithm

In order to carry out autonomous docking the robot uses its back stereo-fisheye camera, located right below the emergency button, in order to detect the ARUCo marker.

When the robot is asked to go to the dock station, it activates two services in parallel. The first is responsible for the pattern detection, while the second performs the serving to reach the power contacts:

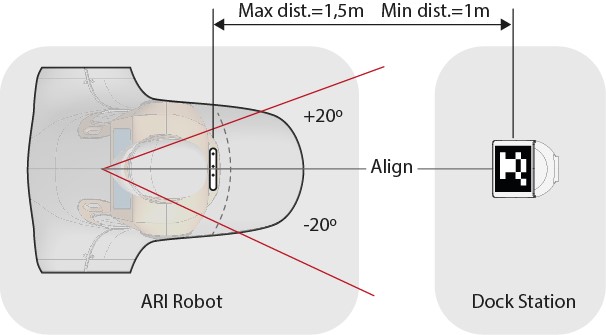

Pattern detector: the robot is capable of detecting the pattern up to 1-1.5 meters from the torso back RGB-D camera and with an orientation angle of 40º

Servoing manoeuvre: it comprises two steps, where first the robot aligns itself with the power contacts and, secondly, moves backwards advances until the contact is made or a timeout occurs (in the case the docking station is not powered, or the contact falls for example).

To initiate docking, the robot should be between 1 and 1.5 m away from the docking station. A point of interest (POI) can also be defined to pre-position the robot more easily.

Once the robot is docked, it blocks most velocity commands sent to the base, to avoid manoeuvres that could damage the robot or the docking station. There are only two ways of moving the robot after it is docked: performing an undock manoeuvre, or using the graphical joystick, which can override all velocity commands.

WARNING: it is the sole responsibility of the user to operate the robot safely with the joystick after the robot has reached the docking station.
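The docking preconditions above can be expressed as a simple check. The function below is a hypothetical sketch, not part of ARI's software, and it makes two assumptions the manual does not state: the distance is in metres from the docking station, and the 40° orientation limit applies symmetrically (±40°) around facing the marker. It delegates the comparisons to awk because bash arithmetic is integer-only.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of ARI's software).
# Returns 0 if a pose relative to the dock satisfies the docking preconditions:
#   - distance between 1.0 and 1.5 m (inclusive)
#   - |orientation angle| <= 40 degrees (assumed symmetric limit)
can_start_docking() {
  local distance_m="$1" angle_deg="$2"
  awk -v d="$distance_m" -v a="$angle_deg" 'BEGIN {
    d += 0; a += 0                  # force numeric comparison
    if (a < 0) a = -a               # only the magnitude of the angle matters
    ok = (d >= 1.0 && d <= 1.5 && a <= 40.0)
    exit (ok ? 0 : 1)               # exit status 0 means "OK to dock"
  }'
}
```

For example, `can_start_docking 1.2 15 && echo "within docking tolerances"`. Such a check only decides when a script sends a docking request; it does not replace supervising the robot while it docks.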

Usage

The docking/undocking manoeuvres are available through two different action servers that can be activated using the provided RViz plugin or directly through the action server interface.

4.2.5 Dock/Undock through WebGUI¶

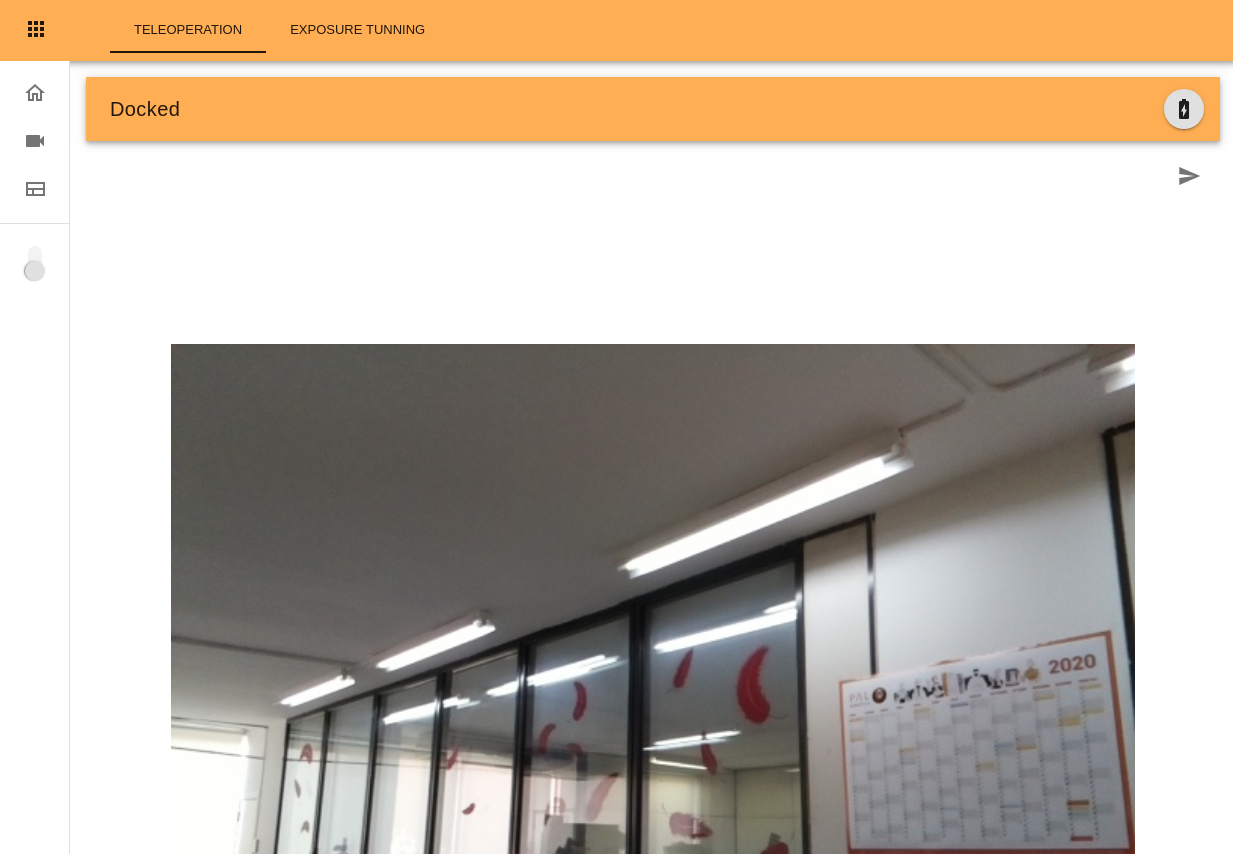

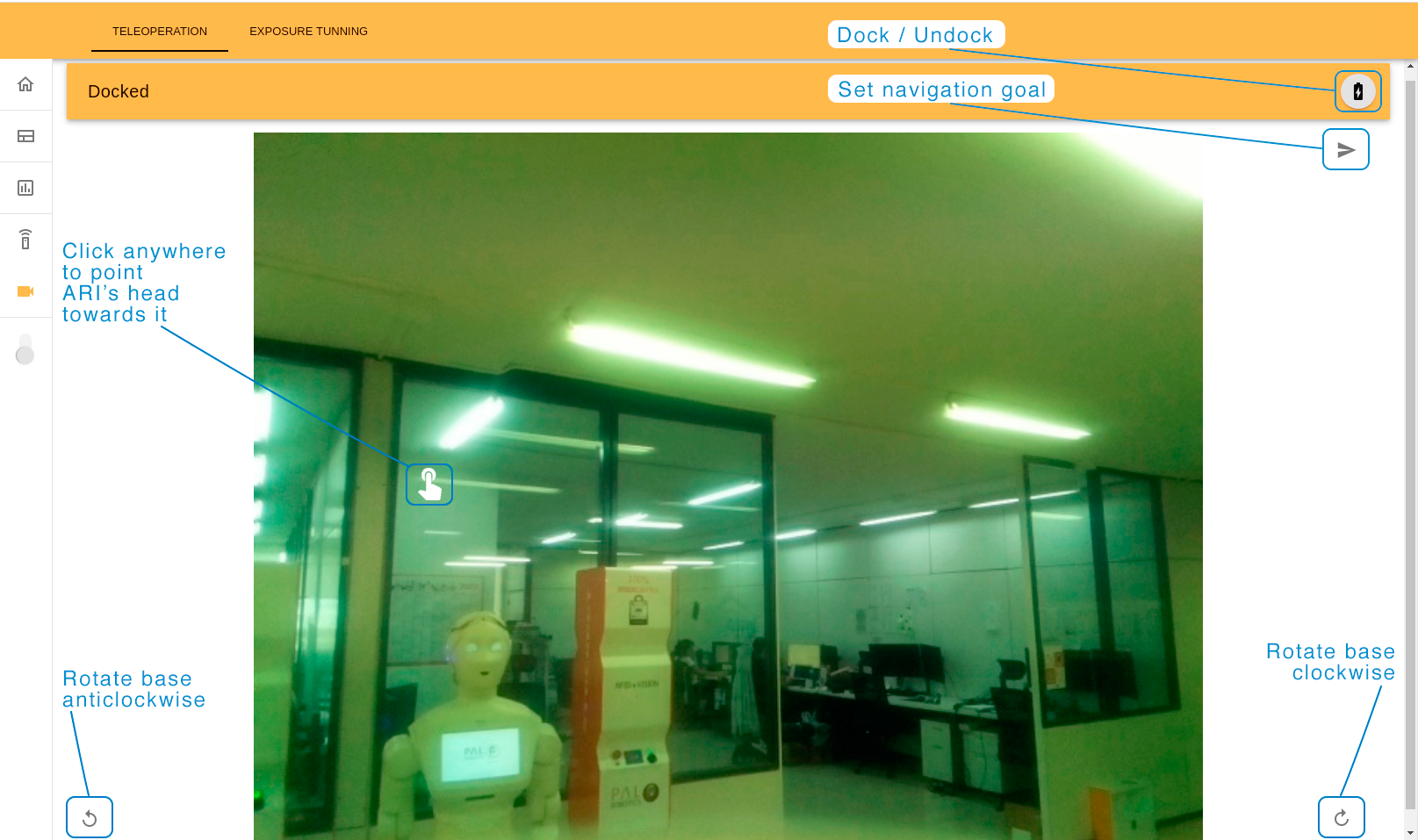

In order to dock/undock using the WebGUI first log in (username and password: pal).

Once logged in navigate to the Surveillance tool (camera icon).

On the Surveillance plugin the top bar shows the current state of the robot (Docked or Undocked), the icon on the left permits undocking if the robot is docked, or Dock if the robot is undocked and in front of a docking station.

See 10 WebGUI for further information.

4.2.6 Dock/Undock using RViz plugins

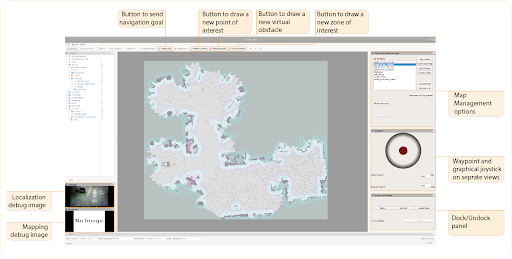

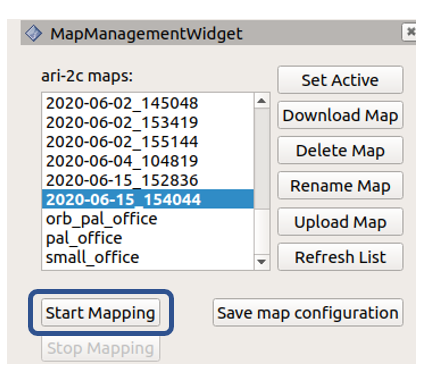

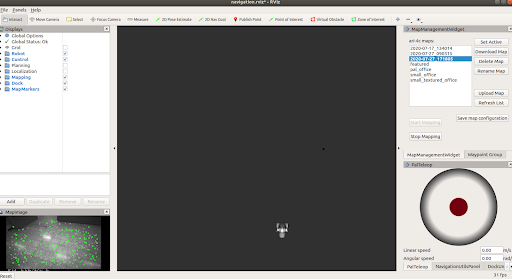

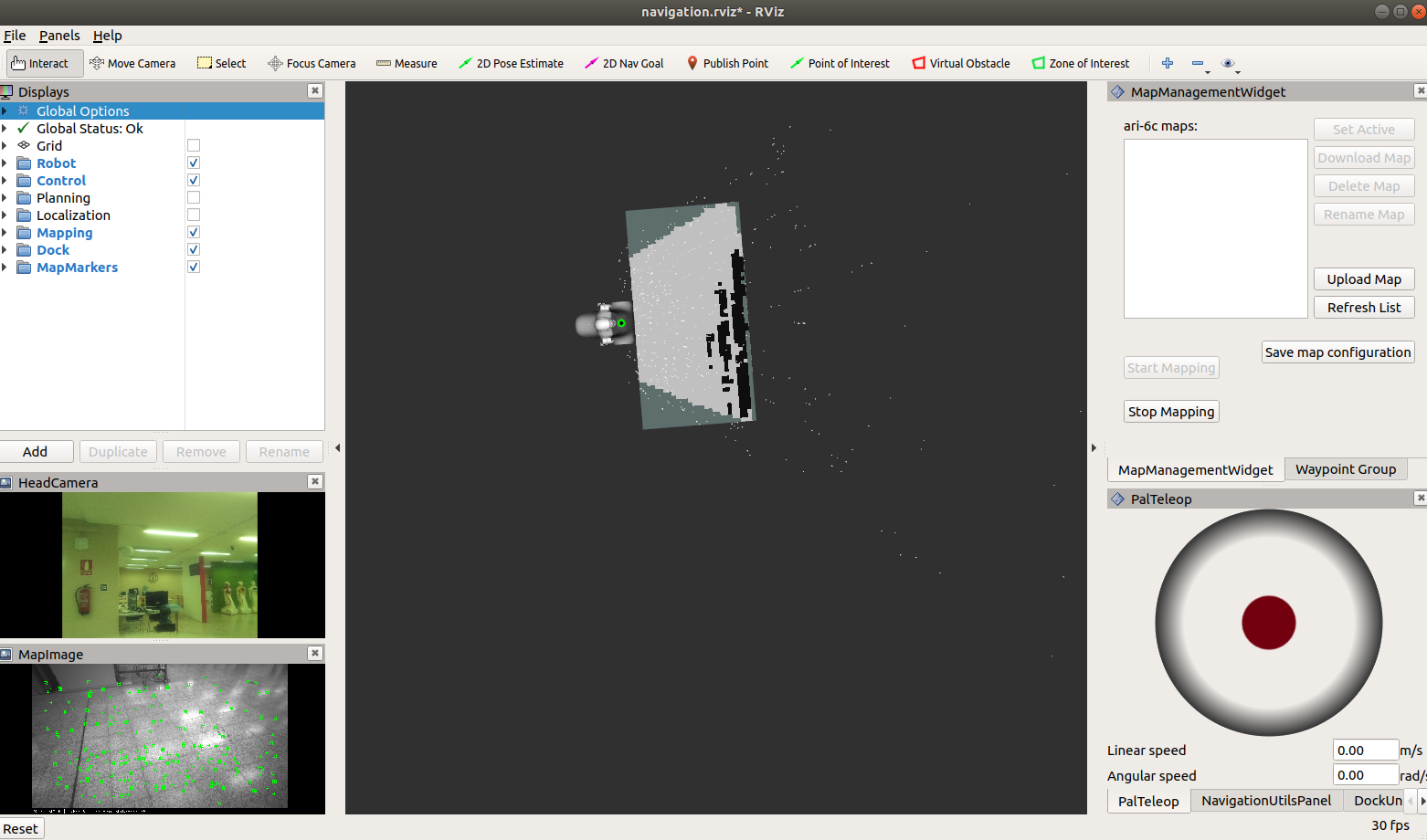

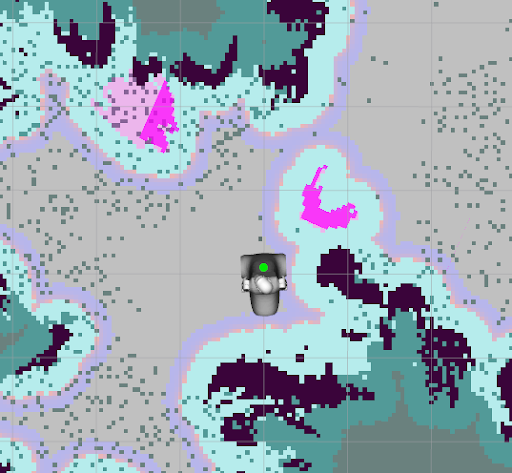

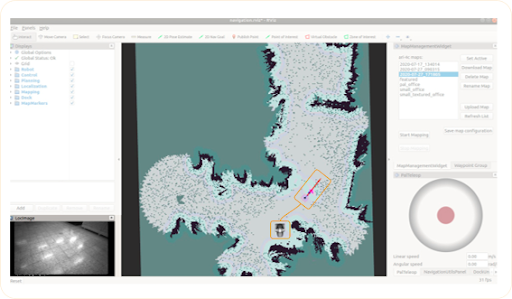

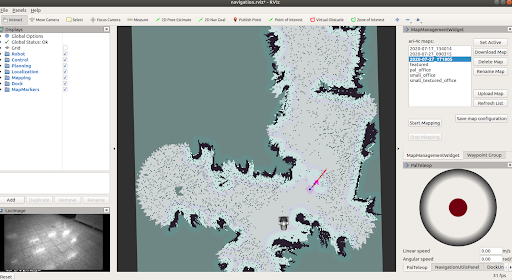

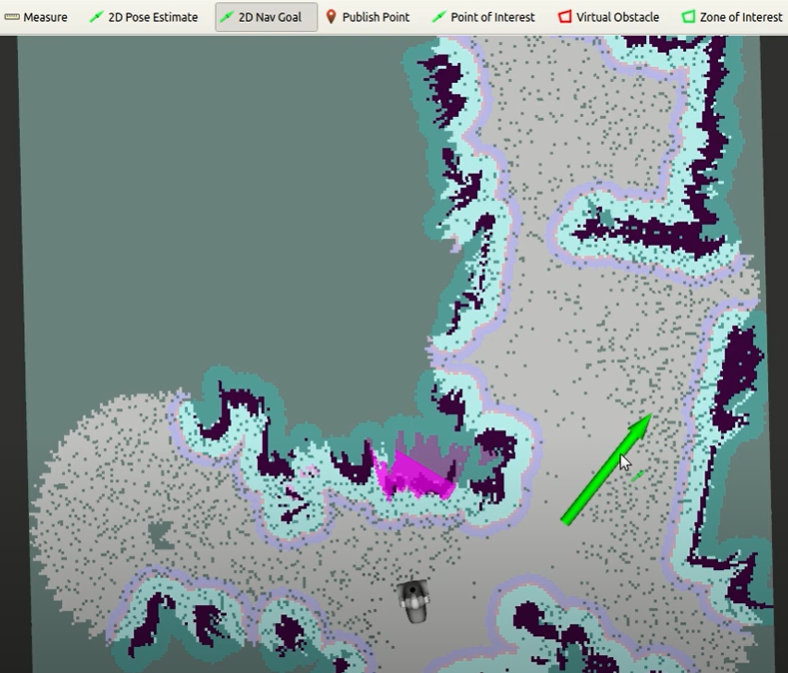

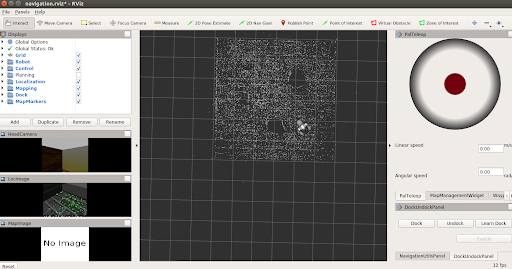

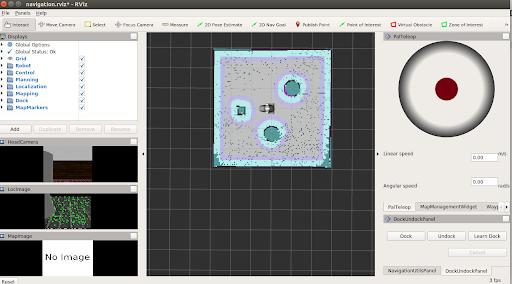

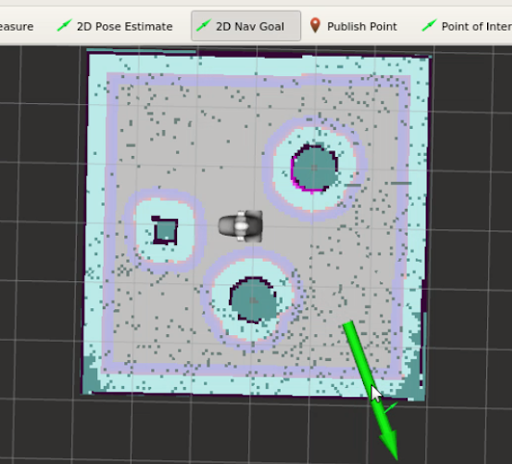

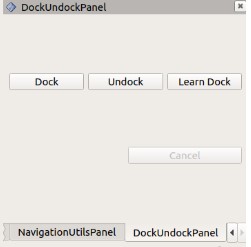

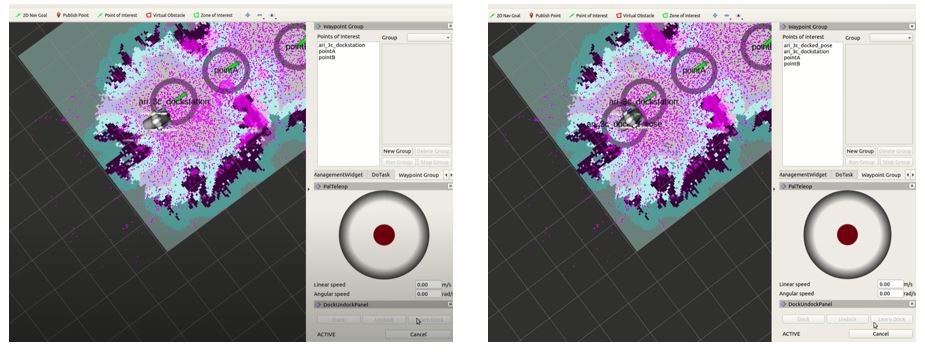

A dock/undock panel is available as an RViz plugin, which can be added to any RViz configuration via the menu Panels -> Add New Panel and then choosing the DockUndock Panel. It is also loaded by the robot’s preconfigured navigation RViz file, which can be opened with:

```bash
export ROS_MASTER_URI=http://ari-0c:11311
rosrun rviz rviz -d "$(rospack find ari_2dnav)/config/rviz/navigation.rviz"
```

The panel appears on the lower right side of this interface.

Once the robot has been positioned within the tolerances specified above, the user can click the Dock button to perform a docking manoeuvre. The docking manoeuvre can be cancelled at any moment by clicking the Cancel button. Similarly, the robot can be moved out of the dock by clicking the Undock button. A status message beside the Cancel button informs the user about the status of the requested action.

Please note: the robot will only accept an undock order if it was previously docked, otherwise the action request will be rejected.

It is also possible to use the Learn Dock button to have the robot learn the position of a new docking station and add it as a POI to the current map. This requires a map of the environment to have been built beforehand.
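When working with several robots, the master URI in the RViz command changes for each one. The functions below are a hypothetical sketch, not shipped with ARI: they build the URI from the robot's serial number using the ari-<serial>c hostname convention described in 5.2 Connect from an external device and the default ROS master port 11311, then open the same navigation RViz file that loads the DockUndock panel.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not shipped with ARI).

# Build the ROS master URI for a robot from its serial number,
# e.g. serial 0 -> http://ari-0c:11311 (default ROS master port).
ari_master_uri() {
  printf 'http://ari-%sc:11311\n' "$1"
}

# Point this shell at the robot and open the preconfigured navigation
# RViz file, which includes the DockUndock panel.
open_ari_nav_rviz() {
  ROS_MASTER_URI="$(ari_master_uri "$1")"
  export ROS_MASTER_URI
  rosrun rviz rviz -d "$(rospack find ari_2dnav)/config/rviz/navigation.rviz"
}
```

For example, `open_ari_nav_rviz 0` targets ari-0c. Because ROS_MASTER_URI is exported into the calling shell, later rostopic or rosrun commands in that shell will also talk to the same robot.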

4.2.7 Dock/Undock using action client

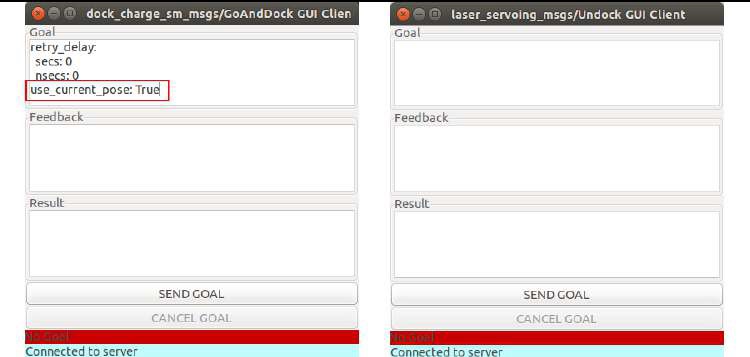

ROS provides an action client interface that can be used to communicate with the action servers responsible for the dock and undock manoeuvres. To run the action client, the following command should be entered for the docking manoeuvre:

```bash
export ROS_MASTER_URI=http://ari-0c:11311

# For ROS Melodic
rosrun actionlib axclient.py /go_and_dock

# For ROS Noetic
rosrun actionlib_tools axclient.py /go_and_dock
```

and for the undocking manoeuvre:

```bash
export ROS_MASTER_URI=http://ari-0c:11311

# For ROS Melodic
rosrun actionlib axclient.py /undocker_server

# For ROS Noetic
rosrun actionlib_tools axclient.py /undocker_server
```

After any of the previous commands is executed, a panel will pop up. The figure below shows both the /go_and_dock and the /undocker_server panels.

Please note: for the docking action client, the field use_current_pose must be set to True, otherwise the action will fail (this field is not needed for /undocker_server). In this interface, the SEND GOAL button starts the docking (or undocking) manoeuvre. As before, the CANCEL GOAL button aborts the action, and the status of the server and of the goal are displayed at the bottom of the panel.
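The Melodic and Noetic commands differ only in the package that provides axclient.py. The functions below are a hypothetical sketch, not part of ARI's software: they pick the package from the ROS_DISTRO environment variable exported by the ROS setup scripts, and check that an action server is running by looking for the status topic that actionlib servers publish under their namespace (for example /go_and_dock/status).

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of ARI's software).

# Print the package that provides axclient.py for a ROS distribution:
# actionlib on Melodic, actionlib_tools on Noetic. Defaults to $ROS_DISTRO.
axclient_pkg() {
  local distro="${1:-$ROS_DISTRO}"
  case "$distro" in
    melodic) echo actionlib ;;
    noetic)  echo actionlib_tools ;;
    *)
      echo "unsupported ROS distro: '${distro}'" >&2
      return 1
      ;;
  esac
}

# Succeed if the topic list read from stdin contains the actionlib
# status topic of the given server, e.g. /go_and_dock/status.
has_action_server() {
  grep -qxF -- "$1/status"
}

# Open the axclient GUI for an action server (/go_and_dock or
# /undocker_server) using the package for the active ROS distribution.
open_axclient() {
  local pkg
  pkg="$(axclient_pkg)" || return 1
  rosrun "$pkg" axclient.py "$1"
}
```

With ROS_MASTER_URI exported as above, `rostopic list | has_action_server /go_and_dock && open_axclient /go_and_dock` opens the docking panel only if the server is reachable. The use_current_pose field must still be set to True in the panel.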

4.2.8 Switching on/off ARI

On/off buttons

Electric switch

The electric switch is the main power control switch. Before turning ARI ON, first make sure that this switch is ON, i.e. its red light indicator is lit. When ARI is not going to be used for a long period, press the switch so that its red light indicator turns OFF. Note that this switch should not be turned OFF until the ON/OFF button has been used to turn OFF the onboard computer of the robot. Turning OFF this switch instantaneously cuts the power supply to all the robot components, including the onboard computer. Do not use this switch as an emergency stop. For the emergency stop, refer to 4.2.1 Emergency stop.

Power Button and On/off button

Before switching ARI on: release the emergency button and open the lid to access the power buttons at the back of the robot.

5 First time startup

5.1 Switching on ARI

5.1.1 Welcoming Screen

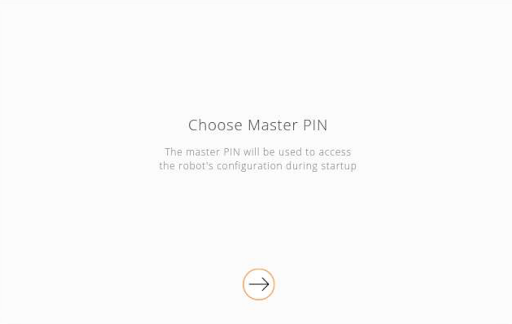

When switching on ARI for the first time, you will see the screen below. The setup process will take you through a number of screens to choose the connection mode, enter network details, choose a robot name, choose the language the robot will speak in and add a Master PIN.

5.1.2 First time Setup¶

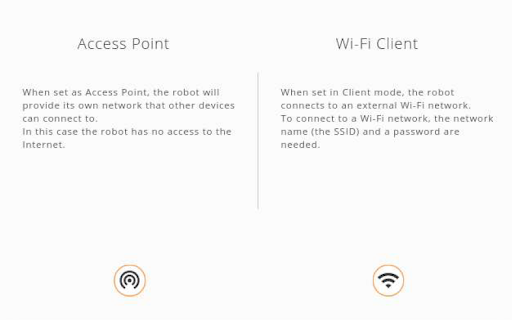

During the setup process for the robot you will be asked to choose the WiFi connection mode, which can be either Access Point (AP) or Client Mode

5.1.3 Master/Admin PIN

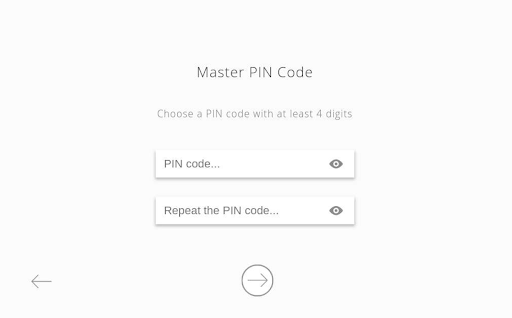

Select a Master PIN number for accessing ARI during the startup process. The Master PIN should be easy to remember and recorded somewhere else as it may be needed for future configuration. The PIN code should have at least four digits. You will be guided through the following screens:

5.1.4 Language selection

ARI can speak multiple languages; however, only the languages installed on the robot are shown. You will see the following screens to choose the language the robot will speak in:

5.1.5 WiFi setup¶

When the robot is set up as an Access Point (AP), it provides its own network, to which you can connect any WiFi-enabled device (such as a laptop, tablet or phone). If the device has mobile data, disable it so that the operating system uses the WiFi network: both Android and iOS ignore a WiFi network with no internet connection, which prevents the device from accessing the WebGUI. Please note that in this mode the robot cannot connect to the internet.

When the robot is set to Client Mode the robot connects to an external WiFi network. In this mode the robot may be able to access the internet (if the network is connected to the internet), and will be visible to any and all devices in the same network.

Regardless of the chosen mode a network SSID and a password must be configured. If the robot is in AP mode this will be the name of the network provided by the robot, and the password required to connect to it. When in Client Mode this configuration will be used by the robot to connect to the external network.

5.1.6 Robot Name¶

Finally, you will be prompted to choose a name for the robot with the following screen:

5.2 Connect from an external device¶

In order to connect to ARI with an external device, the device must be part of the same network:

If ARI is set up as Access Point the device must connect to the configured network using the password introduced during setup. ARI can then be accessed via the hostnames control or ari-Xc (where X is the serial number).

If ARI is set up in Client Mode, the device must be connected to the same network that was configured for ARI. ARI will then be accessible via the IP address assigned by the network or, if DNS is configured, via its hostname.

In both cases, the WebGUI can be accessed using a web browser at the default port, and the Web Commander at port 8080 (see 10 WebGUI).
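The addressing rules above can be captured in a small helper. This is a minimal sketch, not PAL tooling: the function names are hypothetical, the hostname follows the "ari-Xc" pattern with leading zeros dropped from the serial number (as described in 9.1 Default network configuration), and the WebGUI is assumed to be served on the default HTTP port 80.

```python
def robot_hostname(serial: str) -> str:
    """Build the ari-Xc hostname (hypothetical helper).

    X is the serial number without leading zeros; a serial of "0"
    stays "0", giving "ari-0c".
    """
    digits = serial.strip()
    if not digits.isdigit():
        raise ValueError(f"serial number must be numeric, got {serial!r}")
    return f"ari-{digits.lstrip('0') or '0'}c"


def access_urls(host: str) -> dict:
    """Return the WebGUI and Web Commander URLs for a host.

    `host` can be "control" (access point mode), an ari-Xc hostname,
    or the IP address assigned to ARI in client mode.
    """
    return {
        "webgui": f"http://{host}",               # assumed default HTTP port 80
        "web_commander": f"http://{host}:8080",   # Web Commander port
    }


if __name__ == "__main__":
    host = robot_hostname("0015")
    print(host)  # ari-15c
    print(access_urls(host))
```

In access point mode, access_urls("control") gives the same two addresses through the control alias.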

7 Sensors and joints¶

This section contains an overview of the sensors included in ARI, as well as their ROS and C++ API. Note that some cameras are optional.

7.1 Vision and inertial measurement unit sensors¶

The following Figure illustrates the cameras mounted on the robot.

7.1.1 Torso front¶

Stereo RGB-D camera with IMU (Inertial Measurement Unit): This camera is mounted on the front of the torso, below the touch-screen, and provides RGB images along with a depth image obtained using an IR projector and an IR camera. The depth image is used to obtain a point cloud of the scene. It has an integrated IMU mounted at its base that measures inertial forces and provides the camera's attitude (orientation).

7.1.2 Torso back¶

Stereo-fisheye camera with IMU (Inertial Measurement Unit): This camera is mounted on the back side of the torso, right below the emergency button, and provides stereo, fisheye and black and white images. It also has an IMU sensor unit.

7.1.3 Head cameras¶

One of the following cameras, selected by the customer, is located inside ARI’s head:

RGB camera: provides RGB images

RGB-D camera: provides RGB-D images

7.1.4 Optional cameras¶

Frontal and back stereo-fisheye cameras: The frontal camera is positioned just above the touch-screen and the back camera above the emergency button. They publish stereo images at 30 frames per second, as well as IMU data.

Thermal camera: positioned below the head camera, inside the robot, to monitor temperature

7.2 LEDs¶

7.2.1 Back ring¶

ARI has an LED ring on its back, below the emergency button, with a variety of colour options.

7.2.2 Ear rings¶

ARI has LED rings in each of its ears with a variety of colour options.

7.3 Animated eyes¶

ARI has animated LCD eyes which, supported by the movement of the head and arms, can provide gaze interaction. Through gaze, ARI can show interest, express emotions, and coordinate conversation by turn-taking and reacting to the user’s actions.

7.4 Speakers and microphones¶

ARI has an array of four microphones, located in the circular gap of the torso, that can be used to record audio and process it for tasks such as speech recognition. There are two HiFi full-range speakers just below it.

7.5 Joints¶

7.5.1 Base¶

The base joints of ARI are the front and back wheels of the robot. Take care when wheeling ARI, especially with the smaller back wheels.

7.5.2 Arms¶

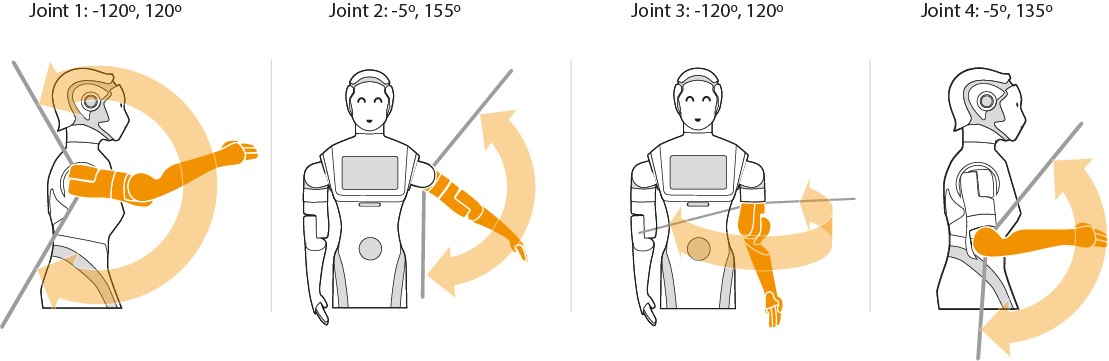

ARI’s joints and arm movements are illustrated below:

Joint 1: [-120º, 120º]

Joint 2: [-5º, 155º]

Joint 3: [-120º, 120º]

Joint 4: [-5º, 135º]

7.5.3 Hands¶

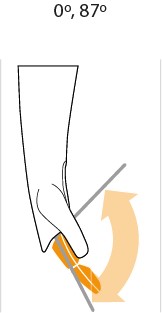

ARI’s hand movements are illustrated with the following diagram. Hand joint range: [0º, 87º]

7.5.4 Head joints¶

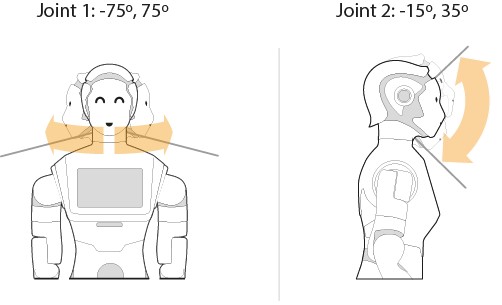

The joint movements for ARI’s head are shown below:

Joint 1: [-75º, 75º]

Joint 2: [-15º, 35º]
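When commanding joints from your own code, it can help to clamp targets to the ranges listed above before sending them. The sketch below is illustrative: the (part, index) keys are placeholders rather than ARI's ROS joint names, both arms are assumed to share the same ranges, and the conversion to radians reflects the ROS convention for joint positions.

```python
import math

# Joint ranges in degrees, copied from this section. The (part, index) keys
# are placeholders, not ARI's ROS joint names; both arms are assumed to
# share the arm ranges.
JOINT_LIMITS_DEG = {
    ("arm", 1): (-120.0, 120.0),
    ("arm", 2): (-5.0, 155.0),
    ("arm", 3): (-120.0, 120.0),
    ("arm", 4): (-5.0, 135.0),
    ("hand", 1): (0.0, 87.0),
    ("head", 1): (-75.0, 75.0),
    ("head", 2): (-15.0, 35.0),
}


def clamp_to_limits(part: str, index: int, angle_deg: float) -> float:
    """Clamp a target angle (degrees) into the documented joint range."""
    low, high = JOINT_LIMITS_DEG[(part, index)]
    return min(max(angle_deg, low), high)


def target_in_radians(part: str, index: int, angle_deg: float) -> float:
    """Clamp, then convert to radians, the unit ROS uses for joint positions."""
    return math.radians(clamp_to_limits(part, index, angle_deg))
```

For example, clamp_to_limits("head", 2, 50) returns 35.0. Clamping like this is only a client-side safety net and does not replace any limits the robot's own controllers may enforce.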

7.6 ROS API¶

The robot’s power status is reported on the /power_status ROS topic. Please note: the node that publishes this topic is launched by default on startup.

Description

The following data is reported:

input: the voltage coming from the batteries.

charger: the voltage coming from the charger.

pc: the voltage coming from the PC.

charge: the percentage battery charge.

is_connected: whether ARI is currently connected to the charger.

is_emergency: whether the emergency stop button is currently pressed.
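The message type used on /power_status is not named here, so this sketch only assumes that each received message exposes the fields listed above as attributes, as a rospy message would. The dataclass stands in for a message so the logic can be run off-robot; the class name, the function name and the 20% low-battery threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PowerStatus:
    """Stand-in for a /power_status message (fields as listed above)."""
    input: float        # voltage coming from the batteries
    charger: float      # voltage coming from the charger
    pc: float           # voltage coming from the PC
    charge: float       # battery charge, percent
    is_connected: bool  # connected to the charger
    is_emergency: bool  # emergency stop button pressed


LOW_BATTERY_PERCENT = 20.0  # hypothetical threshold, not a PAL default


def power_summary(status) -> str:
    """One-line summary for any object exposing the fields above."""
    if status.is_emergency:
        state = "EMERGENCY STOP"
    elif status.is_connected:
        state = "on charger"
    elif status.charge < LOW_BATTERY_PERCENT:
        state = "low battery"
    else:
        state = "ok"
    return f"{state}: {status.charge:.0f}% ({status.input:.1f} V)"
```

On the robot, power_summary(msg) could be called from a subscriber callback; `rostopic type /power_status` shows the actual message type to subscribe with.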

7.6.1 Sensors ROS API¶

Please note: Every node that publishes sensor data is launched by default on startup.

Inertial Measurement Unit:

Topics published

/torso_front_camera/accel/sample (sensor_msgs/Imu)

Inertial data from the IMU of the front camera

/torso_back_camera/accel/sample (sensor_msgs/Imu)

Inertial data from the IMU of the back camera
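The accelerometer readings on these topics arrive in the linear_acceleration field of sensor_msgs/Imu. When the robot is static, that vector is dominated by gravity, so it can be used to estimate the camera's tilt. This is a sketch under stated assumptions: the function name is hypothetical, the robot must not be accelerating, and the frame is assumed to have +z pointing up when level; the IMU frames of ARI's cameras may use a different axis convention, so check the frame_id of the messages. Yaw cannot be recovered from gravity alone.

```python
import math


def tilt_from_accel(ax: float, ay: float, az: float) -> tuple:
    """Estimate (roll, pitch) in radians from one accelerometer sample.

    Assumes a static sensor (only gravity is measured) and a frame where
    +z points up when level; an IMU at rest then reads about +9.81 on z.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch
```

With a received message, the call would be tilt_from_accel(msg.linear_acceleration.x, msg.linear_acceleration.y, msg.linear_acceleration.z); averaging several samples reduces noise.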

Torso front RGB-D camera topics:

/torso_front_camera/accel/sample (sensor_msgs/Imu)

Inertial data from the IMU of the front camera

/torso_front_camera/aligned_depth_to_color/camera_info (sensor_msgs/CameraInfo)

Intrinsic parameters of the aligned depth-to-color image

/torso_front_camera/aligned_depth_to_color/image_raw (sensor_msgs/Image)

Aligned depth to color image

/torso_front_camera/aligned_depth_to_infra1/camera_info (sensor_msgs/CameraInfo)

Intrinsic parameters of the depth image aligned to the infrared camera (aligned_depth_to_infra1 and aligned_depth_to_infra2)

/torso_front_camera/aligned_depth_to_infra1/image_raw (sensor_msgs/Image)

Aligned depth to infrared image

/torso_front_camera/color/camera_info (sensor_msgs/CameraInfo)

Camera calibration and metadata

/torso_front_camera/color/image_raw (sensor_msgs/Image)

Color rectified image. RGB format

/torso_front_camera/depth/camera_info (sensor_msgs/CameraInfo)

Camera calibration and metadata

/torso_front_camera/depth/color/points (sensor_msgs/PointCloud2)

Registered XYZRGB point cloud.

/torso_front_camera/depth/image_rect_raw (sensor_msgs/Image)

Rectified depth image

/torso_front_camera/infra1/camera_info (sensor_msgs/CameraInfo)

Camera calibration and metadata (infra1 and infra2)

/torso_front_camera/infra1/image_raw (sensor_msgs/Image)

Raw uint16 IR image

Torso front RGB-D camera services advertised

/torso_front_camera/rgb_camera/set_parameters (dynamic_reconfigure/Reconfigure)

Changes the specified parameters
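To turn a pixel of the aligned depth image into a 3D point, the intrinsics from the matching camera_info topic (here /torso_front_camera/aligned_depth_to_color/camera_info) can be applied with the standard pinhole model. A minimal sketch, assuming the depth image stores 16-bit values in millimetres (the REP 118 convention for 16UC1 depth images; check the encoding field of the messages on your robot) and that K is taken from the K field of sensor_msgs/CameraInfo, a row-major 3x3 matrix. The function name is hypothetical.

```python
def deproject_pixel(u: float, v: float, depth_raw: int, K,
                    depth_scale: float = 0.001):
    """Convert pixel (u, v) of an aligned depth image into a 3D point.

    Returns (x, y, z) in metres in the camera optical frame (x right,
    y down, z forward), or None where no depth was measured.
    K: 9 values, row-major [fx, 0, cx, 0, fy, cy, 0, 0, 1].
    depth_scale: metres per raw unit (0.001 assumes millimetres).
    """
    fx, cx = K[0], K[2]
    fy, cy = K[4], K[5]
    z = depth_raw * depth_scale
    if z <= 0.0:
        return None  # a raw value of 0 means "no measurement"
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return (x, y, z)
```

The same function applies to the head RGB-D camera, using the intrinsics from its own camera_info topic.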

Torso back stereo-fisheye camera topics:

/torso_back_camera/accel/sample (sensor_msgs/Imu)

Inertial data from the IMU of the back camera

/torso_back_camera/fisheye1/camera_info (sensor_msgs/CameraInfo)

Camera calibration and metadata (fisheye1 and fisheye2)

/torso_back_camera/fisheye1/image_raw (sensor_msgs/Image)

Fisheye image (fisheye1 and fisheye2)

Head RGB topics:

/head_front_camera/camera_info (sensor_msgs/CameraInfo)

Intrinsics and distortion parameters of the RGB camera

/head_front_camera/image_raw (sensor_msgs/Image)

RGB image

Head RGB-D topics:

For the Head RGB-D camera, the following topics are available in addition to the Head RGB ones:

/head_front_camera/accel/sample (sensor_msgs/Imu)

Inertial data from the IMU of the head RGB-D camera

/head_front_camera/aligned_depth_to_color/camera_info (sensor_msgs/CameraInfo)

Intrinsic parameters of the aligned depth-to-color image

/head_front_camera/aligned_depth_to_color/image_raw (sensor_msgs/Image)

Aligned depth to color image

/head_front_camera/aligned_depth_to_infra1/camera_info (sensor_msgs/CameraInfo)

Intrinsic parameters of the depth image aligned to the infrared camera (aligned_depth_to_infra1 and aligned_depth_to_infra2)

/head_front_camera/aligned_depth_to_infra1/image_raw (sensor_msgs/Image)

Aligned depth to infrared image

/head_front_camera/depth/camera_info (sensor_msgs/CameraInfo)

Camera calibration and metadata

/head_front_camera/depth/color/points (sensor_msgs/PointCloud2)

Registered XYZRGB point cloud.

/head_front_camera/depth/image_rect_raw (sensor_msgs/Image)

Rectified depth image

/head_front_camera/infra1/camera_info (sensor_msgs/CameraInfo)

Camera calibration and metadata (infra1 and infra2)

/head_front_camera/infra1/image_raw (sensor_msgs/Image)

Raw uint16 IR image

Optional stereo-fisheye camera topics:

/fisheye_rear_camera/rgb/image_raw/compressed (sensor_msgs/CompressedImage)

Fisheye image with IMU

/fisheye_front_camera/rgb/image_raw/compressed (sensor_msgs/CompressedImage)

Fisheye image with IMU

/fisheye_rear_camera/rgb/camera_info (sensor_msgs/CameraInfo)

Camera calibration and metadata

/fisheye_front_camera/rgb/camera_info (sensor_msgs/CameraInfo)

Camera calibration and metadata

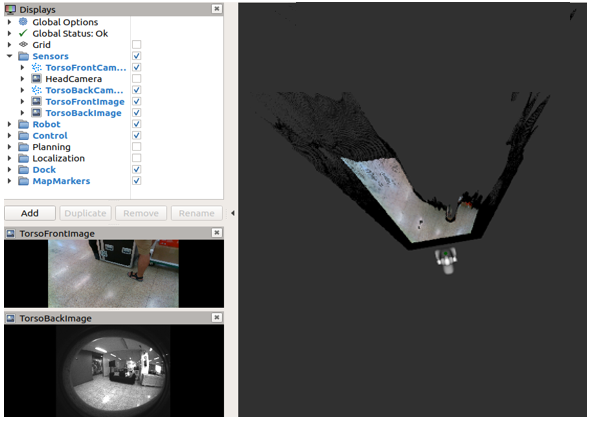

7.6.2 Sensors visualization¶

Most of the sensor readings of ARI can be visualized in rviz. In order to start the rviz GUI with a predefined configuration do as follows from the development computer:

export ROS_MASTER_URI=http://ari-0c:11311

rosrun rviz rviz -d `rospack find ari_bringup`/config/ari.rviz

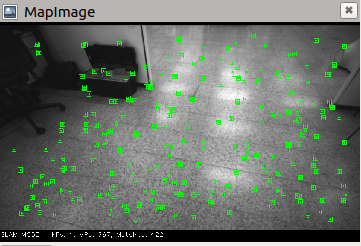

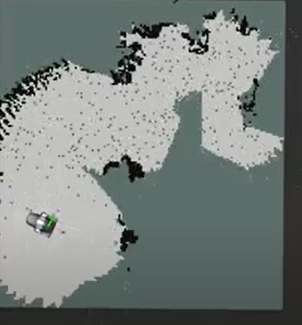

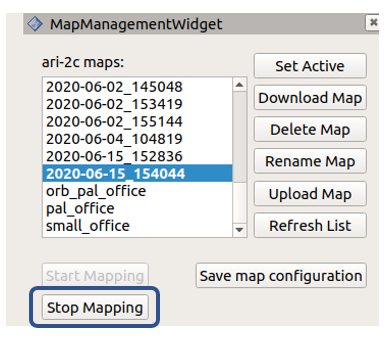

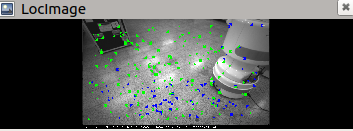

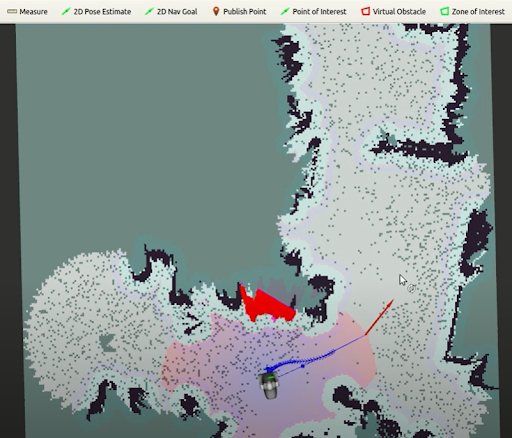

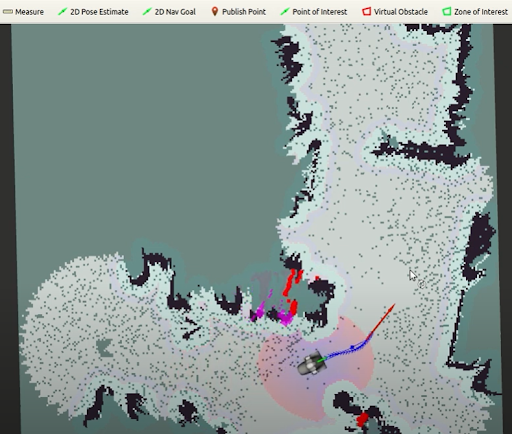

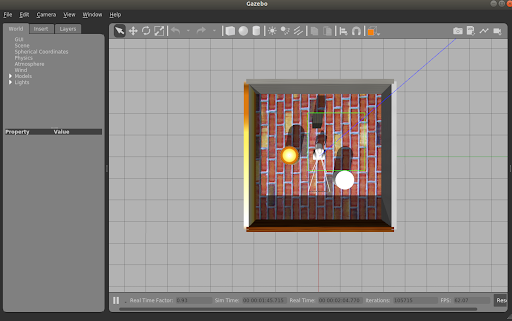

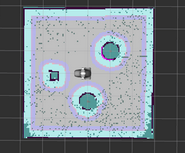

Example visualization of the torso front and back cameras in rviz: the output of the /torso_front_camera/depth/color/points topic, together with the two camera images.

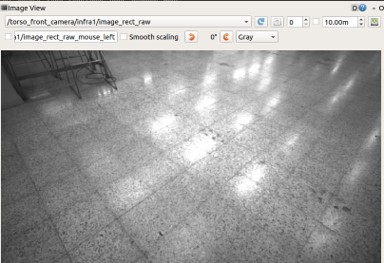

Another option is to visualize image output with the image_view tool:

export ROS_MASTER_URI=http://ari-0c:11311

rosrun image_view image_view image:=/torso_front_camera/infra1/image_rect_raw _image_transport:=compressed

When visualizing images from an external computer through WiFi, it is recommended to use the compressed topic to reduce bandwidth and latency.

7.6.3 Microphone sound recording¶

ARI has a ReSpeaker Mic Array V2.0 consisting of 4 microphones (https://www.seeedstudio.com/ReSpeaker-Mic-Array-v2-0.html).

In order to capture audio data, connect to the robot through ssh and list the available recording devices with the “arecord -l” command. You should see two soundcards that are detected on ARI:

ssh pal@ari-0c

arecord -l

Note the card and device numbers of the ReSpeaker device, and use them in a second arecord command:

arecord -D plughw:1,0 -f S16_LE -c 6 -r 16000 -d 10 micro.wav

In this example, 10 seconds of 6-channel, 16-bit, 16 kHz audio are recorded from card 1, device 0 into the micro.wav file. Replace 1,0 with the card and device numbers reported by arecord -l.

To play back the recording, use the aplay command:

aplay micro.wav
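Because the recording above has 6 interleaved channels, you may want to keep only one of them. The sketch below uses only Python's standard wave and array modules; the function name is hypothetical. According to Seeed's ReSpeaker Mic Array v2.0 documentation, with the 6-channel firmware channel 0 carries processed audio intended for speech recognition, channels 1 to 4 the raw signals of the four microphones, and channel 5 the playback signal; verify this against the firmware installed on your robot.

```python
import array
import wave


def extract_channel(src_path: str, dst_path: str, channel: int) -> int:
    """Write one channel of a 16-bit multi-channel WAV file to a mono WAV.

    Returns the number of frames written.
    """
    with wave.open(src_path, "rb") as src:
        n_channels = src.getnchannels()
        if src.getsampwidth() != 2:
            raise ValueError("expected 16-bit samples (S16_LE)")
        if not 0 <= channel < n_channels:
            raise ValueError(f"channel must be in [0, {n_channels - 1}]")
        rate = src.getframerate()
        raw = src.readframes(src.getnframes())

    # Samples are interleaved frame by frame: ch0, ch1, ..., chN-1, ch0, ...
    # They are only copied, never interpreted, so host byte order is irrelevant.
    samples = array.array("h", raw)
    mono = samples[channel::n_channels]

    with wave.open(dst_path, "wb") as dst:
        dst.setnchannels(1)
        dst.setsampwidth(2)
        dst.setframerate(rate)
        dst.writeframes(mono.tobytes())
    return len(mono)
```

For example, extract_channel("micro.wav", "mic1.wav", 1) would keep only the first raw microphone signal, under the channel layout assumed above.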

There are two main methods to access the microphone:

Using the ReSpeaker Python API (https://get-started-with-respeaker.readthedocs.io/en/latest/ReSpeakerPythonAPI/) to directly get microphone input.

Using the respeaker_ros open source ROS wrapper (https://github.com/furushchev/respeaker_ros). By default, the following launch file is running on the robot:

`roslaunch respeaker_ros respeaker.launch`

It publishes the following topics of interest:

/sound_direction: result of DoA (direction of arrival)

/sound_localization: result of DoA as a pose

/is_speeching: result of VAD (voice activity detection)

/audio: raw audio, published as a uint8 array

/speech_audio: audio data captured while speech is detected

8 LED Control¶

This section contains an overview of the LED devices included in ARI and how to control them.

8.1 Overview¶

ARI has four LED devices that can be controlled through the ROS interface: one in each ear, one on the back below the emergency stop, and the ReSpeaker LEDs.

| Device | ID | #LEDs | Available effects |
|---|---|---|---|
| Back | 0 | 40 | All |
| Ear Left | 1 | 16 | All |
| Ear Right | 2 | 16 | All |
| Respeaker | 4 | 12 | Fixed Color |

Different devices may have different capabilities, and may only be able to show part of the effects.

8.2 LED ROS Interface¶

All LED devices are controlled through the same ROS interface, provided by the PAL Led Manager. This interface allows clients to send effects to one or more LED devices, each with a duration and a priority. When a device has more than one active effect, it displays the one with the highest priority until that effect's duration ends, and then displays the remaining effect with the highest priority.

There is a default effect with unlimited duration and the lowest priority that displays a fixed color.
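The priority rules above can be modelled in a few lines to reason about what a device will show at a given moment. This is a simulation of the described behaviour, not the PAL Led Manager's implementation: the class and function names are hypothetical, durations are in seconds with 0 meaning forever (as for effectDuration), and ties between equal priorities are assumed to go to the most recently started effect, which the manual does not specify.

```python
from dataclasses import dataclass


@dataclass
class Effect:
    name: str
    priority: int    # 0 (lowest) to 255 (highest)
    start: float     # seconds
    duration: float  # seconds; 0 means "display forever"

    def active_at(self, t: float) -> bool:
        if t < self.start:
            return False
        return self.duration == 0 or t < self.start + self.duration


# Default effect: unlimited duration, lowest priority, fixed colour.
DEFAULT = Effect("default fixed colour", priority=0,
                 start=float("-inf"), duration=0)


def displayed_effect(effects, t: float) -> Effect:
    """Highest-priority active effect at time t (ties: most recent start)."""
    candidates = [e for e in effects if e.active_at(t)] + [DEFAULT]
    return max(candidates, key=lambda e: (e.priority, e.start))
```

For instance, with a 5-second priority-100 "blink" started at t = 0 and a permanent priority-50 "pulse" started at t = 1, the device shows "blink" at t = 2 and falls back to "pulse" at t = 6.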

The LED interface is an Action Server named pal_led_manager/do_effect.

The type of the action is pal_device_msgs/DoTimedLedEffect.

Its fields are described in the .action and .msg files of the pal_device_msgs package.

As a summary, a goal consists of:

devices: A list of the devices the goal applies to.

params: The effect type, and the parameters for the selected effect type.

effectDuration: Duration of the effect. When it is over, the previous effect is restored. A value of 0 makes the effect display forever.

priority: Priority of the effect, from 0 (no priority) to 255 (maximum priority).
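A client fills these four fields in a goal and sends it to the pal_led_manager/do_effect action server. The sketch below only assembles and checks the fields as a plain dictionary, using the device IDs from the table in 8.1 Overview. The device nicknames and the function name are hypothetical, and params is passed through untouched because its structure is defined in the pal_device_msgs .msg files, not here. In a rospy client, the same values would go into the goal class generated from the .action file and be sent with actionlib.SimpleActionClient; check the .action file for the exact field types (for example, whether effectDuration is a ROS duration).

```python
# Device IDs from the LED overview table; the nicknames are hypothetical.
LED_DEVICES = {"back": 0, "ear_left": 1, "ear_right": 2, "respeaker": 4}


def make_led_goal(devices, params: dict, duration_s: float, priority: int) -> dict:
    """Assemble and sanity-check the four goal fields as a dictionary.

    `devices` may mix nicknames and numeric IDs. `params` is not checked
    (note that the ReSpeaker LEDs only support a fixed colour).
    """
    ids = []
    for d in devices:
        if isinstance(d, str):
            if d not in LED_DEVICES:
                raise ValueError(f"unknown LED device name: {d!r}")
            ids.append(LED_DEVICES[d])
        elif d in LED_DEVICES.values():
            ids.append(d)
        else:
            raise ValueError(f"unknown LED device id: {d!r}")
    if not 0 <= priority <= 255:
        raise ValueError("priority must be in [0, 255]")
    if duration_s < 0:
        raise ValueError("effectDuration must be >= 0 (0 = forever)")
    return {
        "devices": ids,
        "params": params,
        "effectDuration": duration_s,
        "priority": priority,
    }
```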

9 WebCommander¶

9.1 Default network configuration¶

When shipped or when a fresh re-installation is performed, the robot is configured as an access point. Note that the SSID ends with the serial number of the robot, i.e. in the given example the s/n is 0.

The address range 10.68.0.0/24 has been reserved. The robot's computer name is ARI-Xc, where X is the serial number without leading zeros. The alias control is also defined to refer to the robot's computer when the robot is set up as an access point or when using a direct connection, i.e. an Ethernet cable between the robot and the development computer.
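Reading the reserved range above as the CIDR block 10.68.0.0/24 (addresses 10.68.0.0 to 10.68.0.255), Python's ipaddress module can check whether an address belongs to it, or whether a building LAN overlaps it before ARI is switched to client mode. This is an illustrative sketch; the function names are hypothetical, and the manual does not state what happens if the ranges overlap.

```python
import ipaddress

# Reserved range, read as CIDR notation (assumption, see above).
RESERVED = ipaddress.ip_network("10.68.0.0/24")


def in_reserved_range(address: str) -> bool:
    """True if `address` lies inside the reserved 10.68.0.0/24 block."""
    return ipaddress.ip_address(address) in RESERVED


def overlaps_reserved(lan_cidr: str) -> bool:
    """True if a LAN such as "10.68.0.0/16" overlaps the reserved block."""
    return ipaddress.ip_network(lan_cidr, strict=False).overlaps(RESERVED)
```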

The WebCommander is a web page hosted by ARI. It can be accessed from any modern web browser that is able to connect to ARI. It is an entry point for several monitoring and configuration tasks that require a Graphical User Interface (GUI).

9.2 Accessing the WebCommander website¶

Make sure that the device you want to use to access the website is on the same network as ARI and can connect to it.

Open a web browser and enter the hostname or IP address of ARI’s control computer, followed by port 8080, in the address bar, for example: http://ARI-0c:8080

If you are connected directly to ARI, when the robot is acting as an access point, you can also use: http://control:8080

The WebCommander website contains visualizations of the state of ARI’s hardware, applications and installed libraries, as well as tools to configure elements of its behaviour.

9.3 Default tabs¶

ARI comes with a set of preprogrammed tabs, described in this section, which can be modified and extended. Each tab is an instance of a WebCommander plugin. For each tab, a description and the plugin type used to create it are given.

Startup tab

Plugin: Startup

Description: Displays the list of PAL Robotics software that is configured to be started in the robot, and whether it has been started or not.

Each application or group of applications that provides a functionality can specify startup dependencies on other applications or groups of applications.

There are three possible states:

Green: All dependencies satisfied, application launched.

Yellow: One or more dependencies missing or in error state, but the maximum wait time has not elapsed yet. Application not launched.

Red: One or more dependencies missing or in error state, and maximum wait time elapsed. Application not launched.
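The three colours can be read as a simple rule over an application's dependencies and how long it has been waiting. The sketch below restates that rule; the function name, its arguments and the idea of a single max_wait_s value per application are assumptions, since the manual does not describe how the startup system tracks waiting time.

```python
def startup_state(dependencies, healthy, waited_s: float, max_wait_s: float):
    """Return (colour, unmet_dependencies) for one application.

    dependencies: names of the applications/groups it depends on.
    healthy: names currently running without errors.
    """
    unmet = sorted(set(dependencies) - set(healthy))
    if not unmet:
        return "green", unmet   # all satisfied: application launched
    if waited_s < max_wait_s:
        return "yellow", unmet  # still within the wait time: not launched
    return "red", unmet         # wait time elapsed: not launched
```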

Additionally, there are two buttons on the right of each application. If the application is running, a “Stop” button is displayed, which stops the application when pressed. If the application is stopped or has crashed, a “Start” button is displayed instead, which starts the application when pressed. The “Show Log” button displays the application’s log.

Startup extras tab

Plugin: Startup

Description: This tab is optional. If present, it contains a list of PAL Robotics’ software that is not started by default when the robot boots. These optional features must be started manually by the user.

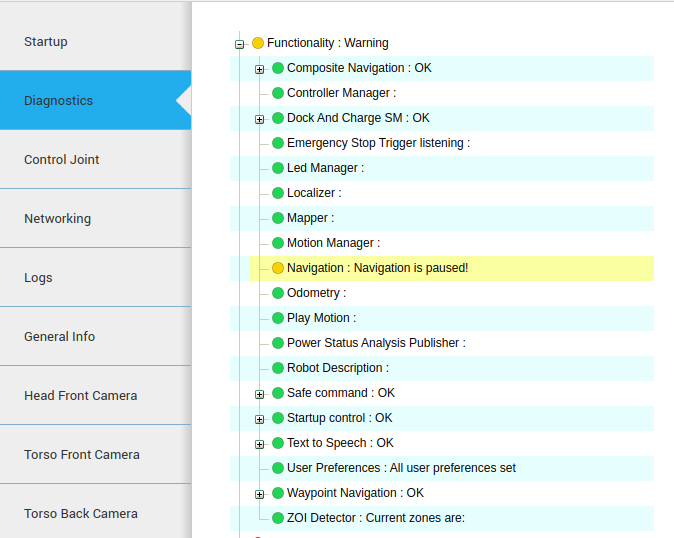

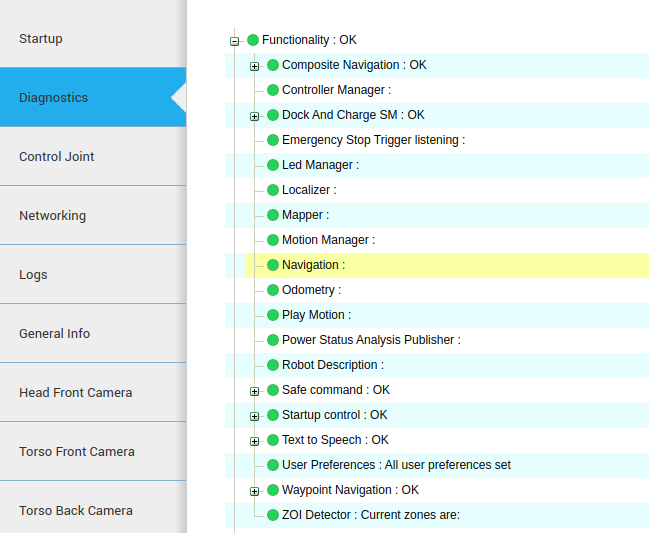

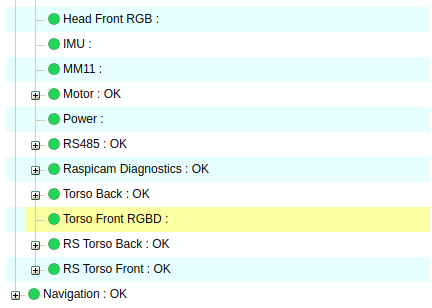

Diagnostics Tab

Plugin: Diagnostics

Description: Displays the current status of ARI’s hardware and software.

The data is organized in a hierarchical tree. The first level contains the hardware and functionality categories.

The functionalities are the software elements that run in ARI, such as vision or text to speech applications. Hardware diagnostics contain the hardware’s status, readings and possible errors. Inside the hardware and functionality categories, there’s an entry for each individual functionality or device. Some devices are grouped together (motors, sonars), but each device can still be seen in detail. The color of the dots indicates the status of the application or component.

Green: No errors detected.

Yellow: One or more anomalies detected, but they are not critical.

Red: One or more errors were detected which can affect the behavior of the robot.

Black: Stale, no information about the status is being provided.

The status of a particular category can be expanded by clicking on the “+” symbol to the left of the name of the category. This will provide information specific to the device or functionality. If there’s an error, an error code will be shown.

Logs Tab

Plugin: Logs

Description: Displays the latest messages printed by the applications’ logging system. The logs are grouped by severity levels, from high to low severity: Fatal, Error, Warn, Info and Debug. The logs are updated in real time, but messages printed before opening the tab can’t be displayed.

The log tab has different check-boxes to filter the severity of the messages that are displayed. Disabling a priority level will also disable all the levels below it, but they can be manually enabled. For instance, unchecking Error will also uncheck the Warn, Info and Debug levels, but the user can click on any of them to re-enable them.
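The check-box behaviour described above (unchecking a level also unchecks every less severe level, while re-checking affects only that level) can be expressed as set operations. A hypothetical sketch of the rule, not the WebCommander's code:

```python
SEVERITIES = ["Fatal", "Error", "Warn", "Info", "Debug"]  # high to low


def uncheck(level: str, enabled: set) -> set:
    """Unchecking a level also unchecks every lower-severity level."""
    idx = SEVERITIES.index(level)
    return enabled - set(SEVERITIES[idx:])


def check(level: str, enabled: set) -> set:
    """Re-checking a level enables only that level."""
    return enabled | {level}


def visible(messages, enabled: set) -> list:
    """Keep the (severity, text) messages whose severity is enabled."""
    return [m for m in messages if m[0] in enabled]
```

Unchecking "Error" from the full set leaves only {"Fatal"}, matching the example in the text; checking "Info" afterwards gives {"Fatal", "Info"}.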

General Info Tab

Plugin: General Info

Description: Displays the robot model, part number and serial number.

Installed Software Tab

Plugin: Installed Software

Description: Displays the list of all the software packages installed on both of the robot’s computers.

Settings Tab

Plugin: Settings

Description: The Settings tab allows you to change ARI’s behaviour.

It lets you configure the language ARI uses for speech synthesis, selected from a drop-down list. Changing the text-to-speech language changes the default language used when sending sentences to be spoken by ARI.

Hardware Configuration

The Settings tab allows the user to configure the hardware of the robot. Hardware configuration lets the user enable or disable the different motors, enable or disable the arm module, choose a different end-effector configuration, and enable or disable the mounted F/T (force/torque) sensor.

For instance, to disable the “head_1_motor”, untick the head_1_motor checkbox in the “Enabled motors” options. To switch to a different end-effector, select the end-effector you are going to install in the “End Effector” drop-down, and click the “Save as Default” button at the bottom of the section. Reboot the robot for the selected configuration to take effect.

Remote Support

The Settings tab includes a remote support connection widget, through which a PAL Robotics technician can provide remote assistance to the robot. Through an issue in the support portal, the technician will provide an IP address and a port: enter them in the corresponding fields of the widget and press the Connect button to allow remote assistance. Remote support is not persistent, so the customer has to activate it again after every reboot of the robot.

Once the connection has been established, it can be terminated at any time by clicking the Disconnect button. Please note: if, after clicking Connect, the widget returns to its normal state instead of showing the connection status, the robot is either not connected to the internet or there is a network issue.

Networking tab

By default, the controls for changing the configuration are not visible in order to avoid access by multiple users.

If the Enter button is pressed, the tab connects to the network configuration system and the controls will appear.

When a user connects to the configuration system, all the current clients are disconnected and a message is shown in the status line.

Configurations are separated in different blocks:

WiFi

Mode: Selects whether the WiFi connection works as a client or as an access point.

SSID: Name of the WiFi network to connect to in client mode, or to broadcast in access point mode.

Channel: WiFi channel used when the robot is in access point mode.

Mode Key: Encryption of the connection. For more specific configurations, select manual; in this case, the file /etc/wpa_supplicant.conf.manual, which can be created manually on the robot, is used.

Password: Password for the WiFi connection

Ethernet

Mode: Selects whether the Ethernet connection works as an internal LAN or as an external connection.

IPv4

Enable DHCP Wifi: Enables the DHCP client on the WiFi interface.

Enable DHCP Ethernet: Enables DHCP client in the external ethernet port.

Address, Network, Gateway: Manual values for the building’s network, used by the WiFi interface in client mode; the same applies to the external Ethernet port.

DNS

Server: DNS server.

Domain: Domain to use in the robot.

Search: Search domain used when resolving hostnames.

VPN

Enable VPN: If the customer has a PAL base station, the robot can be connected to the customer’s VPN.

Enable Firewall: When the VPN is active, a firewall can be enabled to block incoming connections from outside the VPN.

Address: Building network IP address of the basestation.

Port: Port of the base station where the VPN server is listening.

No changes take effect until the Apply change button is pressed.

When the Save button is pressed (and confirmed), the current configuration is stored on the hard disk.

Make sure the networking configuration is correct before saving it. A bad configuration can make it impossible to connect to the robot; if this happens, a full reinstallation is needed.

Switching the WiFi between client and access point mode may require a reboot of the computer to be applied correctly.

Using the diagnostic tab, it is possible to see the current state of the WiFi connection.

Connecting ARI to a LAN: To connect ARI to your own LAN, follow the steps below.

First, open the WebCommander at http://ARI-0c:8080, go to the Networking tab and press the Enter button.

Once you have filled in the right configuration and pressed the Apply change button, it is very important to wait until you are able to ping the robot’s new IP address in your own LAN. If this does not happen, you may have to reboot the robot: since the configuration changes have not been saved yet, the robot will reboot with its previous networking configuration, allowing you to repeat the process properly.

When the robot can be reached in your own LAN with the new configuration, open the WebCommander again and press the Save button and then the Confirm button.
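The waiting step can be automated from the development computer. This sketch polls the robot until a TCP connection to the WebCommander port (8080) succeeds; it deliberately substitutes a TCP check for the ping mentioned above, because sending ICMP packets from Python normally requires raw sockets and elevated privileges. The function name and its default timeouts are hypothetical.

```python
import socket
import time


def wait_until_reachable(host: str, port: int = 8080,
                         timeout_s: float = 60.0, interval_s: float = 2.0) -> bool:
    """Poll until a TCP connection to host:port succeeds.

    Returns True on success, False once timeout_s has elapsed.
    (A TCP check on the WebCommander port is used instead of ICMP ping.)
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval_s):
                return True
        except OSError:  # refused, timed out, or host not resolvable yet
            pass
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        time.sleep(min(interval_s, remaining))
```

For example, wait_until_reachable("192.168.1.50"), where the address is a placeholder for the IP you configured, returns True once the WebCommander answers at the new address, at which point you can proceed with Save and Confirm.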

Setting ARI as an Access Point: To configure ARI as an access point, open the WebCommander at http://ARI-0c:8080 and go to the Networking tab.

Once you have filled in the right configuration and pressed the Apply change button, it is very important to wait until the new WiFi network is detected. A smartphone, a tablet or a computer with a WiFi card can be used for this purpose. If the network does not appear, you may have to reboot the robot: since the configuration changes have not been saved yet, the robot will reboot with its previous networking configuration, allowing you to repeat the process properly.

When the robot’s new WiFi network can be detected, connect to it, open the WebCommander again and press the Save button and then the Confirm button.

Video Tab

Plugin: Video

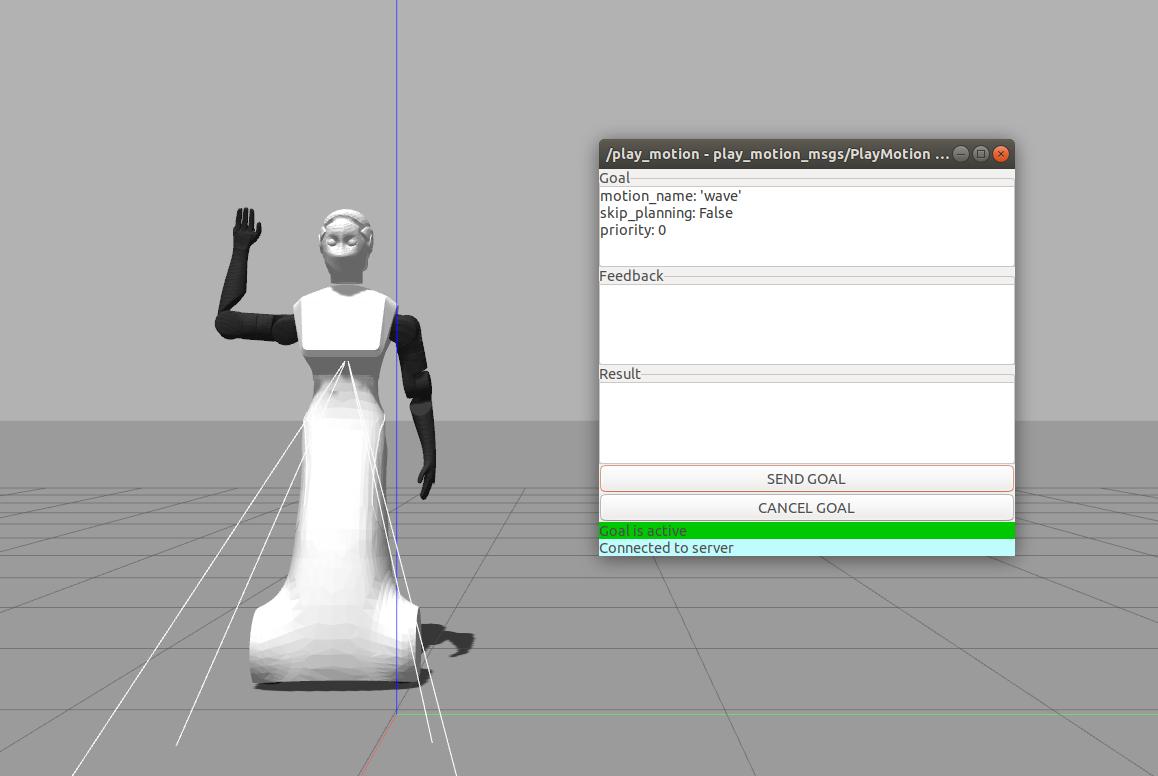

Movements Tab

Plugin: Movements

Description: Enables playing pre-recorded motions on ARI.

The movement tab allows a user to send upper body motion commands to the robot. Clicking on a motion will execute it immediately in the robot. Make sure the arms have enough room to move before sending a movement, to avoid possible collisions.

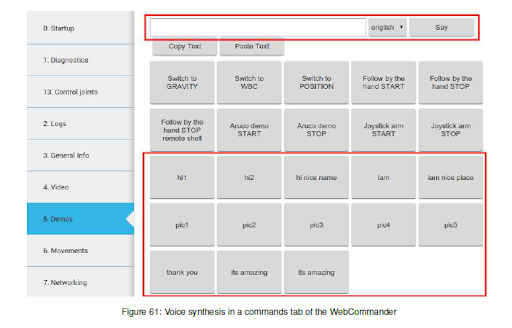

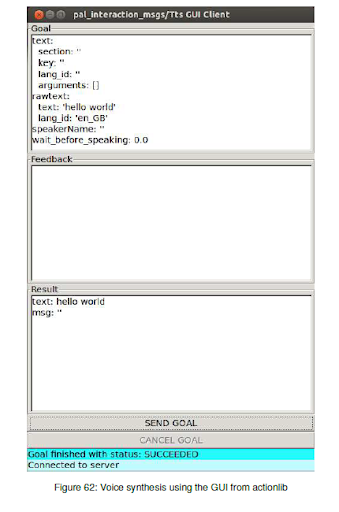

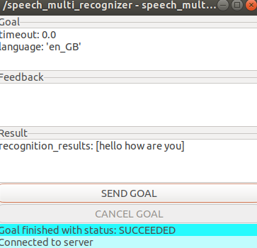

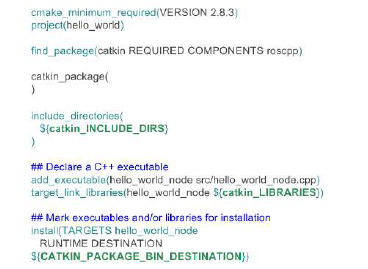

Demos tab

Plugin: Commands

Description: This tab provides buttons to start and stop different demos and synthesize voice messages with ARI, using either predefined buttons or by typing new messages in a text box.

9.4 Configuration¶

The WebCommander is a configurable container for different types of content, and it is configured through the /wt parameter in the ROS Parameter Server. On the robot’s startup, this parameter is loaded by reading all the configuration files in /home/pal/.pal/wt/. To be loaded, a file must have a .yaml extension and contain valid YAML describing ROS parameters within the /wt namespace.

The box below shows an example WebCommander configuration. It is a YAML file in which /wt is a dictionary, and each key in the dictionary creates a tab in the website, with the key as the tab’s title. Each element of the dictionary must contain a type key, whose value indicates the type of plugin to load. Additionally, it can have a parameters key with the parameters that the selected plugin requires.

The parameters in the box of this section would create four tabs, named “0. Startup”, “1. Diagnostics”, “2. Logs” and “3. Behaviour”, of the types Startup, Diagnostics, Logs and Commands respectively. The first three plugins do not require parameters, but the Commands type does, as explained in the Commands Plugin Configuration section.
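The structure described above (a /wt dictionary whose keys become tab titles, each with a type and optional parameters) can be checked before copying a file into /home/pal/.pal/wt/. This sketch rebuilds the four-tab example as a Python dictionary and validates it. Assumptions: the file holds a top-level wt key, the Commands tab's buttons list is left empty because button types are not reproduced here, and only the Commands plugin is treated as requiring parameters, as stated above. The names are hypothetical.

```python
import json

# Four-tab example from the text; the empty "buttons" list is a placeholder.
WT_CONFIG = {
    "wt": {
        "0. Startup": {"type": "Startup"},
        "1. Diagnostics": {"type": "Diagnostics"},
        "2. Logs": {"type": "Logs"},
        "3. Behaviour": {"type": "Commands", "parameters": {"buttons": []}},
    }
}

# Plugin type -> parameter key it requires (assumption: only Commands).
REQUIRED_PARAMETERS = {"Commands": "buttons"}


def validate_wt(config: dict) -> list:
    """Return a list of problems in a /wt configuration (empty if none)."""
    tabs = config.get("wt")
    if not isinstance(tabs, dict):
        return ["top-level 'wt' key must be a dictionary of tabs"]
    problems = []
    for title, tab in tabs.items():
        if not isinstance(tab, dict) or "type" not in tab:
            problems.append(f"tab {title!r}: missing 'type'")
            continue
        required = REQUIRED_PARAMETERS.get(tab["type"])
        parameters = tab.get("parameters") or {}
        if required and required not in parameters:
            problems.append(f"tab {title!r}: parameters.{required} is required")
    return problems


if __name__ == "__main__":
    assert validate_wt(WT_CONFIG) == []
    print(json.dumps(WT_CONFIG, indent=2))
```

Running the script prints the configuration as JSON; since YAML is a superset of JSON, the printed text can generally be saved as a .yaml file, although hand-written YAML is usually easier to maintain.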

Startup Plugin Configuration

Description: Displays the list of PAL Robotics’ software that is configured to be started in the robot, and whether it has been started or not.

Parameter format

Parameters startup_ids

A list of strings containing the startup groups handled by this instance of the plugin.

Diagnostics Plugin Configuration

Description: Displays the current status of ARI’s hardware and software.

Parameters: None required

Logs Plugin Configuration

Description: Displays the latest messages printed by the applications’ logging system.

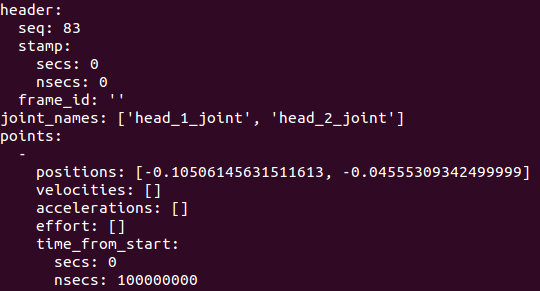

JointCommander Plugin Configuration

Description: This tab provides sliders to move each joint of ARI’s upper body.

Parameters: A list of the joint groups to be controlled. Each element of the list must be a dictionary containing “name”, “command_topic” and “joints”, where “name” is the name displayed for the group, “command_topic” is the topic on which the joint commands are published, and “joints” is a list of the names of the joints to be commanded.

General Info Plugin Configuration

Description: Displays the robot model, part number and serial number.

Parameters: None required

Installed Software Plugin Configuration

Description: Displays the list of all the software packages installed on both of the robot’s computers.

Parameters: None required

Settings Plugin Configuration

Description: The settings tab allows you to change the behaviour of ARI.

Parameters: None required

Networking Embedded Plugin Configuration

Description: This tab allows you to change the network configuration.

Parameters: None required

Video Plugin Configuration

Description: Displays the images from a ROS topic in the WebCommander.

Parameters: topic: Name of the topic to read images from, for instance /head_front_camera/image_raw/compressed

Movements Plugin Configuration

Description: Enables playing pre-recorded motions on ARI.

Parameters: goal_type: Either “play_motion” or “motion_manager”. Determines which action server is used to send the motions.

Commands Plugin Configuration

Description: Contains buttons that can be programmed through parameters to perform actions on the robot.

Parameters: buttons: A list of buttons, where each button is a dictionary with two fields. The name field is the text displayed on the button; the name of the second field determines the type of button, and its value is a dictionary with the button’s configuration.

9.5 Access Web Commander¶

If the robot is in Access Point mode: connect to ARI’s WiFi network using the password “pal”, then open control:8080 or ari-SNc:8080 (where SN is the robot’s serial number) in the browser of your mobile device.

If the robot is connected to an external WiFi network: open ari-assigned-ip:8080 in the browser, where ari-assigned-ip is the IP address assigned to ARI by that network.


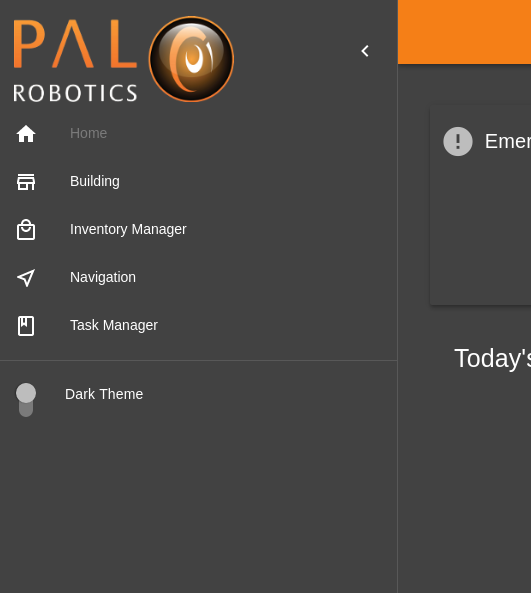

10 WebGUI¶

10.1 Overview¶

This section explains the use of ARI’s Web User Interface and its different plugins. The Web User Interface is a tool designed to simplify the configuration of the robot and improve the user experience. It can be accessed via a browser at http://ari-Xc, where X is the serial number of the robot.

10.2 Technical considerations¶

At the moment the Web User Interface supports only the Chrome browser on a laptop or computer. Accessing the Web User Interface from a mobile phone or tablet, or from a different browser, will result in some of the functions not working properly or at all.

10.3 Login screen¶

When accessing the Web User Interface, a user name and password are requested. The default user and password are pal / pal. Once the correct credentials are entered, the user is automatically redirected to the page they were accessing.

Sessions are not time-limited: once logged in, a user stays logged in until they close the browser or the robot is rebooted.

Figure: Login screen of the WebGUI.¶

10.5 ARI Homepage¶

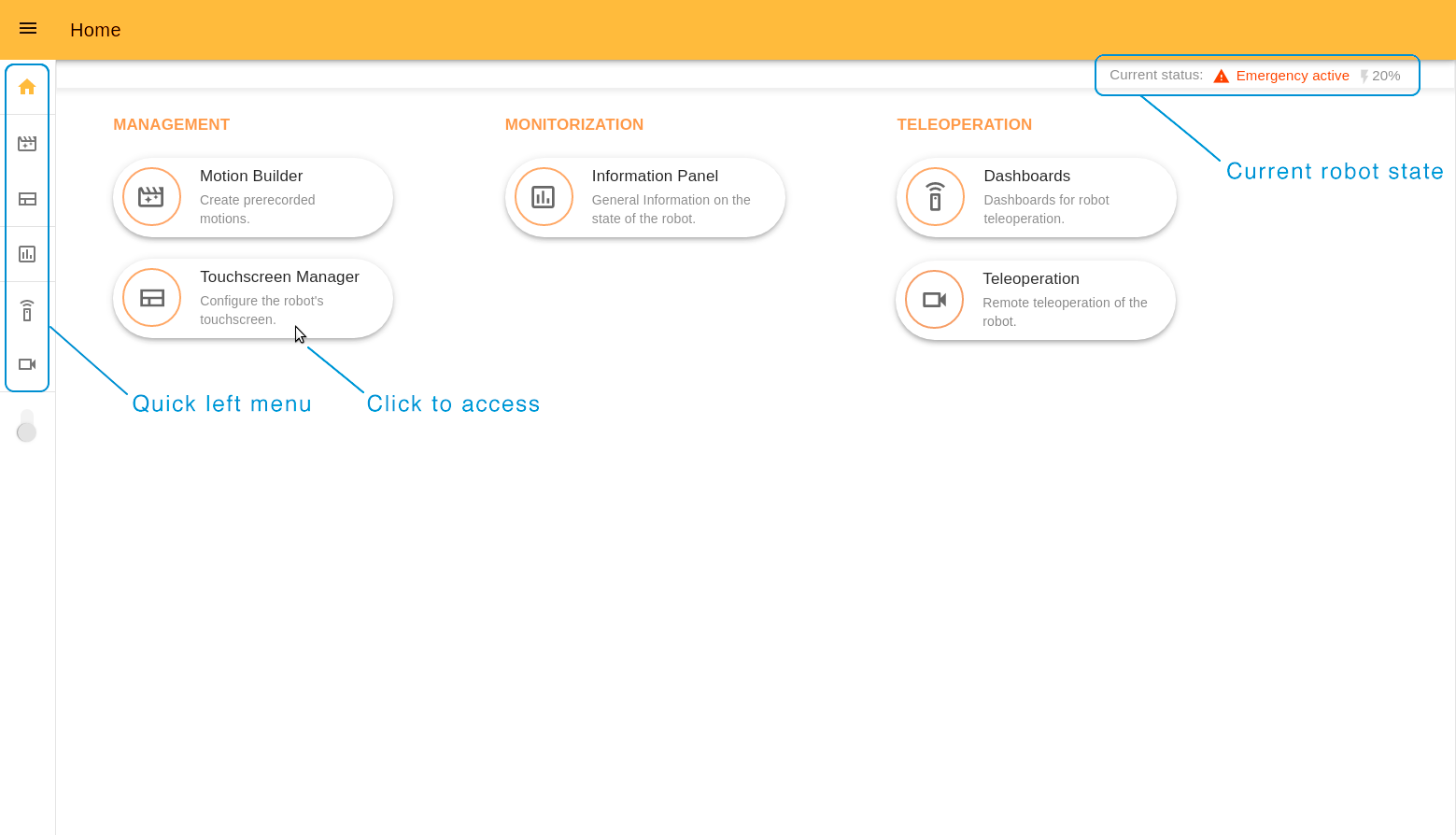

In this page there is a grouped list of webapps with short descriptions of each of them. Clicking on any icon will redirect you to the corresponding app:

Management - to configure aspects of the robot (includes 10.7 Motion Builder, 10.8 Touchscreen Manager).

Monitorization - to access the robot status information (includes 10.6 Information Panel).

Teleoperation - to operate the robot remotely (includes 10.9 Command desks, 10.10 Teleoperation).

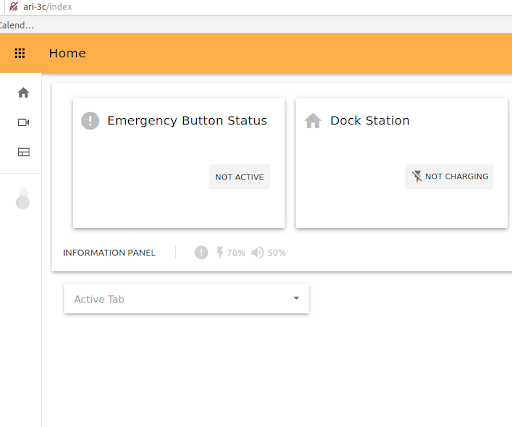

At the top of the page there is a real-time summary of the robot’s status. It indicates whether the emergency button is pressed, whether the robot is docked (i.e. currently charging), and the robot’s battery level.

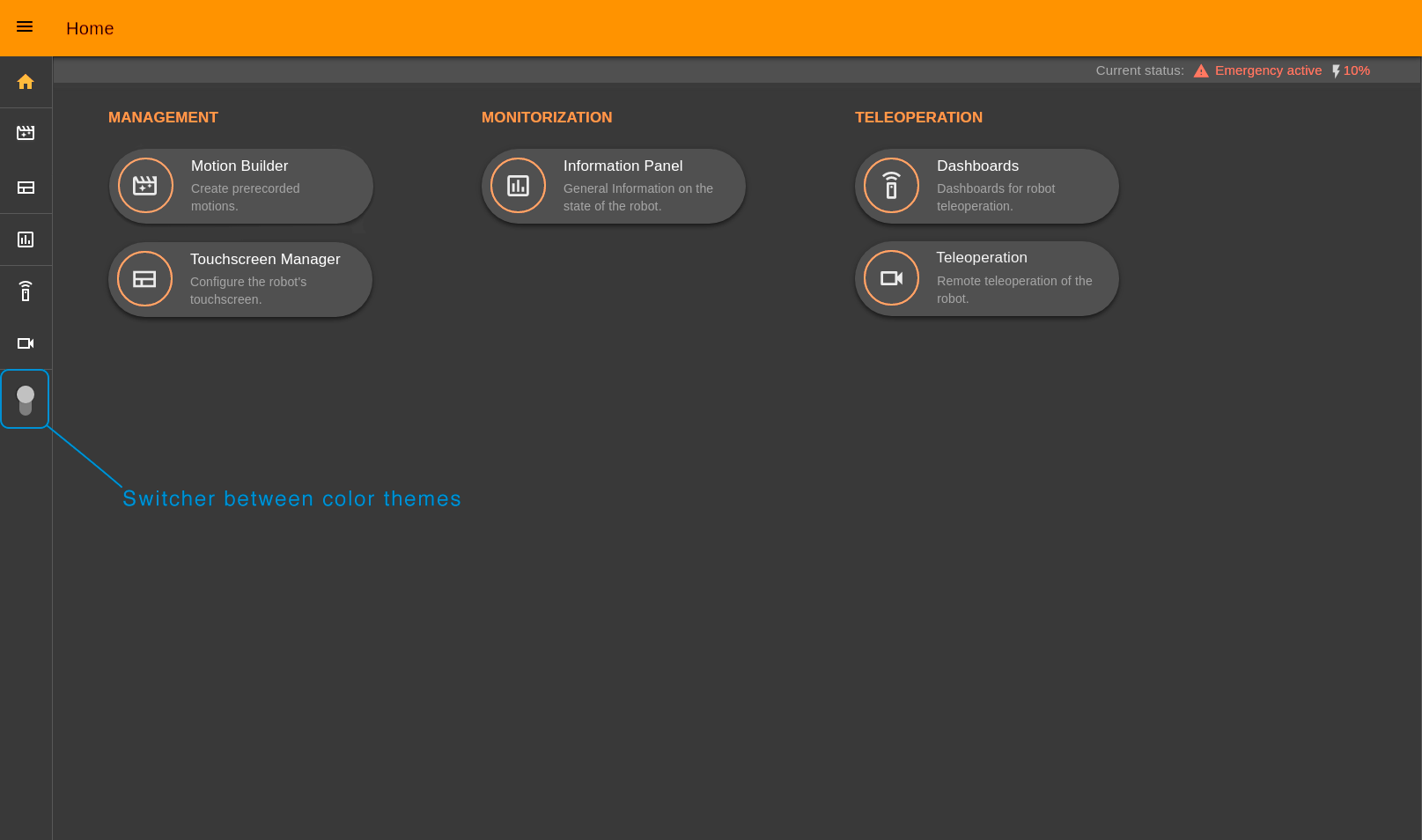

To quickly access any webapp use the menu on the left hand side. Under the menu icons there is a switcher between Light and Dark color themes.

10.6 Information Panel¶

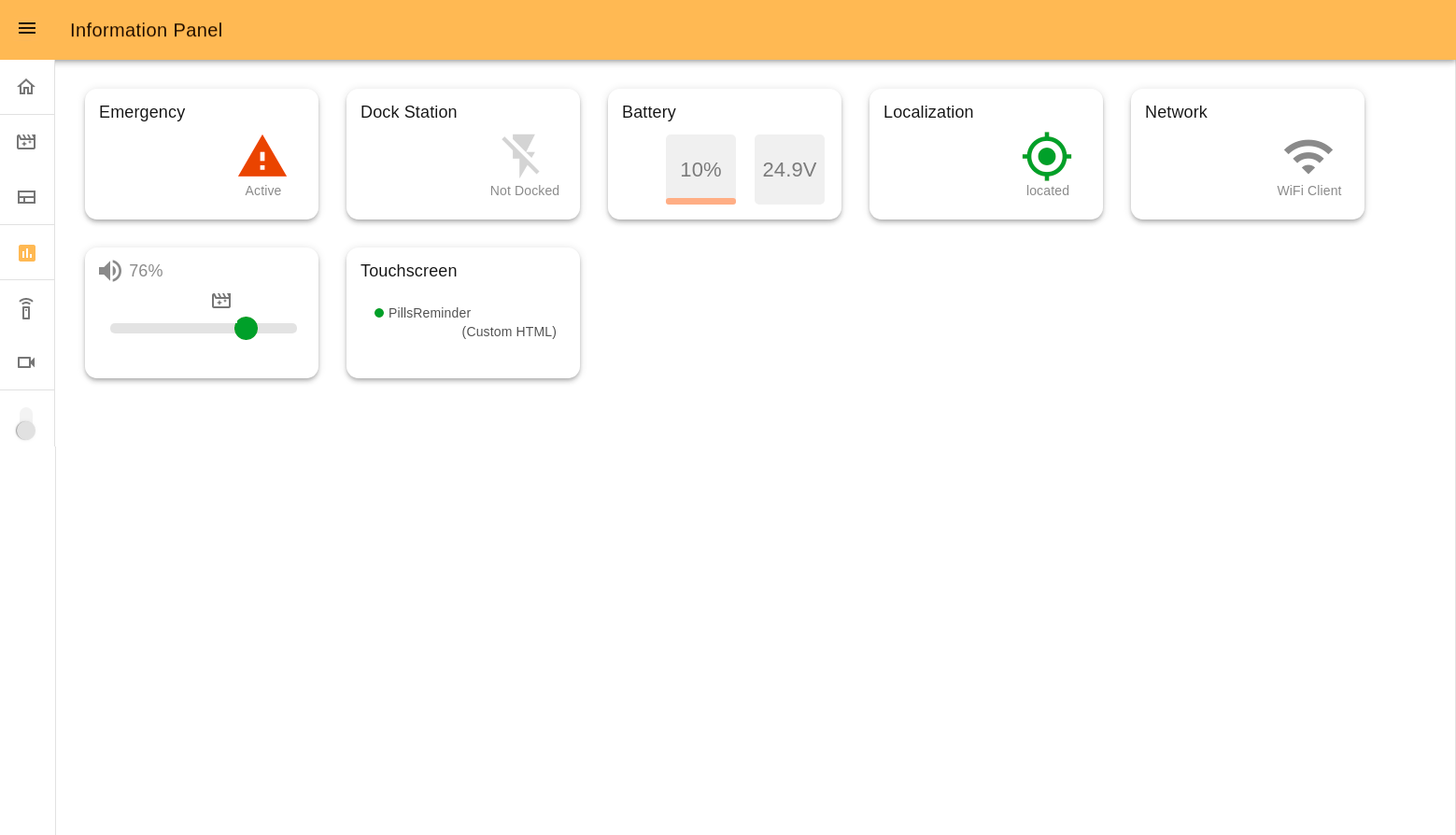

The Information Panel provides visual information on the robot’s current state.

Emergency indicates the status of the emergency button (pressed or not). When it is pressed, the icon turns red.

Dock Station indicates if the robot is connected to the dock or not.

Battery shows the current battery percentage and voltage. Yellow and red indicate medium and low battery levels, respectively.

Localization shows whether the robot is correctly localized in the navigation map.

Network indicates the currently active connection mode of the robot, which can be either Wi-Fi Client or Access Point.

Volume allows management of the robot’s volume and shows the current volume percentage.

Touchscreen is linked to the 10.8 Touchscreen Manager webapp. It shows which page is currently displayed on the touchscreen and which template that page uses.

Some examples that may be displayed:

10.7 Motion Builder¶

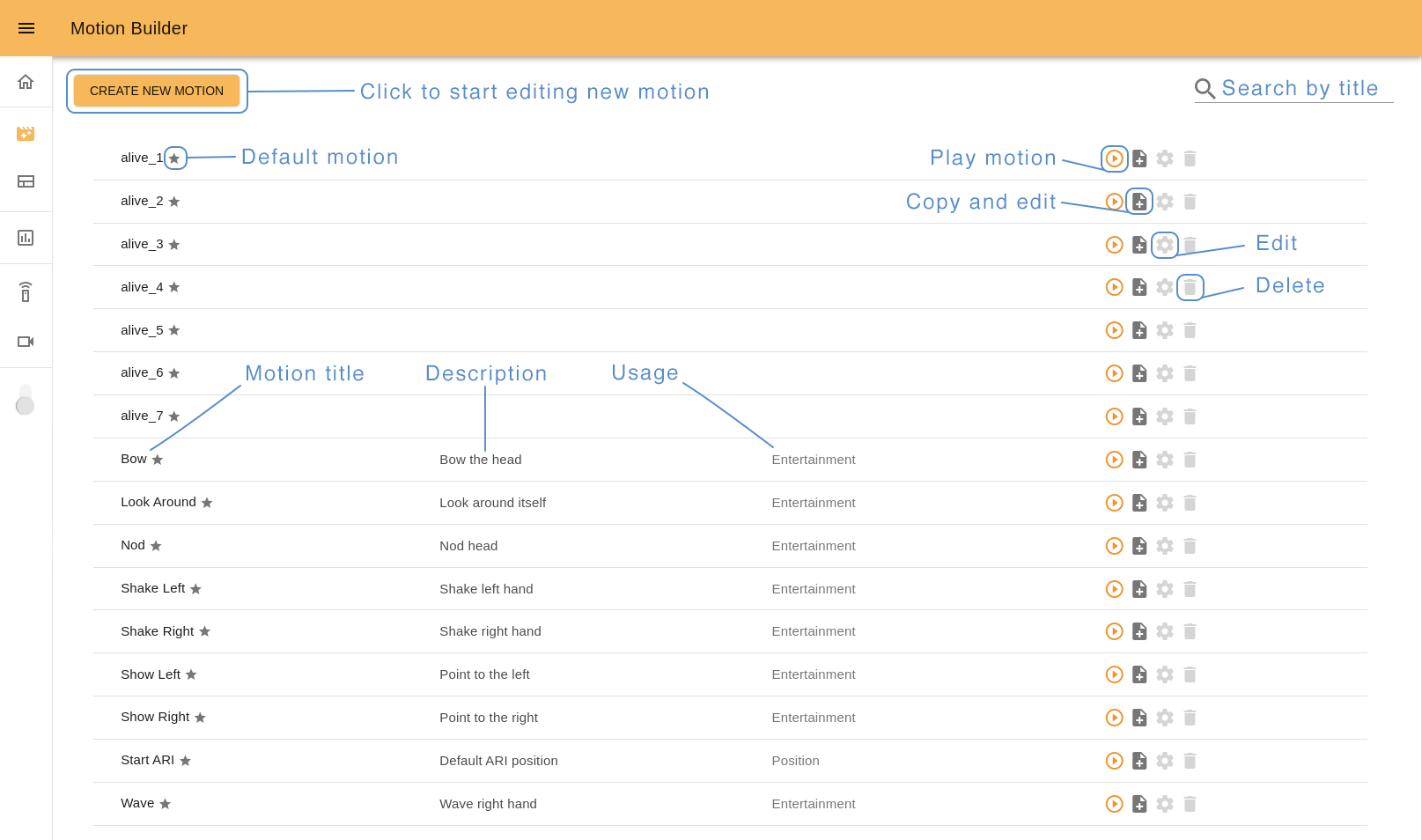

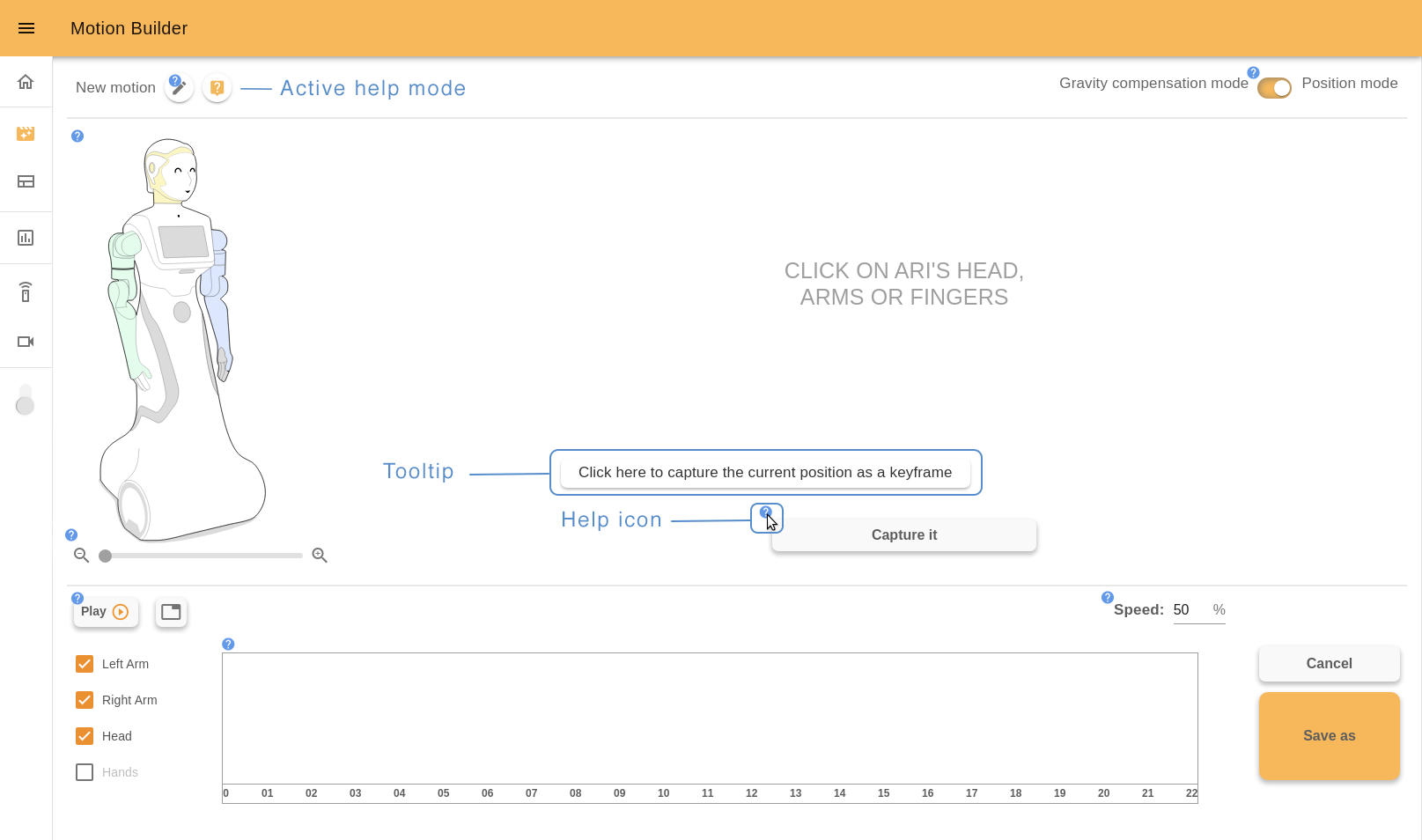

The Motion Builder is a webapp that allows you to create prerecorded motions and execute them.

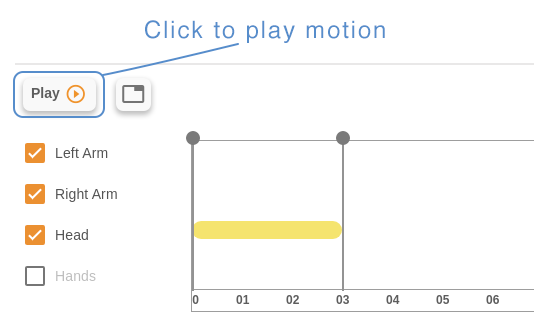

The features of Motion Builder tool include:

Creating, editing and deleting motions

Adding, editing and deleting keyframes

Positioning the robot in any keyframe

Managing joints and groups used for the motion

Executing the motion at different speeds for testing purposes

Storing the motion

The Motion Builder interface consists of a list of motions and a user-friendly editor where you can create, edit and test motions without special technical skills.

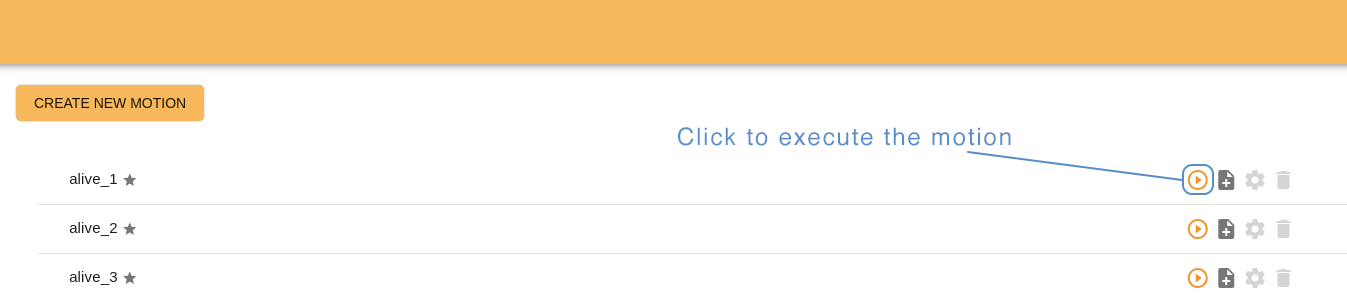

10.7.1 Start page interface¶

The start page of Motion Builder not only displays the stored motions but also lets you manage them. From here you can execute any motion, copy and edit it, edit it, or delete it. The “Edit” and “Delete” functions are only available for motions created by the user.

Motions marked with a star icon are default motions and cannot be edited or deleted. Click the “Copy and edit” icon to use them as a starting point or as part of a new motion.

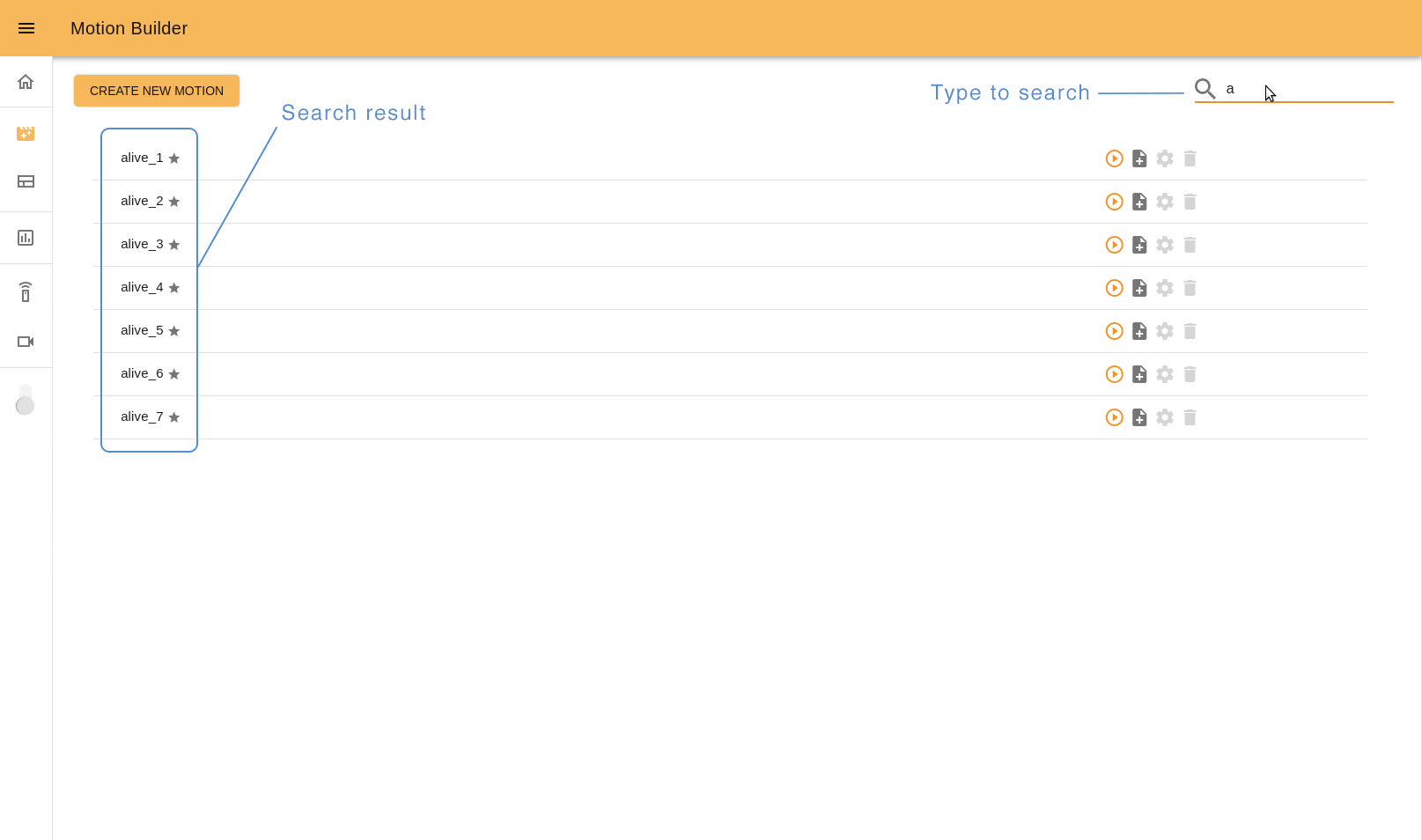

Use the search input (magnifying-glass icon) to browse the list more easily. Start typing the name of the motion you are looking for and the matching results are shown.

To open the Motion Builder editor, click the “Create new motion” button or the “Copy and edit” or “Edit” icon.

10.7.2 It’s important to know before starting¶

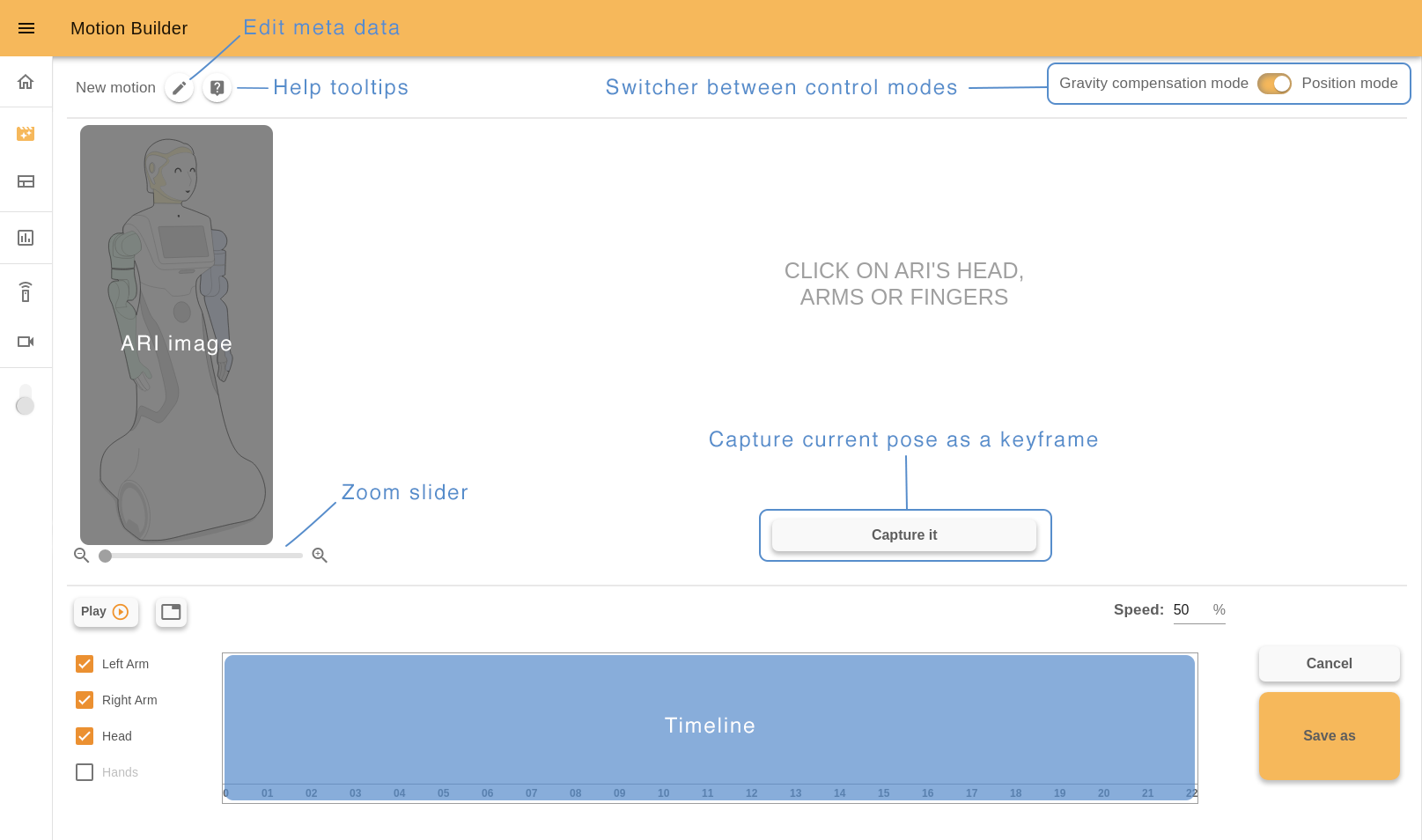

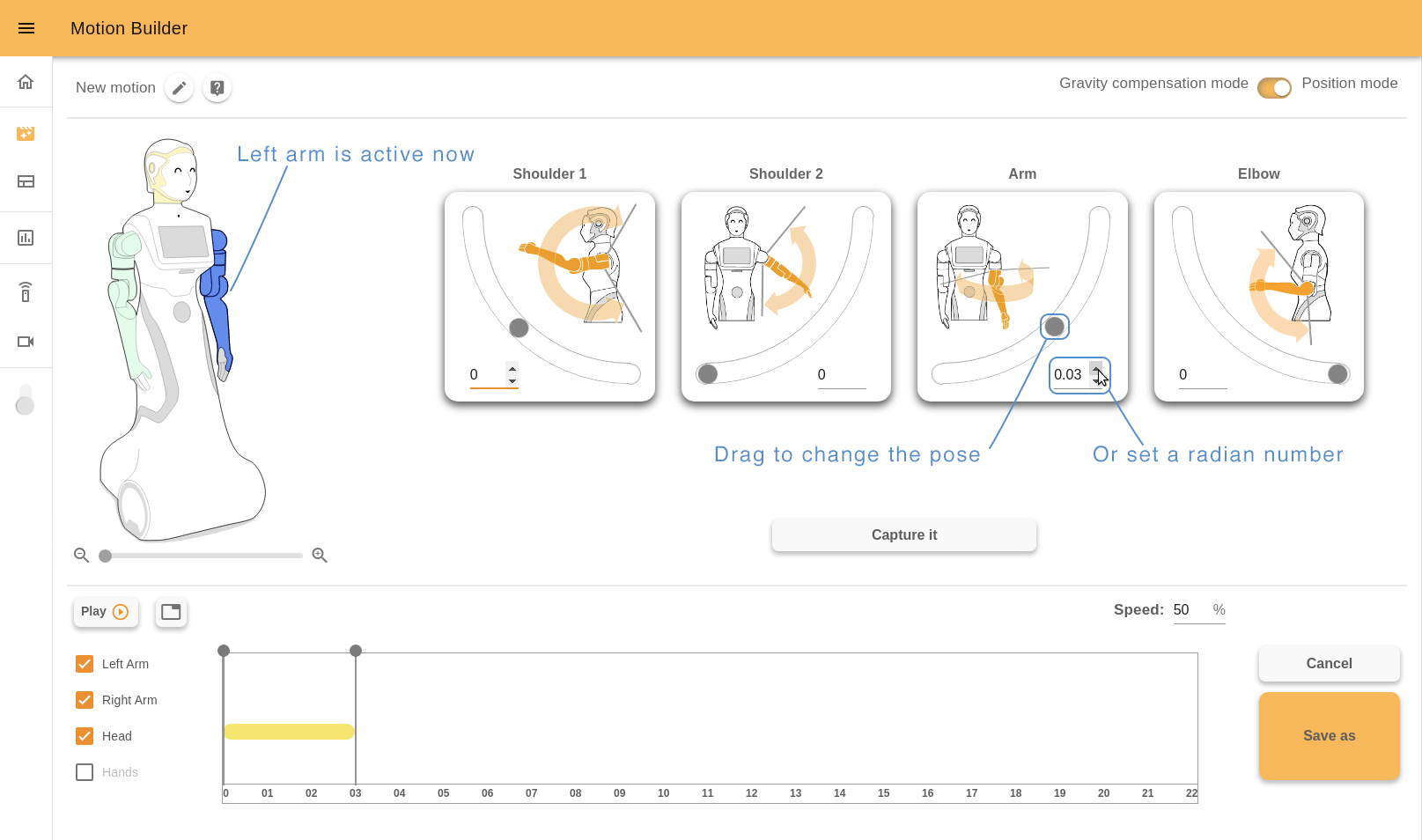

Each motion consists of a sequence of poses of the robot’s motors (keyframes) that the user has to define and capture.

The first thing to do is to move the robot into the desired keyframe pose. There are two ways to do this: by positioning the robot manually (Gravity compensation mode, an optional package) or by using the online editor tools (Position mode).

In Gravity compensation mode the motors only compensate for gravity and do not hold a commanded position, so you can freely set the pose of the robot’s head, arms and hands by moving them by hand. In Position mode you control the robot’s pose with the online tools: sliders and joystick. You can switch between control modes at any time.

To create a complete motion, capture the keyframes in the desired order.

10.7.3 Editor interface¶

This section describes the Motion Builder interface and how to interact with it.

At the top left of the page are the current motion name, the “Edit meta” button and the “Help mode” button.

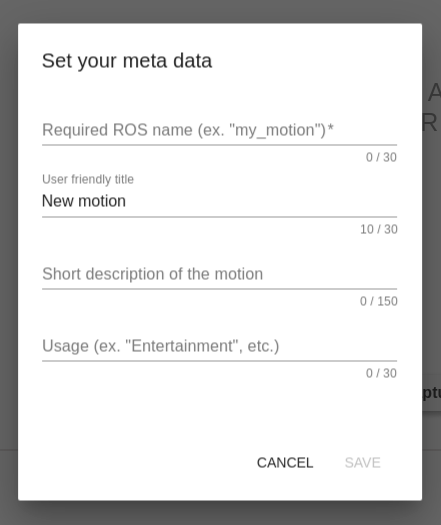

10.7.4 Edit meta popup¶

Click the “Edit meta” button to set the metadata of your motion: the ROS name (required), title, short description and usage. This information is displayed on the Motion Builder start page (the ROS name is normally not shown, but if no title has been set, the ROS name is displayed instead).

ROS name is a required field. It must be a unique word that describes the motion, starts with a letter and consists only of letters, numbers and underscores (_). It cannot be changed after a new motion has been saved. Examples: “nod”, “shake_1”, “shake_2”, etc.
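The ROS name rules can be expressed as a regular expression. The Python sketch below is a hypothetical pre-check you could run on a candidate name. It assumes that “letters” means ASCII letters, and the WebGUI’s own validation may differ.

```python
import re

# Assumption: "letters" means ASCII letters a-z and A-Z.
# First character: a letter; remaining characters: letters, digits or "_".
ROS_NAME_PATTERN = re.compile(r"[A-Za-z][A-Za-z0-9_]*")


def is_valid_ros_name(name: str, existing_names: set[str]) -> bool:
    """Hypothetical pre-check for a motion's ROS name."""
    if ROS_NAME_PATTERN.fullmatch(name) is None:
        return False
    # The name must also be unique among the stored motions.
    return name not in existing_names


if __name__ == "__main__":
    stored = {"nod"}
    for candidate in ["shake_1", "nod", "1shake", "shake-left"]:
        print(candidate, is_valid_ros_name(candidate, stored))
```

Using `fullmatch` rather than `match` ensures that the whole name is checked, so a name such as “shake-left” is rejected even though it starts with valid characters.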

The user-friendly title should be a word or short phrase that helps you quickly understand what the motion does. It is not required, but it is good practice to fill it in. Examples: “Nod”, “Shake Left”, “Shake Right”, etc.

In the short description field, you can describe important details of the motion so that it is easy to distinguish from the others. Examples: “Nod head”, “Shake right hand”, “Shake left hand”, etc.

In the usage field, define the main use of the motion. It is also good practice to fill this in, because in the 10.9 Command desks webapp (“Motions” desk) your motions are grouped by their usage. Examples: “Entertainment”, “Greeting”, “Dance”, etc.
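The following Python sketch shows, under stated assumptions, how these metadata fields relate: the start page shows the title and falls back to the ROS name when no title is set, and the “Motions” desk groups motions by usage. `MotionMeta`, `group_by_usage` and the “Uncategorized” label for motions without a usage are hypothetical and are not taken from the WebGUI’s implementation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class MotionMeta:
    """Hypothetical container for the fields of the Edit meta popup."""
    ros_name: str          # required, unique, cannot be changed after saving
    title: str = ""        # optional user-friendly title
    description: str = ""  # optional short description
    usage: str = ""        # optional usage, e.g. "Greeting"

    def display_title(self) -> str:
        # The start page falls back to the ROS name when no title is set.
        return self.title or self.ros_name


def group_by_usage(motions: list[MotionMeta]) -> dict[str, list[str]]:
    """Group motion display titles by usage, as the "Motions" desk does."""
    groups: dict[str, list[str]] = defaultdict(list)
    for motion in motions:
        # "Uncategorized" is a hypothetical label for motions with no usage.
        groups[motion.usage or "Uncategorized"].append(motion.display_title())
    return dict(groups)


if __name__ == "__main__":
    motions = [
        MotionMeta("nod", "Nod", "Nod head", "Greeting"),
        MotionMeta("shake_1", usage="Greeting"),
        MotionMeta("wave"),
    ]
    print(group_by_usage(motions))
    # {'Greeting': ['Nod', 'shake_1'], 'Uncategorized': ['wave']}
```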

10.7.5 Help tooltips¶

The “Help mode” button shows or hides help tooltips on the screen. When it is active, blue question-mark icons appear. Hover the cursor over any icon to read a tip about using Motion Builder.

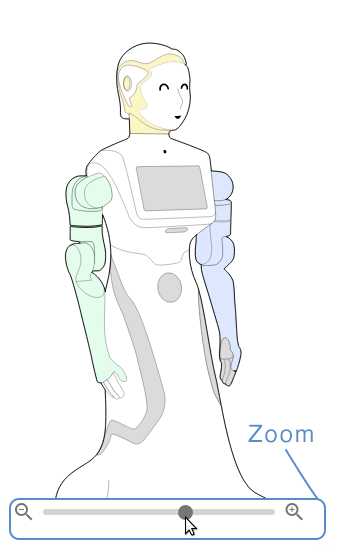

10.7.6 Position mode¶

At the top right of the page there is a switch between the control modes. You can use it at any moment during editing.

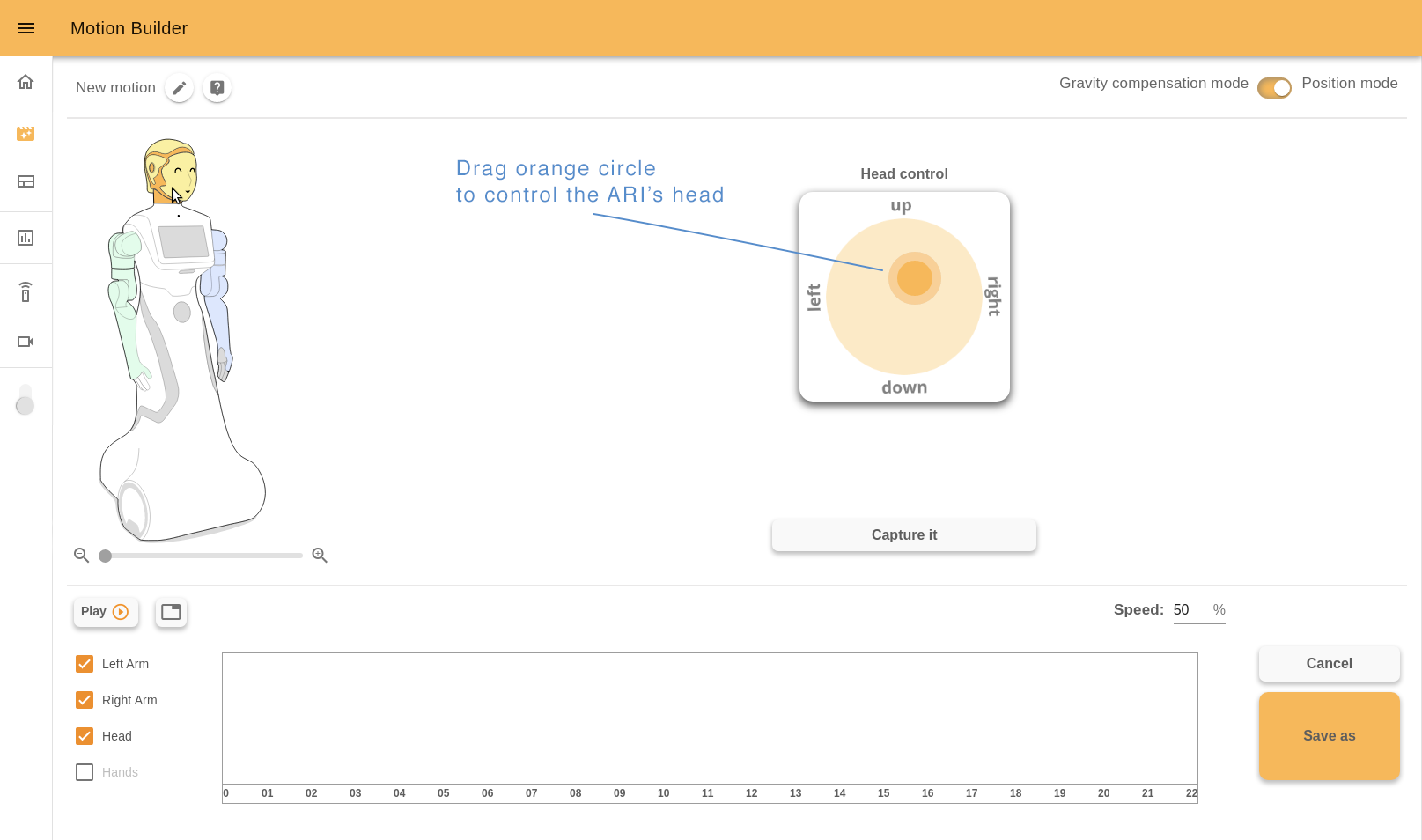

When Position mode is active, you can interact with the image of ARI and choose which part of the robot to control. Use the zoom slider below the image to change its size.

The colored parts can be selected. For example, click ARI’s head to control it.

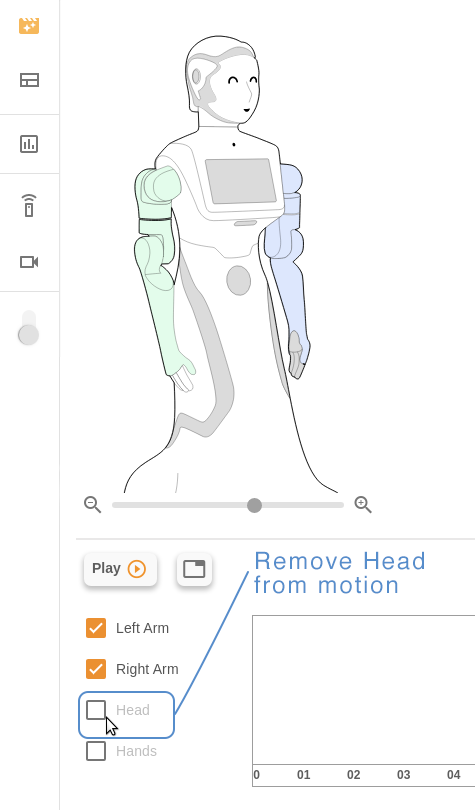

You can also manage the joints and groups used in the motion. Check or uncheck the boxes with the joint names at the bottom left of the page to add or remove them. In the example below, ARI’s head has been removed from the motion, so it is no longer colored on the interactive image.

10.7.7 Joystick¶

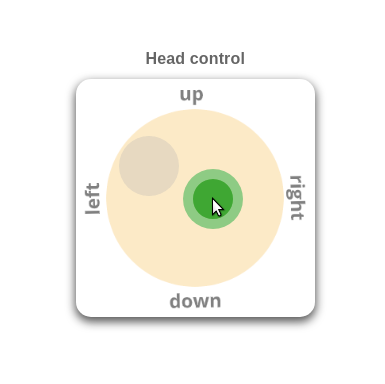

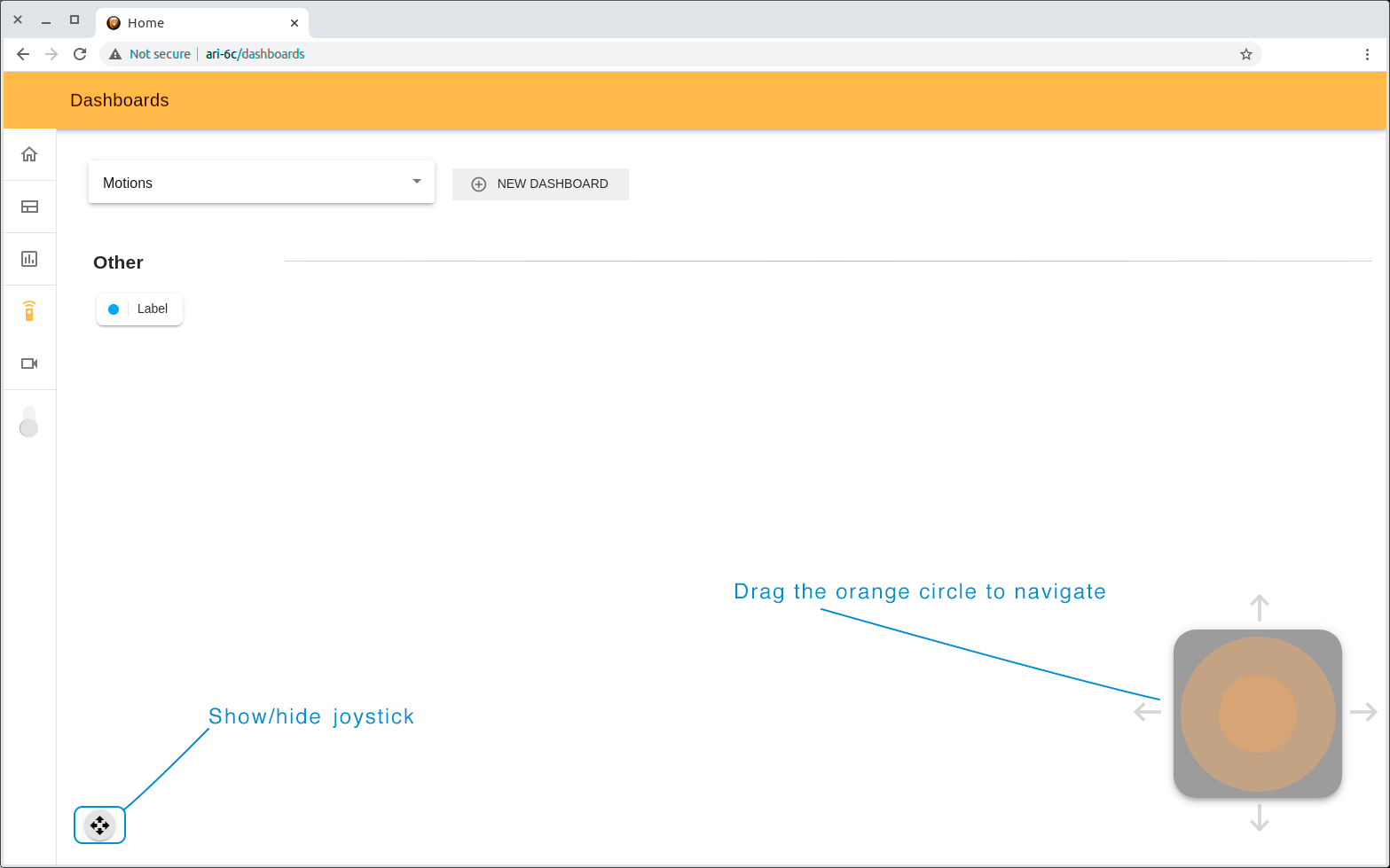

To control ARI’s head, use the joystick. Drag the orange circle (green when the cursor is over it) and watch how the pose of the robot’s head changes.

If you move the joystick quickly, the robot may briefly lag behind it. In this case a grey circle shows the current pose of the head. When the robot reaches the pose set by the joystick, the grey circle disappears.

Click the “Capture it” button to store the keyframe.

10.7.8 Sliders¶

Sliders are used to control the robot’s other joint groups. For example, click ARI’s arm.

ARI’s arm consists of several joints. You can create complex motions by changing the position of each joint separately.

Each joint has a title and a descriptive image. To control a joint, drag the slider handle or change the position value in the input field (type the desired number or use the arrows).

Click the “Capture it” button to store the keyframe.

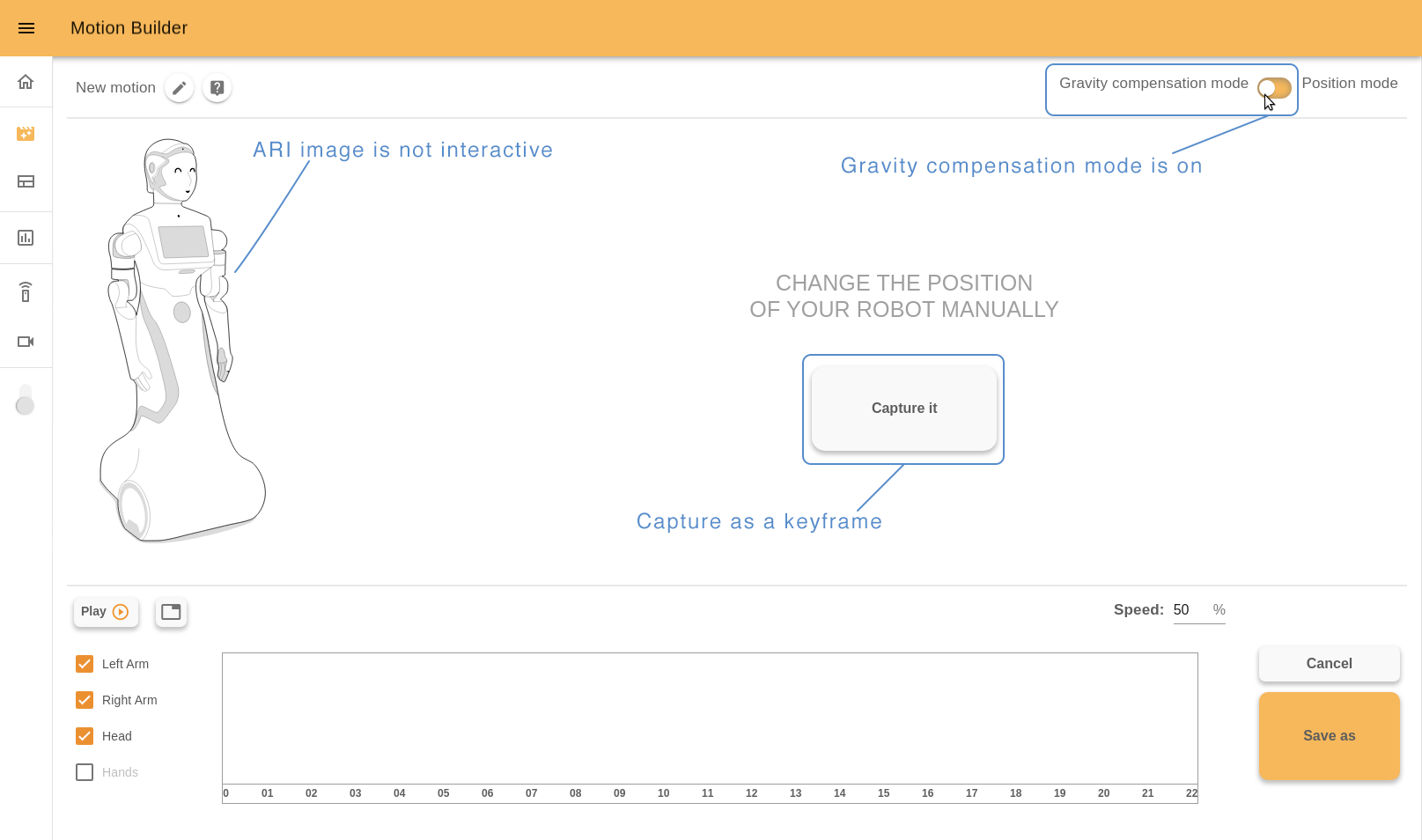

10.7.9 Gravity compensation mode (optional package)¶

When Gravity compensation mode is selected, ARI’s image is not interactive. Move the robot into the desired pose by hand and click the “Capture it” button to store the keyframe.

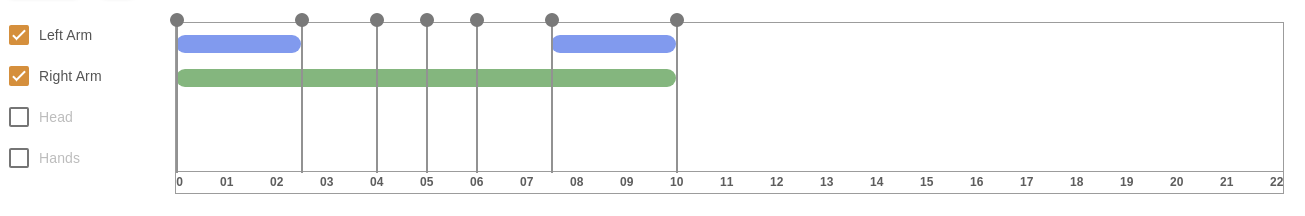

10.7.10 Timeline¶

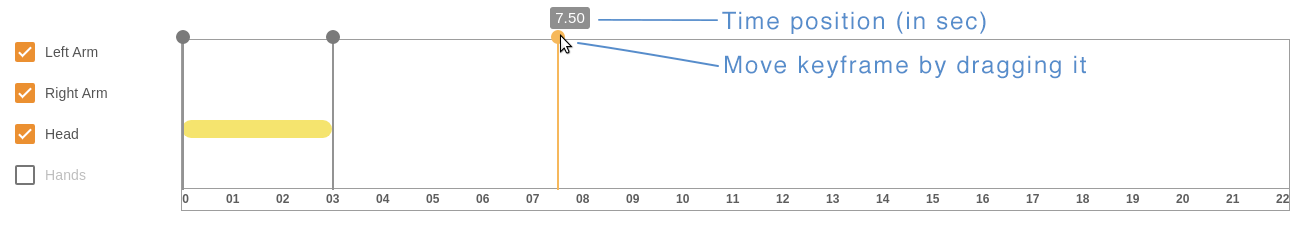

After you create a new keyframe, it appears on the timeline as a pointer. If the pose of a joint group has changed, a colored line appears next to the corresponding checkbox. The colors match those of the joint groups on ARI’s image: for example, orange for the head, green for the right arm and blue for the left arm.

Each pointer is a keyframe you captured. Its position on the timeline reflects the time the robot needs to perform the motion up to that keyframe. You can drag a keyframe to move it along the timeline.

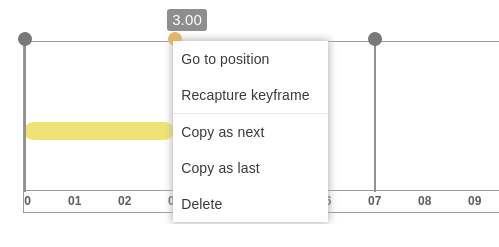

Double-click a keyframe to open its context menu:

Go to position - the robot moves to the captured position.

Recapture keyframe - the selected keyframe is replaced with the current pose of the robot.

Copy as next - a copy of this keyframe is inserted right after it.

Copy as last - a copy of this keyframe is added to the end of the motion.

Delete - the selected keyframe is deleted.
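To make the effect of each context-menu action concrete, the following Python sketch models a motion as a list of keyframes. The `Keyframe` class, the function names and the joint name `head_pan` are hypothetical illustrations, not the format Motion Builder uses to store motions.

```python
from copy import deepcopy
from dataclasses import dataclass, field


@dataclass
class Keyframe:
    """Hypothetical keyframe: a duration plus one position per joint."""
    duration: float  # seconds
    positions: dict[str, float] = field(default_factory=dict)  # joint -> radians


def go_to_position(frames: list[Keyframe], i: int) -> dict[str, float]:
    # Returns the pose the robot would be sent to; sending it is out of scope.
    return dict(frames[i].positions)


def recapture(frames: list[Keyframe], i: int, current_pose: dict[str, float]) -> None:
    # Replace the stored pose with the robot's current pose.
    frames[i].positions = dict(current_pose)


def copy_as_next(frames: list[Keyframe], i: int) -> None:
    # Insert an independent copy right after keyframe i.
    frames.insert(i + 1, deepcopy(frames[i]))


def copy_as_last(frames: list[Keyframe], i: int) -> None:
    # Append an independent copy of keyframe i to the end of the motion.
    frames.append(deepcopy(frames[i]))


def delete_keyframe(frames: list[Keyframe], i: int) -> None:
    del frames[i]


if __name__ == "__main__":
    motion = [Keyframe(1.0, {"head_pan": 0.0}), Keyframe(1.5, {"head_pan": 0.6})]
    copy_as_next(motion, 0)     # [A, A', B]
    copy_as_last(motion, 1)     # [A, A', B, A'']
    delete_keyframe(motion, 2)  # [A, A', A'']
    print(len(motion))  # 3
```

`deepcopy` is used so that editing a copied keyframe later does not also change the original one.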

10.7.11 InfoTable¶

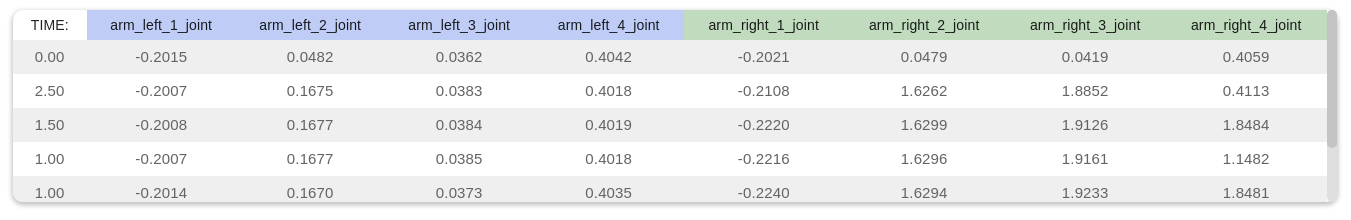

The timeline has another view mode: the InfoTable. It shows detailed information about each keyframe: how long the keyframe lasts (in seconds) and the angular position of each joint (in radians).
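Assuming that each keyframe’s duration is the time needed to move from the previous keyframe, the time at which each keyframe is reached is the running sum of the durations. Joint positions can also be converted from radians to degrees for easier reading. This is a sketch under that assumption, and the function names are hypothetical.

```python
import math
from itertools import accumulate


def keyframe_times(durations: list[float]) -> list[float]:
    """Time (s) at which each keyframe is reached, assuming each duration is
    measured from the previous keyframe (the first from the start of the motion)."""
    return list(accumulate(durations))


def positions_in_degrees(positions: dict[str, float]) -> dict[str, float]:
    """Convert one InfoTable row from radians to degrees."""
    return {joint: math.degrees(rad) for joint, rad in positions.items()}


if __name__ == "__main__":
    print(keyframe_times([1.0, 0.5, 2.0]))  # [1.0, 1.5, 3.5]
    print(positions_in_degrees({"head_pan": math.pi / 4}))
```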

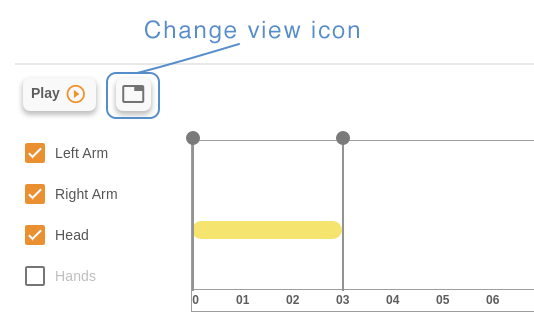

To change the view, click the “table” icon next to the “Play” button.

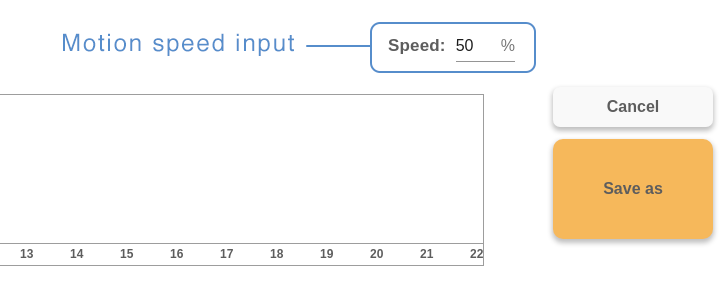

10.7.12 Speed of the execution¶

The speed value controls how fast the motion is executed. Set it to 100% to execute the motion at full speed, or reduce it to slow the motion down.
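A minimal sketch of how a speed percentage could affect timing, assuming that running at p% speed stretches every keyframe time by a factor of 100/p (so 50% takes twice as long). The exact scaling used by Motion Builder is not documented here, and `scaled_times` is a hypothetical name.

```python
def scaled_times(times: list[float], speed_percent: float) -> list[float]:
    """Stretch keyframe times for a given speed, assuming time scales as 100/p."""
    if not 0 < speed_percent <= 100:
        raise ValueError("speed must be greater than 0% and at most 100%")
    factor = 100.0 / speed_percent
    return [t * factor for t in times]


if __name__ == "__main__":
    times = [1.0, 1.5, 3.5]
    print(scaled_times(times, 100))  # [1.0, 1.5, 3.5]
    print(scaled_times(times, 50))   # [2.0, 3.0, 7.0]
```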

10.7.13 Play motion¶

You can play a motion while editing it or afterwards. To play a motion from the editor, click the “Play” button below ARI’s image.

If you want to play the motion from the start page, click the “play” icon of the chosen motion.

You can also play motions from the Command desks webapp: open the “Motions” desk and click the corresponding button.

Motions can also be played from ARI’s touchscreen. Create a page with buttons using the “Page with buttons” or “Slideshow” template and assign a motion to a button on the screen. When a user touches this button, ARI executes the motion.

10.7.14 Save motion¶

To leave the editor without saving your changes, click “CANCEL”. To save the changes and leave, click “SAVE”. Remember that the ROS name is a required field, so fill it in before saving the motion.

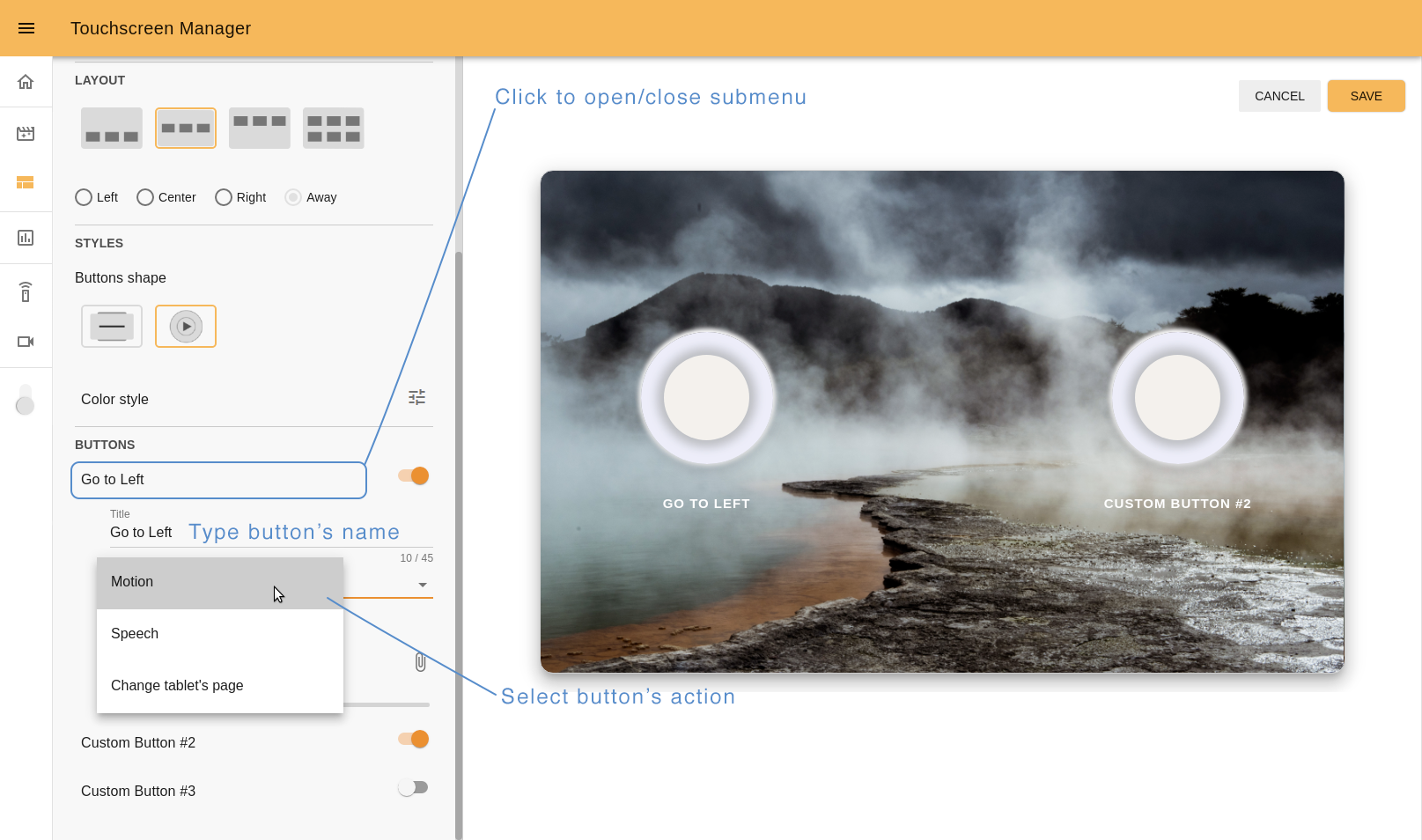

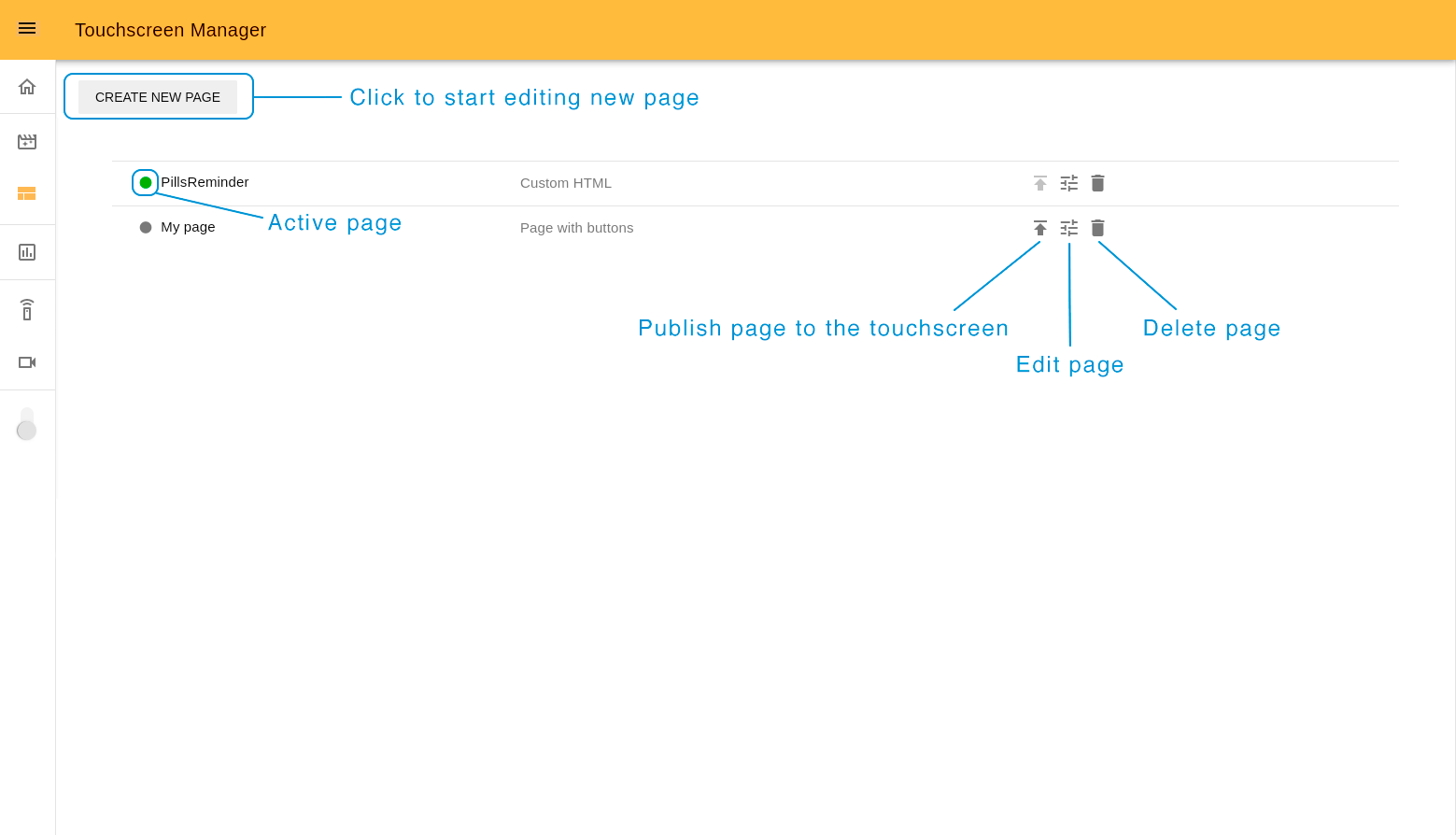

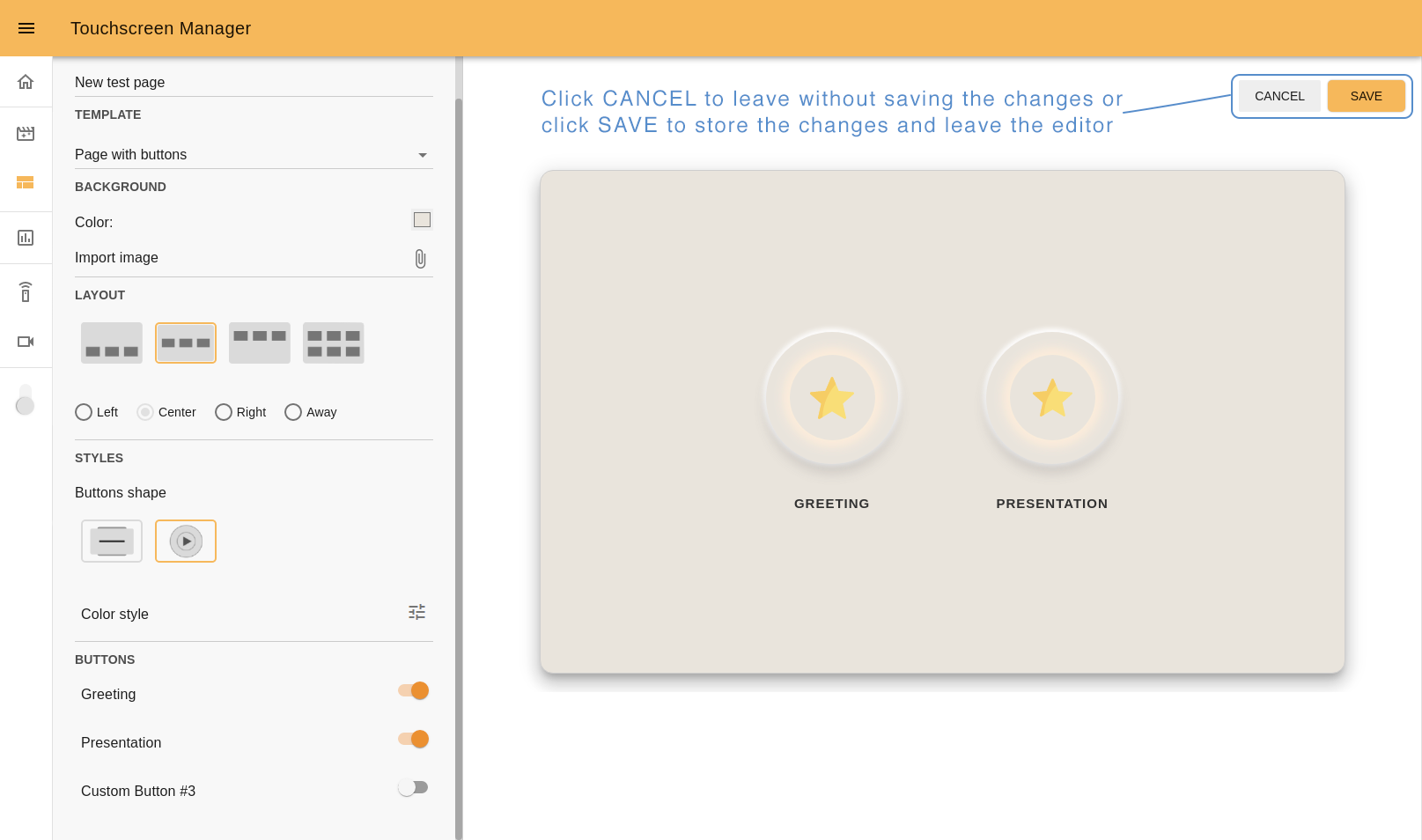

10.8 Touchscreen Manager¶

The Touchscreen Manager is a webapp that allows you to create web pages and publish them on ARI’s touchscreen.

The Touchscreen Manager consists of a list of pages to display and an editor, where you can create pages from predefined templates or load your own HTML files.

The list shows all the pages that have been created, and from here you can also edit or delete them. To create a new page, click the “CREATE NEW PAGE” button.

To make a page appear on ARI’s touchscreen, click the publish button next to it. The published page is then marked with a green circle.

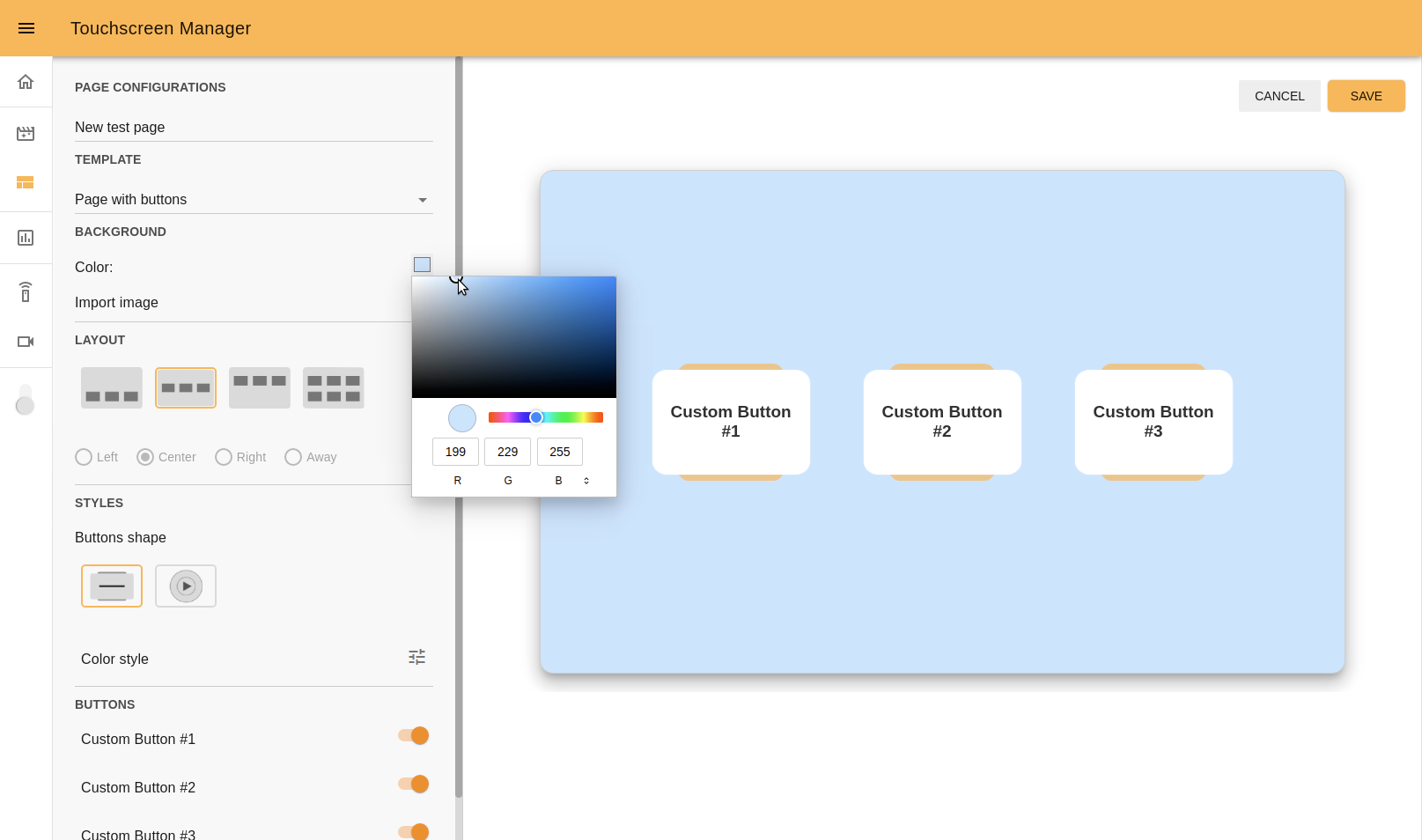

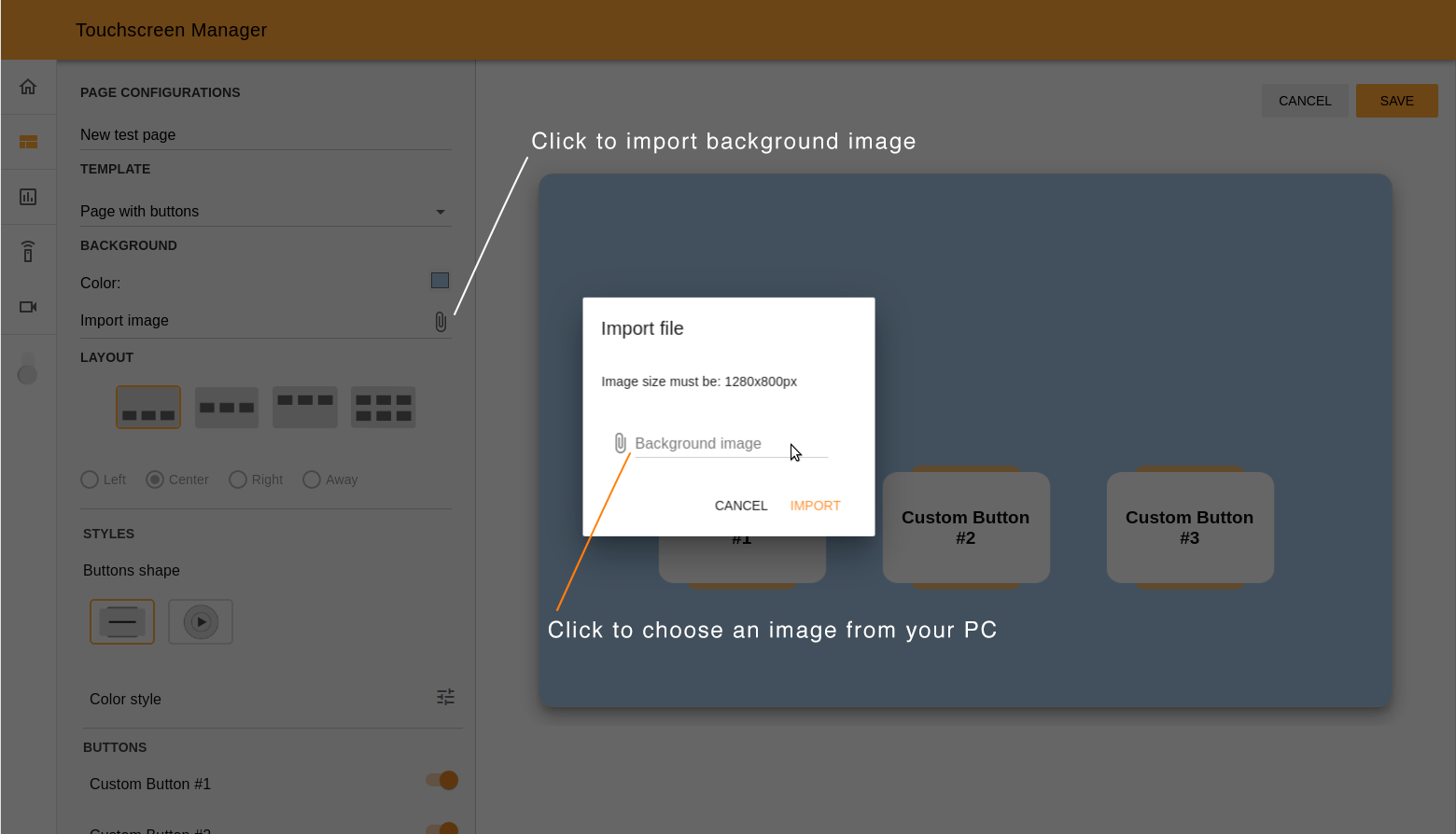

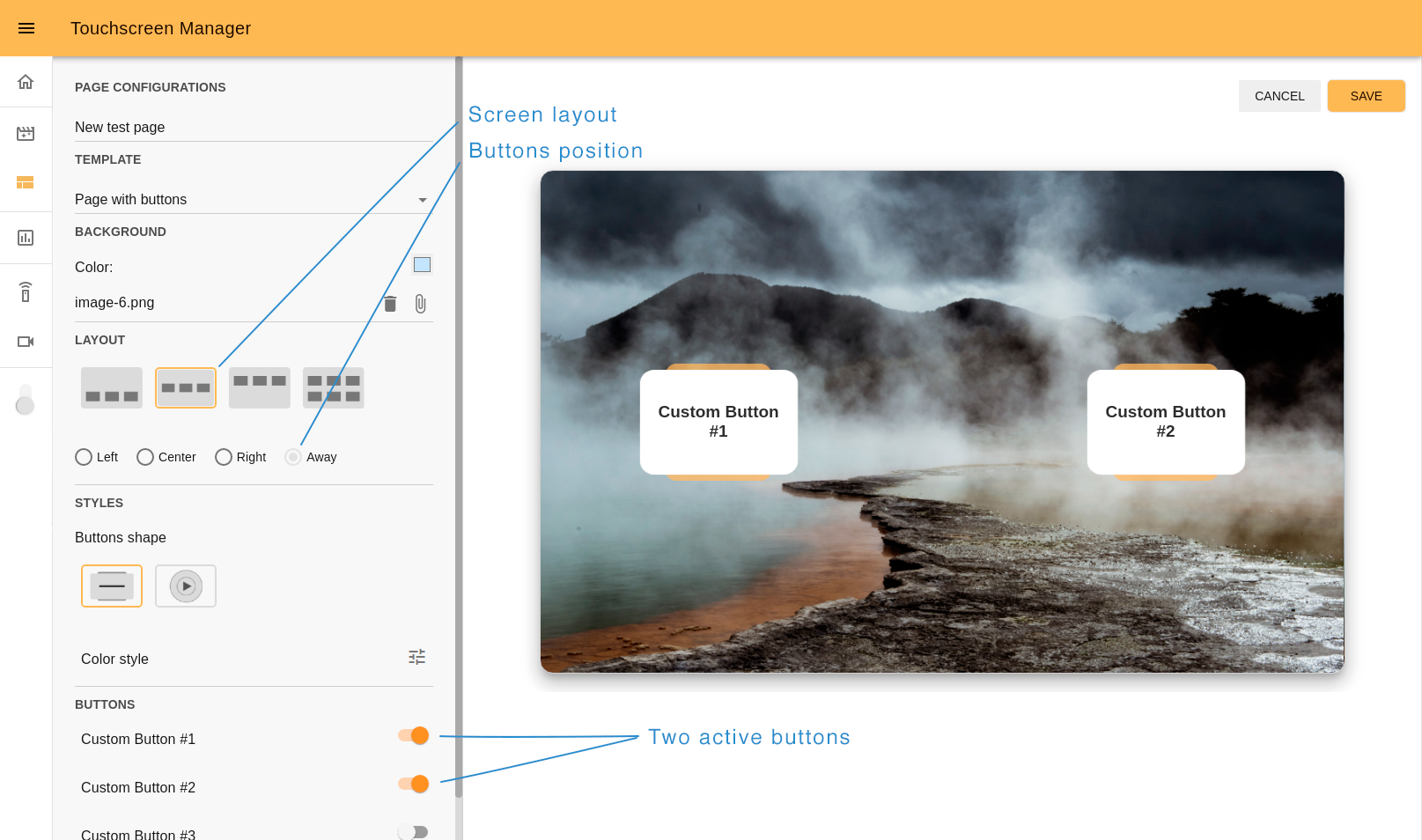

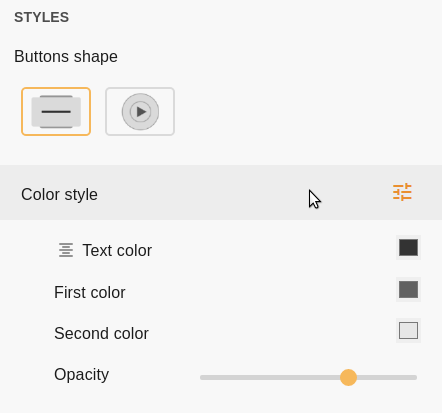

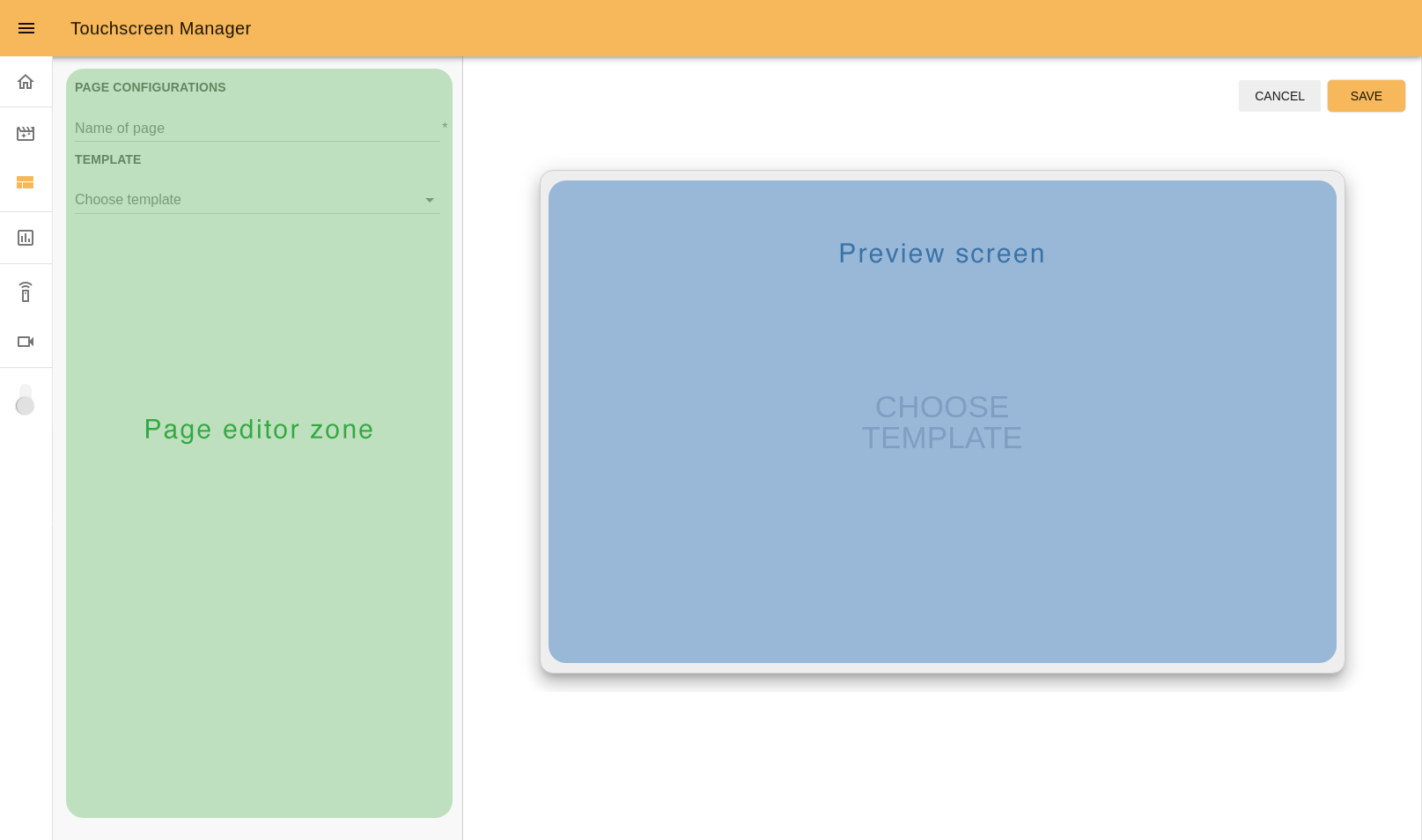

10.8.1 Create a new page¶

The Touchscreen editor lets you create pages by customizing predefined templates or by importing your own webpage in .zip format. While a page is being edited, a preview is shown on the preview screen on the right.

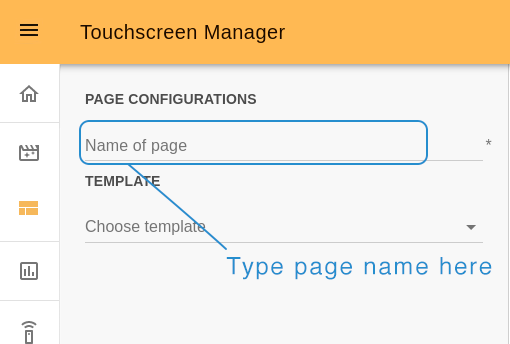

The full process for creating a new page is as follows.

Step 1. Choose the page name. Note that the name is permanent and cannot be changed later.

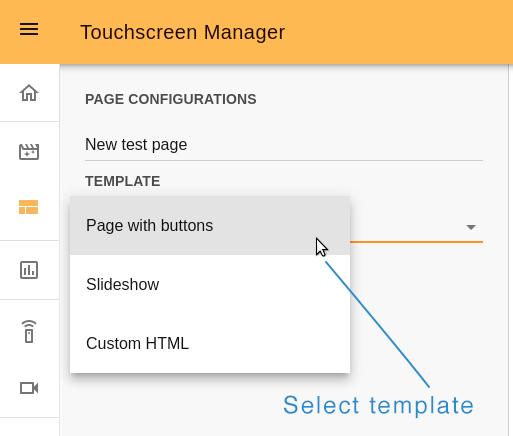

Step 2. Choose the template. There are three options: 10.8.2 Page with buttons, 10.8.3 Slideshow and 10.8.4 Custom HTML.

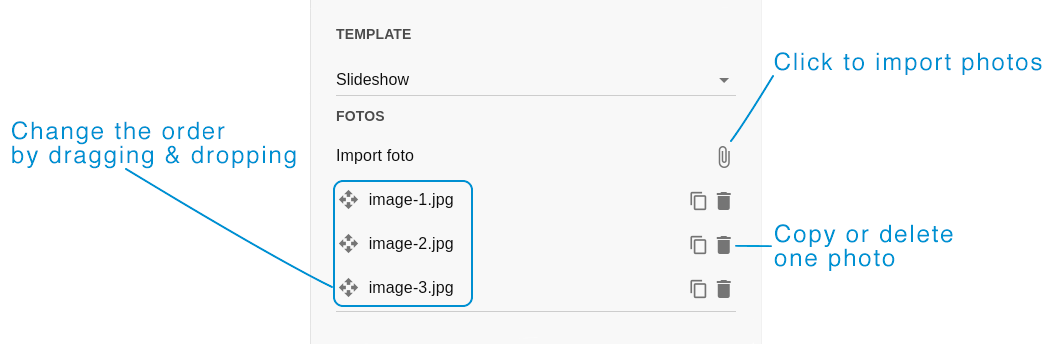

10.8.3 Slideshow¶

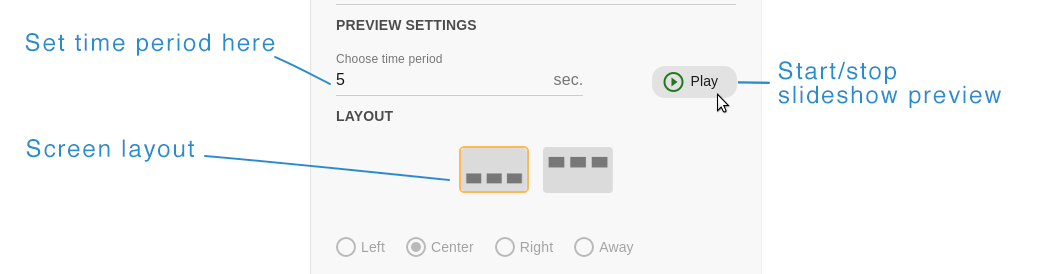

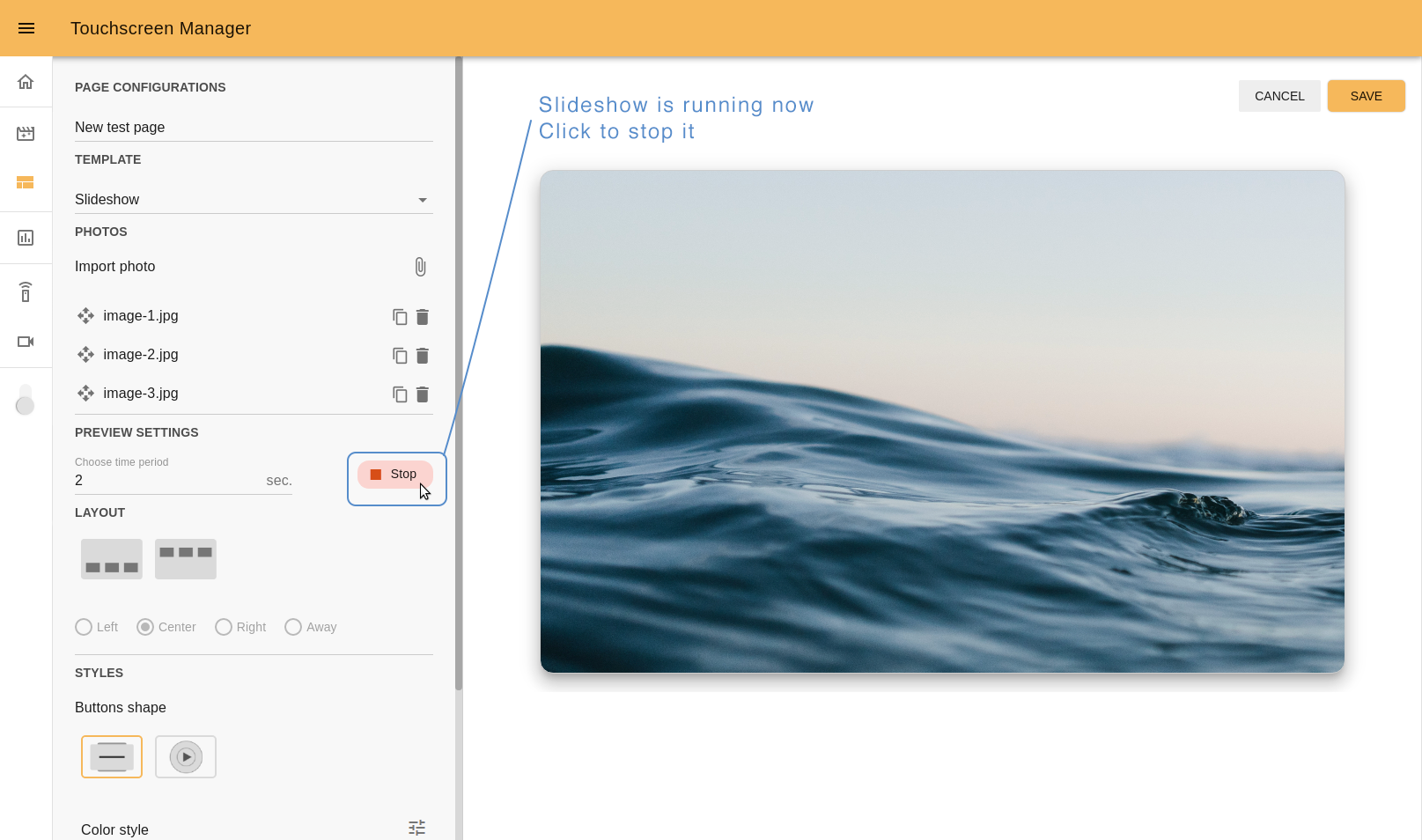

With this template you can create a presentation from a series of still images. After choosing a page name and the Slideshow template, the next steps are:

Step 3. Import photos. Click the attach icon and import the photos into your presentation one by one. The imported photos are listed in the same section, and you can drag and drop them to change the order in which they appear.

Step 4. Set preview settings. Choose how often the images change. You can also use the “Play/Stop” button to check the result on the preview screen.

Importing at least one photo and setting a time period are mandatory steps before you can use the Play button.

Click the Stop button to pause the slideshow preview.
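Assuming the slideshow loops back to the first image after the last one, the image shown at a given moment depends only on the elapsed time and the chosen period. The hypothetical `slide_at` function below also mirrors the two mandatory conditions for playback: at least one photo and a time period.

```python
def slide_at(elapsed_s: float, period_s: float, n_images: int) -> int:
    """Index of the image shown after elapsed_s seconds (assumes looping)."""
    # Both checks mirror the mandatory steps before Play can be used.
    if n_images < 1:
        raise ValueError("import at least one photo")
    if period_s <= 0:
        raise ValueError("set a positive time period")
    return int(elapsed_s // period_s) % n_images


if __name__ == "__main__":
    # Three photos, changing every 5 seconds.
    print([slide_at(t, 5, 3) for t in (0, 4, 5, 10, 15)])  # [0, 0, 1, 2, 0]
```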

Step 5. Choose layout. The main difference between this step and the corresponding one in the 10.8.2 Page with buttons template is the number of buttons that can be displayed: you can add up to three static custom buttons, which are shown throughout the presentation. The buttons can be placed at the top or at the bottom of the touchscreen, and their alignment can be adjusted when there are fewer than three buttons in a row.

Step 6. Customize buttons. This step is identical to the one in the previous template: 10.8.2 Page with buttons, step 5.

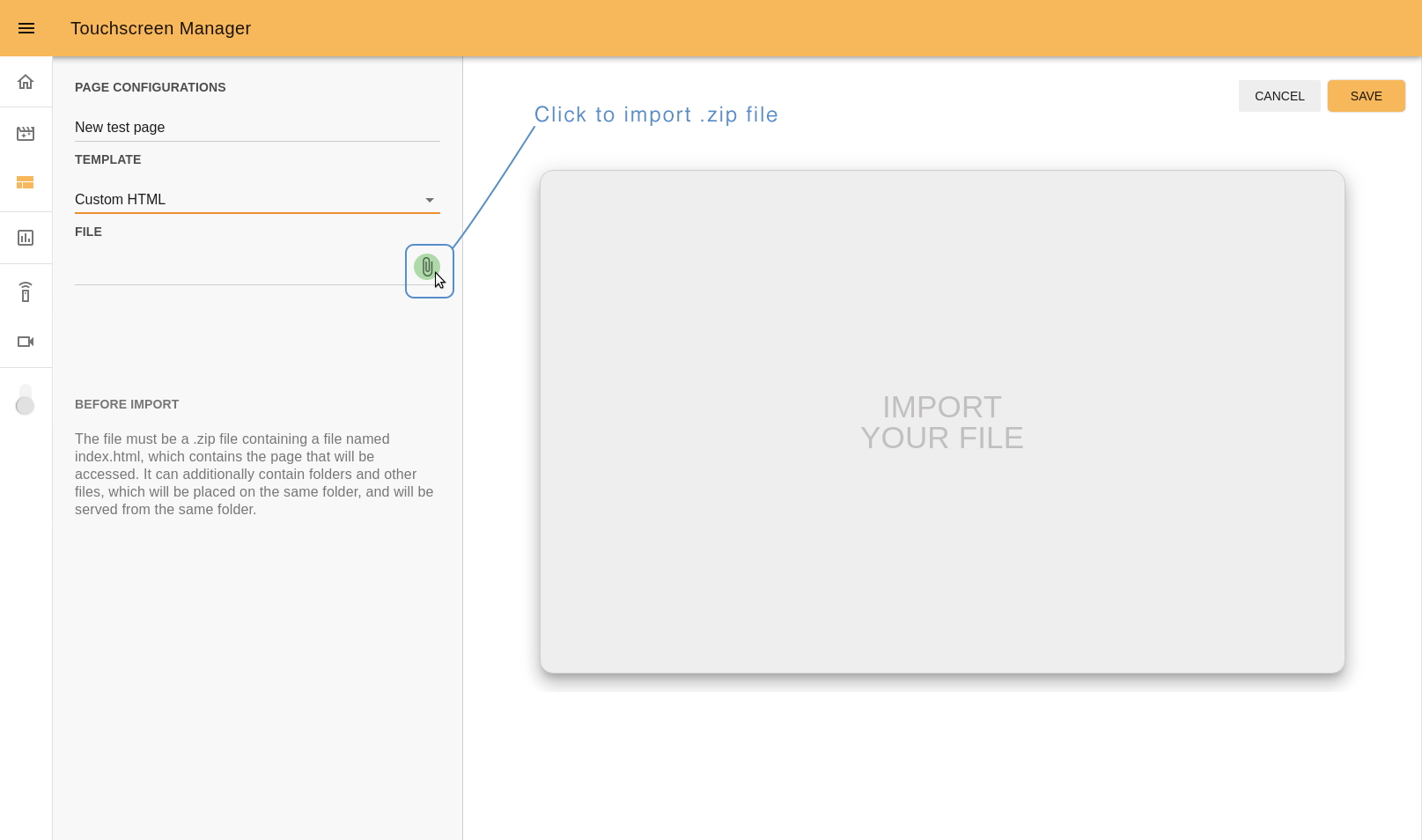

10.8.4 Custom HTML¶

Use this template if you want to display your own webpage. In the File section, click the attach icon and import your file.

To display your webpage correctly:

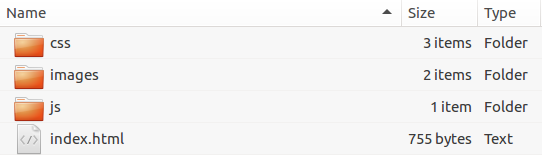

the file must be in .zip format;

the zip file must contain a file named index.html, which contains the page that will be accessed;

it can additionally contain folders and other files, which will be placed in the same folder.

An example of how files can be organized before zip compression:

To check if you imported the file correctly, save the page, publish it, and view the result directly on ARI’s touchscreen.
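The following Python sketch packages a local folder into a .zip that meets these requirements and checks an existing archive before you upload it. It assumes that `index.html` must be at the top level of the archive rather than inside a subfolder. `build_page_zip` and `check_page_zip` are hypothetical helpers, not part of the Touchscreen Manager.

```python
import zipfile
from pathlib import Path


def build_page_zip(site_dir: Path, zip_path: Path) -> None:
    """Zip the contents of site_dir so that index.html sits at the archive root."""
    site_dir = Path(site_dir)
    zip_path = Path(zip_path).resolve()
    if not (site_dir / "index.html").is_file():
        raise FileNotFoundError(f"{site_dir / 'index.html'} not found")
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(site_dir.rglob("*")):
            # Skip directories and the archive itself if it is inside site_dir.
            if path.is_file() and path.resolve() != zip_path:
                # Store paths relative to site_dir, e.g. "img/logo.png",
                # so subfolders keep their structure next to index.html.
                zf.write(path, arcname=path.relative_to(site_dir).as_posix())


def check_page_zip(zip_path: Path) -> bool:
    """Return True if zip_path is a zip archive with index.html at its root."""
    if not zipfile.is_zipfile(zip_path):
        return False
    with zipfile.ZipFile(zip_path) as zf:
        return "index.html" in zf.namelist()
```

For example, `build_page_zip(Path("my_page"), Path("my_page.zip"))` followed by `check_page_zip(Path("my_page.zip"))` should return `True` when `my_page/index.html` exists.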

10.8.5 Cancel or save¶

At the top right of the page are the “CANCEL” and “SAVE” buttons. Click “CANCEL” to stop editing without saving your progress. Click “SAVE” to save your page and return to the pages list, where you can publish it on ARI’s touchscreen.

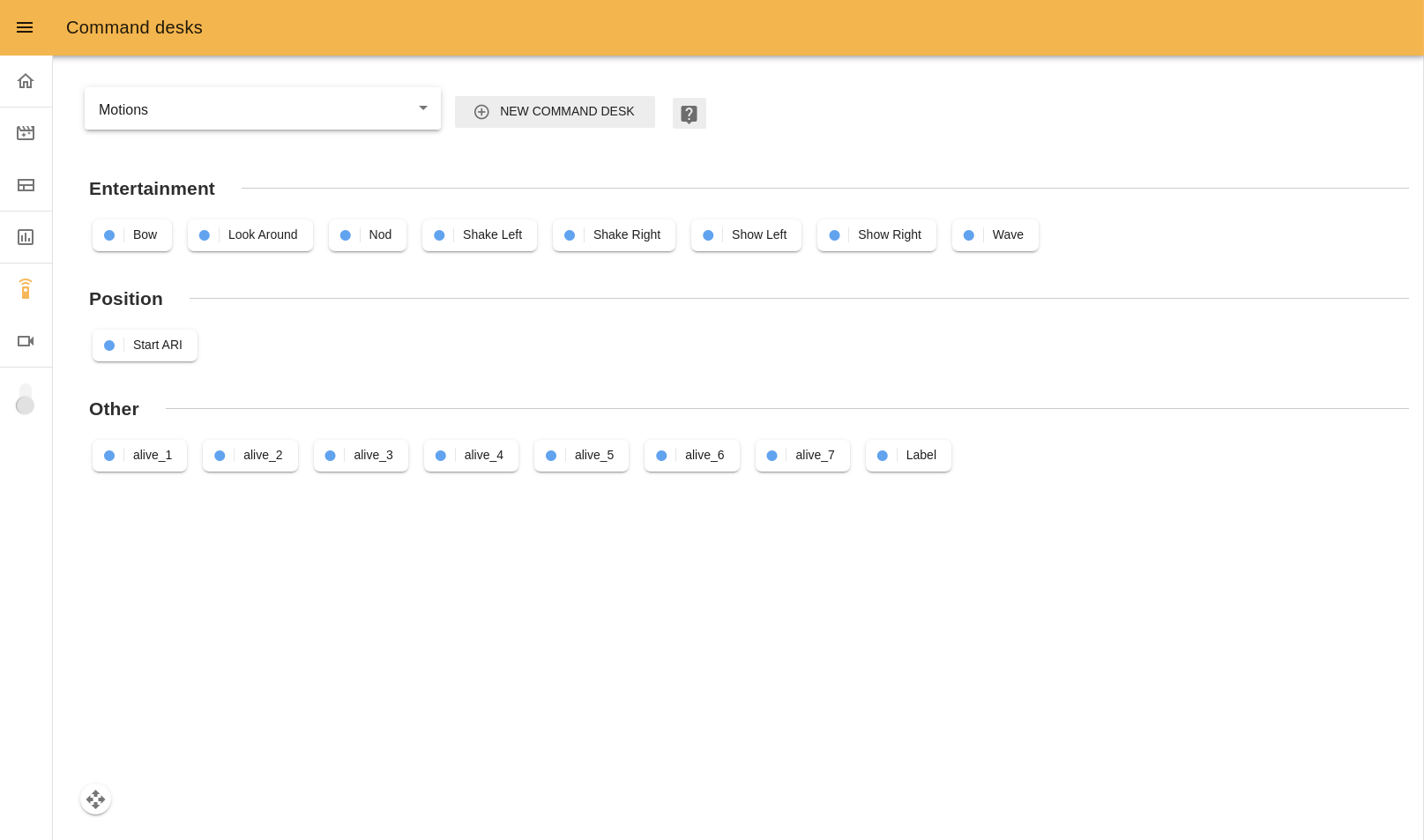

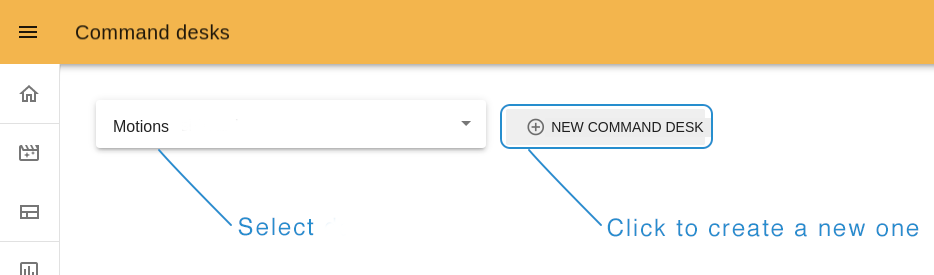

10.9 Command desks¶

With this app you can teleoperate the robot and prepare it for events or other needs by creating command desks that contain groups of buttons. You then assign to each button an action that the robot performs when the button is clicked.

To create a new command desk, click the “NEW COMMAND DESK” button at the top of the page. Next to it, the menu in the top left corner lets you select a previously created command desk.

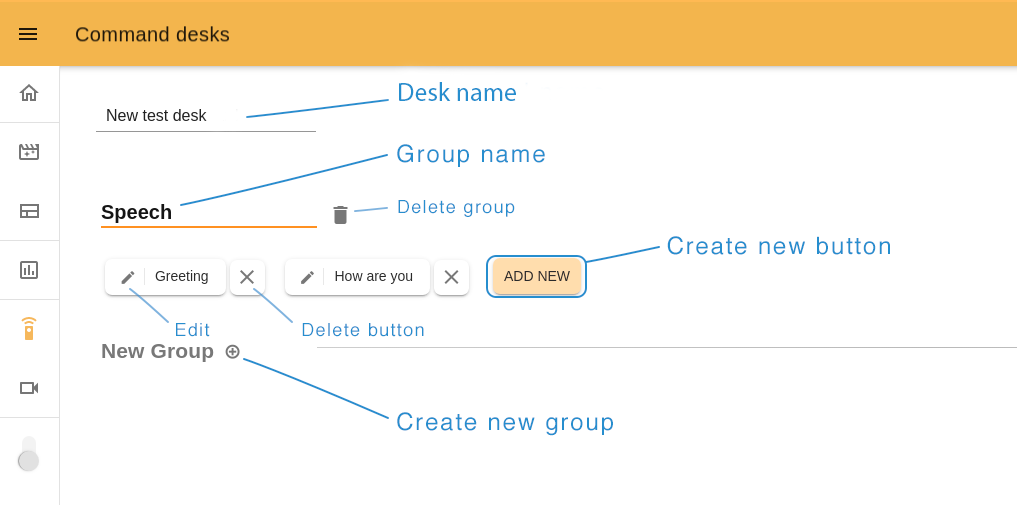

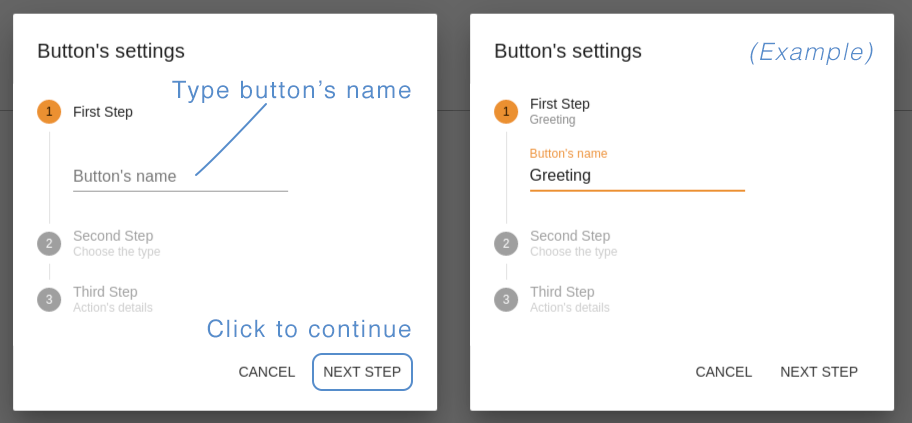

10.9.1 Create a new desk¶

Type the name of your desk and create the first group (each desk must have at least one) by clicking the “plus” icon next to the “New group” title. To create a new button, click “ADD NEW”.

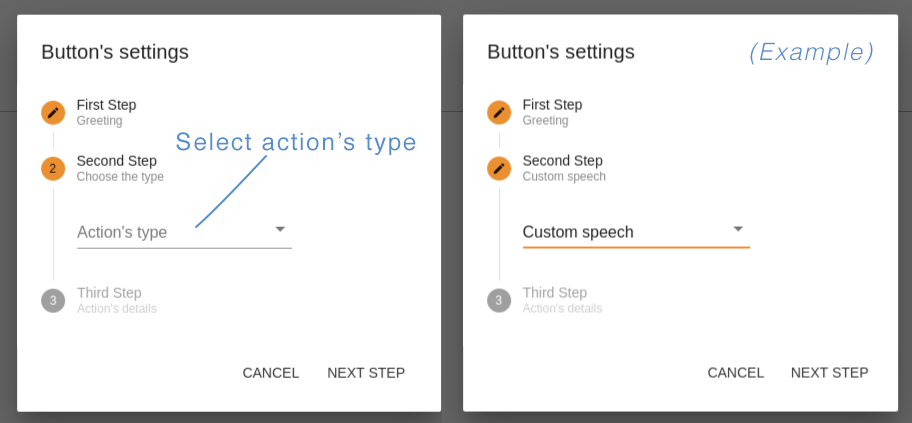

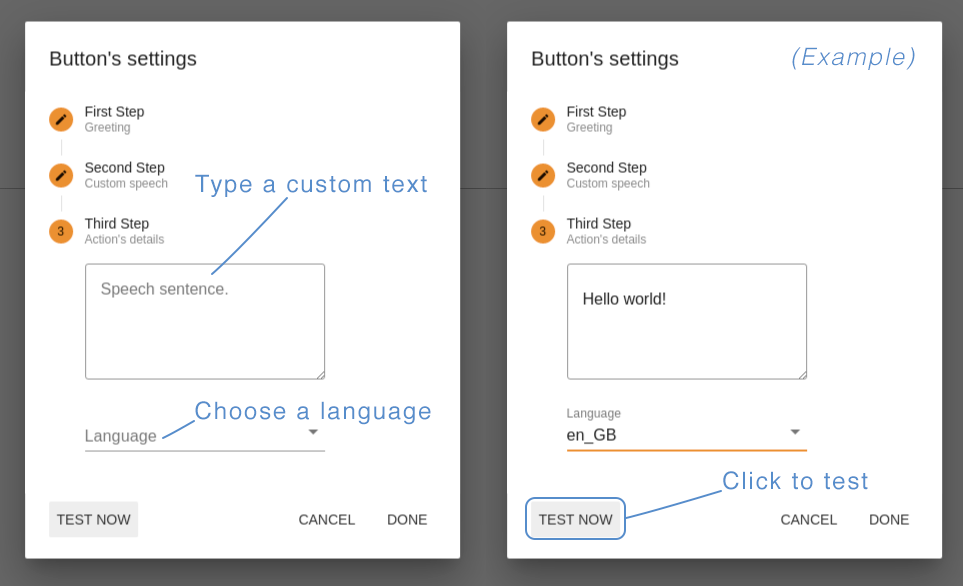

Step 1. Button’s name. Choose a name related to the action this button will perform.

Step 2. Action’s type. You can create buttons of type “Motion” (see 10.7 Motion Builder), shown with a blue circle, to make the robot perform a movement; “Presentation”, shown with a dark brown circle, to execute one of your prerecorded presentations (Presentations); or “Custom speech”, shown with an orange circle, to make the robot say a written text. In this example we chose “Custom speech”.

The list of available action types depends on the installed webapps and the robot.

Step 3. Action’s details. Define what ARI does when this button is clicked. For “Motion” or “Presentation”, choose an option from the list. For “Custom speech”, type the text for ARI to say and choose the corresponding language.
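The steps for creating a desk and its buttons can be summarised as a small data model. The Python sketch below is hypothetical: `DeskButton`, `CommandDesk` and the language code `en_GB` are illustrations. Only the three action types described in this section are included, although the real list depends on the installed webapps and the robot.

```python
from dataclasses import dataclass, field
from typing import Optional

# Only the action types described in this section; the real list varies.
ACTION_TYPES = {"Motion", "Presentation", "Custom speech"}


@dataclass
class DeskButton:
    """Hypothetical model of a command desk button."""
    name: str                       # Step 1: button's name
    action_type: str                # Step 2: action's type
    target: str                     # Step 3: motion/presentation name or text to say
    language: Optional[str] = None  # Step 3: only used by "Custom speech"

    def errors(self) -> list[str]:
        problems = []
        if not self.name.strip():
            problems.append("the button needs a name")
        if self.action_type not in ACTION_TYPES:
            problems.append(f"unknown action type: {self.action_type}")
        if not self.target.strip():
            problems.append("the action details are empty")
        if self.action_type == "Custom speech" and not self.language:
            problems.append("Custom speech buttons need a language")
        return problems


@dataclass
class CommandDesk:
    """Hypothetical model of a desk: named groups of buttons."""
    name: str
    groups: dict[str, list[DeskButton]] = field(default_factory=dict)

    def errors(self) -> list[str]:
        # A desk must have at least one group.
        if not self.groups:
            return ["a desk needs at least one group"]
        return [e for buttons in self.groups.values() for b in buttons for e in b.errors()]


if __name__ == "__main__":
    hello = DeskButton("Say hello", "Custom speech", "Hello, I am ARI", "en_GB")
    desk = CommandDesk("Reception", {"Greetings": [hello]})
    print(desk.errors())  # []
```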

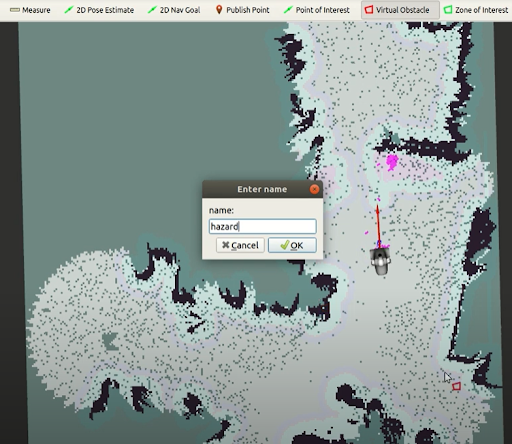

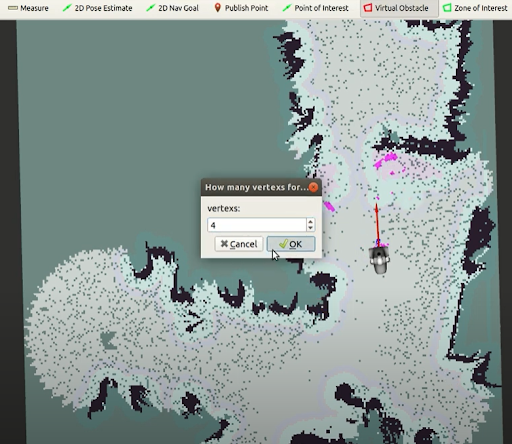

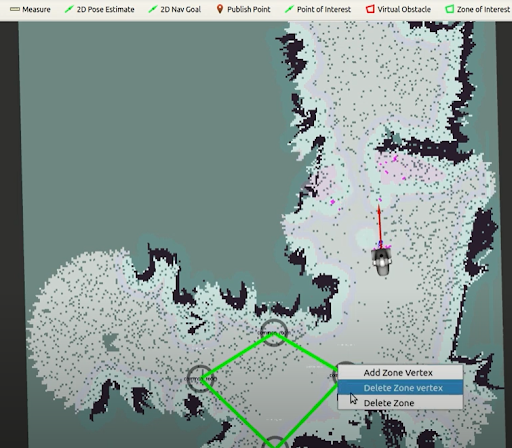

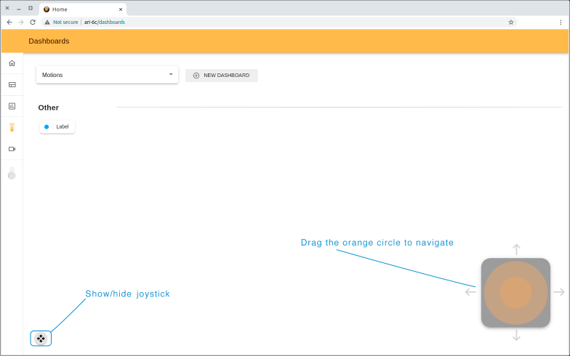

The “TEST NOW” button lets you try the button in action before saving it. If everything works as expected, click “DONE” to save and close the button editor.